I am migrating my tomb of stuff from my current FreeNAS 11.3U5 to my new NAS running the latest TrueNAS Scale 22.12. I got quite excited seeing information like in this thread showing how good the ZSTD compression method is and how I could squeeze a little more out of my data compared with the LZ4 I have used on FreeNAS. So excited...

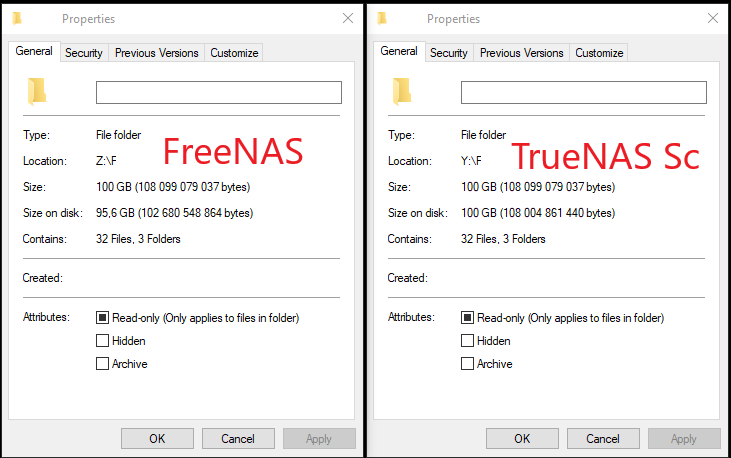

However, I soon noticed that folders with videos are hardly compressed, if at all. Something didn't seem right, as I could swear the same data on my FreeNAS has decent compression (some 5%), and I was right. Here's an example of two identical folders:

The result on the right is from Scale, and it is about the same for both LZ4 and ZSTD. The one on the left is my LZ4-compressed FreeNAS storage. No de-duplication in either case. Additionally, this compression ratio of about 5% is pretty consistent across video files, e.g. the parent folder of the above is 20TB and reports only 18.9TB as the size on disk.
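To be clear about what I mean by "about 5%", here is a quick Python sketch of the arithmetic for the 20TB parent folder. The function names are just mine for illustration, and I am assuming the "size" and "size on disk" figures from Windows are in the same units.

```python
def savings_percent(logical_size: float, size_on_disk: float) -> float:
    """Share of the logical size that compression saved, in percent."""
    return (1 - size_on_disk / logical_size) * 100


def compress_ratio(logical_size: float, size_on_disk: float) -> float:
    """Logical size divided by on-disk size (1.00 means no compression)."""
    return logical_size / size_on_disk


# Figures from the parent folder on the FreeNAS box, in TB.
logical_tb = 20.0
on_disk_tb = 18.9

print(f"{savings_percent(logical_tb, on_disk_tb):.1f}% saved")  # 5.5% saved
print(f"{compress_ratio(logical_tb, on_disk_tb):.2f}x ratio")   # 1.06x ratio
```

So on FreeNAS this works out to roughly a 1.06x ratio, which is the same kind of logical-to-physical figure ZFS shows in its `compressratio` property.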

Is there something I am missing? Could that be just a reporting error in Windows? A different LZ4 compression level?
