jperham-ct (Cadet, joined Feb 23, 2022, 5 messages)

Hello,

I'm having transfer speed issues with my TrueNAS system when compression is enabled on a dataset.

The transfer takes a good 30 seconds to start, then the speed drops to 5.54 MB/s, then to 0 bytes. It may speed up briefly before stalling again, and the cycle repeats. The problem appears when a highly compressible file is copied from a compression-enabled dataset to any other dataset, regardless of whether the destination dataset has compression enabled.

The specs of the system are:

Intel(R) Xeon(R) Silver 4210R CPU @ 2.40GHz

128GB ECC RAM

53 WDC WUH721818AL5201 drives in a RAIDZ pool

TrueNAS-13.0-U5.3

I've recorded some video of what I'm seeing.

The video below shows a highly compressible file being copied from a dataset without compression to a gzip-enabled dataset. No speed issues are observed with this transfer.

copyfromnocomptocomp.mp4 (drive.google.com)

The video below shows that same highly compressible file being copied from one folder to another within the compression-enabled dataset. This is where the problem appears:

compressioncopy.mp4 (drive.google.com)

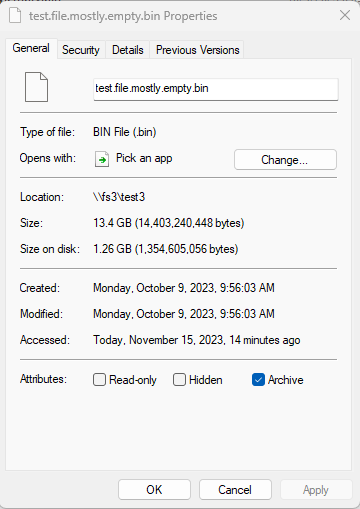

The highly compressible file stats look like this:

I've tried both lz4 and gzip compression, and the behavior is the same with each. I've also ruled out networking by completely wiping a Windows 11 Pro machine, connecting it directly to the TrueNAS system via Ethernet, and attempting the same transfers as above. The issue is the same on that test device.
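A minimal sketch of how the copy could be reproduced locally, without the network share in the path, assuming Python 3 is available in the TrueNAS shell. The function names, file names, sizes, and fill pattern are illustrative assumptions, not the file from the videos. It writes a repetitive (and therefore highly compressible) file, copies it in chunks, and reports the average throughput and the slowest single chunk, which is where a stall would show up.

```python
import os
import tempfile
import time

# Hypothetical fill pattern: repetitive, non-zero text that lz4 and gzip compress well.
PATTERN = b"TrueNAS compression test line\n"


def make_compressible_file(path, size_bytes, chunk_size=1 << 20):
    """Write exactly size_bytes of a repeating pattern to path."""
    chunk = (PATTERN * (chunk_size // len(PATTERN) + 1))[:chunk_size]
    remaining = size_bytes
    with open(path, "wb") as f:
        while remaining > 0:
            n = min(chunk_size, remaining)
            f.write(chunk[:n])
            remaining -= n


def timed_copy(src, dst, chunk_size=1 << 20):
    """Copy src to dst in chunks.

    Returns (bytes_copied, total_seconds, slowest_chunk_seconds).
    """
    total = 0
    slowest = 0.0
    start = time.monotonic()
    with open(src, "rb") as fin, open(dst, "wb") as fout:
        while True:
            t0 = time.monotonic()
            buf = fin.read(chunk_size)
            if not buf:
                break
            fout.write(buf)
            total += len(buf)
            slowest = max(slowest, time.monotonic() - t0)
        fout.flush()
        os.fsync(fout.fileno())  # make sure the data has actually been handed to the pool
    return total, time.monotonic() - start, slowest


if __name__ == "__main__":
    # Assumption: replace these temporary directories with folders inside
    # the source and destination datasets being tested.
    src_dir = tempfile.mkdtemp()
    dst_dir = tempfile.mkdtemp()
    src = os.path.join(src_dir, "compressible.bin")
    dst = os.path.join(dst_dir, "compressible-copy.bin")

    make_compressible_file(src, 8 * (1 << 20))  # 8 MiB; use a much larger size for a real test
    copied, seconds, slowest = timed_copy(src, dst)
    rate = copied / max(seconds, 1e-9) / 1e6
    print(f"copied {copied} bytes in {seconds:.3f}s ({rate:.1f} MB/s), slowest chunk {slowest:.3f}s")
```

If a local copy like this between the same two datasets runs at a steady rate while the copy over the share still stalls, that would suggest looking at the sharing layer rather than at the pool or the compression setting itself.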

Any ideas?
