finsfree (Dabbler, joined Jan 7, 2015, 46 messages)

Why are my files not being compressed?

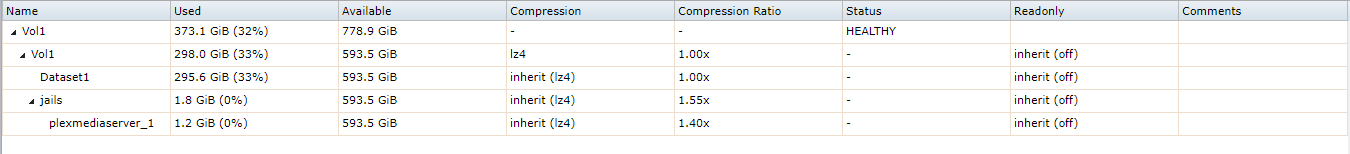

I have created a volume (Vol1) and a dataset (Dataset1). Dataset1 inherits lz4 compression from Vol1. However, when I compare a file in the dataset with the same file on my C: drive, both are the same size. I can't see any sign that it is being compressed.

I am comparing the files in File Explorer on Windows 10, through a mapped drive to my FreeNAS box (FreeNAS 11.1-U2).

Here is a screenshot of my storage. You can see that lz4 compression is enabled.
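ZFS compresses data transparently, so a tool that reports a file's logical length (as File Explorer's "Size" column does) will show the uncompressed size whether or not compression is active. One way to see the difference is to compare the logical size with the space actually allocated on disk. The sketch below assumes you can run Python on the FreeNAS host itself and that the dataset is mounted at the hypothetical path `/mnt/Vol1/Dataset1` (FreeNAS normally mounts pools under `/mnt`); the function name `apparent_vs_allocated` is illustrative only.

```python
import os
import sys


def apparent_vs_allocated(path):
    """Return (apparent_bytes, allocated_bytes) for one file.

    apparent_bytes is the logical length of the file (the size most tools show).
    allocated_bytes is st_blocks * 512, the space actually used on disk; on a
    compressed ZFS dataset this can be smaller than the logical length.
    """
    st = os.stat(path)
    # st_blocks is reported in 512-byte units on FreeBSD and Linux.
    return st.st_size, st.st_blocks * 512


if __name__ == "__main__":
    # Usage (hypothetical path): python3 sizes.py /mnt/Vol1/Dataset1/somefile
    for p in sys.argv[1:]:
        apparent, allocated = apparent_vs_allocated(p)
        ratio = apparent / allocated if allocated else float("inf")
        print(f"{p}: apparent={apparent} allocated={allocated} ratio={ratio:.2f}x")
```

Allocation figures for a freshly written file may lag for a few seconds until ZFS flushes the write to disk, and already-compressed formats (JPEG, MP4, ZIP) will show little or no difference.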