Hi, I'm looking for some advice on migrating my data to another pool in SCALE. I'm running TrueNAS-SCALE-22.02.0.

I have replication set up and it is working fine - I can see the expected files at the destination.

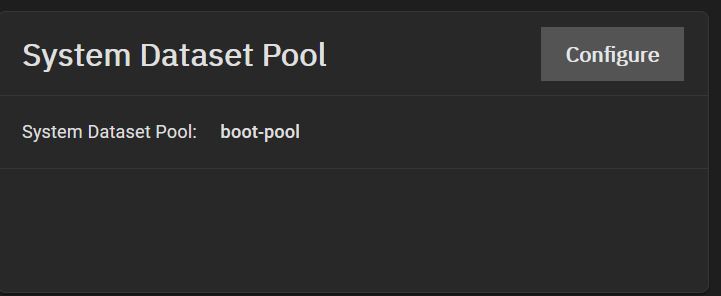

I have moved the system dataset pool to boot-pool and rebooted. At this point I'd expect all system processes to be using boot-pool rather than tank.

I'm aiming to follow the process outlined here: Howto: migrate data from one pool to a bigger pool

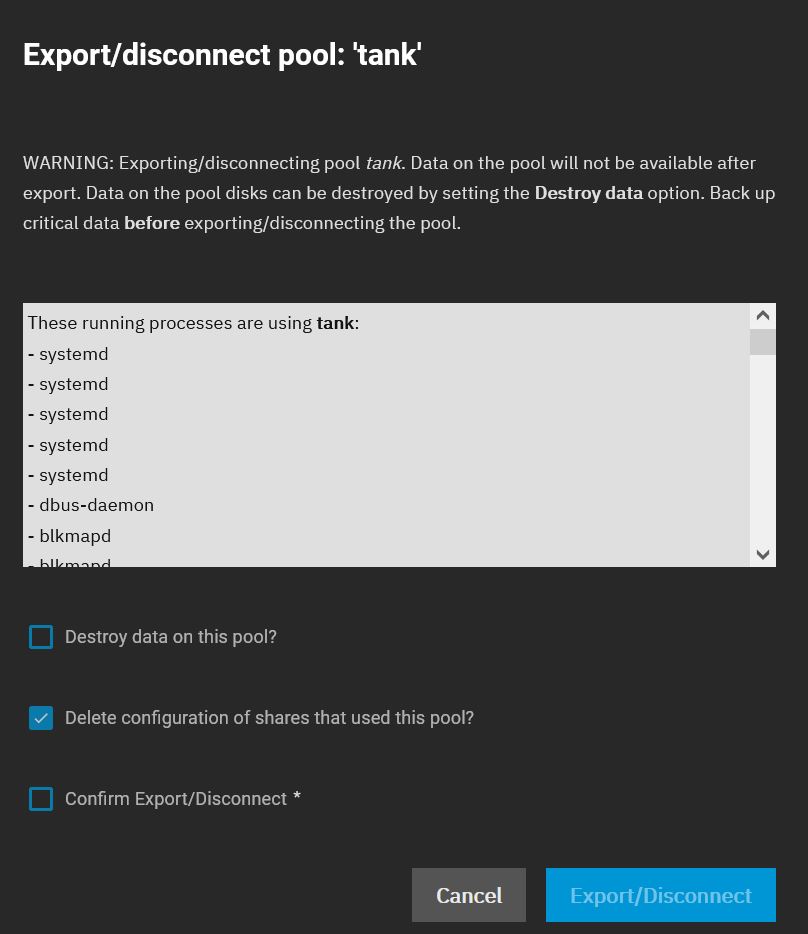

However, when I try to export/disconnect the replication source, tank, I get the warning:

and there is a long list of processes using tank:

- `systemd` ×5
- `dbus-daemon`
- `blkmapd` ×3
- `systemd-udevd`
- `middlewared (wo` ×8
- `python3` ×5
- `dhclient` ×12
- `mdadm`
- `systemd-journal` ×5
- `rpcbind`
- `smartd` ×3
- `nscd` ×3
- `systemd-logind` ×5
- `zed` ×7
- `syslog-ng` ×3
- `winbindd` ×12
- `nginx` ×8
- `cli` ×7
- `cron`
- `rrdcached` ×3
- `systemd-machine`
- `libvirtd`
- `avahi-daemon` ×2
- `wsdd.py`
- `smbd` ×8
- `smbd-notifyd` ×3
- `cleanupd` ×3
- `virtlogd`
- `collectd`
- `ntpd` ×3
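
To cross-check that list from a shell instead of the export dialog, here is a rough sketch (my own, not part of TrueNAS) of roughly what `fuser -vm /mnt/tank` does, written in Python. It walks `/proc` and reports every process whose working directory, root, open files, or memory-mapped files sit under a mountpoint. It assumes the default `/mnt/tank` mountpoint and should be run as root to see every process. Because it matches by path only, a dataset from tank mounted somewhere else has to be passed as an extra mountpoint argument.

```python
"""Rough Python equivalent of `fuser -vm <mountpoint>`: list processes whose
cwd, root, open file descriptors or memory-mapped files resolve to a path
under a mountpoint. Linux only; run as root to see every process.
Assumption: the pool is mounted at /mnt/tank (default for a pool named tank)."""

import os
import sys


def is_under(path: str, mountpoint: str) -> bool:
    """True if `path` is the mountpoint itself or lies beneath it."""
    mountpoint = mountpoint.rstrip("/")
    return path == mountpoint or path.startswith(mountpoint + "/")


def holders(mountpoint: str, proc_root: str = "/proc") -> dict[int, tuple[str, set[str]]]:
    """Map pid -> (command name, set of matching paths) for processes using `mountpoint`."""
    found = {}
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue  # only numeric entries are processes
        pid_dir = os.path.join(proc_root, entry)
        paths = set()
        # cwd, root and every fd are symlinks pointing at the path in use.
        links = [os.path.join(pid_dir, "cwd"), os.path.join(pid_dir, "root")]
        fd_dir = os.path.join(pid_dir, "fd")
        try:
            links += [os.path.join(fd_dir, fd) for fd in os.listdir(fd_dir)]
        except OSError:
            pass  # process exited, or we lack permission
        for link in links:
            try:
                target = os.readlink(link)
            except OSError:
                continue
            if is_under(target, mountpoint):
                paths.add(target)
        # Memory-mapped files: the pathname is the 6th column of /proc/<pid>/maps.
        try:
            with open(os.path.join(pid_dir, "maps")) as f:
                for line in f:
                    parts = line.split(maxsplit=5)
                    if len(parts) == 6 and is_under(parts[5].strip(), mountpoint):
                        paths.add(parts[5].strip())
        except OSError:
            pass
        if paths:
            try:
                with open(os.path.join(pid_dir, "comm")) as f:
                    comm = f.read().strip()
            except OSError:
                comm = "?"
            found[int(entry)] = (comm, paths)
    return found


def main() -> None:
    if not os.path.isdir("/proc/self"):
        print("no /proc here; run this on the Linux host", file=sys.stderr)
        return
    for mountpoint in sys.argv[1:] or ["/mnt/tank"]:
        for pid, (comm, paths) in sorted(holders(mountpoint).items()):
            for path in sorted(paths):
                print(f"{pid:>7}  {comm:<16}  {path}")


if __name__ == "__main__":
    main()
```

For example, `python3 holders.py /mnt/tank` (the file name is arbitrary). Comparing its output with the export dialog's list would show whether those processes really have anything open on the pool.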

Clearly this isn't going to work. If I go ahead and export/disconnect anyway, the GUI crashes and systemd keeps restarting on the console until I give up and reboot.

Here is confirmation that I've moved the system dataset to boot-pool:

but it doesn't look like this is working as expected.

Have I missed something, or is this a bug?

Thanks in advance!