Brink2Three (Cadet; joined Jan 25, 2019; 7 messages)

Hello everyone!

Yes, I have done the UNTHINKABLE, dastardly evil: I run TrueNAS Scale-22.02.2.1 as a VM!! *gasps*

Infodump:

- Proxmox v6.4-15
- TrueNAS Scale-22.02.2.1 OR Core v13 (initial release and U1.1)

Seriously though, I'm stumped on this issue. Every 8 hours to roughly 2 days, TrueNAS locks up entirely: WebUI, SSH, console, shares, apps, you name it.

This has been happening for over a month now. Things I have already tried:

- rebuilding the VM and re-adding all of the drives through Proxmox
- switching between Core v13 and Scale 22.02
- changing device IDs in the VM's config file
- i440fx vs. q35 machine types
- different CPU flags
- UEFI (OVMF) vs. BIOS (SeaBIOS)
- multiple reinstalls of both Core and Scale
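To keep track of which combination was running when each lockup happened, a small helper could summarize the relevant keys of a VM definition. This is a minimal sketch that assumes the standard `key: value` layout of Proxmox's `/etc/pve/qemu-server/<vmid>.conf`; the function names and the sample config are hypothetical. When `machine` or `bios` is absent, Proxmox falls back to i440fx and SeaBIOS, which is what the defaults here reflect.

```python
# Minimal sketch (hypothetical helper names): summarize the machine type,
# firmware and CPU type of a Proxmox VM config. Assumes the standard
# "key: value" layout of /etc/pve/qemu-server/<vmid>.conf.


def parse_vm_config(text: str) -> dict:
    """Parse the live (non-snapshot) section of a VM config into a dict."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("["):
            break  # snapshot/pending sections begin here; ignore them
        if not line or line.startswith("#"):
            continue  # skip blank lines and description comments
        key, sep, value = line.partition(":")  # split on the first colon only
        if sep:
            settings[key.strip()] = value.strip()
    return settings


def describe_variant(settings: dict) -> str:
    """One-line summary, filling in Proxmox's defaults for unset keys."""
    machine = settings.get("machine", "i440fx")  # default machine type
    bios = settings.get("bios", "seabios")       # default firmware
    cpu = settings.get("cpu", "default")         # report an unset CPU type as "default"
    return f"machine={machine} bios={bios} cpu={cpu}"


# Hypothetical example config (values are made up for illustration).
sample = """\
bios: ovmf
cores: 4
machine: q35
memory: 16384
scsi0: local-lvm:vm-100-disk-0,size=32G
"""
print(describe_variant(parse_vm_config(sample)))  # machine=q35 bios=ovmf cpu=default
```

Splitting on the first colon only keeps values such as `local-lvm:vm-100-disk-0,size=32G` intact, and stopping at the first `[` section avoids picking up settings from old snapshots.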

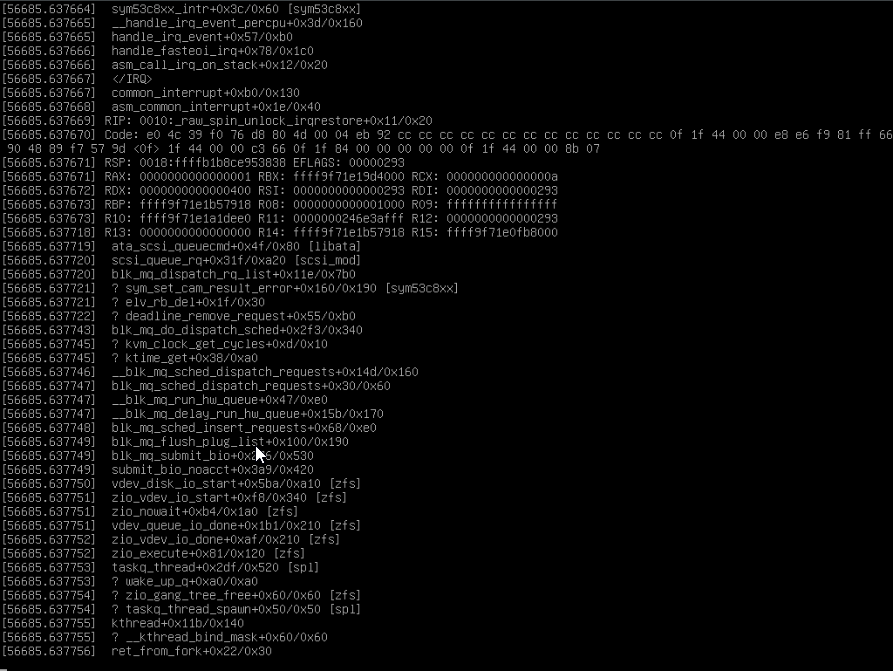

Whenever it locks up, the console has a screen that is some variation of this:

It looked like an IRQ fault, which usually points to a faulty motherboard, RAM, or processor (see my sig for hardware). Still, I found it odd that this would be the only one of my 9 VMs to have issues.

Nevertheless, I shut Proxmox down and ran MemTest86 overnight and through my workday. 18 hours in total netted 2 full passes with no errors. Since that came back clean, I enabled a BIOS flag to log any load errors; the SMBIOS log is still blank.

I've checked the Proxmox syslog, which shows nothing out of the ordinary around the time of the crashes.

I've also gone through the daemon, syslog, middlewared, k3s_daemon, error, cron, and debug logs from TrueNAS. I'll post some of them as code snippets below, but it all appears to be normal activity.

Here are some logs from yesterday morning's crash, which happened at roughly 4:57 AM EST (Uptime Kuma alerted me at 4:58).

Proxmox Syslog:

```
Jul 27 04:50:00 pve systemd[1]: Starting Proxmox VE replication runner...
Jul 27 04:50:01 pve systemd[1]: pvesr.service: Succeeded.
Jul 27 04:50:01 pve systemd[1]: Started Proxmox VE replication runner.
Jul 27 04:51:00 pve systemd[1]: Starting Proxmox VE replication runner...
Jul 27 04:51:01 pve systemd[1]: pvesr.service: Succeeded.
Jul 27 04:51:01 pve systemd[1]: Started Proxmox VE replication runner.
Jul 27 04:52:00 pve systemd[1]: Starting Proxmox VE replication runner...
Jul 27 04:52:01 pve systemd[1]: pvesr.service: Succeeded.
Jul 27 04:52:01 pve systemd[1]: Started Proxmox VE replication runner.
Jul 27 04:53:00 pve systemd[1]: Starting Proxmox VE replication runner...
Jul 27 04:53:01 pve systemd[1]: pvesr.service: Succeeded.
Jul 27 04:53:01 pve systemd[1]: Started Proxmox VE replication runner.
Jul 27 04:53:42 pve apcupsd[1420]: Communications with UPS lost.
Jul 27 04:54:00 pve systemd[1]: Starting Proxmox VE replication runner...
Jul 27 04:54:01 pve systemd[1]: pvesr.service: Succeeded.
Jul 27 04:54:01 pve systemd[1]: Started Proxmox VE replication runner.
Jul 27 04:55:00 pve systemd[1]: Starting Proxmox VE replication runner...
Jul 27 04:55:01 pve systemd[1]: pvesr.service: Succeeded.
Jul 27 04:55:01 pve systemd[1]: Started Proxmox VE replication runner.
Jul 27 04:56:00 pve systemd[1]: Starting Proxmox VE replication runner...
Jul 27 04:56:01 pve systemd[1]: pvesr.service: Succeeded.
Jul 27 04:56:01 pve systemd[1]: Started Proxmox VE replication runner.
Jul 27 04:57:00 pve systemd[1]: Starting Proxmox VE replication runner...
Jul 27 04:57:01 pve systemd[1]: pvesr.service: Succeeded.
Jul 27 04:57:01 pve systemd[1]: Started Proxmox VE replication runner.
Jul 27 04:58:00 pve systemd[1]: Starting Proxmox VE replication runner...
Jul 27 04:58:01 pve systemd[1]: pvesr.service: Succeeded.
Jul 27 04:58:01 pve systemd[1]: Started Proxmox VE replication runner.
Jul 27 04:59:00 pve systemd[1]: Starting Proxmox VE replication runner...
Jul 27 04:59:01 pve systemd[1]: pvesr.service: Succeeded.
Jul 27 04:59:01 pve systemd[1]: Started Proxmox VE replication runner.
Jul 27 05:00:00 pve systemd[1]: Starting Proxmox VE replication runner...
Jul 27 05:00:01 pve systemd[1]: pvesr.service: Succeeded.
Jul 27 05:00:01 pve systemd[1]: Started Proxmox VE replication runner.
```

These logs look exactly the same going back for hours.
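Since the replication-runner entries repeat every minute, filtering out known-noise lines makes anything unusual easier to spot. This is a minimal sketch; the noise patterns are my own picks (the `pvesr` runner lines here, plus the docker runc `.mount: Succeeded` churn in the TrueNAS syslog), and the helper names are hypothetical. Run against the Proxmox excerpt above, the only line left would be the `apcupsd` "Communications with UPS lost" entry at 04:53:42, a few minutes before the crash; whether that is related is an open question the filter cannot answer.

```python
import re

# Hypothetical noise list: routine lines that repeat all day on each host.
NOISE_PATTERNS = [
    re.compile(r"Proxmox VE replication runner"),           # pvesr start/finish
    re.compile(r"pvesr\.service: Succeeded"),
    re.compile(r"run-docker-runtime.*\.mount: Succeeded"),  # TrueNAS SCALE apps
]


def interesting_lines(lines):
    """Yield non-blank log lines that match none of the noise patterns."""
    for raw in lines:
        line = raw.rstrip("\n")
        if line.strip() and not any(p.search(line) for p in NOISE_PATTERNS):
            yield line


def print_interesting(path):
    """Print the filtered lines of a log file, e.g. /var/log/syslog."""
    with open(path, encoding="utf-8", errors="replace") as fh:
        for line in interesting_lines(fh):
            print(line)
```

Keeping the patterns in one list makes it easy to add whatever else turns out to be routine on a given host without touching the filtering logic.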

NOTE: the two hosts log in different time zones. Proxmox is set to EST and TrueNAS to PST, so the TrueNAS logs show 01:xx:xx where Proxmox shows 04:xx:xx.
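Lining the two logs up by hand is error-prone, so here is a minimal sketch that converts a TrueNAS timestamp to the Proxmox clock. It uses IANA zones rather than a fixed three-hour offset so daylight saving time is handled (in late July both hosts are actually on EDT/PDT, which still differ by three hours). Syslog lines omit the year, so 2022 is an assumption here, based on the July 2022 kernel build date in the boot log; the function name is hypothetical.

```python
from datetime import datetime
from zoneinfo import ZoneInfo  # Python 3.9+

TRUENAS_TZ = ZoneInfo("America/Los_Angeles")  # TrueNAS logs (Pacific)
PROXMOX_TZ = ZoneInfo("America/New_York")     # Proxmox logs (Eastern)


def truenas_to_proxmox(stamp: str, year: int = 2022) -> str:
    """Convert a 'Mon DD HH:MM:SS' TrueNAS syslog stamp to Proxmox local time.

    Syslog omits the year, so it is supplied (assumed 2022 by default).
    """
    naive = datetime.strptime(f"{year} {stamp}", "%Y %b %d %H:%M:%S")
    local = naive.replace(tzinfo=TRUENAS_TZ).astimezone(PROXMOX_TZ)
    return local.strftime("%b %d %H:%M:%S")


# Last TrueNAS line before the hang, on the Proxmox clock:
print(truenas_to_proxmox("Jul 27 01:57:46"))  # Jul 27 04:57:46
```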

TrueNAS Syslog:

```
Jul 27 01:50:00 freenas.local systemd[1]: Starting system activity accounting tool...
Jul 27 01:50:00 freenas.local systemd[1]: sysstat-collect.service: Succeeded.
Jul 27 01:50:00 freenas.local systemd[1]: Finished system activity accounting tool.
Jul 27 01:50:01 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-46477a9fe301db9f376efdff49ccf4c0eaf094aa8e07032dd395dcb37f86e4cc-runc.UrnLyS.mount: Succeeded.
Jul 27 01:50:01 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-46477a9fe301db9f376efdff49ccf4c0eaf094aa8e07032dd395dcb37f86e4cc-runc.fuM2M4.mount: Succeeded.
Jul 27 01:50:16 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.nCv0Je.mount: Succeeded.
Jul 27 01:50:16 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-46477a9fe301db9f376efdff49ccf4c0eaf094aa8e07032dd395dcb37f86e4cc-runc.r7gEOz.mount: Succeeded.
Jul 27 01:50:31 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-46477a9fe301db9f376efdff49ccf4c0eaf094aa8e07032dd395dcb37f86e4cc-runc.y1uOXX.mount: Succeeded.
Jul 27 01:50:46 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.73THlK.mount: Succeeded.
Jul 27 01:50:46 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-46477a9fe301db9f376efdff49ccf4c0eaf094aa8e07032dd395dcb37f86e4cc-runc.efDnej.mount: Succeeded.
Jul 27 01:50:56 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.rKAV5x.mount: Succeeded.
Jul 27 01:51:05 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.JHZ4lE.mount: Succeeded.
Jul 27 01:51:06 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.JAQRaa.mount: Succeeded.
Jul 27 01:51:16 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.4rQE9B.mount: Succeeded.
Jul 27 01:51:16 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-46477a9fe301db9f376efdff49ccf4c0eaf094aa8e07032dd395dcb37f86e4cc-runc.JE2iLj.mount: Succeeded.
Jul 27 01:51:31 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-46477a9fe301db9f376efdff49ccf4c0eaf094aa8e07032dd395dcb37f86e4cc-runc.yxpRXf.mount: Succeeded.
Jul 27 01:52:01 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-46477a9fe301db9f376efdff49ccf4c0eaf094aa8e07032dd395dcb37f86e4cc-runc.CTt19w.mount: Succeeded.
Jul 27 01:52:15 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.6mCbBa.mount: Succeeded.
Jul 27 01:52:26 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.7kJL3j.mount: Succeeded.
Jul 27 01:52:36 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.PgDjZE.mount: Succeeded.
Jul 27 01:52:45 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.TAEXTV.mount: Succeeded.
Jul 27 01:52:46 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.S1WnJe.mount: Succeeded.
Jul 27 01:52:56 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.QuEZBb.mount: Succeeded.
Jul 27 01:53:01 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-46477a9fe301db9f376efdff49ccf4c0eaf094aa8e07032dd395dcb37f86e4cc-runc.dcSi0C.mount: Succeeded.
Jul 27 01:53:15 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.kJW3YF.mount: Succeeded.
Jul 27 01:53:16 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.RwuFH0.mount: Succeeded.
Jul 27 01:53:31 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-46477a9fe301db9f376efdff49ccf4c0eaf094aa8e07032dd395dcb37f86e4cc-runc.2bpDoI.mount: Succeeded.
Jul 27 01:53:36 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.ZQVoN1.mount: Succeeded.
Jul 27 01:53:38 freenas.local env[16427]: E0727 01:53:38.741992 16427 network_services_controller.go:193] Failed to replace route to service VIP 192.168.0.217 configured on kube-dummy-if. Error: exit status 2, Output: RTNETLINK answers: File exists
Jul 27 01:53:38 freenas.local env[16427]: E0727 01:53:38.744835 16427 network_services_controller.go:193] Failed to replace route to service VIP 192.168.0.217 configured on kube-dummy-if. Error: exit status 2, Output: RTNETLINK answers: File exists
Jul 27 01:53:38 freenas.local env[16427]: E0727 01:53:38.747080 16427 network_services_controller.go:193] Failed to replace route to service VIP 192.168.0.217 configured on kube-dummy-if. Error: exit status 2, Output: RTNETLINK answers: File exists
Jul 27 01:53:38 freenas.local env[16427]: E0727 01:53:38.749346 16427 network_services_controller.go:193] Failed to replace route to service VIP 192.168.0.217 configured on kube-dummy-if. Error: exit status 2, Output: RTNETLINK answers: File exists
Jul 27 01:53:38 freenas.local env[16427]: E0727 01:53:38.751679 16427 network_services_controller.go:193] Failed to replace route to service VIP 192.168.0.217 configured on kube-dummy-if. Error: exit status 2, Output: RTNETLINK answers: File exists
Jul 27 01:53:56 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.nbzIrD.mount: Succeeded.
Jul 27 01:54:35 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.CJDJDz.mount: Succeeded.
Jul 27 01:54:45 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.udDhPG.mount: Succeeded.
Jul 27 01:54:46 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.VpMHFY.mount: Succeeded.
Jul 27 01:55:06 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.Q7FkX5.mount: Succeeded.
Jul 27 01:55:15 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.iM2yGb.mount: Succeeded.
Jul 27 01:55:25 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.qEVlKF.mount: Succeeded.
Jul 27 01:55:26 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.4xyE0V.mount: Succeeded.
Jul 27 01:55:31 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-46477a9fe301db9f376efdff49ccf4c0eaf094aa8e07032dd395dcb37f86e4cc-runc.dw1nmT.mount: Succeeded.
Jul 27 01:55:36 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.2PUuOY.mount: Succeeded.
Jul 27 01:55:45 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.yNqakn.mount: Succeeded.
Jul 27 01:55:46 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.lPsfPC.mount: Succeeded.
Jul 27 01:56:03 freenas.local dhclient[2322]: DHCPREQUEST for 192.168.0.217 on ens18 to 192.168.0.1 port 67
Jul 27 01:56:03 freenas.local dhclient[2322]: DHCPACK of 192.168.0.217 from 192.168.0.1
Jul 27 01:56:03 freenas.local dhclient[2322]: bound to 192.168.0.217 -- renewal in 2935 seconds.
Jul 27 01:56:06 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.HVaA3p.mount: Succeeded.
Jul 27 01:56:15 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.9HtSd4.mount: Succeeded.
Jul 27 01:56:16 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.z5MHQl.mount: Succeeded.
Jul 27 01:56:25 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.kFFTrf.mount: Succeeded.
Jul 27 01:56:31 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-46477a9fe301db9f376efdff49ccf4c0eaf094aa8e07032dd395dcb37f86e4cc-runc.vIvkPg.mount: Succeeded.
Jul 27 01:56:31 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-46477a9fe301db9f376efdff49ccf4c0eaf094aa8e07032dd395dcb37f86e4cc-runc.GHklEx.mount: Succeeded.
Jul 27 01:56:46 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.6ULAjF.mount: Succeeded.
Jul 27 01:56:46 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-46477a9fe301db9f376efdff49ccf4c0eaf094aa8e07032dd395dcb37f86e4cc-runc.QNqe31.mount: Succeeded.
Jul 27 01:56:55 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.srfsiK.mount: Succeeded.
Jul 27 01:56:56 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.0WvoDZ.mount: Succeeded.
Jul 27 01:57:16 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.XT1PTX.mount: Succeeded.
Jul 27 01:57:25 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.HPk4u1.mount: Succeeded.
Jul 27 01:57:36 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.DzH0Gr.mount: Succeeded.
Jul 27 01:57:45 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-7e91062c335cafa9b5e71d04d68c584adaff4c9175c170166303cac098929ccd-runc.8G9fI6.mount: Succeeded.
Jul 27 01:57:46 freenas.local systemd[1]: run-docker-runtime\x2drunc-moby-46477a9fe301db9f376efdff49ccf4c0eaf094aa8e07032dd395dcb37f86e4cc-runc.HmEIc2.mount: Succeeded.
Jul 27 04:14:45 freenas.local syslog-ng[5435]: syslog-ng starting up; version='3.28.1'
Jul 27 04:12:49 freenas.local kernel: Linux version 5.10.120+truenas (root@tnsbuilds01.tn.ixsystems.net) (gcc (Debian 10.2.1-6) 10.2.1 20210110, GNU ld (GNU Binutils for Debian) 2.35.2) #1 SMP Wed Jul 6 22:13:20 UTC 2022
Jul 27 04:12:49 freenas.local kernel: Command line: BOOT_IMAGE=/ROOT/22.02.2.1@/boot/vmlinuz-5.10.120+truenas root=ZFS=boot-pool/ROOT/22.02.2.1 ro console=ttyS0,9600 console=tty1 libata.allow_tpm=1 systemd.unified_cgroup_hierarchy=0 amd_iommu=on iommu=pt kvm_amd.npt=1 kvm_amd.avic=1 intel_iommu=on zfsforce=1
Jul 27 04:12:49 freenas.local kernel: x86/fpu: Supporting XSAVE feature 0x001: 'x87 floating point registers'
Jul 27 04:12:49 freenas.local kernel: x86/fpu: Supporting XSAVE feature 0x002: 'SSE registers'
Jul 27 04:12:49 freenas.local kernel: x86/fpu: Supporting XSAVE feature 0x004: 'AVX registers'
Jul 27 04:12:49 freenas.local kernel: x86/fpu: xstate_offset[2]: 576, xstate_sizes[2]: 256
Jul 27 04:12:49 freenas.local kernel: x86/fpu: Enabled xstate features 0x7, context size is 832 bytes, using 'standard' format.
Jul 27 04:12:49 freenas.local kernel: BIOS-provided physical RAM map:
Jul 27 04:12:49 freenas.local kernel: BIOS-e820: [mem 0x0000000000000000-0x000000000009ffff] usable
Jul 27 04:12:49 freenas.local kernel: BIOS-e820: [mem 0x0000000000100000-0x00000000007fffff] usable
Jul 27 04:12:49 freenas.local kernel: BIOS-e820: [mem 0x0000000000800000-0x0000000000807fff] ACPI NVS
Jul 27 04:12:49 freenas.local kernel: BIOS-e820: [mem 0x0000000000808000-0x000000000080ffff] usable
Jul 27 04:12:49 freenas.local kernel: BIOS-e820: [mem 0x0000000000810000-0x00000000008fffff] ACPI NVS
Jul 27 04:12:49 freenas.local kernel: BIOS-e820: [mem 0x0000000000900000-0x00000000bf8eefff] usable
Jul 27 04:12:49 freenas.local kernel: BIOS-e820: [mem 0x00000000bf8ef000-0x00000000bf9eefff] reserved
Jul 27 04:12:49 freenas.local kernel: BIOS-e820: [mem 0x00000000bf9ef000-0x00000000bfaeefff] type 20
Jul 27 04:12:49 freenas.local kernel: BIOS-e820: [mem 0x00000000bfaef000-0x00000000bfb6efff] reserved
Jul 27 04:12:49 freenas.local kernel: BIOS-e820: [mem 0x00000000bfb6f000-0x00000000bfb7efff] ACPI data
Jul 27 04:12:49 freenas.local kernel: BIOS-e820: [mem 0x00000000bfb7f000-0x00000000bfbfefff] ACPI NVS
Jul 27 04:12:49 freenas.local kernel: BIOS-e820: [mem 0x00000000bfbff000-0x00000000bff0ffff] usable
Jul 27 04:12:49 freenas.local kernel: BIOS-e820: [mem 0x00000000bff10000-0x00000000bff2ffff] reserved
Jul 27 04:12:49 freenas.local kernel: BIOS-e820: [mem 0x00000000bff30000-0x00000000bfffffff] ACPI NVS
Jul 27 04:12:49 freenas.local kernel: BIOS-e820: [mem 0x00000000ffe00000-0x00000000ffffffff] reserved
Jul 27 04:12:49 freenas.local kernel: BIOS-e820: [mem 0x0000000100000000-0x0000000660afffff] usable
Jul 27 04:12:49 freenas.local kernel: NX (Execute Disable) protection: active
Jul 27 04:12:49 freenas.local kernel: efi: EFI v2.70 by EDK II
Jul 27 04:12:49 freenas.local kernel: efi: SMBIOS=0xbf9ac000 ACPI=0xbfb7e000 ACPI 2.0=0xbfb7e014 MEMATTR=0xbed41018
Jul 27 04:12:49 freenas.local kernel: SMBIOS 2.8 present.
Jul 27 04:12:49 freenas.local kernel: DMI: QEMU Standard PC (i440FX + PIIX, 1996), BIOS 0.0.0 02/06/2015
Jul 27 04:12:49 freenas.local kernel: Hypervisor detected: KVM
```

I wasn't awake to restart it at 1:57 AM PST, so the logs of it booting back up start at 4:12 AM PST, which is 7:12 AM EST (about when I woke up).
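Rather than eyeballing where the log goes quiet, the hang can be located by finding the longest silence between consecutive syslog lines. This is a minimal sketch, assuming standard `Mon DD HH:MM:SS` prefixes and the year 2022 (syslog omits it); the function names are hypothetical. Lines that don't start with a timestamp are skipped. The boot section is slightly out of order (the 04:14:45 syslog-ng startup line precedes kernel lines stamped 04:12:49), which only produces a negative gap. On the TrueNAS excerpt above, the longest gap runs from the 01:57:46 mount line to the 04:14:45 syslog-ng line, about 2 h 17 min.

```python
from datetime import datetime


def parse_stamp(line: str, year: int = 2022) -> datetime:
    """Parse the leading 'Mon DD HH:MM:SS' syslog stamp (year is assumed)."""
    return datetime.strptime(f"{year} {line[:15]}", "%Y %b %d %H:%M:%S")


def largest_gap(lines, year: int = 2022):
    """Return (gap, line_before, line_after) for the longest silence, or None."""
    stamped = []
    for raw in lines:
        line = raw.rstrip("\n")
        try:
            stamped.append((parse_stamp(line, year), line))
        except ValueError:
            continue  # blank or non-syslog line
    best = None
    for (t0, before), (t1, after) in zip(stamped, stamped[1:]):
        gap = t1 - t0  # negative when lines are out of order
        if best is None or gap > best[0]:
            best = (gap, before, after)
    return best
```

The line just before the gap is the last thing the VM managed to log, which gives a lower bound on when the lockup actually began.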

I will HAPPILY post more if it improves the odds of getting this solved; I just don't want to spam information that won't help.

Thanks in advance. :)