Hi, this is my first post and my first build. I have a large TrueNAS build with over 600 TiB of storage, and I am using it to record video footage from an AxxonNext system hosted on three Windows 10 servers. The problem is that the AxxonNext server shows 4 TB currently stored on an iSCSI drive connected to my TrueNAS system, but when I look at the dataset backing that iSCSI drive, it is consuming approximately double that space.

I originally configured the iSCSI drive using a 120 TiB zvol, but as it started to fill, its usage grew to over 220 TiB. I removed it and ran a small-scale test with a new dataset with a 10 GiB quota and a 2 GiB iSCSI zvol to see what would happen. The results seemed promising, as usage held at the 2 GiB size. After the test, I increased the dataset and zvol to 120 TiB, just like before. As the drive started to fill, I could see the same doubling of space on the TrueNAS side.

I then removed all of those changes and set up a new dataset with a file-based iSCSI extent instead of a device (zvol) extent. That is my current setup, since it is the last thing I have tried, and it still shows the same problem. I am not sure what will happen now that I have a quota on the root dataset, but from what I have read, it will probably cause errors in Windows.

I have also tried changing the block size of both the iSCSI extent and the dataset to 4K, and it had no effect.

Since this was my first build, I put all 48 drives into RAIDZ1 in a single vdev (+ 2 hot spares). I know this is not the smartest move and will change it if needed.
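For anyone checking the numbers, here is a rough sketch of how much raw space one block takes on a 48-wide RAIDZ1 vdev. It follows the allocation rule as I understand it from OpenZFS's `vdev_raidz_asize()` (round data up to whole sectors, add parity per stripe row, pad to a multiple of parity + 1). It assumes ashift=12 (4K sectors) and no compression. The "reported" figure assumes ZFS scales usage by the overhead of a 128K block. The function names are my own, not anything from TrueNAS.

```python
# Rough sketch of RAIDZ space allocation per block.
# Assumptions: ashift=12 (4K sectors), no compression, rule modeled on
# OpenZFS vdev_raidz_asize(). Function names are hypothetical.

def raidz_asize(psize: int, width: int, nparity: int, ashift: int) -> int:
    """Bytes allocated on a RAIDZ vdev for one block of psize bytes."""
    data = ((psize - 1) >> ashift) + 1                # data sectors, rounded up
    ndata_cols = width - nparity                      # data columns per stripe row
    parity = nparity * ((data + ndata_cols - 1) // ndata_cols)  # nparity sectors per row
    total = data + parity
    total = -(-total // (nparity + 1)) * (nparity + 1)  # pad to a multiple of nparity + 1
    return total << ashift


def reported_ratio(psize: int, width: int = 48, nparity: int = 1, ashift: int = 12) -> float:
    """Approximate 'used' inflation, assuming usage is scaled by the 128K-block overhead."""
    ref = 128 * 1024
    deflate = ref / raidz_asize(ref, width, nparity, ashift)
    return raidz_asize(psize, width, nparity, ashift) * deflate / psize


if __name__ == "__main__":
    for kib in (4, 8, 16, 64, 128):
        psize = kib * 1024
        raw = raidz_asize(psize, 48, 1, 12)
        print(f"{kib:>4}K block: {raw // 1024:>4}K allocated, "
              f"~{reported_ratio(psize):.2f}x reported")
```

Under these assumptions, a 4K block needs one data sector plus one parity sector, so it takes 8K on disk. It would be reported at roughly 1.88x its size, which would put 120 TiB written in 4K blocks at around 225 TiB used.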

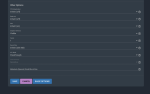

I am at a loss, and a lot is riding on getting this to work. I would appreciate any help anyone can provide. We are still in the beta stage of the project, so I can make major changes if needed. I have also attached the current settings of the dataset and what Axxon is showing.

TrueNAS Build:

Version: TrueNAS-12.0-U1

CPU: 2x Intel(R) Xeon(R) Silver 4208

Drives: 50x Seagate SAS Exos 16TB

RAID: HBA controller