Vincent Saelzler

I am wondering whether the IO performance I am experiencing in iSCSI-backed VMs is expected for my hardware.

I know the 5400 RPM drives are going to slow things down, but I still feel it might be slower than it should be.
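As a rough sanity check on what 5400 RPM implies, the sketch below works out the time per platter revolution and the average rotational latency. It is back-of-the-envelope arithmetic only: it ignores seek time, drive caches, and how ZFS batches writes, and the function name is purely illustrative.

```python
def rotational_timing(rpm: float) -> tuple[float, float, float]:
    """Return (ms per revolution, average rotational latency in ms, revolutions per second)."""
    ms_per_rev = 60_000 / rpm          # 60,000 ms in a minute
    avg_latency_ms = ms_per_rev / 2    # on average the target sector is half a turn away
    revs_per_s = rpm / 60
    return ms_per_rev, avg_latency_ms, revs_per_s


rev_ms, avg_ms, revs_per_s = rotational_timing(5400)
print(f"{rev_ms:.2f} ms per revolution")
print(f"{avg_ms:.2f} ms average rotational latency")
print(f"{revs_per_s:.0f} revolutions per second")
```

A 5400 RPM platter completes 90 revolutions per second, which is the same order of magnitude as the roughly 100 IOPS seen in the sync-enabled random write results, so figures in that range may be at least partly explained by the drives themselves.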

I've just started up two test VMs to see how things work. I anticipate running a total of 10-20 VMs once fully operational. They will host full cryptocurrency nodes, web servers, and database servers.

The two machines are the home lab boxes in my signature.

- They are directly connected with a cat 6 cable (no switch - straight from one network card to the other).

- The IPs are statically set to a separate subnet from other traffic. Only iSCSI is on these interfaces/network.

I tested three configurations:

- Sync Disabled

- RAM Disk SLOG (as described in "Testing the benefits of SLOG using a RAM disk!")

- Sync Enabled, No SLOG

The main problem is sync writes at ~400 KB/s (not MB/s). I can deal with slow, but that's almost crippling.
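To make the ~400K figure concrete: CrystalDiskMark reports both IOPS and MB/s for its 4 KiB tests, and the two are related by simple arithmetic. This is a minimal sketch, assuming 4096-byte operations and decimal megabytes (1 MB = 1,000,000 bytes, as the benchmark header states). The function name is illustrative, and the IOPS values are copied from the Sync Enabled run.

```python
BLOCK_BYTES = 4 * 1024  # size of one operation in the "4KiB" random tests


def iops_to_mb_per_s(iops: float, block_bytes: int = BLOCK_BYTES) -> float:
    """Convert operations per second to decimal MB/s (1 MB = 1,000,000 bytes)."""
    return iops * block_bytes / 1_000_000


# Random Write 4KiB results with sync enabled and no SLOG
q8t8_mb_s = iops_to_mb_per_s(100.8)   # Q=8, T=8
q1t1_mb_s = iops_to_mb_per_s(104.0)   # Q=1, T=1

print(f"Q8T8: {q8t8_mb_s:.3f} MB/s")
print(f"Q1T1: {q1t1_mb_s:.3f} MB/s")
```

In other words, the ~0.4 MB/s throughput is just about 100 small writes per second, and that rate barely changes with queue depth.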

Code:

    # zpool status
      pool: iscsi-tank0
     state: ONLINE
      scan: scrub repaired 0 in 0 days 00:18:03 with 0 errors on Sun Jun 9 00:18:05 2019
    config:

        NAME                                            STATE     READ WRITE CKSUM
        iscsi-tank0                                     ONLINE       0     0     0
          mirror-0                                      ONLINE       0     0     0
            gptid/a5761110-6dc9-11e9-8737-90b11c250e64  ONLINE       0     0     0
            gptid/ae44d0b6-6dc9-11e9-8737-90b11c250e64  ONLINE       0     0     0
          mirror-1                                      ONLINE       0     0     0
            gptid/d9ab4007-6dc9-11e9-8737-90b11c250e64  ONLINE       0     0     0
            gptid/e3699d01-6dc9-11e9-8737-90b11c250e64  ONLINE       0     0     0
          mirror-2                                      ONLINE       0     0     0
            gptid/1daa3574-6dca-11e9-8737-90b11c250e64  ONLINE       0     0     0
            gptid/2a5d71cc-6dca-11e9-8737-90b11c250e64  ONLINE       0     0     0
          mirror-3                                      ONLINE       0     0     0
            gptid/5293781d-6dca-11e9-8737-90b11c250e64  ONLINE       0     0     0
            gptid/5fac570e-6dca-11e9-8737-90b11c250e64  ONLINE       0     0     0
        spares
          gptid/a3c3b09c-6dca-11e9-8737-90b11c250e64    AVAIL

Code:

## Sync Enabled

-----------------------------------------------------------------------

CrystalDiskMark 6.0.2 x64 (UWP) (C) 2007-2018 hiyohiyo

Crystal Dew World : https://crystalmark.info/

-----------------------------------------------------------------------

* MB/s = 1,000,000 bytes/s [SATA/600 = 600,000,000 bytes/s]

* KB = 1000 bytes, KiB = 1024 bytes

Sequential Read (Q= 32,T= 1) : 64.591 MB/s

Sequential Write (Q= 32,T= 1) : 9.618 MB/s

Random Read 4KiB (Q= 8,T= 8) : 5.138 MB/s [ 1254.4 IOPS]

Random Write 4KiB (Q= 8,T= 8) : 0.413 MB/s [ 100.8 IOPS]

Random Read 4KiB (Q= 32,T= 1) : 5.225 MB/s [ 1275.6 IOPS]

Random Write 4KiB (Q= 32,T= 1) : 0.425 MB/s [ 103.8 IOPS]

Random Read 4KiB (Q= 1,T= 1) : 4.300 MB/s [ 1049.8 IOPS]

Random Write 4KiB (Q= 1,T= 1) : 0.426 MB/s [ 104.0 IOPS]

Test : 500 MiB [C: 35.8% (22.7/63.4 GiB)] (x5) [Interval=5 sec]

Date : 2019/06/17 21:48:18

OS : Windows 10 Professional [10.0 Build 18362] (x64)

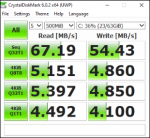

## RAM SLOG

-----------------------------------------------------------------------

CrystalDiskMark 6.0.2 x64 (UWP) (C) 2007-2018 hiyohiyo

Crystal Dew World : https://crystalmark.info/

-----------------------------------------------------------------------

* MB/s = 1,000,000 bytes/s [SATA/600 = 600,000,000 bytes/s]

* KB = 1000 bytes, KiB = 1024 bytes

Sequential Read (Q= 32,T= 1) : 67.187 MB/s

Sequential Write (Q= 32,T= 1) : 54.430 MB/s

Random Read 4KiB (Q= 8,T= 8) : 5.151 MB/s [ 1257.6 IOPS]

Random Write 4KiB (Q= 8,T= 8) : 4.860 MB/s [ 1186.5 IOPS]

Random Read 4KiB (Q= 32,T= 1) : 5.397 MB/s [ 1317.6 IOPS]

Random Write 4KiB (Q= 32,T= 1) : 4.850 MB/s [ 1184.1 IOPS]

Random Read 4KiB (Q= 1,T= 1) : 4.492 MB/s [ 1096.7 IOPS]

Random Write 4KiB (Q= 1,T= 1) : 4.100 MB/s [ 1001.0 IOPS]

Test : 500 MiB [C: 35.8% (22.7/63.4 GiB)] (x5) [Interval=5 sec]

Date : 2019/06/17 22:01:14

OS : Windows 10 Professional [10.0 Build 18362] (x64)

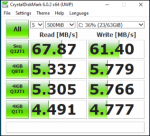

## Sync Disabled

-----------------------------------------------------------------------

CrystalDiskMark 6.0.2 x64 (UWP) (C) 2007-2018 hiyohiyo

Crystal Dew World : https://crystalmark.info/

-----------------------------------------------------------------------

* MB/s = 1,000,000 bytes/s [SATA/600 = 600,000,000 bytes/s]

* KB = 1000 bytes, KiB = 1024 bytes

Sequential Read (Q= 32,T= 1) : 67.869 MB/s

Sequential Write (Q= 32,T= 1) : 61.400 MB/s

Random Read 4KiB (Q= 8,T= 8) : 5.337 MB/s [ 1303.0 IOPS]

Random Write 4KiB (Q= 8,T= 8) : 5.779 MB/s [ 1410.9 IOPS]

Random Read 4KiB (Q= 32,T= 1) : 5.305 MB/s [ 1295.2 IOPS]

Random Write 4KiB (Q= 32,T= 1) : 5.766 MB/s [ 1407.7 IOPS]

Random Read 4KiB (Q= 1,T= 1) : 4.491 MB/s [ 1096.4 IOPS]

Random Write 4KiB (Q= 1,T= 1) : 4.777 MB/s [ 1166.3 IOPS]

Test : 500 MiB [C: 35.8% (22.7/63.4 GiB)] (x5) [Interval=5 sec]

Date : 2019/06/17 22:52:21

OS : Windows 10 Professional [10.0 Build 18362] (x64)
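To put the three runs side by side, the sketch below computes how much faster the RAM SLOG and Sync Disabled runs are than the Sync Enabled baseline for writes. The numbers are copied from the CrystalDiskMark output above. The dictionary layout and key names are just one way to organize them, not anything the benchmark produces.

```python
# Write results from the three CrystalDiskMark runs above
results = {
    "Sync Enabled":  {"seq_write_mb_s": 9.618,  "rand_write_q1t1_iops": 104.0},
    "RAM SLOG":      {"seq_write_mb_s": 54.430, "rand_write_q1t1_iops": 1001.0},
    "Sync Disabled": {"seq_write_mb_s": 61.400, "rand_write_q1t1_iops": 1166.3},
}

baseline = results["Sync Enabled"]

# Speedup of each configuration relative to sync enabled with no SLOG
speedups = {
    name: {metric: value / baseline[metric] for metric, value in run.items()}
    for name, run in results.items()
}

for name, ratios in speedups.items():
    print(
        f"{name:<14} sequential write x{ratios['seq_write_mb_s']:.1f}, "
        f"random write Q1T1 x{ratios['rand_write_q1t1_iops']:.1f}"
    )
```

The RAM SLOG run recovers most of the gap to Sync Disabled while read results stay nearly identical across all three runs, which suggests (but does not prove) that the sync write path on the spinning disks is the limiting factor rather than the network link.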