iamafish (Dabbler, joined Jan 13, 2016, 10 messages)

I'm somewhat new to FreeNAS and ZFS but have been configuring Hyper-V and iSCSI for several years. I'm using this newly constructed system:

FreeNAS 9.3.1: Supermicro 6048R-E1CR36L, 1x Xeon E5 2603v3, 64GB ECC DDR4

OS: 2x Kingston V300 60GB

Volume1: 8x WD Red Pro 3TB (4x2 Mirror), 2x 200GB Intel S3710 (ZIL/SLOG), 1x Intel 750 800GB PCI-E (L2ARC)

Volume2: 4x Samsung 850 EVO (2x2 Mirror)

Storage is accessed by 2x clustered Hyper-V 2012 R2 nodes using the Windows iSCSI initiator through a Netgear XS712T 10Gb switch. The NICs are all Intel X540 AT2. I've installed the latest drivers on the Windows nodes, set jumbo frames to 9216 on the switch and in Windows, and set "mtu 9000" on the FreeNAS box. MPIO is configured with 2 paths per target.
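As a rough sanity check on what this network path could deliver at best, here is a minimal Python sketch of the arithmetic. It assumes both MPIO paths are used evenly, IPv4 and TCP headers without options, and it ignores iSCSI PDU headers entirely, so treat the result as an upper bound rather than an expected number. The function names are made up for illustration.

```python
# Rough throughput ceiling for MPIO over 10GbE with jumbo frames.
LINK_GBPS = 10        # per-path line rate (from the post)
PATHS = 2             # MPIO paths per target (from the post)

ETH_OVERHEAD = 38     # preamble 8 + Ethernet header 14 + FCS 4 + inter-frame gap 12
IP_TCP_HEADERS = 40   # IPv4 20 + TCP 20, assuming no header options


def payload_efficiency(mtu: int) -> float:
    """Fraction of wire time that carries TCP payload for full-size frames."""
    return (mtu - IP_TCP_HEADERS) / (mtu + ETH_OVERHEAD)


def ceiling_mb_per_s(mtu: int, paths: int = PATHS, link_gbps: int = LINK_GBPS) -> float:
    """Best-case payload throughput in MB/s (decimal) across all paths."""
    raw_bytes_per_s = paths * link_gbps * 1e9 / 8
    return raw_bytes_per_s * payload_efficiency(mtu) / 1e6


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(f"MTU {mtu}: ~{ceiling_mb_per_s(mtu):.0f} MB/s ceiling")
```

With two 10Gb paths the raw ceiling is 2.5 GB/s, and jumbo frames only shave a small amount of header overhead off that, so sequential results approaching 2 GB/s would already be close to what the links can carry.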

I'm currently using 3 iSCSI targets on ZVOL device extents: a 3TB volume for data on volume 1, and a 1GB cluster quorum plus a 1TB volume for VM OS on volume 2. Apart from the quorum, these are presented to the Hyper-V nodes as Cluster Shared Volumes (CSV).

Typical VM workload is MSSQL feeding our internal data analysis tools written in Java or Python. Ideally, though, the data working set is in memory, so the SQL and OS traffic will be the main source of disk usage.

I'm in search of the optimal filesystem and volume configuration and I'm looking at these parameters:

- Dataset record size

- ZVOL block size

- ZFS compression

- Logical block size in the iSCSI extent

- NTFS/CSVFS allocation unit size used by the Hyper-V hosts for the CSV

- NTFS allocation unit size used by the guest within the VHDX

What is the best relationship between these values? Are there optimal ratios, or values that should match?

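The parameters are currently set as follows:

- Dataset record size: default/inherit

- ZVOL block size: 16KB

- ZFS compression: lz4

- Logical block size in the iSCSI extent: 4096

- NTFS/CSVFS allocation unit size on the CSV: 4KB

- NTFS allocation unit size in the guest: 4KB for OS, 64KB for SQL data volume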
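To make the "ratios or matching" question concrete, here is a small Python sketch of how a guest I/O of a given size and offset maps onto 16KB ZVOL blocks. It is a simplified model under stated assumptions: it only counts how many ZVOL blocks an I/O touches, and it ignores caching, lz4 compression, and how ZFS batches writes, so the ratio is a worst-case illustration rather than a measurement. The function names are hypothetical.

```python
KIB = 1024

VOLBLOCK = 16 * KIB  # ZVOL block size from the post


def blocks_touched(offset: int, length: int, volblock: int = VOLBLOCK) -> int:
    """Number of ZVOL blocks that the byte range [offset, offset + length) overlaps."""
    first = offset // volblock
    last = (offset + length - 1) // volblock
    return last - first + 1


def amplification(offset: int, length: int, volblock: int = VOLBLOCK) -> float:
    """Bytes of ZVOL blocks touched divided by bytes the guest asked for."""
    return blocks_touched(offset, length, volblock) * volblock / length


if __name__ == "__main__":
    cases = [
        ("4KB guest cluster, aligned", 0, 4 * KIB),
        ("64KB SQL cluster, aligned", 0, 64 * KIB),
        ("64KB SQL cluster, shifted by 4KB", 4 * KIB, 64 * KIB),
    ]
    for label, offset, length in cases:
        print(f"{label}: {blocks_touched(offset, length)} block(s), "
              f"x{amplification(offset, length):.2f}")
```

In this model a 4KB I/O always touches a full 16KB block (a factor of 4), an aligned 64KB I/O maps cleanly onto four blocks, and a 64KB I/O shifted by 4KB spills into a fifth block. That is the sense in which the sizes further up the stack would ideally be multiples of, and aligned to, the ZVOL block size.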

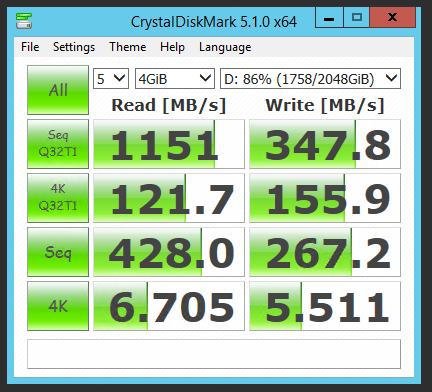

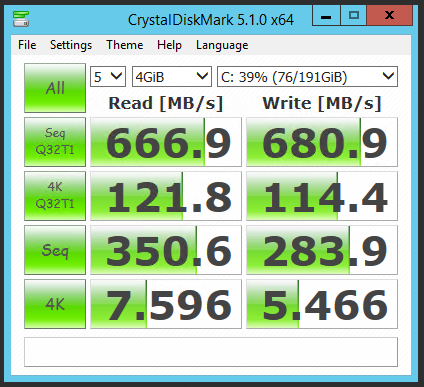

I'm using CrystalDiskMark to benchmark inside an otherwise idle Windows Server 2012 VM, at the moment sequential performance looks OK and sometimes I have hit nearly 2GB/s on Seq Q32T1 tests so the iSCSI networking and MPIO seems to be working, but that result is sporadic and it's usually more like the screenshots below.

However the 4K random results seem appalling (less than a single basic SSD) for test file sizes that should fit in the ARC/L2ARC and so be coming from RAM or SSDs whichever volume I am using.

I just got the following from CrystalDiskMark on volume1:

And volume 2:
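For a sense of scale on the 4K random numbers, here is a short Python sketch of the relationship between per-I/O round-trip latency and throughput at a given queue depth. The latency values are hypothetical placeholders, not measurements from this system, and the model assumes every outstanding I/O completes in exactly that time.

```python
def iops_from_latency(latency_us: float, queue_depth: int = 1) -> float:
    """Ideal IOPS when each I/O takes latency_us and queue_depth are in flight."""
    return queue_depth * 1_000_000 / latency_us


def throughput_mb_per_s(latency_us: float, io_bytes: int = 4096,
                        queue_depth: int = 1) -> float:
    """Ideal throughput in MB/s (decimal) for fixed-size I/Os."""
    return iops_from_latency(latency_us, queue_depth) * io_bytes / 1e6


if __name__ == "__main__":
    # Hypothetical end-to-end latencies (guest -> host -> iSCSI -> FreeNAS and back).
    for latency_us in (100, 200, 500):
        print(f"{latency_us} us per 4K I/O at QD1: "
              f"{throughput_mb_per_s(latency_us):.1f} MB/s")
```

At queue depth 1 the 4K figure is set almost entirely by round-trip latency rather than by the speed of the backing storage, so even reads served from RAM on the FreeNAS side would be capped by the time each request spends in the virtualization and network layers.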

Any ideas where I can make some improvements?

Thanks!
