Hello,

I've just migrated from CORE over to SCALE (super excited for Linux over FreeBSD btw) and upon first boot, I noticed my iSCSI networking was broken.

Hardware is a Dell R720xd with 26 SSDs, 384 GB ECC DDR3-1333, 2x E5-2643 v2, PERC flashed to IT mode, and a Dell rNDC NIC with dual 1 Gb Ethernet and dual 10 Gbps SFP+.

Previous config under CORE was working perfectly:

10 Gbps SFP+ port 1 was on VLAN 101 all the way through the switch to the NICs on the server. vmkping from the ESXi host and a regular ping from the TrueNAS server both succeeded.

10 Gbps SFP+ port 2 was on VLAN 102, same story.

After the upgrade, even after recreating the VLAN interfaces and reassigning the ports, I can only ever get one of the two to ping at a time, though either one can be the active one. I am fairly certain this is a networking issue or a bug, because the interface that doesn't work shows no inbound traffic and no outbound traffic other than the failed pings sent from TrueNAS. Its path is listed as DEAD in the iSCSI paths view on VMware ESXi 8.

Output of ifconfig -a:

    root@Stronghold[~]# ifconfig -a
    eno1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
            ether c8:1f:66:ec:e9:33 txqueuelen 1000 (Ethernet)
            RX packets 20036112 bytes 21176045187 (19.7 GiB)
            RX errors 0 dropped 116181 overruns 0 frame 0
            TX packets 20409168 bytes 26716749643 (24.8 GiB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
            device interrupt 143 memory 0xd5000000-d57fffff

    eno2: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
            ether c8:1f:66:ec:e9:35 txqueuelen 1000 (Ethernet)
            RX packets 197953 bytes 21273790 (20.2 MiB)
            RX errors 0 dropped 116173 overruns 0 frame 0
            TX packets 246 bytes 18435 (18.0 KiB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
            device interrupt 170 memory 0xd6000000-d67fffff

    eno3: flags=4098<BROADCAST,MULTICAST> mtu 1500
            ether c8:1f:66:ec:e9:37 txqueuelen 1000 (Ethernet)
            RX packets 0 bytes 0 (0.0 B)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 0 bytes 0 (0.0 B)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
            device interrupt 170 memory 0xd7000000-d77fffff

    eno4: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
            inet 192.168.1.249 netmask 255.255.255.0 broadcast 192.168.1.255
            ether c8:1f:66:ec:e9:39 txqueuelen 1000 (Ethernet)
            RX packets 203629 bytes 21705551 (20.7 MiB)
            RX errors 0 dropped 116163 overruns 0 frame 0
            TX packets 10580 bytes 9433136 (8.9 MiB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
            device interrupt 191 memory 0xd8000000-d87fffff

    lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536
            inet 127.0.0.1 netmask 255.0.0.0
            inet6 ::1 prefixlen 128 scopeid 0x10<host>
            loop txqueuelen 1000 (Local Loopback)
            RX packets 6044 bytes 1572156 (1.4 MiB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 6044 bytes 1572156 (1.4 MiB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    vlan101: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
            inet 192.168.101.100 netmask 255.255.255.0 broadcast 192.168.101.255
            ether c8:1f:66:ec:e9:33 txqueuelen 1000 (Ethernet)
            RX packets 7296752 bytes 20066216937 (18.6 GiB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 8661678 bytes 25778147747 (24.0 GiB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    vlan102: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
            inet 192.168.102.100 netmask 255.255.255.0 broadcast 192.168.102.255
            ether c8:1f:66:ec:e9:35 txqueuelen 1000 (Ethernet)
            RX packets 0 bytes 0 (0.0 B)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 37 bytes 8165 (7.9 KiB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
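
To make the asymmetry in the counters easier to spot, here is a minimal Python sketch that reads net-tools style `ifconfig` output and lists interfaces that have sent packets but never received any. The function names `parse_ifconfig` and `silent_receivers` are made up for illustration, and the sample text is trimmed from the output above. It only reads counters and does not diagnose the cause.

```python
import re

# Trimmed copy of the two VLAN stanzas from the ifconfig output above.
SAMPLE = """\
vlan101: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet 192.168.101.100 netmask 255.255.255.0 broadcast 192.168.101.255
        RX packets 7296752 bytes 20066216937 (18.6 GiB)
        TX packets 8661678 bytes 25778147747 (24.0 GiB)
vlan102: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet 192.168.102.100 netmask 255.255.255.0 broadcast 192.168.102.255
        RX packets 0 bytes 0 (0.0 B)
        TX packets 37 bytes 8165 (7.9 KiB)
"""


def parse_ifconfig(text):
    """Return {interface: {"rx": packets, "tx": packets}} from ifconfig text."""
    stats = {}
    current = None
    for line in text.splitlines():
        # A stanza starts with "<name>: flags=..."
        header = re.match(r"^(\S+): flags=", line)
        if header:
            current = header.group(1)
            stats[current] = {"rx": 0, "tx": 0}
            continue
        # Counter lines look like "RX packets <n> bytes ..."
        counter = re.match(r"^\s*(RX|TX) packets (\d+)", line)
        if counter and current is not None:
            stats[current][counter.group(1).lower()] = int(counter.group(2))
    return stats


def silent_receivers(stats):
    """Interfaces that have transmitted packets but received none."""
    return [name for name, s in stats.items() if s["tx"] > 0 and s["rx"] == 0]


if __name__ == "__main__":
    print(silent_receivers(parse_ifconfig(SAMPLE)))
```

Run against the sample, this reports only `vlan102`, which matches what I see: 37 packets out and nothing back.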

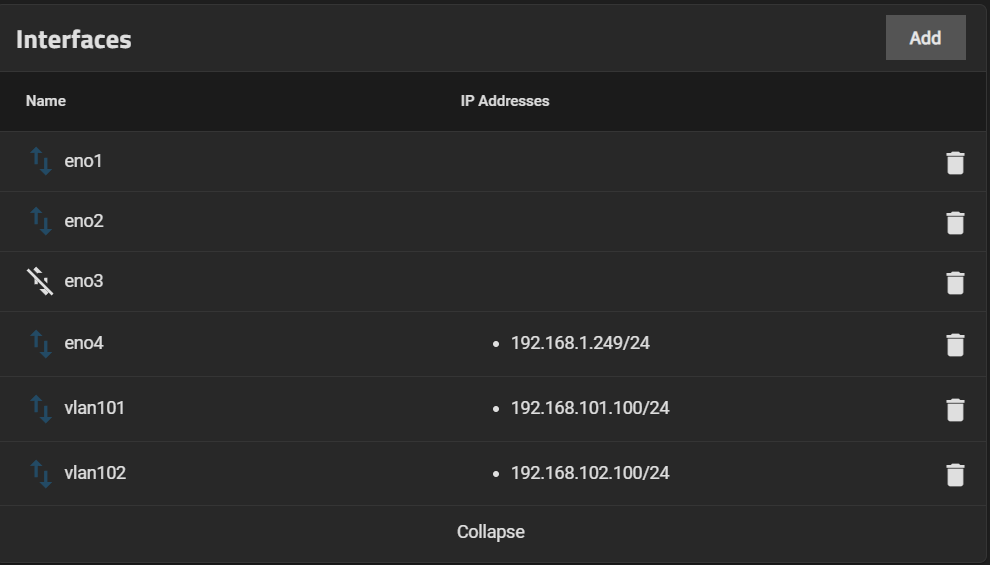

Networking settings page:

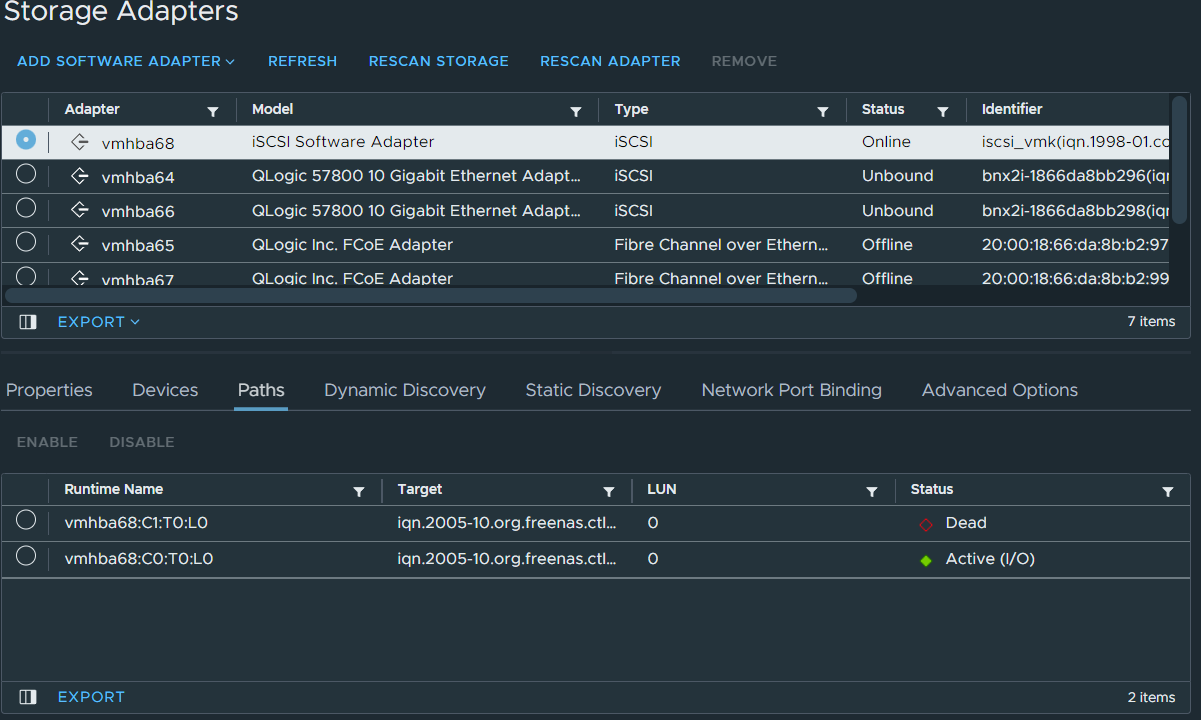

From the ESXi side (hostname Bastion):

    [root@Bastion:~] vmkping -I vmk1 192.168.101.100
    PING 192.168.101.100 (192.168.101.100): 56 data bytes
    64 bytes from 192.168.101.100: icmp_seq=0 ttl=64 time=0.146 ms
    64 bytes from 192.168.101.100: icmp_seq=1 ttl=64 time=0.179 ms
    64 bytes from 192.168.101.100: icmp_seq=2 ttl=64 time=0.140 ms

    --- 192.168.101.100 ping statistics ---
    3 packets transmitted, 3 packets received, 0% packet loss
    round-trip min/avg/max = 0.140/0.155/0.179 ms

    [root@Bastion:~] vmkping -I vmk2 192.168.102.100
    PING 192.168.102.100 (192.168.102.100): 56 data bytes
    sendto() failed (Host is down)
    [root@Bastion:~]

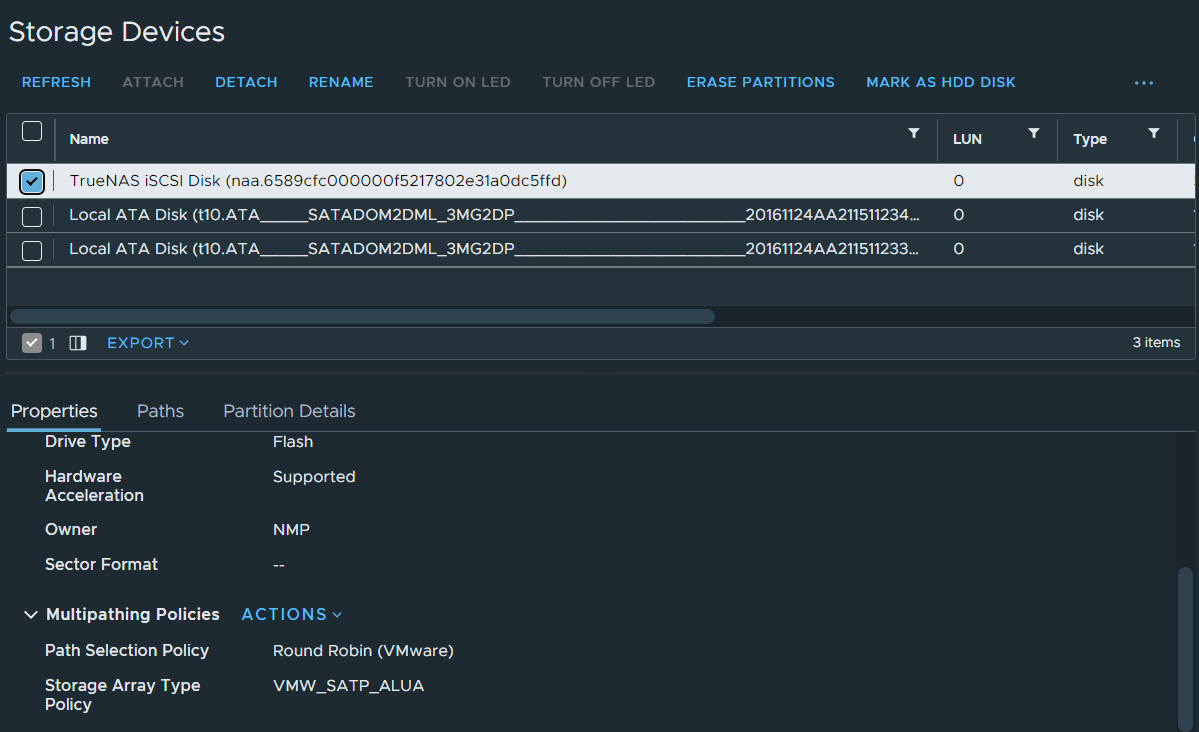

The Round Robin config set from before:
