I was wondering if someone could provide some ideas.

I am testing a new server running TrueNAS Scale 22.12 as a replacement for my old server running legacy FreeNAS.

When setting up an iSCSI target to be used for MS SQL storage, I am running into an issue with the reported block size.

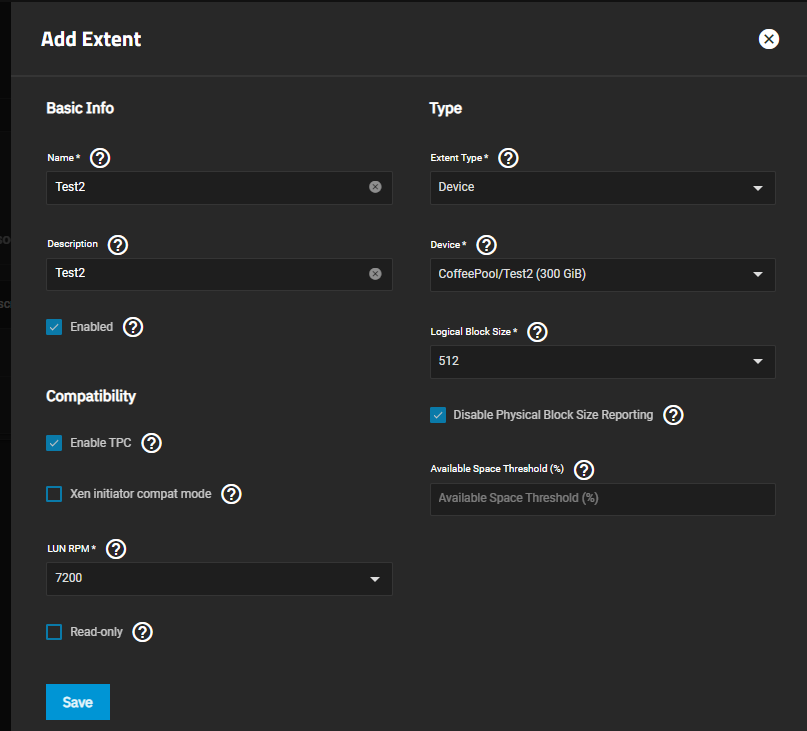

On the extent, the block size is set to 512. I have tested with the "Disable Physical Block Size Reporting" checkbox both checked and unchecked, and it makes no difference.

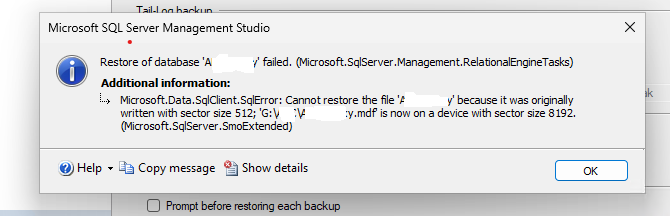

The problem is that when I try to restore a database to the new drive in Windows 11, after mounting it with the iSCSI initiator, I get this error.

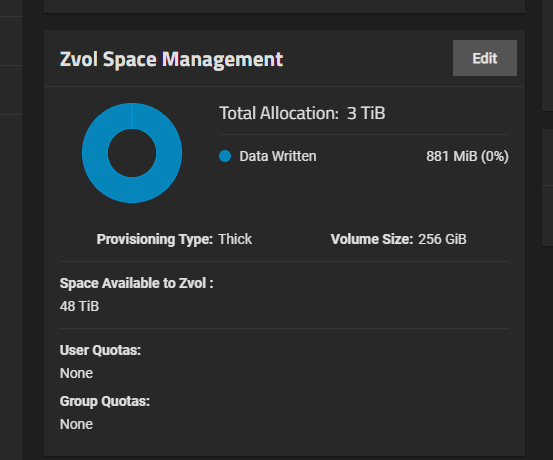

If I manually create the zvol behind the target from the command line, forcing the block size down to 512 on the zvol itself, it is fine. The problem then is that the zvol takes up a lot more space than what is allocated to the target disk.

When I created this zvol I used this command, as 512 wasn't an option in the UI.

Any help would be greatly appreciated. I am going to run some other tests to see if I can figure it out, but I might need to submit this as a bug.
