mikesoultanian

Maybe I'm not looking at it the right way, but I can't make heads or tails of the storage view. I'll just list the issues I'm having, and hopefully it won't be too difficult to get me oriented. I'm running FreeNAS 11 in a Hyper-V VM for testing purposes, using the original UI.

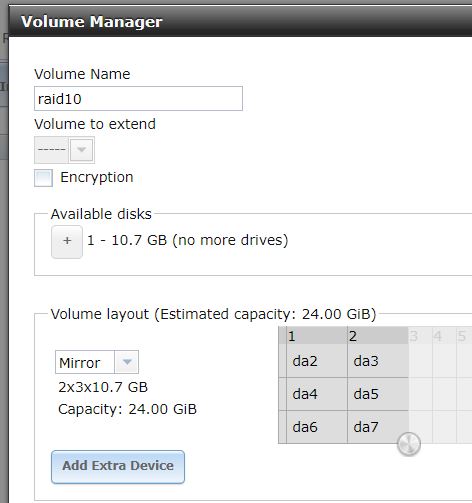

1. I created six 10GB drives, and I'd like to create a RAID 10 because that's what we'll use in production (actually, we have a production server that I've inherited, and I'm trying to understand how it's configured). If I understand correctly, a RAID 10 of six 10GB drives should yield a 30GB volume, right? So first I went to the volume manager, created a volume called "raid10" from a mirror of two drives, and saved it. Then I went back into the volume manager, extended raid10 with another mirror, and did the same for the last two drives. Is this now a RAID 10 array? Just because there are now three mirrors listed in volume status below the volume name "raid10", does that mean the volume is striped across the mirrors? This is the way I've seen others build RAID 10 arrays, so I'm assuming I did it right.

Then it seems I can also go into the volume manager, name my RAID, choose a 2x3 volume layout, and it says "mirror". Is this also RAID 10?
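The 30GB expectation in item 1 can be checked with a small sketch. It assumes the usual rule for striped mirrors: data is striped across the mirrors, and each two-way mirror contributes the size of its smallest member. It deliberately ignores partitioning, GB/GiB conversion, and ZFS overhead, and the helper name is hypothetical.

```python
from typing import List


def striped_mirror_capacity_gb(mirrors: List[List[float]]) -> float:
    """Usable capacity of a pool built from mirrors striped together.

    Each mirror keeps a full copy of its data on every member disk, so it
    contributes the size of its smallest disk; striping adds the mirrors
    together. Swap partitions, metadata, and other overheads are ignored.
    """
    if any(len(m) < 2 for m in mirrors):
        raise ValueError("every mirror needs at least two disks")
    return sum(min(m) for m in mirrors)


# Six 10GB disks arranged as three two-way mirrors.
layout = [[10.0, 10.0], [10.0, 10.0], [10.0, 10.0]]
print(striped_mirror_capacity_gb(layout))  # 30.0
```

So on paper the answer is 30GB; any lower number on screen has to come from something this sketch leaves out.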

2. Why does it show the capacity as 24GB? Is that because 24GB is 80% of 30GB? As far as I can tell, the 80% rule applies when creating zvols (you shouldn't use more than 80%, but you can override that when creating the zvol if you're willing to deal with the consequences), so I don't understand the capacity being shown.
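Two candidate explanations for the 24GB figure happen to give the same answer for six 10GB disks, so a sketch that evaluates both suggests a way to tell them apart. The second hypothesis is an assumption, not something shown in the post: it supposes FreeNAS reserves a 2 GiB swap partition on every data disk (its default, as far as we know) and that the volume manager reports capacity after subtracting it. Units are treated loosely as "GB" throughout.

```python
SWAP_PER_DISK_GB = 2.0  # ASSUMPTION: default per-disk swap partition size


def capacity_80_percent_rule(disk_gb: float, n_mirrors: int) -> float:
    """Hypothesis A: the UI shows 80% of the raw striped-mirror capacity."""
    return 0.8 * disk_gb * n_mirrors


def capacity_minus_swap(disk_gb: float, n_mirrors: int) -> float:
    """Hypothesis B: each disk loses a swap partition before ZFS sees it."""
    return (disk_gb - SWAP_PER_DISK_GB) * n_mirrors


for disk_gb in (10.0, 20.0):
    a = capacity_80_percent_rule(disk_gb, n_mirrors=3)
    b = capacity_minus_swap(disk_gb, n_mirrors=3)
    print(f"{disk_gb:.0f}GB disks: 80% rule -> {a:.1f}, minus swap -> {b:.1f}")
```

With 10GB disks both hypotheses give 24.0. With 20GB disks they diverge (48.0 versus 54.0), so a second test volume built from 20GB virtual disks would indicate which one is closer to what the UI is doing.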

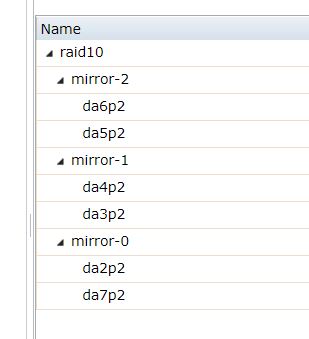

3. How do I know that I actually have a RAID 10? It seems like using either of the methods above yields the following view when I go to volume status:

I understand that it's not really RAID 10 but RAID 1+0, but if this is in fact a RAID 10 (1+0), there should be some indication that the mirrors are striped. To my FreeNAS-untrained eyes, the listing above just shows that my volume contains three mirrors. Maybe it's impossible to have three mirrors in a volume without them being striped, but it would be nice if the UI noted more clearly that the drives listed under a volume are striped; it's just not very intuitive to a beginner. Yes, you could argue that a beginner shouldn't be playing with a NAS, but hey, I gotta start somewhere ;)
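In ZFS, a pool spreads writes across all of its top-level vdevs, so whatever is listed directly under the pool name is striped; a pool whose top-level vdevs are all mirrors is ZFS's "striped mirrors", the RAID 10 equivalent. The sketch below encodes that reading rule over a hypothetical description of the pool (the `Vdev` class and the disk names are made up for illustration) to make explicit how the volume status listing can be interpreted.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Vdev:
    kind: str  # e.g. "mirror", "raidz2", or "disk" (a bare single disk)
    disks: List[str] = field(default_factory=list)


def describe_layout(vdevs: List[Vdev]) -> str:
    """Describe a pool from the vdevs listed directly under its name.

    ZFS stripes writes across every top-level vdev, so the layout is
    decided by what each vdev is and how many of them there are.
    """
    if not vdevs:
        return "empty pool"
    n = len(vdevs)
    kinds = {v.kind for v in vdevs}
    if kinds == {"mirror"}:
        return "RAID 1 (one mirror)" if n == 1 else f"RAID 10 equivalent: {n} mirrors striped"
    if kinds == {"disk"}:
        return "single disk" if n == 1 else f"RAID 0 equivalent: {n} disks striped"
    return f"{n} top-level vdev(s) striped: {', '.join(sorted(kinds))}"


# Hypothetical disk names for the three mirrors shown under "raid10".
raid10 = [
    Vdev("mirror", ["da1", "da2"]),
    Vdev("mirror", ["da3", "da4"]),
    Vdev("mirror", ["da5", "da6"]),
]
print(describe_layout(raid10))      # RAID 10 equivalent: 3 mirrors striped
print(describe_layout(raid10[:1]))  # RAID 1 (one mirror)
```

The striping is implicit in the structure, which is why the listing shows three mirrors without a separate "stripe" label.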

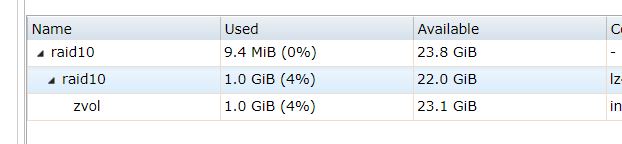

4. The main storage page makes no sense to me. If I actually created a RAID 10 using six 10GB drives, shouldn't it be 30GB (60/2)? This is what the main storage page looks like:

As you can see, it says 23.8. Where is that number coming from? And what does "9.2MB used" mean? Is that just space that's stolen for management purposes? If so, I'm cool with that...

5. I'm trying to create a zvol for my VM witness, which should be 1GB. I create it, and now it says 1GB. That makes sense (yay, something makes sense to me!). It also makes sense that the dataset says 1GB used, but why doesn't the volume show 1GB used (or 1.0094GB, to be exact)? That would make more sense, because the dataset is essentially using up space contained in the volume, right?

6. If I started with 23.8GB available (which still doesn't make sense to me) and I used 1GB, how did I end up with 22GB available for the dataset? Rounding error?

7. And if I used 1GB for the zvol, why did the space available jump up to 23.1? Shouldn't it be the same as the dataset? I still don't understand exactly what a dataset is, but I'm just accepting that it's a layer that sits between the volume and the zvols...
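Items 5 to 7 may all come from the same bookkeeping. The sketch below is a model built on several assumptions that the screenshots do not confirm: the volume row reports pool-level free/allocated space, which counts only blocks actually written; the dataset row reports ZFS "available", which also excludes the pool's slop reservation (1/32 of the pool with the default `spa_slop_shift` of 5) and the refreservation held by a non-sparse zvol; a zvol's own "available" adds back the unused part of its refreservation; and the 1GB zvol's refreservation is about 1.03GB once metadata is included (an illustrative figure, not a measured one). Under those assumptions the displayed 22.0 and 23.1 both fall out.

```python
# Figures are in "GB" as the UI displays them. POOL_FREE is the displayed
# value; every other constant is an illustrative assumption.
POOL_FREE = 23.8            # "available" on the volume row, as displayed
POOL_SIZE = 23.8            # ASSUMPTION: nearly empty pool, so size ~ free
SLOP_FRACTION = 1 / 32      # ASSUMPTION: default slop reservation (spa_slop_shift = 5)
ZVOL_REFRESERVATION = 1.03  # ASSUMPTION: 1GB volsize plus metadata overhead
ZVOL_WRITTEN = 0.0          # nothing has been written to the witness zvol yet

slop = POOL_SIZE * SLOP_FRACTION

# Item 5: pool-level accounting counts written blocks only, so reserving
# space for the zvol does not change the volume row's "used".
volume_used_increase = ZVOL_WRITTEN

# Item 6: the dataset's "available" excludes the slop space and the zvol's
# reservation, which is charged to its parent dataset as "used".
dataset_avail = POOL_FREE - slop - ZVOL_REFRESERVATION

# Item 7: a zvol's own "available" adds back the unused part of its reservation.
zvol_avail = dataset_avail + max(0.0, ZVOL_REFRESERVATION - ZVOL_WRITTEN)

print(f"volume used increase: {volume_used_increase:.1f}")  # 0.0
print(f"dataset available:    {dataset_avail:.1f}")         # 22.0
print(f"zvol available:       {zvol_avail:.1f}")            # 23.1
```

If this model is right, the 23.1 on the zvol row is not extra free space: it includes the roughly 1GB already set aside for that same zvol.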

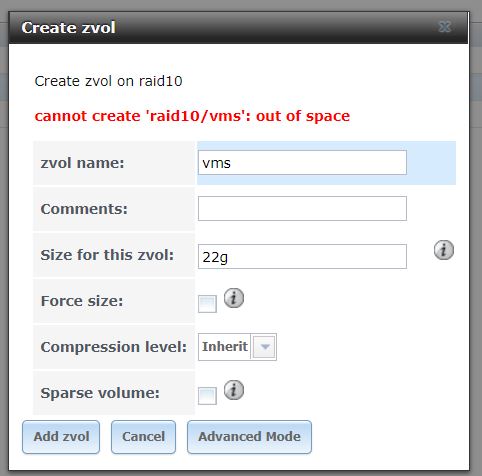

8. When I go to create another zvol, I want to create it using all the available space (is it 22.0 or 23.1?), but when I try and create a zvol for each of those, I get an error:

I know about the 80% rule (per 8.1.4 in the manual), so I should only create a zvol that is 80% of the available capacity (of the dataset?), which would mean I should use 17.6GB? Just for kicks I use 20GB, and it lets me through without my having to select the "force size" checkbox. That doesn't make sense... or was the 80% already accounted for when I created the volume? It would be really helpful if the UI told me "x amount of space is available within the 80% rule; otherwise, select the 'force size' checkbox".
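The arithmetic in item 8 can be laid out side by side. This sketch only computes 80% of each "available" figure quoted in the post and checks whether 20GB stays under it; it does not reproduce how the FreeNAS UI actually decides when to require "force size", which is not visible from the post.

```python
REQUESTED_GB = 20.0  # the size that was accepted without "force size"

# Candidate bases for the 80% rule, using the figures quoted in the post.
candidates = {
    "dataset available": 22.0,
    "zvol available": 23.1,
    "volume available": 23.8,
    "volume manager capacity": 24.0,
}

for name, base in candidates.items():
    limit = 0.8 * base
    verdict = "under" if REQUESTED_GB <= limit else "over"
    print(f"{name}: 80% = {limit:.2f}GB, so {REQUESTED_GB:.0f}GB is {verdict} the limit")
```

All four limits (17.60, 18.48, 19.04, and 19.20GB) come out below 20GB, so whatever the UI compares against, it is apparently not a simple 80% of any figure shown on screen.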

I think that's about all I have so far. I'm really trying to understand this and I've been staring at it for a while now, watching videos, reading posts, reading the manual, creating a test VM environment, and I just can't make sense of this. I really tried to RTFM before making this post. I appreciate anyone's help!!

Thanks!

Mike
