jafin

Explorer

- Joined

- May 30, 2011

- Messages

- 51

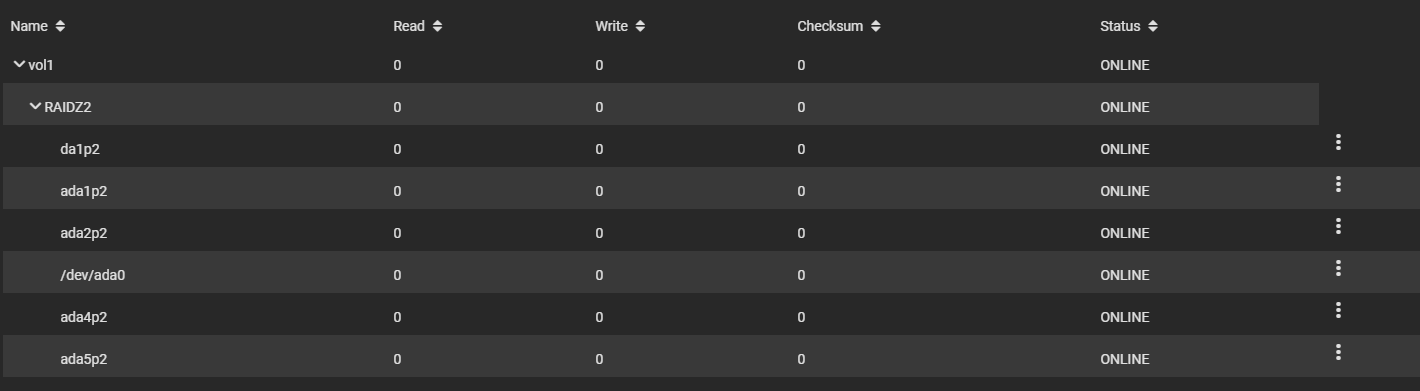

I replaced a failed disk in a raidz2 volume.


Since then, the new drive no longer shows up under a gptid label. Instead it is listed as ada0 in the zpool status output.

This seems to stop the TrueNAS GUI from acting on the drive: Edit, Offline and Replace all do nothing when applied to it.

When I click any of these options for /dev/ada0, the Chrome developer console shows this error:

Code:

ERROR TypeError: Cannot read property 'identifier' of undefined

In the TrueNAS GUI the disk is shown as /dev/ada0.

Is there a way to apply a gptid to this device, so that the GUI can operate on it?

Code:

NAME                                            STATE     READ WRITE CKSUM
vol1                                            ONLINE       0     0     0
  raidz2-0                                      ONLINE       0     0     0
    gptid/6838364e-5018-11eb-9efa-3ca82a4ba544  ONLINE       0     0     0
    gptid/a987dc98-5204-11eb-ab4d-3ca82a4ba544  ONLINE       0     0     0
    gptid/4a1fd222-b266-11e7-8c66-3ca82a4ba544  ONLINE       0     0     0
    ada0                                        ONLINE       0     0     0
    gptid/962a2678-4e36-11eb-9efa-3ca82a4ba544  ONLINE       0     0     0
    gptid/5448fa00-241f-11e7-8724-3ca82a4ba544  ONLINE       0     0     0
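To check a pool for the same symptom, here is a minimal Python sketch. It is my own helper, not a TrueNAS or ZFS tool, and it scans zpool status text and lists the member devices that are not referenced by a gptid/ label. It assumes the usual layout shown above: each device row starts with the device name, followed by a state such as ONLINE and the READ/WRITE/CKSUM counters. The function names member_devices and non_gptid_devices are hypothetical.

```python
import re

# zpool status output from this post (columns: NAME STATE READ WRITE CKSUM).
STATUS = """\
NAME                                            STATE     READ WRITE CKSUM
vol1                                            ONLINE       0     0     0
  raidz2-0                                      ONLINE       0     0     0
    gptid/6838364e-5018-11eb-9efa-3ca82a4ba544  ONLINE       0     0     0
    gptid/a987dc98-5204-11eb-ab4d-3ca82a4ba544  ONLINE       0     0     0
    gptid/4a1fd222-b266-11e7-8c66-3ca82a4ba544  ONLINE       0     0     0
    ada0                                        ONLINE       0     0     0
    gptid/962a2678-4e36-11eb-9efa-3ca82a4ba544  ONLINE       0     0     0
    gptid/5448fa00-241f-11e7-8724-3ca82a4ba544  ONLINE       0     0     0
"""

# States a vdev or device row can report; other lines (header, "errors:", "scan:") are ignored.
STATES = {"ONLINE", "DEGRADED", "FAULTED", "OFFLINE", "UNAVAIL", "REMOVED"}

# Group vdev rows (raidz1-0, raidz2-0, mirror-1, ...) are not physical devices.
VDEV_GROUP = re.compile(r"(raidz[123]?|mirror)-\d+")


def member_devices(status_text: str, pool: str) -> list[str]:
    """Return the device names listed under `pool`, skipping the pool and vdev group rows."""
    devices = []
    for line in status_text.splitlines():
        fields = line.split()
        # A device row has at least NAME STATE READ WRITE CKSUM (a note may follow).
        if len(fields) < 5 or fields[1] not in STATES:
            continue
        name = fields[0]
        if name == pool or VDEV_GROUP.fullmatch(name):
            continue
        devices.append(name)
    return devices


def non_gptid_devices(status_text: str, pool: str) -> list[str]:
    """Return member devices that are not referenced by a gptid/ label."""
    return [d for d in member_devices(status_text, pool) if not d.startswith("gptid/")]


print(non_gptid_devices(STATUS, "vol1"))  # ['ada0']
```

Against the output above it prints ['ada0']. On a pool where every member is attached by gptid it prints an empty list.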
