Rob Townley

Dabbler

- Joined

- May 1, 2017

- Messages

- 19

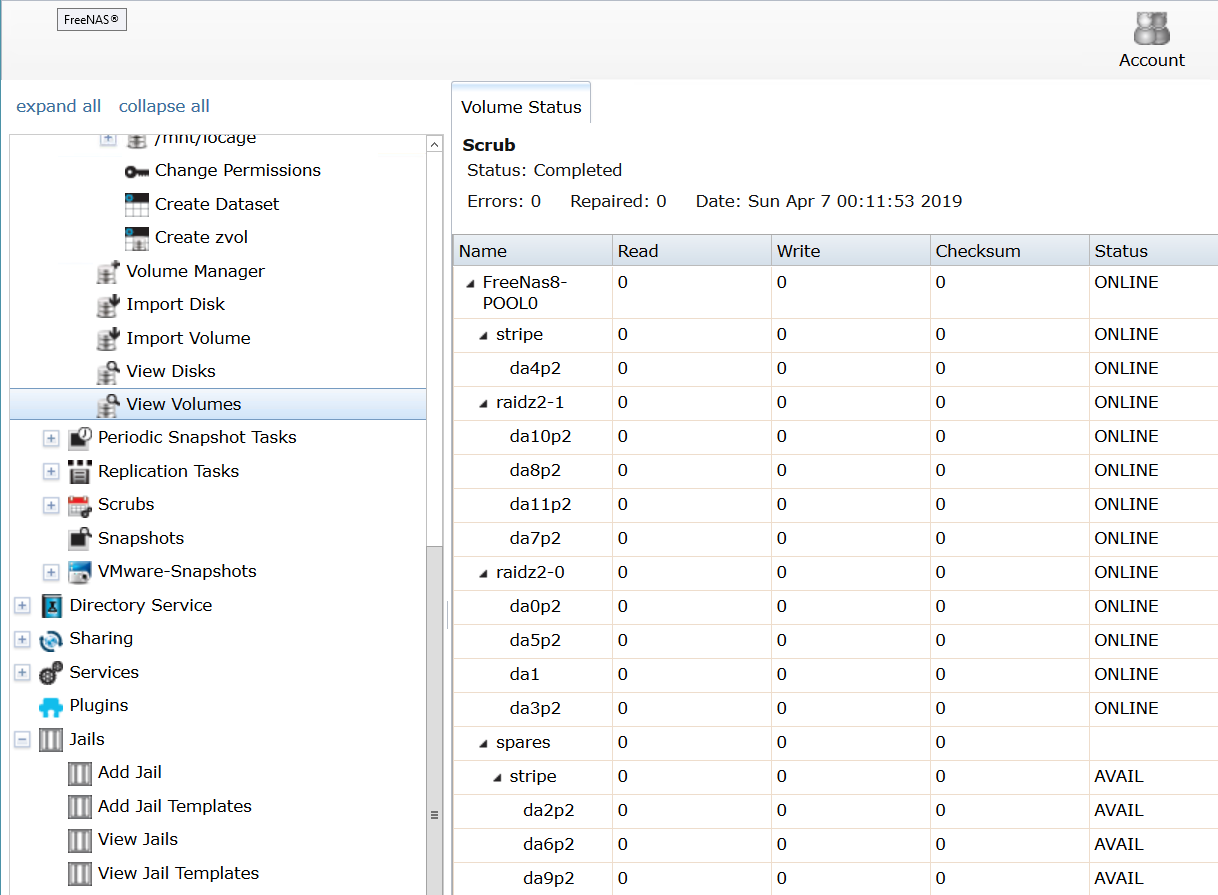

I don't want the stripe. I want to get rid of the stupid stripe and move da4p2 into one of the raidz arrays. The backstory is that at one time I had one or two 8TB SATA drives that were only recognized as 2.2TB by the very old SAS controller. I had attempted to put them into their own RAID set to see if more of the drive space would be seen. I ended up swapping them out for 3TB SAS drives because at least the entire drive is seen. I have no idea if any of my actual data is on this one-drive stripe. So how would one move any used blocks on the stripe to the raidz2 arrays? Currently, there is only 109GiB used and 24.4TiB available. So there is tons of free space, but how does one know where the actual data resides?

Storage --> Volumes --> "View Volumes" --> highlight the pool or top line --> "Volume Status" --> click da4p2 and the options presented are "Edit, Offline, or Replace".

Under "View Disks", the only options available are "Wipe" and "Edit".

Any pointers as to where to read up?

Is the only real option to move the 109GiB to another machine and start from scratch on this one?

Build FreeNAS-9.10.2-U6 (561f0d7a1)

Platform Intel(R) Xeon(R) CPU L5410 @ 2.33GHz

Memory 32732MB

Load Average 0.11, 0.23, 0.23
