Sphinxicus

It's been a long time in the making, and while this will not be a groundbreaking build log, I thought I would pop it up here for posterity and in the hope that it may help at least one person with something. Even if that something is to not do as I have done ;)

The requirements for my build were simple enough. I wanted to consolidate a lot of bare metal hosts, each performing various tasks, onto one ESXi host, and at the same time get a NAS that I could easily expand, with all the advantages that the ZFS file system has to offer.

I took a lot of inspiration from the Norco build that @Stux posted years ago and went from there.

Hardware

Case: X-Case RM 424 - 24 | A 24-bay 4U rackmount case with rail kit

Motherboard: Supermicro X10DRL-I-O

CPU: E5-2620v4 x2

RAM: 32GB (4x 8GB DIMMs) Samsung DDR4 2133 RDIMM ECC (M393A1G40DB0-CPB)

PSU: Corsair RMx 1000

HBA: 2x IBM ServeRAID M1015 flashed to IT mode (the reason for two of these is explained below)

CPU Heatsink: 2x Noctua NH-U9S with NF-A9 92mm fans

ESXi Boot disk: Sandisk Cruzer Fit 16GB USB 2.0 Flash Drive

ESXi Datastore Disk: Samsung 970 EVO 250GB M.2 NVMe SSD

Hard Disks: 6x 3TB WD Reds + various other disks that will be housed in the case but not used within FreeNAS

UPS: APC SC450RMI1U

Build Process

This part is a bit picture heavy, so apologies if it's too much. I know that I like seeing the pictures, so others may too.

The Case

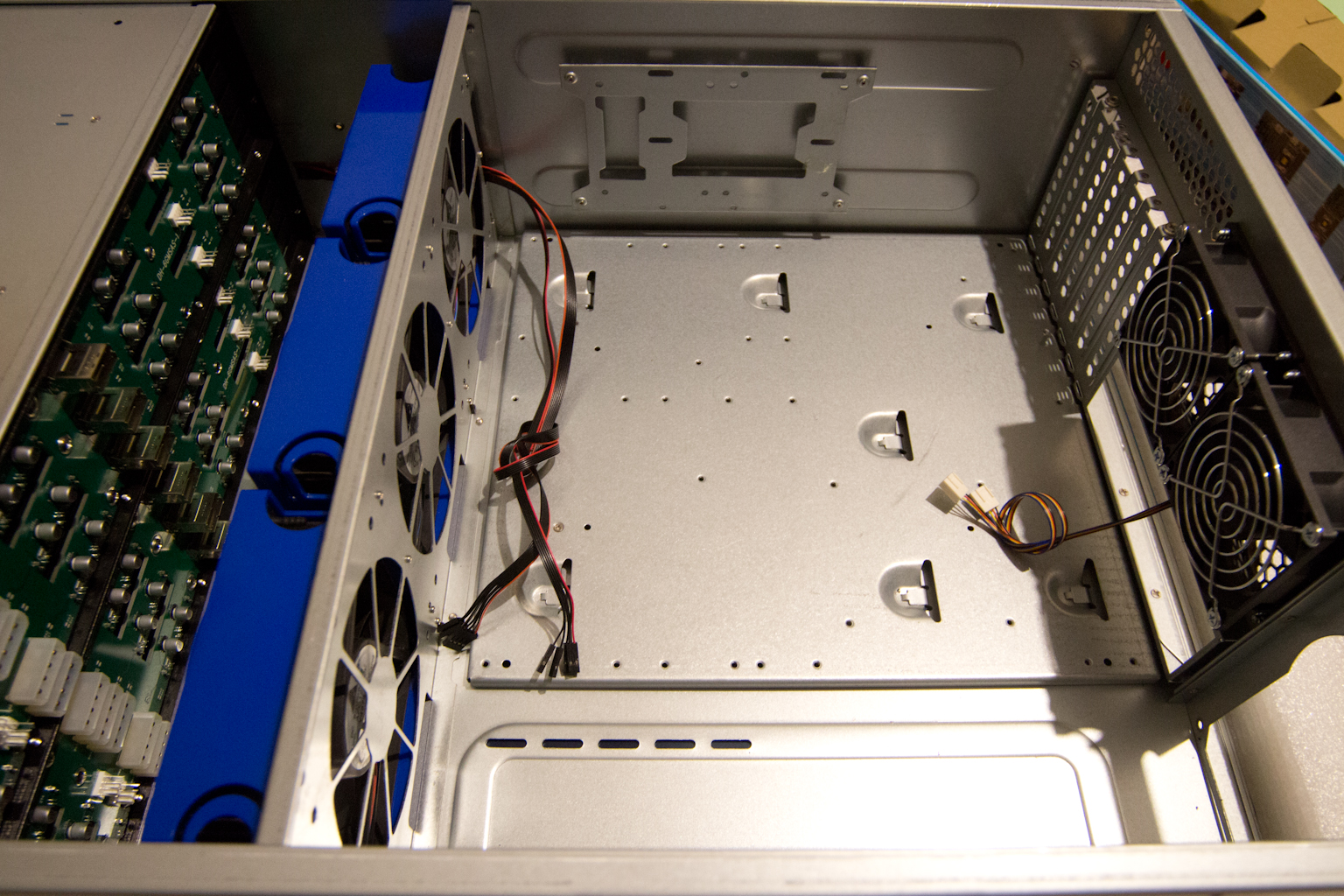

The case itself, as you would expect for a 4U case, is pretty spacious inside. It can take an E-ATX motherboard if required.

It came with 5 fans in total: 3x 120mm fans in the fan wall and 2x 80mm exhaust fans.

View of the empty case

It takes a normal ATX power supply, so there are none of the screaming fans that the removable PSUs in some Supermicro cases have. This is a family NAS, so no redundancy is required as we have no availability SLAs.

The back-plane on the case is easily accessible (especially with the fans out) and has plenty of power sockets.

Plenty of molex connectors on the back-plane

Not sure if they all had to be plugged in, but I obliged as I went.

Fans

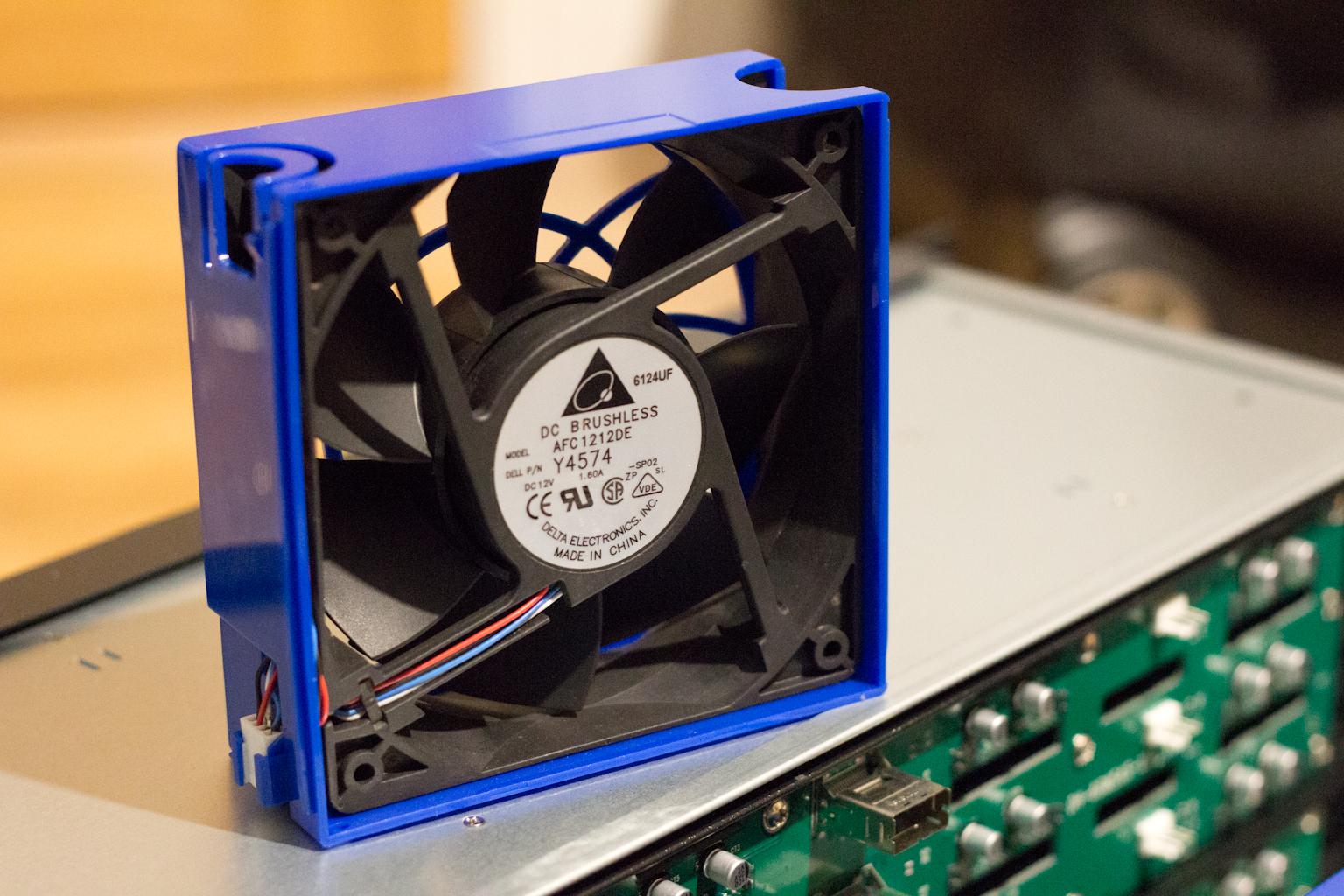

The fans on the fan wall are mounted in a quick-release housing. I'm not sure if they are hot-swap but it certainly takes the hassle out of having to use screws in a tight space, especially once the case is racked.

Removable Fan

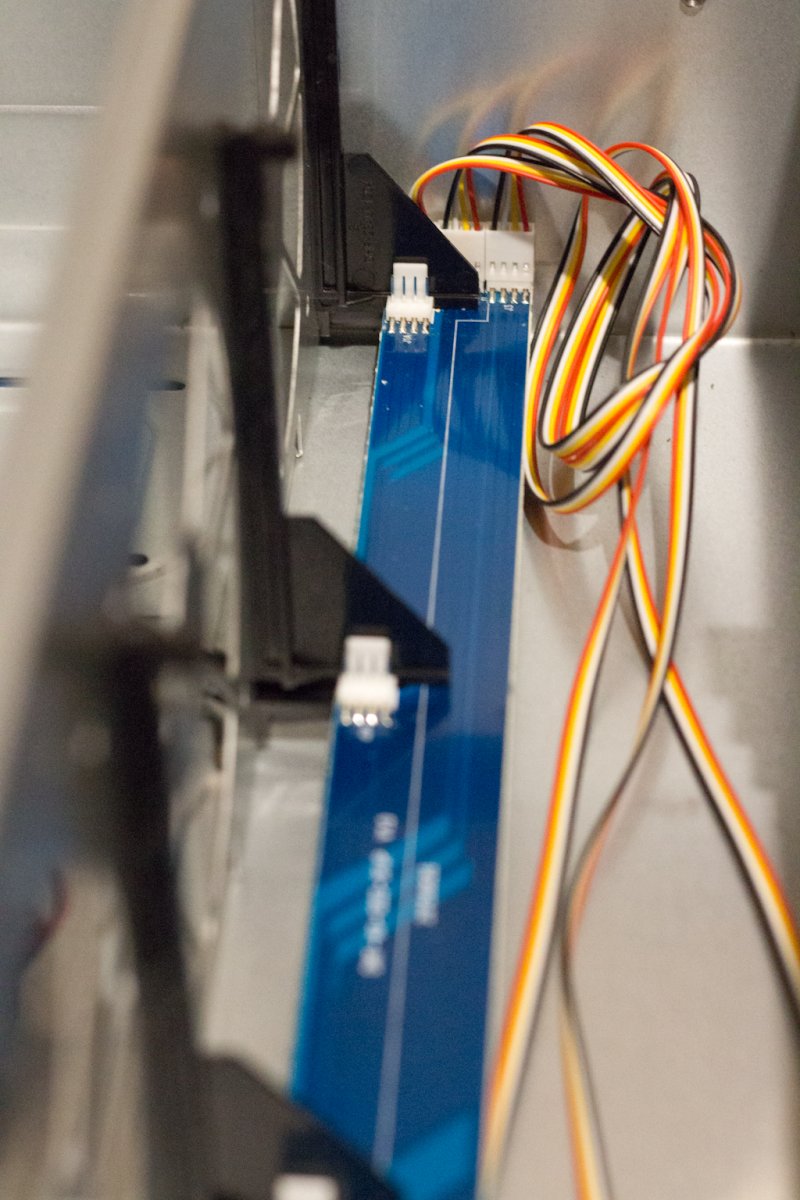

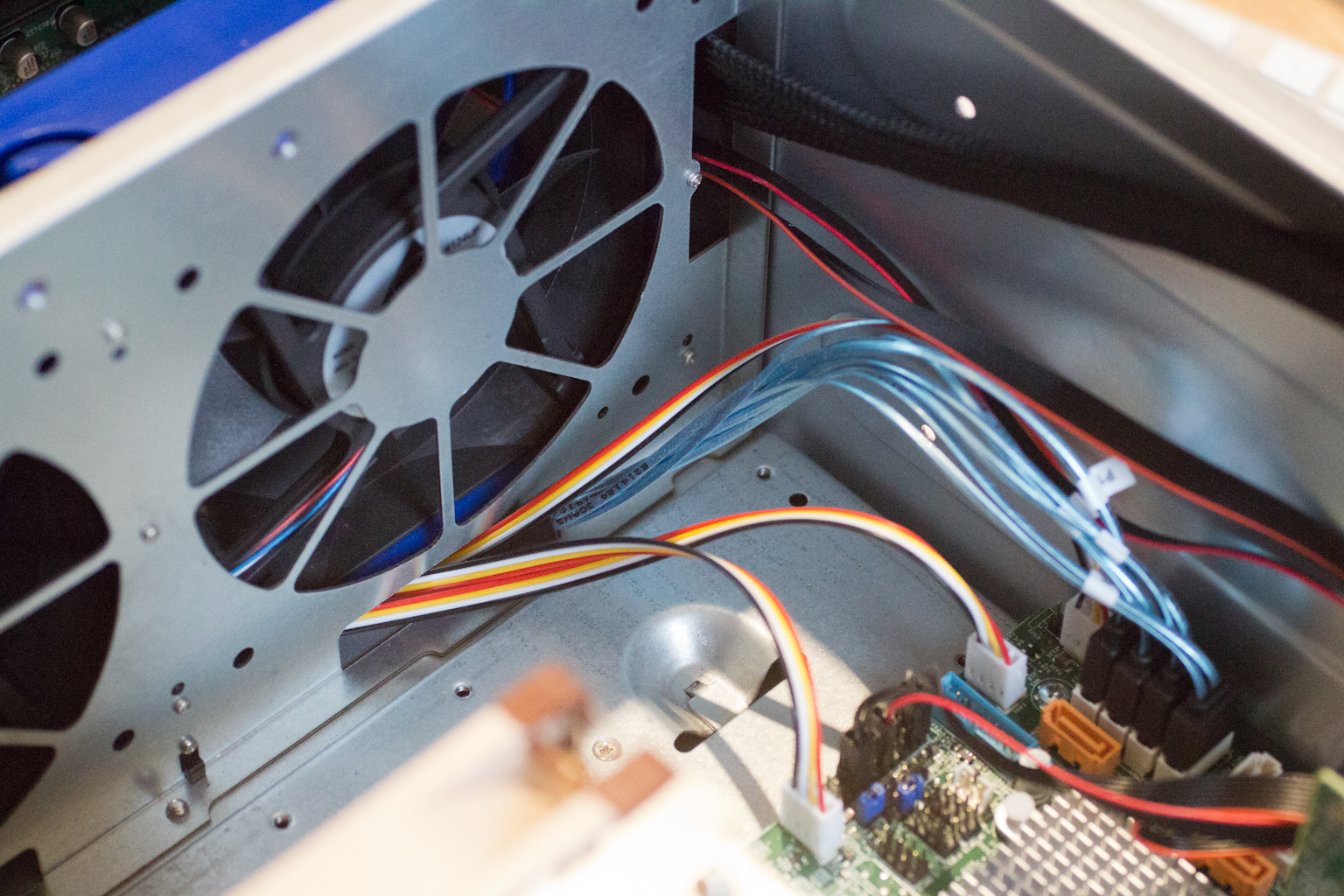

They plug into a little PWM daughter board which in turn connects to the fan headers on your motherboard.

Daughter board for fans to plug into

There are fan headers on the back-plane, but due to the lack of documentation I don't know if their speed can be controlled, so I plugged into the motherboard's fan headers.

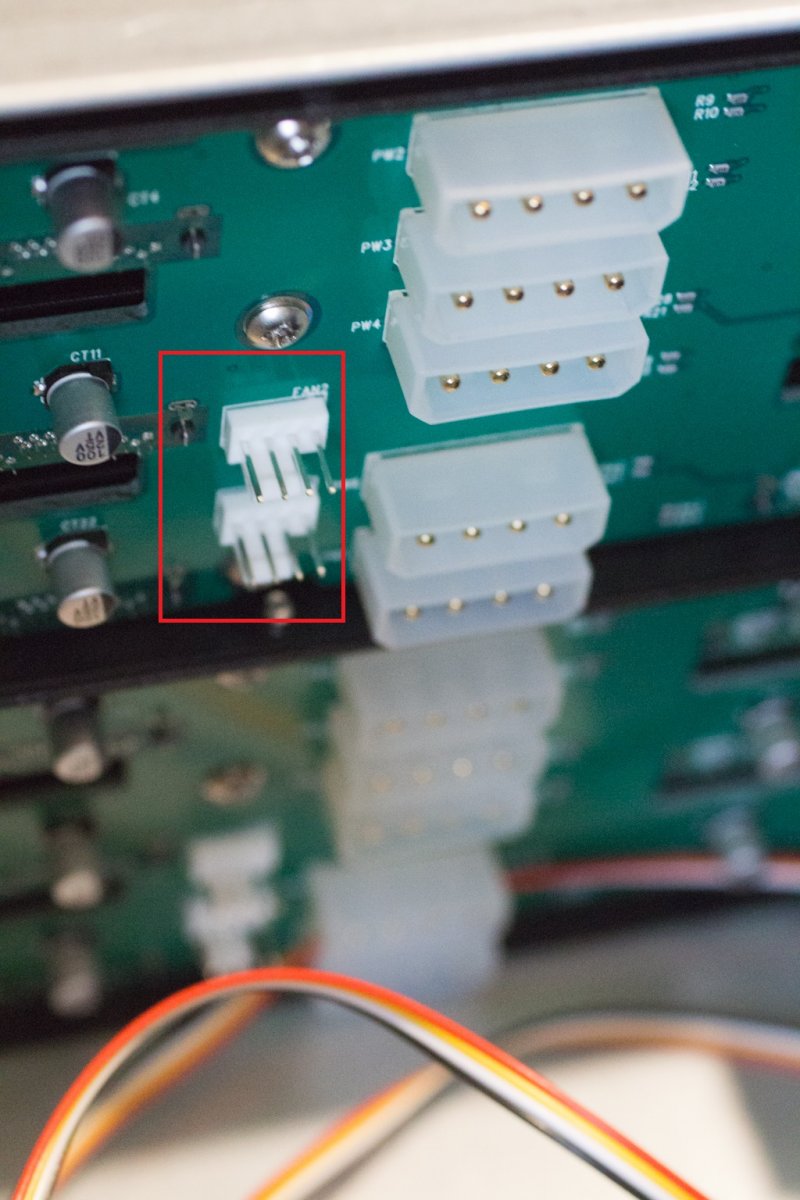

Fan Headers on back-plane

Currently I have the 2 CPU fans plugged into FAN1 & FAN2 on the motherboard, and the fan wall fans are plugged into FAN4, FANA & FANB. I'm not sure if this is correct, so feel free to shout if it's wrong.
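On the header question, here is a minimal sketch of how I understand the fan zoning on Supermicro boards of this generation. It assumes the convention used in community fan scripts (numbered headers such as FAN1 to FAN6 belong to the CPU zone, lettered headers such as FANA and FANB belong to the peripheral zone). That convention is an assumption on my part and not something I have confirmed against the X10DRL-i manual, and the function names are made up for illustration.

```python
def fan_zone(header):
    """Classify a fan header name into a zone.

    ASSUMPTION: numbered headers (FAN1, FAN2, ...) are the CPU zone and
    lettered headers (FANA, FANB, ...) are the peripheral zone.
    """
    name = header.upper()
    if not name.startswith("FAN") or len(name) <= 3:
        raise ValueError(f"not a fan header name: {header!r}")
    suffix = name[3:]
    if suffix.isdigit():
        return "cpu"
    if len(suffix) == 1 and suffix.isalpha():
        return "peripheral"
    raise ValueError(f"unrecognised fan header: {header!r}")


def group_by_zone(headers):
    """Group header names by zone, keeping the order they were given in."""
    zones = {}
    for header in headers:
        zones.setdefault(fan_zone(header), []).append(header.upper())
    return zones


# Headers in use in this build: two CPU coolers plus three fan wall fans.
in_use = ["FAN1", "FAN2", "FAN4", "FANA", "FANB"]
print(group_by_zone(in_use))
```

If that zoning assumption holds, the fan wall is split across both zones (FAN4 in one, FANA and FANB in the other), so one fan wall fan would follow the CPU coolers' duty cycle rather than the other two wall fans.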

I was expecting 3-pin screamers, but they are 4-pin so they can be controlled, and for cheap Chinese fans they move a lot of air and are reasonably quiet.

The exhaust fans on the other hand are very noisy. Using "optimised" fan mode, I haven't been able to get them below 3100RPM using the Supermicro IPMI fan control despite changing the thresholds. For now, they are unplugged and will be replaced with quieter fans if the requirement for more fans arises.
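For anyone fighting the same thing, here is a rough sketch of how a threshold change can be scripted. It only builds the `ipmitool sensor thresh <sensor> lower <lnr> <lcr> <lnc>` command line and prints it rather than running anything. The sensor name `FAN5` and the RPM values are placeholders I have made up for illustration, not values known to work on this board.

```python
def fan_threshold_command(sensor, lnr, lcr, lnc):
    """Build the ipmitool arguments that set a fan's three lower thresholds.

    lnr = lower non-recoverable, lcr = lower critical, lnc = lower non-critical,
    all in RPM. The values must be in ascending order.
    """
    if not (0 <= lnr <= lcr <= lnc):
        raise ValueError("thresholds must satisfy 0 <= lnr <= lcr <= lnc")
    return [
        "ipmitool", "sensor", "thresh", sensor, "lower",
        str(lnr), str(lcr), str(lnc),
    ]


# Hypothetical sensor name and RPM values, for illustration only.
cmd = fan_threshold_command("FAN5", 100, 200, 300)
print(" ".join(cmd))
```

Building the argument list separately from running it makes it easy to eyeball the command first, and the ordering check catches the easy mistake of passing the three values the wrong way round.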

Motherboard

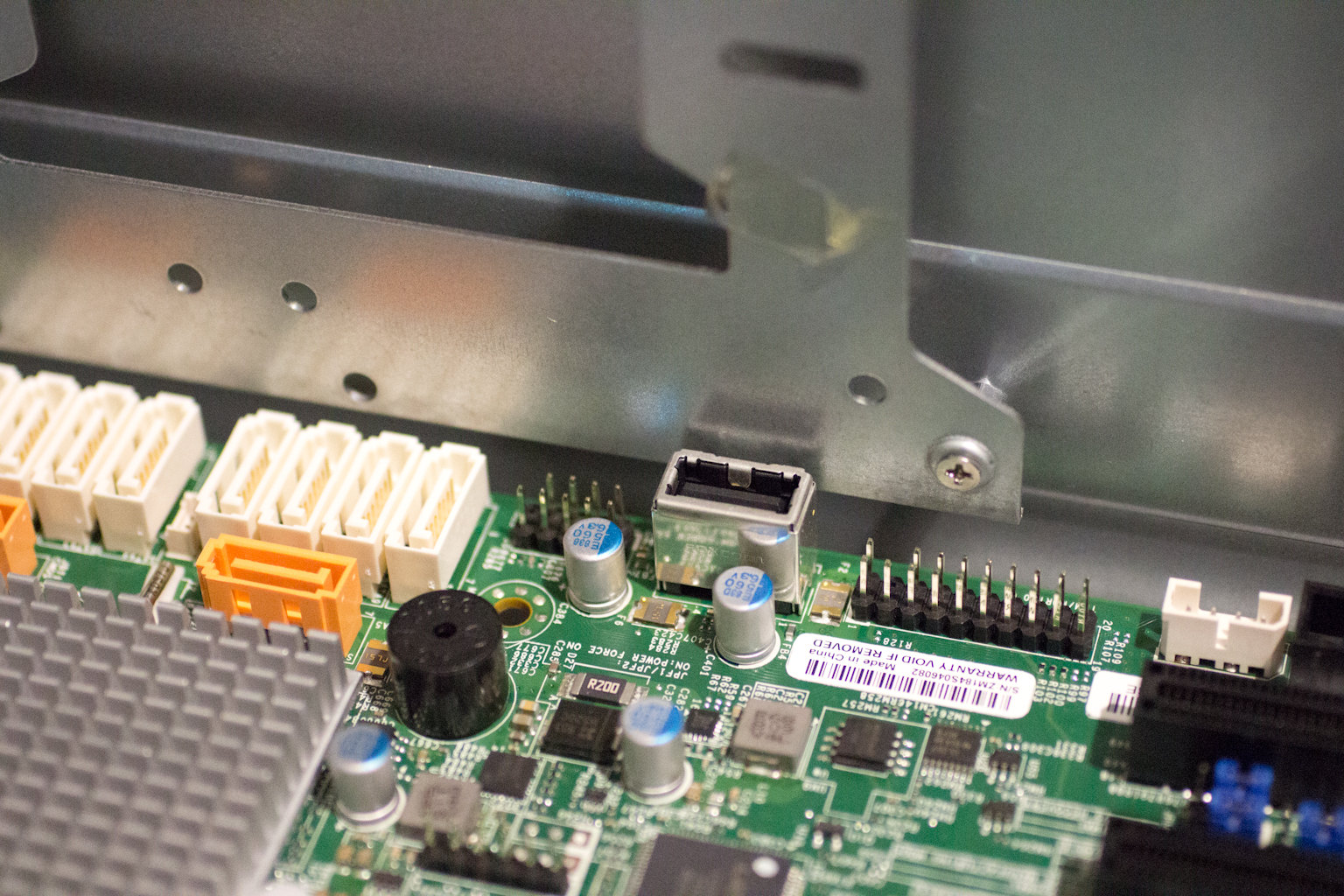

Fitting the motherboard. Not much to say about this, apart from the fact that after matching up the standoffs with the plethora of unmarked holes in the case, I tried to drop the motherboard in and found that it wouldn't fit. The bracket for the two SSD drive mounts fouled the internal USB port and the SATA ports. Very poor design. I have had to remove the drive mount and will have to re-think how I can use it elsewhere should I need to. Luckily, I had decided to use an M.2 NVMe drive for the ESXi datastore, so it's not a problem for now.

SSD Drive bracket prevented motherboard from sliding right to the edge of the case as it fouled the USB port

These stickers on the power sockets (JPWR1/2) amused me. The manual said they are 8-pin 12V power connectors. The PSU manual didn't explain the difference between one 8-pin lead and another, so after a lot of googling I decided on two leads and went for it. I can't remember which ones I chose or why, but so far, no smoke :)

"Danger Danger... High Voltage"

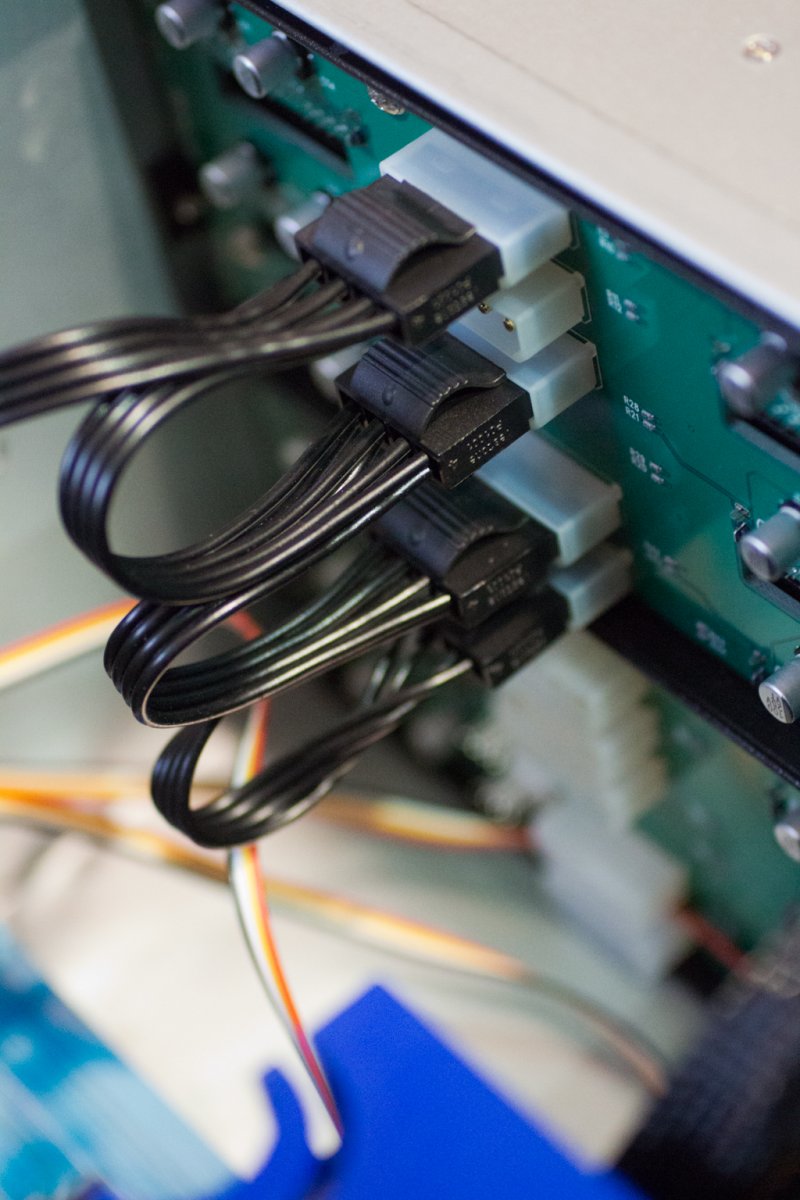

I am using a reverse breakout cable to control 4 disks from 4 SATA ports on the motherboard. The SATA controller has been passed through to a Linux VM for specific duties not related to the NAS. When required, I have space for another row of 4 disks, controlled by a second reverse breakout cable from the remaining SATA ports.

Reverse breakout cable takes 4 SATA ports from the host to the Mini-SAS port of the target back-plane

CPU & Heat sink

It's been a long time since I fitted a CPU. These E5-2620v4s came up on eBay for an amazing price and they have been running without issue since. Installing them late at night caused my head some initial confusion with the "pull the bar one way then push it another" etc., but they went in. The heatsinks were a different story.

One of the two Xeon E5-2620v4 CPUs

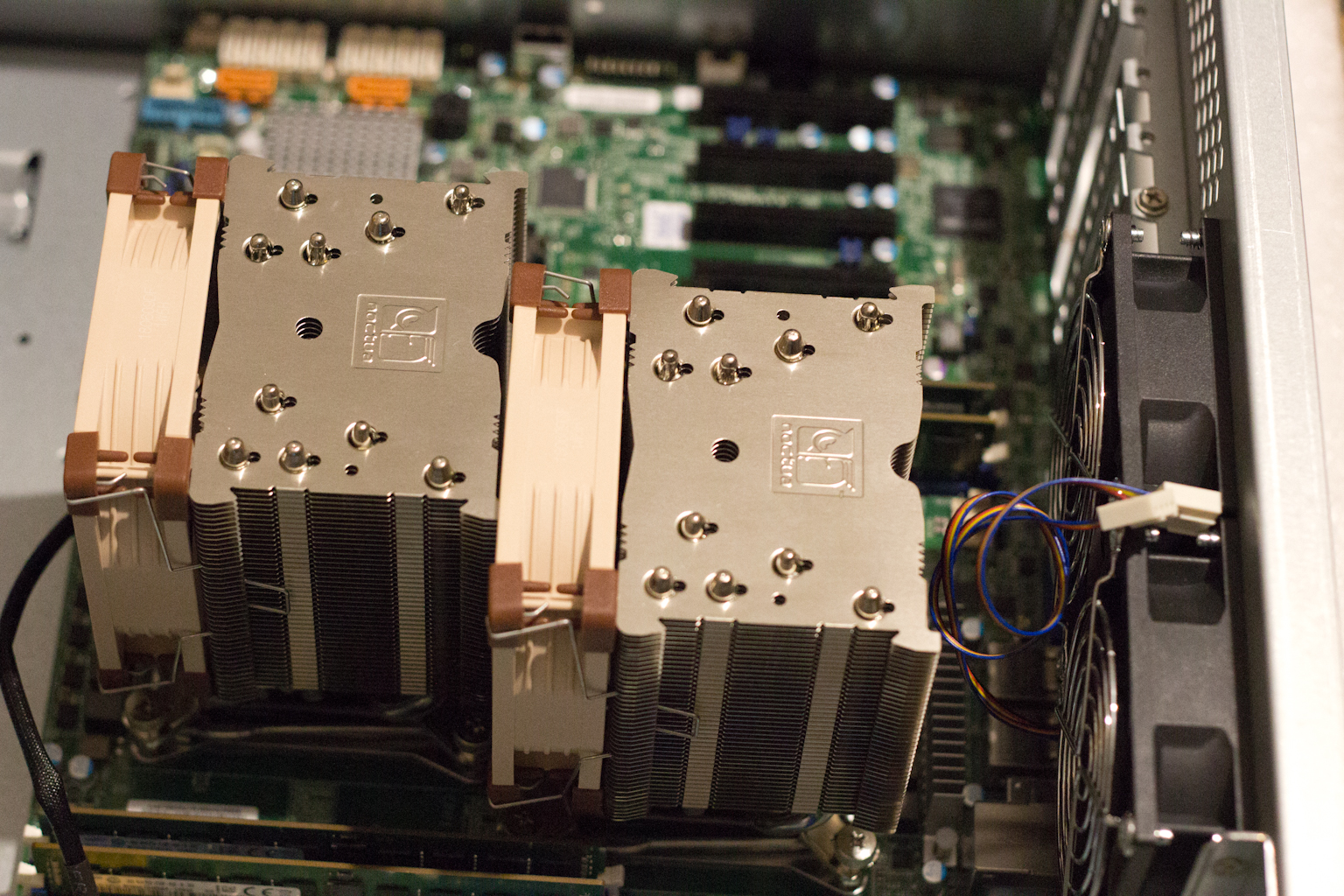

When it came to fitting the heatsinks there was a bit of a clearance problem between the two CPUs. My Google-fu must have failed me, because I had somehow come to the conclusion that these would sit side by side without issue. They do (now), but it was a bit tricky. The heatsinks have to be installed without the fans attached, as you need access with the little tool to tighten the screws. Then you fit the fans back on. Unfortunately the gap between the two sockets was so tight that getting the fan that sits between the two back on was not pretty. In doing so I managed to slightly bend a few fins towards the bottom of the rear heatsink.

Two heatsinks and fans finally in place

They are on fine and working as expected. They are really quiet, even when running at full tilt. Expensive but worth it. Worth noting that the rear CPU runs about 3 degrees Celsius hotter than the front. This is my first dual socket build and I assume that this is because it is being fed the warm air from CPU1. I may add a second fan to the rear of CPU2 to work as a push-pull config if the temp difference becomes a problem.
