I've been reading across the TrueNAS, ServeTheHome and Level1Techs forums, as well as Reddit and YouTube, looking for conversations that move the cheap NVMe discussion forward. I'm interested in two cases: a pure NVMe solution (for some mix of power use, server design, environmental constraints and performance targets), or a mix that also includes large, slow SATA HDDs.

Unfortunately, the picture remains murky. For the most part, people tend to stay in their chosen lanes, whether because their experience is limited to particular brands, models or technologies, or because of some resistance to other options that might broaden their understanding and bring benefits.

I find that the underlying factors to all of these discussions are threefold:

- defining the requirements and framing the use case

- budget

- available technology and product

In my case, I have decided to build a virtualisation platform that includes a virtualised NAS with full access to and control of dedicated storage via PCIe and SATA passthrough. My requirements will benefit from three different kinds of storage:

- very fast, high-quality consumer NVMe M.2 SSDs for the virtualisation host's VM store

- cheap and slower, but reliable, good-quality NVMe M.2 SSDs for the NAS

- cheap and even slower, but reliable, good-quality SATA HDDs for NAS backup

The virtualisation platform will likely be VMware ESXi, because I'll have access to vSphere and vCenter through a VMUG Advantage subscription. On this platform, TrueNAS operates purely as a general-purpose NAS, independent of the hypervisor (no ARC-accelerated ZFS volume is shared back to the host's VMs). The directly attached drives need to work with ESXi, which limits my selection to Samsung, Intel, Western Digital, Kingston and a few others that are detected without problems (even if they are not validated by VMware).

The server is an AMD EPYC 7452 (32 cores) with 128GB of DDR4-3200 on a motherboard that has five x16 PCIe 4.0 slots and two x8 PCIe 4.0 slots. This means I can use cheap PCIe adapter cards that bifurcate an x16 slot into four x4 links, each feeding an M.2 SSD mounted directly on the card, and install five of these cards to pass 20 separate NVMe drives through to TrueNAS. In this initial setup, however, I will use only two 2TB SSDs mounted this way and a single 4TB HDD for backup. From this HDD, a backup of critical data will go to Backblaze and, if possible, some data will also go to Proton Drive.
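A quick sketch of the slot arithmetic, assuming each x16 slot is split x4/x4/x4/x4 by an M.2 adapter card and the two x8 slots are not used for NVMe. The bandwidth figure uses the PCIe 4.0 rate of 16 GT/s per lane with 128b/130b encoding and ignores protocol overhead, so treat it as an upper bound per drive rather than a measured number.

```python
# Slot/lane arithmetic for the build described above (PCIe 4.0).
# Assumptions: every x16 slot is bifurcated into four x4 links via an M.2
# adapter card; the two x8 slots are not used for NVMe in this sketch.

PCIE4_GT_PER_LANE = 16.0         # PCIe 4.0 signalling rate per lane, GT/s
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding


def link_bandwidth_gb_s(lanes: int) -> float:
    """Approximate one-direction PCIe 4.0 bandwidth in GB/s, before protocol overhead."""
    return lanes * PCIE4_GT_PER_LANE * ENCODING_EFFICIENCY / 8


def nvme_drive_count(x16_slots: int, lanes_per_drive: int = 4) -> int:
    """Number of drives when each x16 slot is split into x4 links."""
    return x16_slots * (16 // lanes_per_drive)


X16_SLOTS, X8_SLOTS = 5, 2

print("Lanes wired to slots:", X16_SLOTS * 16 + X8_SLOTS * 8)   # 96
print("NVMe drives via bifurcation:", nvme_drive_count(X16_SLOTS))  # 20
print(f"Per-drive x4 link: ~{link_bandwidth_gb_s(4):.2f} GB/s")  # ~7.88
```

Even a single x4 link has far more headroom than a 1GbE or 10GbE network can use, which is why the cheap bifurcation cards are attractive here.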

Selecting the fast SSDs is relatively easy. Since this is not an enterprise use case, where businesses typically chase every last bit of return on investment and work their hardware hard, high-quality consumer-grade SSDs are an excellent choice, as long as the quality is there to hold the products to their advertised specifications. That last point is where consumer-grade hardware runs into problems.

I have been looking at the cheaper PCIe 3.0 and some of the cheaper PCIe 4.0 drives, such as Team Group, Silicon Power, and budget models from Samsung, Intel, Western Digital, Kingston and others. Many of the latest products have adequate performance for a NAS serving clients over 1 Gbit Ethernet or WiFi, or even 10 Gbit Ethernet when backed by ARC and perhaps a used enterprise drive providing L2ARC.

However, after reading forum reports about the failure rates of Team Group and Silicon Power SSDs, it seems clear that while their performance and endurance specifications are fine for NAS use, their QA has fallen short. There is a lot of discussion on this forum about cheap NVMe drives lacking the durability for NAS use, but it looks more like a QA issue. Which raises the question: which brands, series and models do have the QA to ensure their products meet spec?

Across the articles and discussions about SSDs on various forums and review sites, little is said about this. Most discussions of the cheaper drives focus on low endurance or on poor sustained throughput once the cache is exhausted. However, for my use case certainly, and probably for most homelabs that use a NAS for file serving and streaming, the endurance (if it meets spec) and performance are fine, and more than adequate for 1GbE or WiFi.
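To make the "more than adequate for 1GbE" point concrete, here is a small sketch comparing a network link's raw line rate with a drive's sustained write speed after its cache is exhausted. The 200 MB/s post-cache figure is a hypothetical placeholder, not a number from any review; substitute a measured value for the drive in question. Ethernet, TCP and SMB/NFS overhead are ignored, so real-world ceilings are somewhat lower.

```python
# Compare a link's raw line rate with a drive's post-cache write speed.
# POST_CACHE_MB_S is a hypothetical placeholder, not a measured figure.

def link_ceiling_mb_s(link_gbit: float) -> float:
    """Raw line rate in MB/s (decimal units), ignoring protocol overhead."""
    return link_gbit * 1000 / 8


def drive_limits_transfer(sustained_mb_s: float, link_gbit: float) -> bool:
    """True if the drive, not the network, would cap a long sequential write."""
    return sustained_mb_s < link_ceiling_mb_s(link_gbit)


POST_CACHE_MB_S = 200.0  # hypothetical sustained write speed once the cache fills

for link in (1, 10):
    ceiling = link_ceiling_mb_s(link)
    limited = drive_limits_transfer(POST_CACHE_MB_S, link)
    print(f"{link:>2} GbE ceiling ~{ceiling:.0f} MB/s; drive-limited: {limited}")
```

With these assumed numbers the drive keeps up with 1GbE even after its cache is exhausted, and only a 10GbE link exposes the post-cache slowdown on long writes.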

After looking for a cheap drive with a good price-to-performance and quality-to-endurance ratio, I found the Intel 670p 2TB NVMe M.2 SSD. This review paints an interesting picture of the drive:

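Intel SSD 670p Review (QLC), www.storagereview.com

Intel expands its QLC SSD line again with the Intel SSD 670p. This M.2 SSD leverages 144-Layer QLC NAND and an improved dynamic cache.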

It is only QLC and is rated for 740 TBW, but that doesn't concern me because the rating is far in excess of the demand this NAS use case will place on it. What interests me more is the likelihood that Intel puts more into QA for this range of products, which should translate into a drive that performs to specification. If so, this drive might be an excellent candidate for a modest NVMe-based home NAS serving network clients (the Network part of NAS).
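One rough way to check that 740 TBW comfortably exceeds a home NAS workload is to convert the rating into writes per day. This sketch assumes a 5-year warranty period (check the terms for your region and SKU) and a hypothetical 50 GB of writes per day; neither figure comes from the review.

```python
# Convert a TBW endurance rating into per-day figures.
# WARRANTY_YEARS and DAILY_WRITES_GB are assumptions for illustration only.

def gb_per_day(tbw: float, warranty_years: float) -> float:
    """Average GB/day that would use up the TBW rating over the warranty period."""
    return tbw * 1000 / (warranty_years * 365)


def dwpd(tbw: float, capacity_tb: float, warranty_years: float) -> float:
    """Drive writes per day implied by the TBW rating."""
    return tbw / (capacity_tb * warranty_years * 365)


def years_to_exhaust(tbw: float, daily_writes_gb: float) -> float:
    """Years until the TBW rating is reached at a steady daily write load."""
    return tbw * 1000 / daily_writes_gb / 365


TBW = 740             # 670p 2TB rating quoted above
CAPACITY_TB = 2
WARRANTY_YEARS = 5    # assumption: verify against the actual warranty terms
DAILY_WRITES_GB = 50  # hypothetical home NAS write load

print(f"Rated budget: ~{gb_per_day(TBW, WARRANTY_YEARS):.0f} GB/day, "
      f"{dwpd(TBW, CAPACITY_TB, WARRANTY_YEARS):.2f} DWPD")
print(f"At {DAILY_WRITES_GB} GB/day: ~{years_to_exhaust(TBW, DAILY_WRITES_GB):.1f} years")
```

Under these assumptions the rating works out to roughly 405 GB/day (about 0.2 DWPD), and a 50 GB/day load would take around 40 years to reach it.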

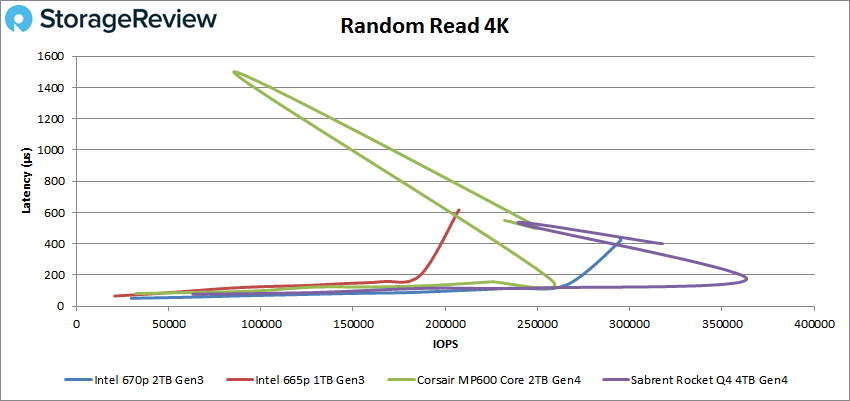

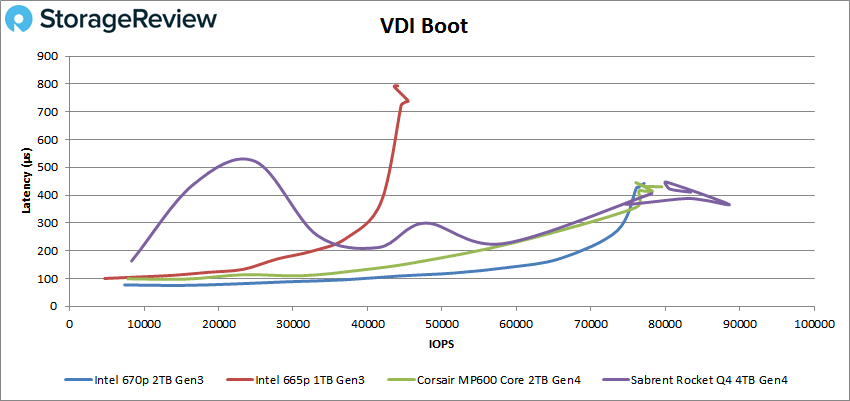

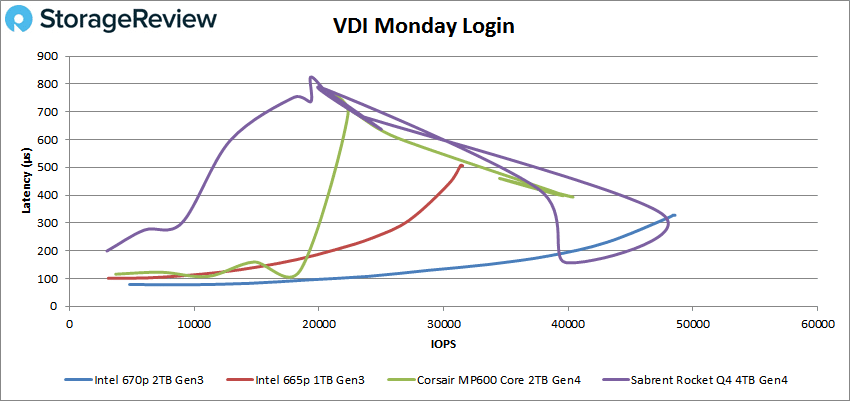

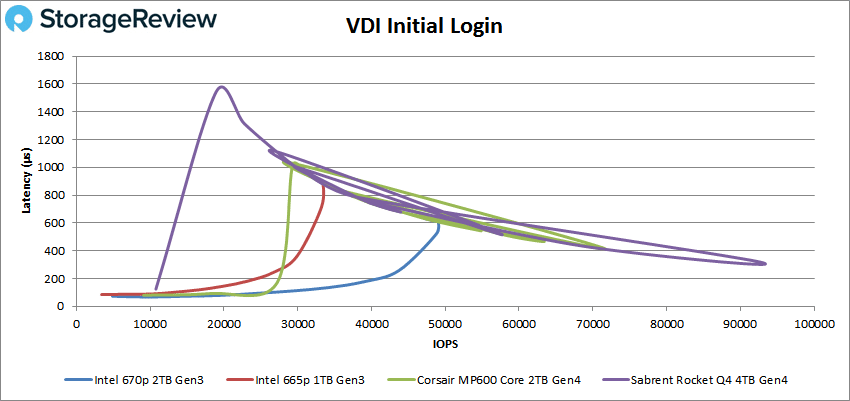

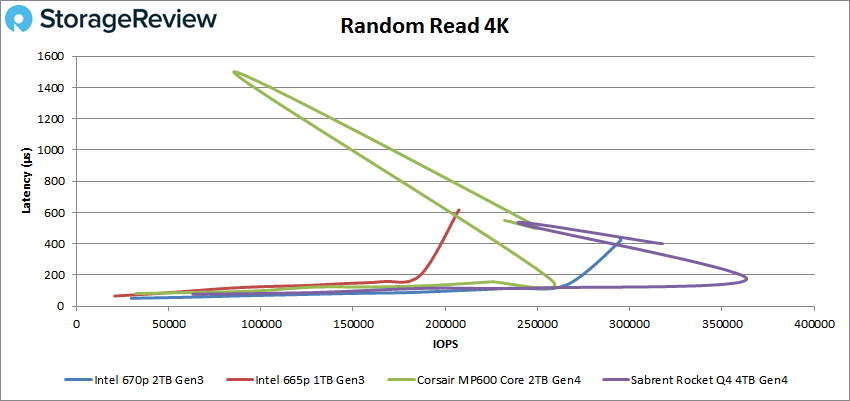

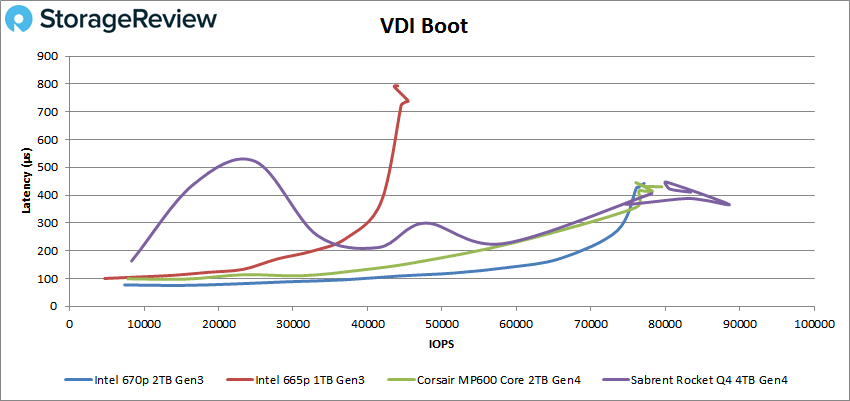

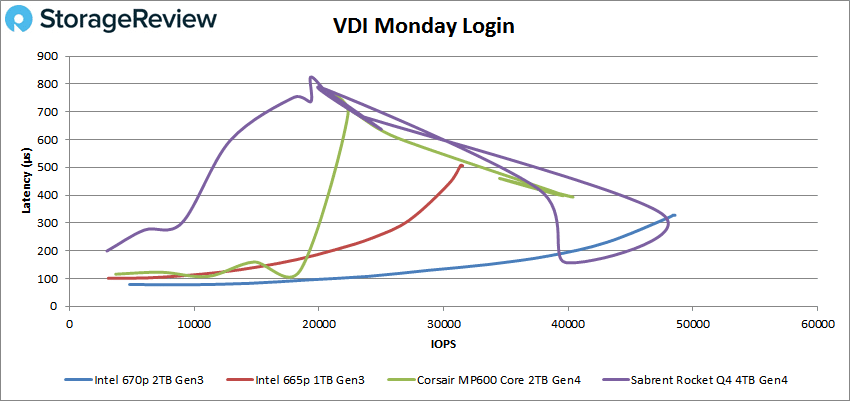

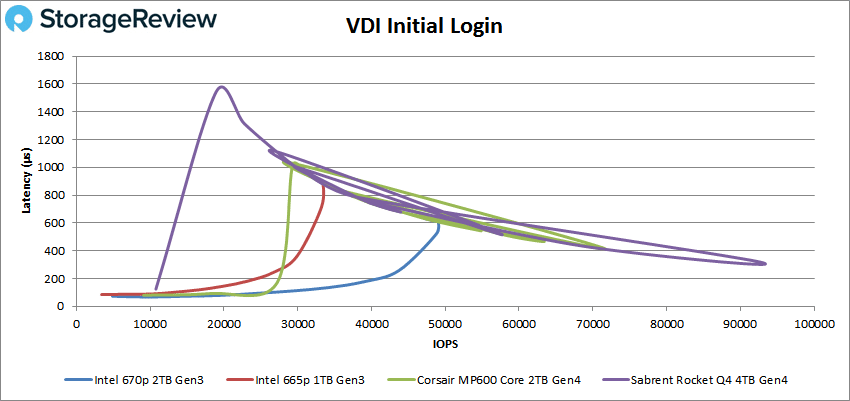

What I find interesting in the StorageReview benchmarking and analysis is that the 670p has a well defined behaviour, especially when compared with the Corsair and Sabrent drives:

These are just synthetic tests, but they highlight qualities in the Intel that are desirable in a plodding NAS workhorse.

The final essential factor in the selection criteria is the price of the 670p. In Australia it is currently $150 AUD, which is less than twice the cost of a Seagate IronWolf 4TB 3.5" heavyweight HDD. It runs cool and is fast enough, especially behind ARC and L2ARC, and in front of a single or mirrored IronWolf 4TB.
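The price comparison can be turned into a cost-per-terabyte bound using only the figures above: $150 AUD for the 2TB 670p, and an IronWolf 4TB that costs more than half that. This sketch assumes no actual IronWolf price; it only uses the inequality implied by "less than twice the cost".

```python
# Cost-per-TB bound derived from the prices quoted above (AUD).
SSD_PRICE_AUD = 150.0  # Intel 670p 2TB
SSD_TB = 2.0
HDD_TB = 4.0           # Seagate IronWolf 4TB

ssd_per_tb = SSD_PRICE_AUD / SSD_TB

# "Less than twice the cost of the HDD" means hdd_price > SSD_PRICE_AUD / 2,
# so these are strict lower bounds on the HDD side.
hdd_price_floor = SSD_PRICE_AUD / 2
hdd_per_tb_floor = hdd_price_floor / HDD_TB

# Upper bound on how much more the SSD costs per TB than the HDD.
max_premium = ssd_per_tb / hdd_per_tb_floor

print(f"670p: {ssd_per_tb:.2f} AUD/TB")
print(f"IronWolf 4TB: > {hdd_per_tb_floor:.2f} AUD/TB")
print(f"SSD premium per TB: < {max_premium:.1f}x")
```

In other words, per terabyte the 670p costs less than four times as much as the IronWolf, before counting its power and noise advantages.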

It would be great to hear about other cheap PCIe 3.0 or 4.0 plodders that have the QA to back up their specifications and can form the backbone of a solid home or SMB NAS. Cheers.
