There have been a couple of threads on this issue, but none of them seems to have a working solution (for me, at least).

I changed the default gateway of my SCALE NAS (from .254 to .15, if it matters).

Since then, none of my containers/charts that need to reach the outside world work properly.

NAS DNS servers are 192.168.38.10 and .11 (I use AD, so I need to use local DNS).

NAS is 192.168.38.32

Gateway is 192.168.38.15
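
From the NAS's own point of view, the gateway and both DNS servers sit on the same subnet as the NAS, so it reaches them directly; only off-subnet traffic such as 1.1.1.1 is routed through the gateway. A minimal sketch with Python's standard `ipaddress` module, assuming a /24 netmask (the post does not state one):

```python
import ipaddress

# Addresses from the post. The /24 prefix length is an ASSUMPTION:
# the post does not state the NAS netmask.
NAS_INTERFACE = ipaddress.ip_interface("192.168.38.32/24")
GATEWAY = "192.168.38.15"
DNS_SERVERS = ["192.168.38.10", "192.168.38.11"]


def on_link(address: str, interface=NAS_INTERFACE) -> bool:
    """True if `address` is inside the NAS's own subnet, i.e. the NAS
    reaches it directly rather than via the default gateway."""
    return ipaddress.ip_address(address) in interface.network


def classify(addresses) -> dict:
    """Map each address to 'on-link' or 'via gateway' from the NAS's view."""
    return {a: ("on-link" if on_link(a) else "via gateway") for a in addresses}
```

With a /24 mask, `classify([GATEWAY, *DNS_SERVERS, "1.1.1.1"])` marks the three LAN addresses as on-link and 1.1.1.1 as via gateway, which lines up with the split between what the container can and cannot reach in the tests below. That is an observation, not a diagnosis.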

Diagnosis steps:

I opened a shell in a Heimdall container (it has ping and nslookup). Where the result differs from a shell on the NAS itself, I have noted it.

Ping 1.1.1.1: works

Ping 192.168.38.15: works

Ping 192.168.38.10 (or .11, or anything else on the LAN): does not work, although it does work from the NAS itself. The NAS can ping anywhere; on the LAN, the container can only ping the gateway (this may well be the issue).

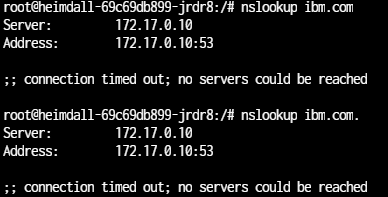
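
The ping checks above can be scripted so that exactly the same list is run from the NAS shell and from the container. A rough Python sketch, assuming a Python interpreter is available where you run it (many app images do not ship one) and that `ping` accepts `-c` (count) and `-W` (reply timeout in seconds), as iputils and BusyBox ping do:

```python
import subprocess

# Targets taken from the diagnosis steps in the post.
TARGETS = ["1.1.1.1", "192.168.38.15", "192.168.38.10", "192.168.38.11"]


def ping(host: str, run=subprocess.run) -> bool:
    """Send one ICMP echo using the system `ping` and report success.
    `run` is injectable so the logic can be tested without a network."""
    result = run(
        ["ping", "-c", "1", "-W", "2", host],
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    # ping exits with status 0 only if a reply was received.
    return result.returncode == 0


def sweep(targets=TARGETS, run=subprocess.run) -> dict:
    """Map each target to True (reply received) or False (no reply)."""
    return {host: ping(host, run) for host in targets}
```

Calling `sweep()` in each environment returns a dict of target to True/False, which makes the NAS-versus-container difference easy to compare side by side.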

nslookup ibm.com - Does not work (Works from the NAS) - the below is what I get from the container

At the very least DNS seems fubarr'd - but there may be more to it.

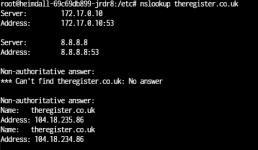
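
To put numbers on the lookup difference between the container and the NAS, resolution through the normal system resolver (the path most applications use, which follows /etc/resolv.conf for DNS) can be timed. A small sketch, again assuming Python is available in the environment being tested; `timed_lookup` is a hypothetical helper name:

```python
import socket
import time


def timed_lookup(name: str) -> tuple[list[str], float]:
    """Resolve `name` with the system resolver and return the IPv4
    addresses found plus the elapsed wall-clock time in seconds.
    Raises socket.gaierror if resolution fails."""
    start = time.monotonic()
    infos = socket.getaddrinfo(name, None, family=socket.AF_INET, type=socket.SOCK_STREAM)
    elapsed = time.monotonic() - start
    # Each result tuple ends with a sockaddr (ip, port); keep the unique IPs.
    addresses = sorted({info[4][0] for info in infos})
    return addresses, elapsed
```

Running `timed_lookup("ibm.com")` on the NAS and inside the container gives a direct comparison of both success and latency.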

Messing around further, I added `nameserver 8.8.8.8` to the container's resolv.conf (obviously this will not persist), and theregister.co.uk then resolves correctly, albeit not quickly: there is a noticeable delay of 4-5 seconds.
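
Because the container's resolv.conf decides which nameservers are tried and how long the resolver waits, it can help to read it back exactly. The sketch below parses the `nameserver`, `search`/`domain` and `options` lines. The fallback values are the glibc defaults documented in resolv.conf(5) (timeout 5 s, attempts 2, ndots 1); musl-based images may behave differently, and an explicit `options` line overrides them. It is only a guess that the observed 4-5 second delay relates to that 5 second default timeout (for example, a first query going unanswered before a retry succeeds), not a diagnosis. `parse_resolv_conf` is a hypothetical helper:

```python
# glibc defaults from resolv.conf(5); other libcs may differ.
DEFAULT_OPTIONS = {"timeout": 5, "attempts": 2, "ndots": 1}


def parse_resolv_conf(text: str) -> dict:
    """Return nameservers, search domains and numeric options from resolv.conf text."""
    conf = {"nameservers": [], "search": [], "options": dict(DEFAULT_OPTIONS)}
    for raw in text.splitlines():
        # Treat '#' and ';' as starting a comment (slightly more permissive
        # than resolv.conf(5), which only recognises them in the first column).
        line = raw.split("#", 1)[0].split(";", 1)[0].strip()
        if not line:
            continue
        keyword, *values = line.split()
        if keyword == "nameserver" and values:
            conf["nameservers"].append(values[0])
        elif keyword in ("search", "domain"):
            conf["search"] = values  # the last search/domain line wins
        elif keyword == "options":
            for opt in values:
                name, sep, value = opt.partition(":")
                # Keep only numeric options such as ndots:5 or timeout:2.
                if sep and value.isdigit():
                    conf["options"][name] = int(value)
    return conf
```

Inside the container, `with open("/etc/resolv.conf") as f: print(parse_resolv_conf(f.read()))` shows the nameserver order and the timeout, attempts and ndots values actually in effect.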

My take on this is that, for some reason, the containers within TrueNAS (TN) will only talk to the gateway and nothing else on the LAN, so DNS fails. As a further test, I replaced 8.8.8.8 with 192.168.38.15 (my firewall and DNS proxy) and tried to resolve sex.com (it's short and easy to type). This also works, again slowly (4-5 seconds).
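
A lookup made through resolv.conf goes through the resolver's timeout and retry logic. To time one query sent straight to a single server, bypassing resolv.conf entirely, a hand-built RFC 1035 query over UDP is enough. This is an illustrative sketch with hypothetical helper names (`dig @192.168.38.15 sex.com` does the same job if dig is installed):

```python
import random
import socket
import struct
import time


def build_query(name: str, qid: int) -> bytes:
    """Build a minimal RFC 1035 query for the A record of `name`, recursion desired."""
    # Header: ID, flags (RD bit set), QDCOUNT=1, ANCOUNT=NSCOUNT=ARCOUNT=0.
    header = struct.pack("!HHHHHH", qid, 0x0100, 1, 0, 0, 0)
    # QNAME: length-prefixed labels terminated by a zero byte.
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii") for label in name.rstrip(".").split(".")
    ) + b"\x00"
    return header + qname + struct.pack("!HH", 1, 1)  # QTYPE=A, QCLASS=IN


def query_server(name: str, server: str, timeout: float = 10.0) -> tuple[int, float]:
    """Send one UDP query directly to `server` port 53.
    Returns (RCODE, elapsed seconds); raises TimeoutError if no reply arrives."""
    qid = random.randint(0, 0xFFFF)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        start = time.monotonic()
        sock.sendto(build_query(name, qid), (server, 53))
        while True:
            data, _ = sock.recvfrom(4096)
            # Ignore anything that is not a reply to our query ID.
            if len(data) >= 12 and struct.unpack("!H", data[:2])[0] == qid:
                break
        elapsed = time.monotonic() - start
    flags = struct.unpack("!H", data[2:4])[0]
    return flags & 0x000F, elapsed  # RCODE 0 means NOERROR
```

If `query_server("sex.com", "192.168.38.15")` answers quickly from the container while the resolver-based lookup still takes 4-5 seconds, the delay is more likely in the container's resolver configuration than in the DNS proxy itself; if a direct query to 192.168.38.10 times out, that matches the failed pings.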

BTW, under TrueNAS Global Configuration > Outbound Network, I have Allow All selected.

Also, the 4-5 second delay only occurs in the container; TrueNAS itself resolves everything very quickly.

Does anyone have an idea of what's wrong, what I have messed up, and (of course) how to fix it without rebuilding everything?