After running fine for the past few months, some of my containers started throwing errors this morning saying they can't resolve external addresses. I've been trying to fix the issue for the past few hours, but I'm kinda stuck.

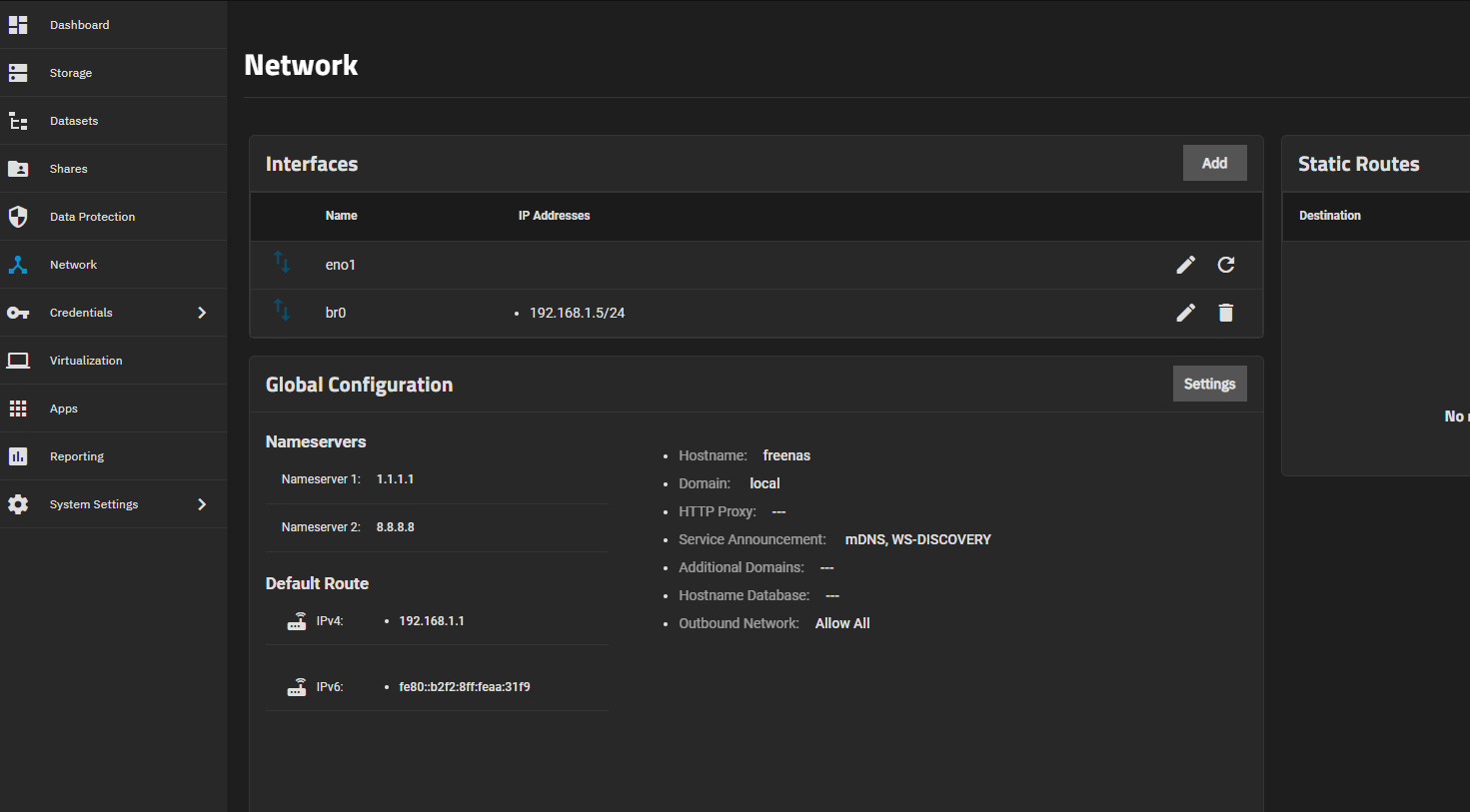

Network config:

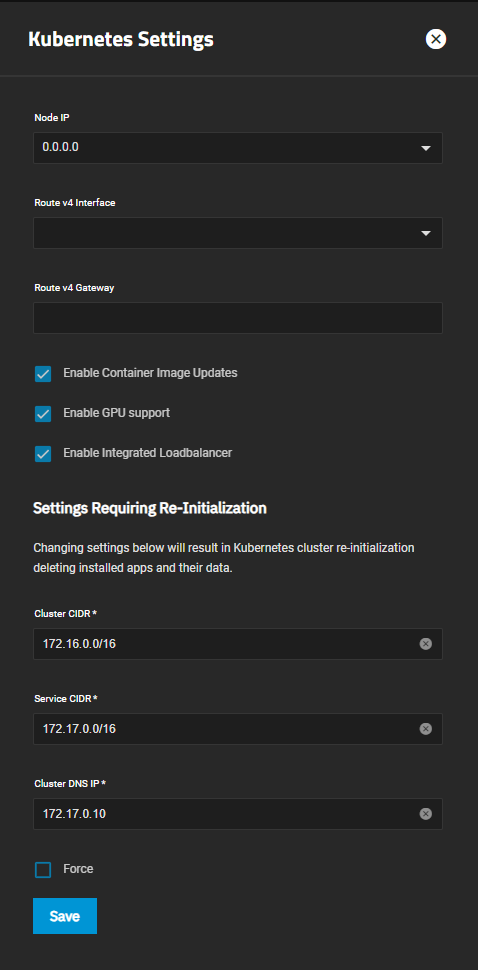

Kubernetes Settings:

From the TrueNAS SCALE shell nslookup is fine:

Code:

    root@freenas[~]# nslookup google.com
    Server: 1.1.1.1
    Address: 1.1.1.1#53
    Non-authoritative answer:
    Name: google.com
    Address: 142.250.186.78
    Name: google.com
    Address: 2a00:1450:4001:827::200e

From inside a container things don't look too good though:

Code:

    root@freenas[~]# k3s kubectl exec -i -t gitea-76db8c7bd6-h5jz8 --namespace ix-gitea -- nslookup google.com
    Defaulted container "gitea" out of: gitea, gitea-init-postgres-wait (init)
    Server: 172.17.0.10
    Address: 172.17.0.10:53
    ;; connection timed out; no servers could be reached
    command terminated with exit code 1

So I dug around, and the culprit seems to be within Kubernetes CoreDNS:

Code:

    root@freenas[~]# k3s kubectl logs --namespace=kube-system -l k8s-app=kube-dns
    [ERROR] plugin/errors: 2 google.com. AAAA: read udp 172.16.1.116:48981->1.1.1.1:53: i/o timeout
    [ERROR] plugin/errors: 2 google.com. A: read udp 172.16.1.116:45498->8.8.8.8:53: i/o timeout
    [ERROR] plugin/errors: 2 google.com. A: read udp 172.16.1.116:32862->8.8.8.8:53: i/o timeout
    [ERROR] plugin/errors: 2 google.com. AAAA: read udp 172.16.1.116:46529->1.1.1.1:53: i/o timeout
    [ERROR] plugin/errors: 2 google.com. A: read udp 172.16.1.116:48613->1.1.1.1:53: i/o timeout
    [ERROR] plugin/errors: 2 google.com. AAAA: read udp 172.16.1.116:37692->8.8.8.8:53: i/o timeout
    [ERROR] plugin/errors: 2 google.com. AAAA: read udp 172.16.1.116:50928->8.8.8.8:53: i/o timeout
    [ERROR] plugin/errors: 2 google.com. A: read udp 172.16.1.116:50217->8.8.8.8:53: i/o timeout
    [ERROR] plugin/errors: 2 google.com. AAAA: read udp 172.16.1.116:43422->8.8.8.8:53: i/o timeout
    [ERROR] plugin/errors: 2 google.com. A: read udp 172.16.1.116:48322->8.8.8.8:53: i/o timeout

So this is good and bad. My DNS entries (1.1.1.1, 8.8.8.8) from /etc/resolv.conf are forwarded correctly into CoreDNS, and the requests from the gitea container make it to CoreDNS. However, the DNS requests don't seem to make it out from there: the upstream queries in the log all time out. I'm stuck on what to do next. I restarted my server several times, hoping it would resolve itself, and googling turned up lots of similar issues, with causes ranging from firewalls to incorrect iptables entries, and fixes as drastic as a full Kubernetes reinstall.
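To double-check that reading of the CoreDNS log, here is a rough Python sketch that tallies the timeouts per upstream server and record type. The function names (`tally_timeouts`, `source_addresses`) and the regex are my own for illustration, not part of CoreDNS or k3s, and the pattern assumes the log lines look exactly like the ones pasted above. If both upstreams show up with timeouts and all queries come from the same pod address, the problem is more likely traffic leaving the pod network than one misbehaving resolver.

```python
import re
from collections import Counter

# Assumed line shape, copied from the CoreDNS "errors" plugin output above.
LINE_RE = re.compile(
    r"\[ERROR\] plugin/errors: \d+ (?P<name>\S+) (?P<qtype>[A-Z]+): "
    r"read udp (?P<src>[\d.]+):\d+->(?P<upstream>[\d.]+):53: i/o timeout"
)


def tally_timeouts(log_text):
    """Count timed-out queries per (upstream server, record type)."""
    counts = Counter()
    for match in LINE_RE.finditer(log_text):
        counts[(match["upstream"], match["qtype"])] += 1
    return counts


def source_addresses(log_text):
    """Collect the source IPs the timed-out queries were sent from."""
    return {match["src"] for match in LINE_RE.finditer(log_text)}


# First four log lines from the post, used as sample input.
SAMPLE = """\
[ERROR] plugin/errors: 2 google.com. AAAA: read udp 172.16.1.116:48981->1.1.1.1:53: i/o timeout
[ERROR] plugin/errors: 2 google.com. A: read udp 172.16.1.116:45498->8.8.8.8:53: i/o timeout
[ERROR] plugin/errors: 2 google.com. A: read udp 172.16.1.116:32862->8.8.8.8:53: i/o timeout
[ERROR] plugin/errors: 2 google.com. AAAA: read udp 172.16.1.116:46529->1.1.1.1:53: i/o timeout
"""

if __name__ == "__main__":
    for (upstream, qtype), count in sorted(tally_timeouts(SAMPLE).items()):
        print(f"{upstream:<8} {qtype:<5} {count}")
    print("sources:", sorted(source_addresses(SAMPLE)))
```

To run it against the live log instead of the sample, the output of the `k3s kubectl logs` command above could be saved to a file and passed to `tally_timeouts` as a string.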

But I don't think there is a firewall in my SCALE install, and I really don't wanna reinstall. The weirdest thing is that I didn't change anything. All I did was run a couple of TrueCharts container updates yesterday evening, but how can those ruin my entire k3s setup?

Everything is default, running latest TrueNAS-SCALE-23.10.1.
