Hello Peeps,

About a year ago I built a high-end TrueNAS Core server (running 13.0-stable) on a Dell R730XD with 12x 3.5" bays and 2x 2.5" slots for the TrueNAS sysvol.

More HW setup details:

12x Seagate IronWolf 16TB

Dual 4x NVMe PCIe controllers, with two NVMe drives each assigned to cache, metadata and log. This means the pool has 2 identical NVMe drives for cache and metadata, each on a different controller (x16 slot with bifurcation), for redundancy. I'm not sure whether this is best practice, or whether I should use a single NVMe for cache/metadata and log?

What happens if a pool loses an NVMe assigned to metadata, cache or log? My challenge is that all the NVMe drives are internal (not hot-swappable), and I wanted to get as much uptime as possible.

The server also has 512GB of ECC RAM (768GB max).

The pool is mainly used for VMs (VMware) over iSCSI.

Now all disk bays are populated and I'm looking to expand my existing pool.

I was thinking of purchasing a JBOD (I've been looking at the NetApp DS4246 12gbps), which holds 24x 3.5" bays, together with an LSI HBA (IT mode).

IronWolf 16TB drives are close to impossible to find in any store, and the best buy in my part of the world at the moment is the Seagate Exos 18TB.

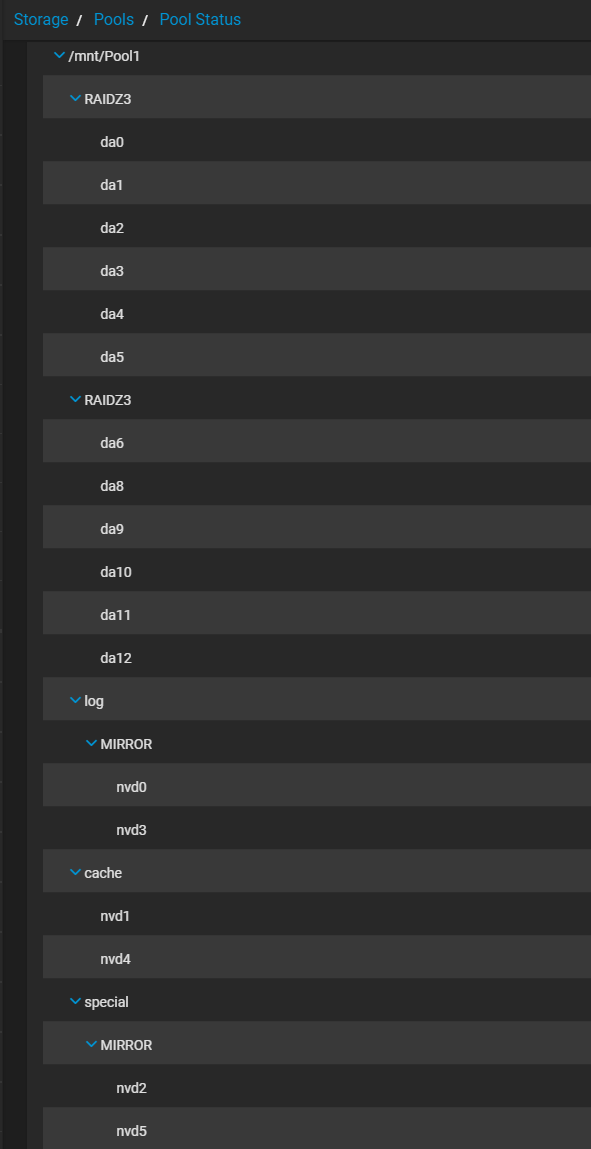

I see from my existing pool that my drives are divided into two groups of 6 drives each (see screenshot).

If I add 12-24 18TB Exos HDDs to my existing pool, will I get to use the extra TBs or are they lost? Should I stick with 16TB drives?

Can I also have 1-2 hot spares (18TB) in the NetApp JBOD and have them cover the internal 16TB drives as well? I take it that I have to expand the pool with an even number of disks?

Am I right in believing that the slowest/crappiest drive will be the bottleneck in the pool?

Any recommendations on how I should expand this pool? I'm not keen on a new pool, as I don't have enough NVMe drives or free PCIe slots for cache, log, etc.

Thanks for your time

Kind Regards - Reidar
