My server crashed; I think the USB boot drive failed. I reinstalled TrueNAS 12 onto a new USB drive. The server is up and detects the pool, but the import fails with this error:

("'/data/zfs' is not a valid directory",)

Error: concurrent.futures.process._RemoteTraceback:

"""

Traceback (most recent call last):

File "/usr/local/lib/python3.9/concurrent/futures/process.py", line 243, in _process_worker

r = call_item.fn(*call_item.args, **call_item.kwargs)

File "/usr/local/lib/python3.9/site-packages/middlewared/worker.py", line 94, in main_worker

res = MIDDLEWARE._run(*call_args)

File "/usr/local/lib/python3.9/site-packages/middlewared/worker.py", line 45, in _run

return self._call(name, serviceobj, methodobj, args, job=job)

File "/usr/local/lib/python3.9/site-packages/middlewared/worker.py", line 39, in _call

return methodobj(*params)

File "/usr/local/lib/python3.9/site-packages/middlewared/worker.py", line 39, in _call

return methodobj(*params)

File "/usr/local/lib/python3.9/site-packages/middlewared/schema.py", line 979, in nf

return f(*args, **kwargs)

File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/zfs.py", line 371, in import_pool

self.logger.error(

File "libzfs.pyx", line 391, in libzfs.ZFS.__exit__

File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/zfs.py", line 365, in import_pool

zfs.import_pool(found, new_name or found.name, options, any_host=any_host)

File "libzfs.pyx", line 1095, in libzfs.ZFS.import_pool

File "libzfs.pyx", line 1123, in libzfs.ZFS.__import_pool

libzfs.ZFSException: '/data/zfs' is not a valid directory

"""

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "/usr/local/lib/python3.9/site-packages/middlewared/job.py", line 367, in run

await self.future

File "/usr/local/lib/python3.9/site-packages/middlewared/job.py", line 403, in __run_body

rv = await self.method(*([self] + args))

File "/usr/local/lib/python3.9/site-packages/middlewared/schema.py", line 975, in nf

return await f(*args, **kwargs)

File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/pool.py", line 1421, in import_pool

await self.middleware.call('zfs.pool.import_pool', pool['guid'], {

File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1256, in call

return await self._call(

File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1221, in _call

return await self._call_worker(name, *prepared_call.args)

File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1227, in _call_worker

return await self.run_in_proc(main_worker, name, args, job)

File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1154, in run_in_proc

return await self.run_in_executor(self.__procpool, method, *args, **kwargs)

File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1128, in run_in_executor

return await loop.run_in_executor(pool, functools.partial(method, *args, **kwargs))

libzfs.ZFSException: ("'/data/zfs' is not a valid directory",)

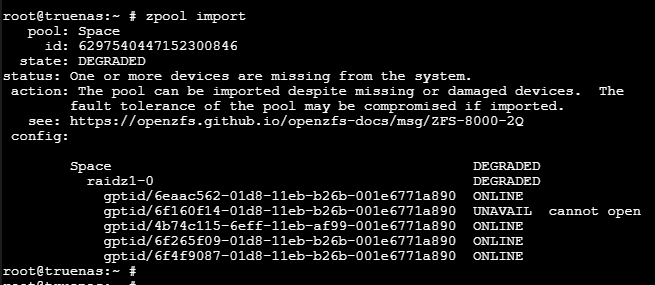

Following a suggestion from another post, I have this output:

So this appears to be a failed drive. However, shouldn't I still be able to import the pool? In the post I was reading, the poster was using hardware RAID. Since I'm not, is there an import process I can use?

This is a bit over my head to sort out and fix. Any chance of data recovery? Any suggestions?

Specs

Motherboard: Intel S3200H

CPU: Xeon 3075

RAM: 4x2GB Axiom DDR2-667 ECC

Boot Drive: USB stick (Lexar 32GB, both the new one and the old one)

Hard Drives: 3x Seagate ST2000NM0033 2TB SATA + 2x Western Digital RE4 WD2003FYYS 2TB

RAID: Z1

Hard disk controllers: ICH9R (onboard SATA ports)

Network cards: Intel 82541P1
