Hello,

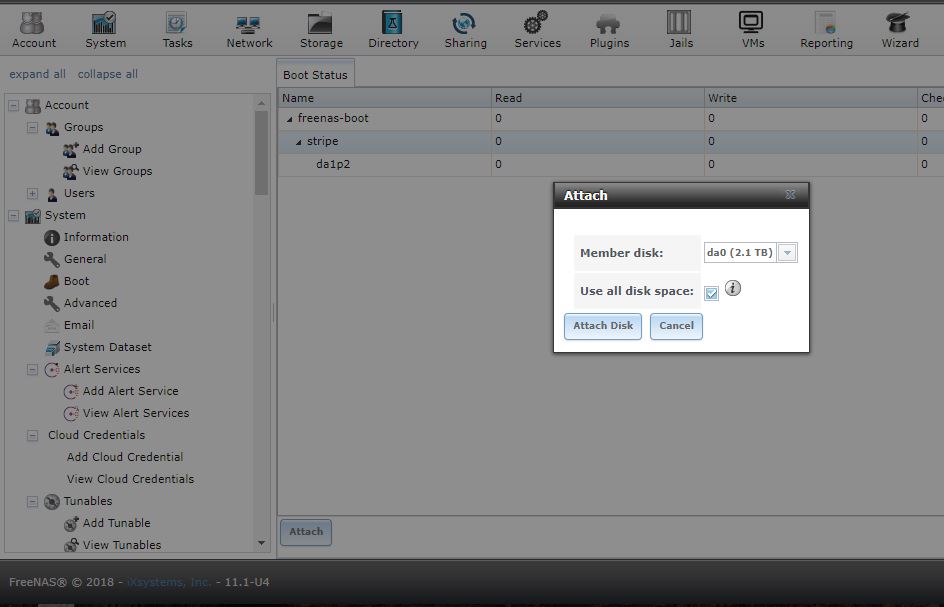

I was using BETA 12 Freenas Build. And I had 2 TB hardware raid storage unit (ISCSI Block for ESXI, I think I didn't do anything for usage, ex. format or creating any pool (I don't remember)). And yesterday I can't access web interface. I think I had OS problem of BETA. And for this reasons, I did reinstall Freenas 11 U4 (fresh install) for access web interface and disk. After I can't access my storage pool. For this, I attach this disk to under "System->Boot->Status":

After that I still couldn't access my old data. It is important and I don't have a backup :( Can anyone help me?

*"zpool import"

EMPTY :(

*"gpart list"

    Geom name: da1
    modified: false
    state: OK
    fwheads: 255
    fwsectors: 63
    last: 31116247
    first: 40
    entries: 152
    scheme: GPT
    Providers:
    1. Name: da1p1
       Mediasize: 524288 (512K)
       Sectorsize: 512
       Stripesize: 0
       Stripeoffset: 20480
       Mode: r0w0e0
       rawuuid: dcf438aa-4cd0-11e8-8d1b-f4ce46b254d8
       rawtype: 21686148-6449-6e6f-744e-656564454649
       label: (null)
       length: 524288
       offset: 20480
       type: bios-boot
       index: 1
       end: 1063
       start: 40
    2. Name: da1p2
       Mediasize: 15930970112 (15G)
       Sectorsize: 512
       Stripesize: 0
       Stripeoffset: 544768
       Mode: r1w1e1
       rawuuid: dd0b8372-4cd0-11e8-8d1b-f4ce46b254d8
       rawtype: 516e7cba-6ecf-11d6-8ff8-00022d09712b
       label: (null)
       length: 15930970112
       offset: 544768
       type: freebsd-zfs
       index: 2
       end: 31116239
       start: 1064
    Consumers:
    1. Name: da1
       Mediasize: 15931539456 (15G)
       Sectorsize: 512
       Mode: r1w1e2

    Geom name: da0
    modified: false
    state: OK
    fwheads: 255
    fwsectors: 32
    last: 4101100087
    first: 40
    entries: 152
    scheme: GPT
    Providers:
    1. Name: da0p1
       Mediasize: 524288 (512K)
       Sectorsize: 512
       Stripesize: 0
       Stripeoffset: 20480
       Mode: r0w0e0
       rawuuid: 878caa70-4d1f-11e8-9e10-f4ce46b254d8
       rawtype: 21686148-6449-6e6f-744e-656564454649
       label: (null)
       length: 524288
       offset: 20480
       type: bios-boot
       index: 1
       end: 1063
       start: 40
    2. Name: da0p2
       Mediasize: 2099762696192 (1.9T)
       Sectorsize: 512
       Stripesize: 0
       Stripeoffset: 544768
       Mode: r0w0e0
       rawuuid: 87a6ba3c-4d1f-11e8-9e10-f4ce46b254d8
       rawtype: 516e7cba-6ecf-11d6-8ff8-00022d09712b
       label: (null)
       length: 2099762696192
       offset: 544768
       type: freebsd-zfs
       index: 2
       end: 4101100079
       start: 1064
    Consumers:
    1. Name: da0
       Mediasize: 2099763263488 (1.9T)
       Sectorsize: 512
       Mode: r0w0e0

*"gpart show"

    =>        40    31116208  da1  GPT  (15G)
              40        1024    1  bios-boot  (512K)
            1064    31115176    2  freebsd-zfs  (15G)
        31116240           8       - free -  (4.0K)

    =>        40  4101100048  da0  GPT  (1.9T)
              40        1024    1  bios-boot  (512K)
            1064  4101099016    2  freebsd-zfs  (1.9T)
      4101100080           8       - free -  (4.0K)
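
For anyone reading the gpart numbers: `gpart show` reports start and size in 512-byte sectors. A minimal sh sketch (values copied by hand from the output above; the variable names are my own) converts the da0p2 numbers to bytes:

```shell
#!/bin/sh
# Sketch: convert the da0p2 numbers from "gpart show" (512-byte sectors) to bytes.
# Values are copied by hand from the output above; variable names are illustrative.
sector_size=512
p2_start=1064          # first sector of da0p2 (freebsd-zfs)
p2_sectors=4101099016  # length of da0p2 in sectors

p2_offset=$((p2_start * sector_size))    # byte offset of da0p2 within da0
p2_bytes=$((p2_sectors * sector_size))   # size of da0p2 in bytes
p2_end=$((p2_start + p2_sectors - 1))    # last sector belonging to da0p2

echo "da0p2 starts at byte $p2_offset"
echo "da0p2 is $p2_bytes bytes long"
echo "da0p2 ends at sector $p2_end"
```

The results (544768, 2099762696192 and 4101100079) match the `offset`, `length` and `end` fields that `gpart list` reports for da0p2.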

*"smartctl -a -q noserial /dev/da0"

    smartctl 6.6 2017-11-05 r4594 [FreeBSD 11.1-STABLE amd64] (local build)
    Copyright (C) 2002-17, Bruce Allen, Christian Franke, www.smartmontools.org

    === START OF INFORMATION SECTION ===
    Vendor: HP
    Product: RAID 5
    Revision: OK
    User Capacity: 2,099,763,263,488 bytes [2.09 TB]
    Logical block size: 512 bytes
    Device type: disk
    Local Time is: Tue May 1 13:26:03 2018 +03
    SMART support is: Unavailable - device lacks SMART capability.

    === START OF READ SMART DATA SECTION ===
    Current Drive Temperature: 0 C
    Drive Trip Temperature: 0 C

    Error Counter logging not supported

    Device does not support Self Test logging

*"camcontrol devlist"

    <HP RAID 5 OK>                 at scbus0 target 0 lun 0 (pass0,da0)
    <Generic STORAGE DEVICE 0250>  at scbus9 target 0 lun 0 (pass1,da1)

*"glabel status"

                                          Name  Status  Components
    gptid/dcf438aa-4cd0-11e8-8d1b-f4ce46b254d8     N/A  da1p1
    gptid/878caa70-4d1f-11e8-9e10-f4ce46b254d8     N/A  da0p1
    gptid/87a6ba3c-4d1f-11e8-9e10-f4ce46b254d8     N/A  da0p2

*"zdb -l /dev/da0"

    --------------------------------------------
    LABEL 0
    --------------------------------------------
    failed to unpack label 0
    --------------------------------------------
    LABEL 1
    --------------------------------------------
    failed to unpack label 1
    --------------------------------------------
    LABEL 2
    --------------------------------------------
    failed to unpack label 2
    --------------------------------------------
    LABEL 3
    --------------------------------------------
    failed to unpack label 3
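
A note on this output, as far as I understand the ZFS on-disk format: each vdev carries four 256 KiB labels, two at the start and two at the end of the vdev. Because the freebsd-zfs partition da0p2 begins 544768 bytes into da0, `zdb -l /dev/da0` reads the whole disk at the wrong offsets and can fail even if the partition holds a valid label; the equivalent check on the partition would be `zdb -l /dev/da0p2`. The sketch below is only arithmetic, assuming 256 KiB labels and a vdev size rounded down to a multiple of 256 KiB before the end labels are placed. It shows where the labels would sit relative to the start of da0:

```shell
#!/bin/sh
# Sketch: where ZFS would expect its four vdev labels if da0p2 were a pool member.
# Assumptions: 256 KiB per label, labels 0/1 at the front and 2/3 at the end of
# the vdev, and the vdev size rounded down to a multiple of 256 KiB.
label_size=262144          # 256 KiB
part_offset=544768         # da0p2 "offset" from gpart list (bytes into da0)
part_size=2099762696192    # da0p2 "Mediasize" from gpart list

aligned=$((part_size / label_size * label_size))   # round down to 256 KiB

# Offsets below are relative to the start of the whole disk da0.
l0=$((part_offset))
l1=$((part_offset + label_size))
l2=$((part_offset + aligned - 2 * label_size))
l3=$((part_offset + aligned - label_size))

echo "label 0 at byte $l0 of da0"
echo "label 1 at byte $l1 of da0"
echo "label 2 at byte $l2 of da0"
echo "label 3 at byte $l3 of da0"
```

By contrast, whole-disk labels 0 and 1 would be read from the first 512 KiB of da0, which is the GPT header area and the bios-boot partition, not da0p2.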

*"zpool history"

    History for 'freenas-boot':
    2018-05-01.02:47:40 zpool create -f -o cachefile=/tmp/zpool.cache -o version=28 -O mountpoint=none -O atime=off -O canmount=off freenas-boot da2p2
    2018-05-01.02:47:41 zpool set feature@async_destroy=enabled freenas-boot
    2018-05-01.02:47:41 zpool set feature@empty_bpobj=enabled freenas-boot
    2018-05-01.02:47:41 zpool set feature@lz4_compress=enabled freenas-boot
    2018-05-01.02:47:41 zfs set compress=lz4 freenas-boot
    2018-05-01.02:47:42 zfs create -o canmount=off freenas-boot/ROOT
    2018-05-01.02:47:42 zfs create -o mountpoint=legacy freenas-boot/ROOT/default
    2018-05-01.02:47:48 zfs create -o mountpoint=legacy freenas-boot/grub
    2018-05-01.02:53:12 zpool set bootfs=freenas-boot/ROOT/default freenas-boot
    2018-05-01.02:53:12 zpool set cachefile=/boot/zfs/rpool.cache freenas-boot
    2018-05-01.02:53:40 zfs set beadm:nickname=default freenas-boot/ROOT/default
    2018-05-01.02:58:01 zfs snapshot -r freenas-boot/ROOT/default@2018-04-30-23:58:00
    2018-05-01.02:58:08 zfs clone -o canmount=off -o mountpoint=legacy freenas-boot/ROOT/default@2018-04-30-23:58:00 freenas-boot/ROOT/Initial-Install
    2018-05-01.02:58:12 zfs set beadm:nickname=Initial-Install freenas-boot/ROOT/Initial-Install
    2018-05-01.04:05:08 zfs snapshot -r freenas-boot/ROOT/default@2018-05-01-04:05:07
    2018-05-01.04:05:14 zfs clone -o canmount=off -o mountpoint=legacy freenas-boot/ROOT/default@2018-05-01-04:05:07 freenas-boot/ROOT/12-MASTER-201804301102
    2018-05-01.04:05:27 zfs set beadm:nickname=12-MASTER-201804301102 freenas-boot/ROOT/12-MASTER-201804301102
    2018-05-01.04:05:31 zfs set beadm:keep=False freenas-boot/ROOT/12-MASTER-201804301102
    2018-05-01.04:05:39 zfs set sync=disabled freenas-boot/ROOT/12-MASTER-201804301102
    2018-05-01.04:17:22 zfs inherit freenas-boot/ROOT/12-MASTER-201804301102
    2018-05-01.04:17:23 zfs set canmount=noauto freenas-boot/ROOT/12-MASTER-201804301102
    2018-05-01.04:17:25 zfs set mountpoint=/tmp/BE-12-MASTER-201804301102.ZJGgAoAl freenas-boot/ROOT/12-MASTER-201804301102
    2018-05-01.04:17:27 zfs set mountpoint=/ freenas-boot/ROOT/12-MASTER-201804301102
    2018-05-01.04:17:27 zpool set bootfs=freenas-boot/ROOT/12-MASTER-201804301102 freenas-boot
    2018-05-01.04:17:28 zfs set canmount=noauto freenas-boot/ROOT/Initial-Install
    2018-05-01.04:17:30 zfs set canmount=noauto freenas-boot/ROOT/default
    2018-05-01.04:17:34 zfs promote freenas-boot/ROOT/12-MASTER-201804301102
    2018-05-01.12:11:17 zpool attach freenas-boot /dev/da1p2 /dev/da0p2
    2018-05-01.12:13:42 zpool detach freenas-boot /dev/da0p2

Thanks for your help :)
