Please help me understand if my dRAID expansion plan makes sense. Thank you!

This project is for my personal home use, though I've been running FreeNAS for about a decade. I have a newly acquired 45Drives bare chassis, and for the end state I think I want 4 arrays of 8x data and 3x parity each, plus the last drive as a spare. If I'm not mistaken, in dRAID nomenclature this would be dRAID3:8:1. This results in 32x data, 12x parity, and 1x spare.
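As a quick sanity check on the drive count, here is a minimal Python sketch of the arithmetic. The function name `draid_totals` and its parameters are made up for illustration; it only multiplies out the group layout described above and says nothing about how ZFS actually lays out data.

```python
def draid_totals(groups, data_per_group, parity_per_group, spares):
    """Multiply out a grouped layout into total data, parity, and spare drives."""
    data = groups * data_per_group
    parity = groups * parity_per_group
    return {
        "data": data,
        "parity": parity,
        "spares": spares,
        "drives": data + parity + spares,
    }


# End state from the post: 4 groups of 8 data + 3 parity, plus 1 spare.
totals = draid_totals(groups=4, data_per_group=8, parity_per_group=3, spares=1)
print(totals)
```

The total comes to 45 drives, which matches the chassis.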

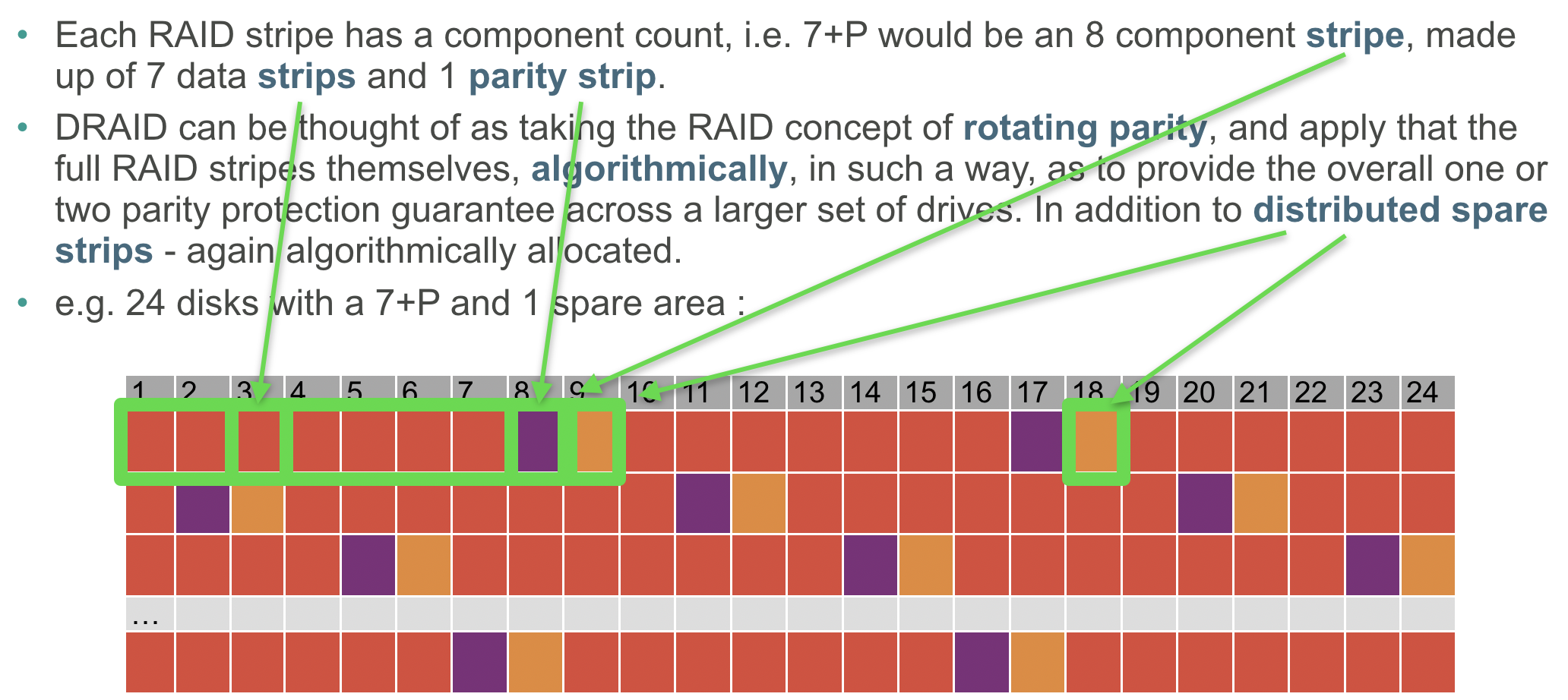

Now, in the RAIDZ3 format, the best plan to get a low-cost Z3 vdev like this would be to buy 45x small drives and, when more capacity was required, slowly replace them one by one with new larger drives.* This is because you can't easily change the vdev stripe width. However, with dRAID, if I understand correctly, I can easily expand my vdev width* and data is rebuilt across it as if I had started with that many drives. This would enable me to spend my budget on larger-capacity drives and slowly add to the array each year.

Thus, my plan is to use 18TB WD Gold SATA drives. They draw less power than SAS drives, which will save me money on direct power and cooling, while offering higher reliability and performance than Reds. My expansion plan is:

Year 1: Add 12x 18TB WD Gold, creating a dRAID3:8:1 vdev. This is 8 data, 3 parity, and 1 spare.

Year 2: Add 11x 18TB WD Gold expanding the array, while keeping the dRAID3:8:1 vdev configuration. This is now 16 data, 6 parity, and 1 spare (in total).

Year 3: Add 11x 18TB WD Gold expanding the array, while keeping the dRAID3:8:1 vdev configuration. This is now 24 data, 9 parity, and 1 spare (in total).

Year 4: Add 11x 18TB WD Gold expanding the array, while keeping the dRAID3:8:1 vdev configuration. This is now 32 data, 12 parity, and 1 spare (in total).
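The yearly steps above can be tallied with a short Python sketch. It is only arithmetic on the numbers in the plan: the names (`DRIVE_TB`, `schedule`, and so on) are invented for illustration, the capacity figure is raw data-drive capacity in TB with no allowance for ZFS overhead or TB vs. TiB, and it does not check whether dRAID can actually be expanded this way.

```python
DRIVE_TB = 18            # 18TB WD Gold, as in the plan
GROUP_DATA = 8           # data drives per group
GROUP_PARITY = 3         # parity drives per group
SPARES = 1               # one spare shared by the whole array
DRIVES_ADDED = [12, 11, 11, 11]  # drives purchased in years 1 through 4


def schedule(drives_added):
    """Return (year, total drives, data, parity, raw data TB) for each year."""
    rows = []
    total = 0
    group_size = GROUP_DATA + GROUP_PARITY
    for year, added in enumerate(drives_added, start=1):
        total += added
        groups, leftover = divmod(total - SPARES, group_size)
        if leftover:
            raise ValueError(f"year {year}: {leftover} drives do not fill a group")
        data = groups * GROUP_DATA
        parity = groups * GROUP_PARITY
        rows.append((year, total, data, parity, data * DRIVE_TB))
    return rows


rows = schedule(DRIVES_ADDED)
for year, total, data, parity, raw_tb in rows:
    print(f"Year {year}: {total} drives, {data} data, {parity} parity, "
          f"{SPARES} spare, {raw_tb} TB raw data capacity")
```

Under these assumptions each year adds 8 data drives, or 144 TB of raw data capacity, ending at 32 data drives and 576 TB in year 4.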

After year 4 the array is fully built, and any further expansion means either another chassis for a cluster or slowly replacing all 45 drives with larger-capacity units.

Is this an effective and safe strategy for my data? Thank you, your help is greatly appreciated.

Edit: corrected my vdev vs stripe width error for clarity.

*similar to manually expanding a RAIDZ3, but with automatic reflowing of data.
