Sprint (Explorer, joined Mar 30, 2019, 72 messages)

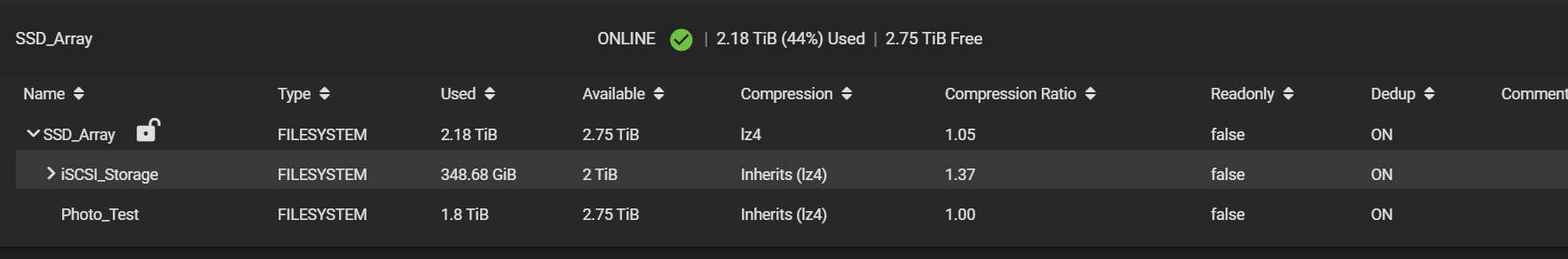

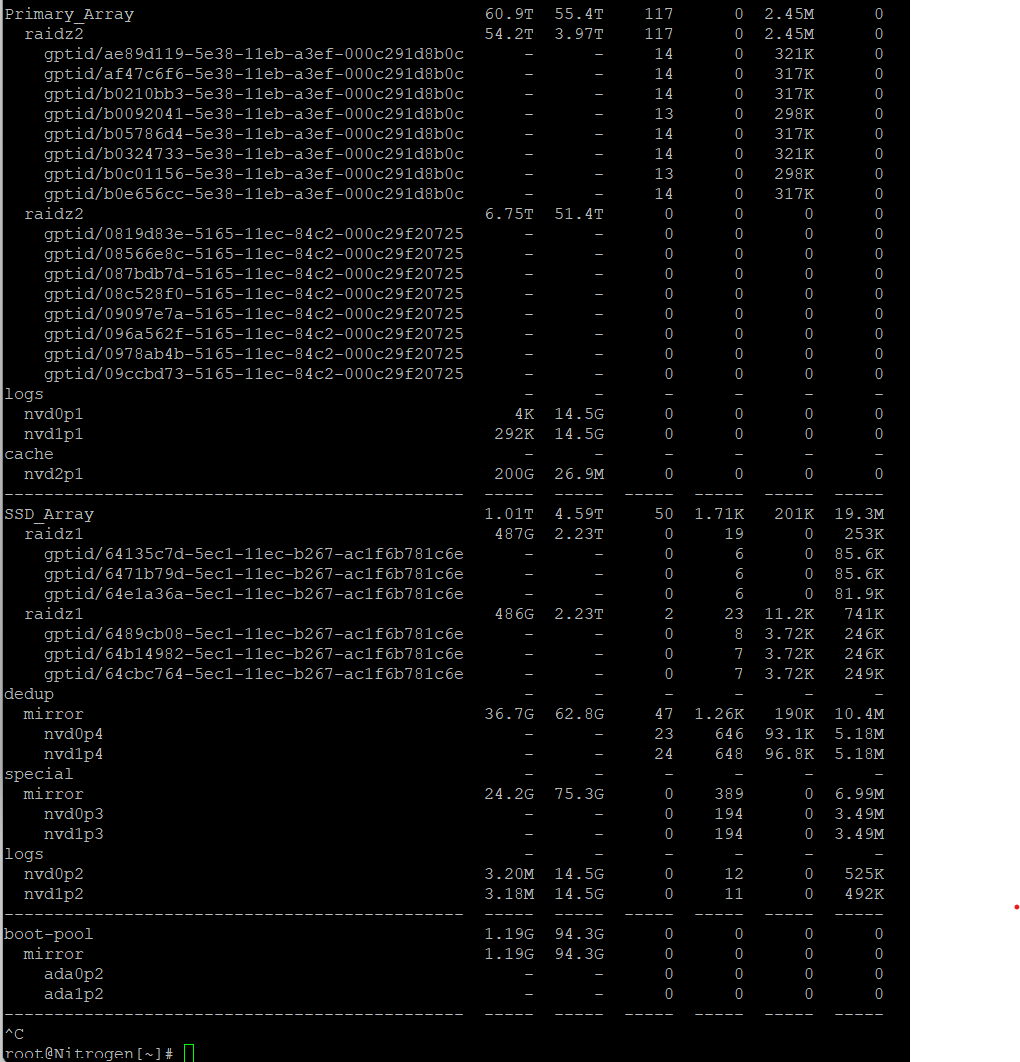

So I've been playing with dedup a little and have rebuilt the pool a few times to get the vdev sizing right, but dedup doesn't appear to be working. I have two vdevs of 3x 1 TB SSDs in RAIDZ1, plus two 280 GB Optane drives, each split into two 100 GB partitions: one partition from each drive makes up a mirrored special vdev, and the other partition from each makes up a mirrored dedup vdev. So, as far as I can tell, I'm doing it all by the book. I have tons of resources (twin 10-core Xeons, 256 GB of RAM and so forth), and the screenshots show the pool layout. Speeds are good: when moving VMs from one iSCSI array to another on a different machine, I fully saturate my 10 Gb link.
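
Whether 100 GB is enough for the dedup vdev depends on how many unique blocks the pool ends up holding, because the dedup table (DDT) keeps one entry per unique block. A rough sketch of that arithmetic follows. It assumes the commonly quoted rule of thumb of about 320 bytes per DDT entry and a hypothetical 4 TiB of unique data; neither number comes from the post, actual entry sizes vary, and `ddt_bytes` is a hypothetical helper.

```python
# Rough dedup-table (DDT) size estimate.
# Assumptions (not from the original post): one DDT entry per unique block,
# ~320 bytes per entry (a commonly quoted rule of thumb), and every block
# written at the given record/block size.

TIB = 1024 ** 4
GIB = 1024 ** 3


def ddt_bytes(unique_data_bytes: int, recordsize: int = 128 * 1024,
              bytes_per_entry: int = 320) -> int:
    """Estimate DDT size for a given amount of unique (deduplicated) data."""
    entries = -(-unique_data_bytes // recordsize)  # ceiling division
    return entries * bytes_per_entry


if __name__ == "__main__":
    # Hypothetical 4 TiB of unique data at 128 KiB records vs 16 KiB blocks.
    for rs in (128 * 1024, 16 * 1024):
        size = ddt_bytes(4 * TIB, recordsize=rs)
        print(f"recordsize {rs // 1024:>3} KiB -> ~{size / GIB:.1f} GiB of DDT")
```

Smaller blocks mean more entries for the same amount of data, which is why a zvol with a small volblocksize can need far more DDT space than a dataset using 128 KiB records.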

At the moment, I have an iSCSI zvol being used by Proxmox, which shows a 1.37 dedup ratio. That doesn't seem right given the number of Windows and Linux VMs, and I suspect it's really LZ4 at work on all the blank space in some of my VM drives (the zvol is sparse), since I got similar numbers before enabling deduplication.

So to be sure, I created a new dataset on this pool, again with dedup on, and loaded three copies of the same 500 GB folder of photos. That dataset shows a 1.0 dedup ratio, which suggests to me it's NOT deduplicating. So now I'm stumped. What have I done wrong?
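
The expectation behind this test can be modeled simply: block-level dedup checksums each record and stores only one copy per distinct checksum, so three byte-identical copies of the same files should approach a ratio of 3.0. Below is a minimal sketch, assuming fixed 128 KiB records and SHA-256 checksums, with random bytes standing in for already-compressed photos. `dedup_ratio` is a hypothetical helper that ignores compression, metadata and partial-block padding.

```python
import hashlib
import os

RECORDSIZE = 128 * 1024  # assumed fixed record size (128 KiB)


def dedup_ratio(files: list[bytes], recordsize: int = RECORDSIZE) -> float:
    """Model block-level dedup: total records written / unique records stored."""
    total = 0
    unique: set[bytes] = set()
    for data in files:
        # Each file is split into records starting at its own offset 0,
        # so identical files produce identical record checksums.
        for offset in range(0, len(data), recordsize):
            unique.add(hashlib.sha256(data[offset:offset + recordsize]).digest())
            total += 1
    return total / len(unique) if unique else 1.0


if __name__ == "__main__":
    photo = os.urandom(5 * RECORDSIZE + 1000)  # stand-in for a compressed JPEG
    print(dedup_ratio([photo, photo, photo]))  # three identical copies: prints 3.0
    print(dedup_ratio([os.urandom(RECORDSIZE), os.urandom(RECORDSIZE)]))  # distinct: prints 1.0
```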

Any and all input welcome as always.

Specs are:

2x Xeon E5-2630 v4 (10 cores each)

256 GB DDR4

16x 8 TB HDDs (8x WD Red in one vdev, 8x WD Gold in another vdev)

6x 1 TB Samsung 860 EVO SSDs in two 3-drive RAIDZ1 vdevs

Intel X520 10 Gb NIC

ASRock PCIe x16 card with 4x M.2 slots (board bifurcated x4x4x4x4)

200 GB Crucial M.2 L2ARC

2x 280 GB Optane 900p connected via M.2

3x 9207-8i HBAs

2x 120 GB boot SSDs
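
For the vdev sizing mentioned above, the idealized data capacity of a RAIDZ vdev is (disks - parity) x disk size. Here is a sketch for the two 3-disk RAIDZ1 SSD vdevs in the spec list, assuming exactly 1 TB per drive. `raidz_data_capacity` is a hypothetical helper that ignores RAIDZ padding, metadata and slop space, so real usable space will be somewhat lower.

```python
def raidz_data_capacity(disks: int, disk_size: float, parity: int = 1) -> float:
    """Idealized data capacity of one RAIDZ vdev: (disks - parity) * disk_size.

    Ignores RAIDZ padding, metadata and slop space, so real usable space is lower.
    """
    if disks <= parity:
        raise ValueError("a RAIDZ vdev needs more disks than parity devices")
    return (disks - parity) * disk_size


if __name__ == "__main__":
    # 6x 1 TB SSDs arranged as two 3-disk RAIDZ1 vdevs.
    per_vdev = raidz_data_capacity(3, 1.0, parity=1)
    print(f"per vdev: {per_vdev} TB, both SSD vdevs: {2 * per_vdev} TB")
```

The mirrored special and dedup vdevs each add one partition's worth of space (100 GB), since a two-way mirror stores a full copy on each side.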
