onthax (Explorer, joined Jan 31, 2012, 81 messages)

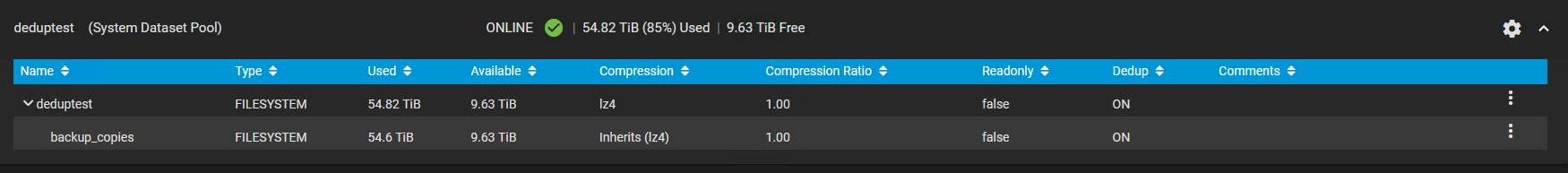

I've been running TrueNAS at home for a while, and I'm now running a test at work with backup sets.

We copied a similar dataset to a Windows server with post-processing dedup enabled and got around 60% dedup. The data consists of monthly copies of the same data kept for backup purposes, so we expect it to be highly deduplicable.
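To compare that figure with what ZFS reports, a percentage of space saved and a ZFS-style dedup ratio are related by ratio = 1 / (1 - saved). This minimal sketch assumes that "60% dedup" means 60% of the space was saved (the exact Windows metric isn't stated); under that reading the Windows result is roughly a 2.5x ratio, while a ZFS ratio of 1.00x means nothing was saved.

```python
# Convert between "percent of space saved" and a ZFS-style dedup ratio.
# Assumption: "60% dedup" on the Windows side means 60% of space was saved.

def savings_to_ratio(savings_percent: float) -> float:
    """Return the logical/physical ratio for a given percentage of space saved."""
    if not 0 <= savings_percent < 100:
        raise ValueError("savings must be in [0, 100)")
    return 1.0 / (1.0 - savings_percent / 100.0)


def ratio_to_savings(ratio: float) -> float:
    """Inverse: percentage of space saved for a given dedup ratio (>= 1.0)."""
    if ratio < 1.0:
        raise ValueError("ratio must be >= 1.0")
    return (1.0 - 1.0 / ratio) * 100.0


if __name__ == "__main__":
    print(f"60% saved -> {savings_to_ratio(60):.2f}x")          # 2.50x
    print(f"1.00x     -> {ratio_to_savings(1.00):.0f}% saved")  # 0% saved
```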

We fired up a FreeNAS box with an SSD to test whether we could get the same benefit using ZFS inline dedup, but we are seeing no dedup benefit at all.
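One difference between the two approaches that may matter: ZFS dedup matches whole blocks (one per record, at fixed offsets within each file) by checksum, whereas Windows Server's post-process dedup splits files into variable-size chunks. The toy model below assumes a hypothetical 4 KiB block size in place of the real recordsize and uses SHA-256 from Python's `hashlib`. It shows that an identical, aligned copy dedups perfectly, while the same bytes shifted by a single byte share no blocks. Whether the monthly backup files actually shift like this is an assumption, not something established here.

```python
# Toy model of fixed-block dedup: blocks only match if their bytes are
# identical AND they start at the same block-aligned offset.
# Assumption: BLOCK is a hypothetical stand-in for the dataset's recordsize.
import hashlib
import os

BLOCK = 4096


def block_hashes(data: bytes, block: int = BLOCK) -> list[str]:
    """SHA-256 of each fixed-size block, starting at offset 0."""
    return [hashlib.sha256(data[i:i + block]).hexdigest()
            for i in range(0, len(data), block)]


def dedup_ratio(*files: bytes) -> float:
    """Referenced blocks divided by unique blocks across all files."""
    hashes = [h for f in files for h in block_hashes(f)]
    return len(hashes) / len(set(hashes))


if __name__ == "__main__":
    month1 = os.urandom(BLOCK * 256)      # 1 MiB of random "backup" data
    shifted = b"\x00" + month1            # same bytes, shifted by one byte
    print(f"aligned copy: {dedup_ratio(month1, month1):.2f}x")   # 2.00x
    print(f"shifted copy: {dedup_ratio(month1, shifted):.2f}x")  # 1.00x
```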

Note: this is a test dataset, so there is no SSD redundancy; the data is stored elsewhere.

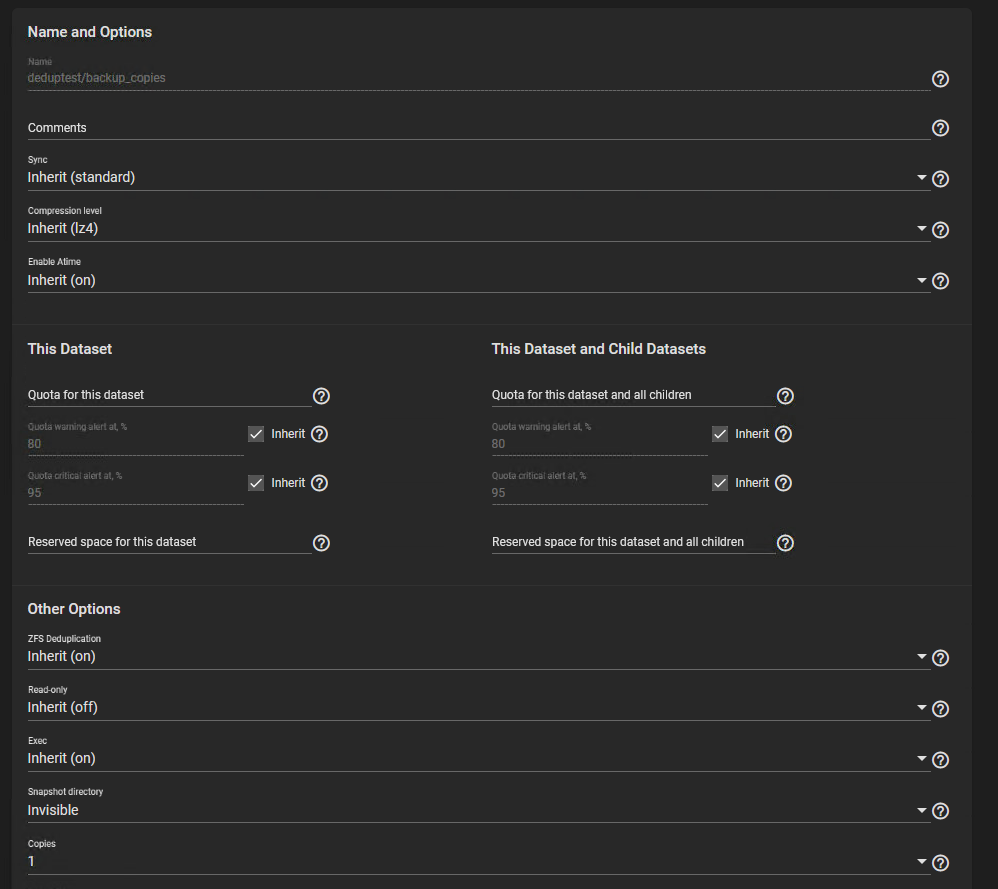

Is there something I'm doing wrong, other than just enabling dedup?


```
config:

        NAME                                            STATE     READ WRITE CKSUM
        deduptest                                       ONLINE       0     0     0
          raidz2-0                                      ONLINE       0     0     0
            gptid/e1b4e4c2-77ab-11ec-b5ac-1418776d9ff3  ONLINE       0     0     0
            gptid/e1c0f7df-77ab-11ec-b5ac-1418776d9ff3  ONLINE       0     0     0
            gptid/e21d3384-77ab-11ec-b5ac-1418776d9ff3  ONLINE       0     0     0
            gptid/e1a880ed-77ab-11ec-b5ac-1418776d9ff3  ONLINE       0     0     0
            gptid/e1da3026-77ab-11ec-b5ac-1418776d9ff3  ONLINE       0     0     0
            gptid/e2053e11-77ab-11ec-b5ac-1418776d9ff3  ONLINE       0     0     0
            gptid/e1f912f2-77ab-11ec-b5ac-1418776d9ff3  ONLINE       0     0     0
            gptid/e3250fe1-77ab-11ec-b5ac-1418776d9ff3  ONLINE       0     0     0
            gptid/e30e5b8b-77ab-11ec-b5ac-1418776d9ff3  ONLINE       0     0     0
            gptid/e337469a-77ab-11ec-b5ac-1418776d9ff3  ONLINE       0     0     0
            gptid/e36cd0a6-77ab-11ec-b5ac-1418776d9ff3  ONLINE       0     0     0
            gptid/e34d095e-77ab-11ec-b5ac-1418776d9ff3  ONLINE       0     0     0
        dedup
          gptid/e18000ce-77ab-11ec-b5ac-1418776d9ff3    ONLINE       0     0     0

errors: No known data errors

 dedup: DDT entries 457192758, size 516B on disk, 152B in core

bucket              allocated                       referenced
______   ______________________________   ______________________________
refcnt   blocks   LSIZE   PSIZE   DSIZE   blocks   LSIZE   PSIZE   DSIZE
------   ------   -----   -----   -----   ------   -----   -----   -----
     1     436M   54.5T   54.5T   54.5T     436M   54.5T   54.5T   54.5T
     2     191K   23.9G   23.9G   23.8G     395K   49.4G   49.4G   49.4G
     4       80     10M   9.64M   9.66M      330   41.2M   39.2M   39.3M
     8        4    512K     32K   64.0K       40      5M    320K    640K
 Total     436M   54.5T   54.5T   54.5T     436M   54.5T   54.5T   54.5T
```
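The DDT summary line gives per-entry sizes, so multiplying by the entry count gives a rough estimate of the table's total footprint. This sketch assumes the 516B and 152B figures are per-entry averages, which is how they are usually read; the totals are estimates, not measured values.

```python
# Rough DDT footprint estimated from the "dedup: DDT entries ..." summary line.
# Assumption: the reported sizes are per-entry averages.

ENTRIES = 457_192_758   # "DDT entries"
ON_DISK_BYTES = 516     # "size 516B on disk" (per entry)
IN_CORE_BYTES = 152     # "152B in core" (per entry)
GIB = 1024 ** 3


def ddt_footprint_gib(entries: int, bytes_per_entry: int) -> float:
    """Total table size in GiB for a given entry count and per-entry size."""
    return entries * bytes_per_entry / GIB


if __name__ == "__main__":
    print(f"in core: {ddt_footprint_gib(ENTRIES, IN_CORE_BYTES):.1f} GiB")  # 64.7 GiB
    print(f"on disk: {ddt_footprint_gib(ENTRIES, ON_DISK_BYTES):.1f} GiB")  # 219.7 GiB
```

Since almost every entry sits in the refcnt 1 bucket, nearly all of that memory and disk space goes to tracking blocks that occur only once.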

```
root@truenas[~]# zpool list
NAME        SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ   FRAG    CAP  DEDUP    HEALTH  ALTROOT
boot-pool   262G  1.20G   261G        -         -     0%     0%  1.00x    ONLINE  -
deduptest  87.5T  71.9T  15.6T        -         -     9%    82%  1.00x    ONLINE  /mnt
```
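The 1.00x in the DEDUP column can be cross-checked against the DDT histogram: the pool-wide ratio is referenced DSIZE divided by allocated DSIZE. This sketch copies the rounded, human-readable DSIZE values from that histogram, so the result is approximate, and assumes binary (1024-based) unit suffixes.

```python
# Recompute the pool dedup ratio from the DDT histogram's DSIZE columns.
# Assumptions: ratio = referenced DSIZE / allocated DSIZE; K/M/G/T are 1024-based.

UNITS = {"K": 1024, "M": 1024 ** 2, "G": 1024 ** 3, "T": 1024 ** 4}


def to_bytes(size: str) -> float:
    """Parse zpool-style sizes such as '54.5T' or '640K'."""
    return float(size[:-1]) * UNITS[size[-1]]


# refcnt bucket -> (allocated DSIZE, referenced DSIZE), copied from the output
ROWS = {
    1: ("54.5T", "54.5T"),
    2: ("23.8G", "49.4G"),
    4: ("9.66M", "39.3M"),
    8: ("64.0K", "640K"),
}


def dedup_ratio(rows: dict) -> float:
    """Referenced bytes divided by allocated bytes across all buckets."""
    allocated = sum(to_bytes(a) for a, _ in rows.values())
    referenced = sum(to_bytes(r) for _, r in rows.values())
    return referenced / allocated


if __name__ == "__main__":
    print(f"{dedup_ratio(ROWS):.2f}x")  # 1.00x
```

The 2x, 4x and 8x buckets add only about 25.6 GiB of extra referenced data on top of roughly 54.5 TiB, which is why the ratio rounds to 1.00x.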

Is there something I'm doing wrong here?