Hi

I am not confident I can recover my pool.

I have been using TrueNAS 12 for a few months, and this is the first issue I have run into.

Will I be able to recover it?

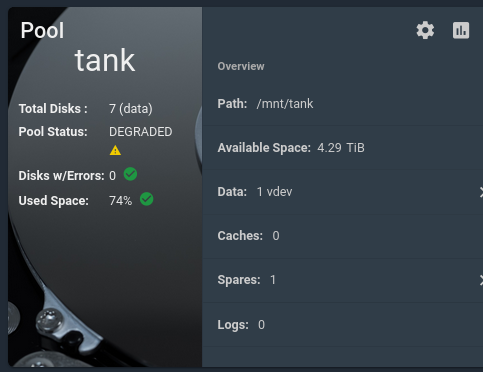

I have 8 WD Red 4 TB hard drives in a raidz2 vdev with 1 spare disk, and all drives are online.

I have been waiting a week for the pool to rebuild.

I replaced a degraded disk with a new disk seven days ago, but the replacement has still not finished.

What can I do?

I have spent the week migrating all VMs to other storage.

OS: TrueNAS 12

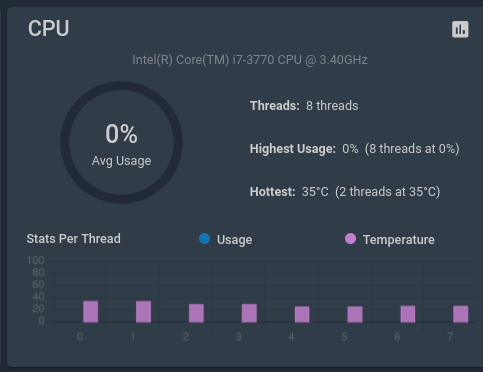

hw.model: Intel(R) Core(TM) i7-3770 CPU @ 3.40GHz

hw.machine: amd64

hw.ncpu: 8

Memory: 32 GB RAM

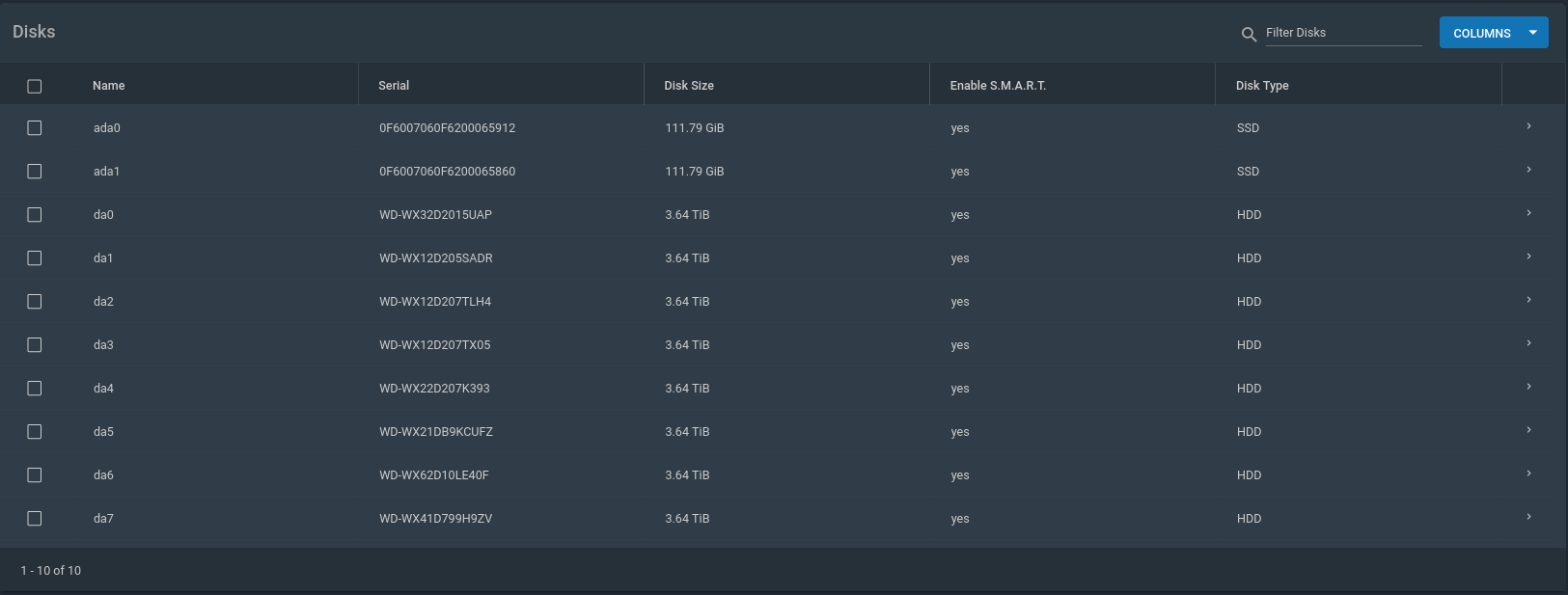

2 x SSD 120 GB

8 x HDD WD Red 4 TB

Interfaces:

Intel X540-AT2 dual-port 10 GbE

Intel I350-T4 quad-port 1 GbE

HBA: LSI 9240-8i with 9211 IT-mode firmware

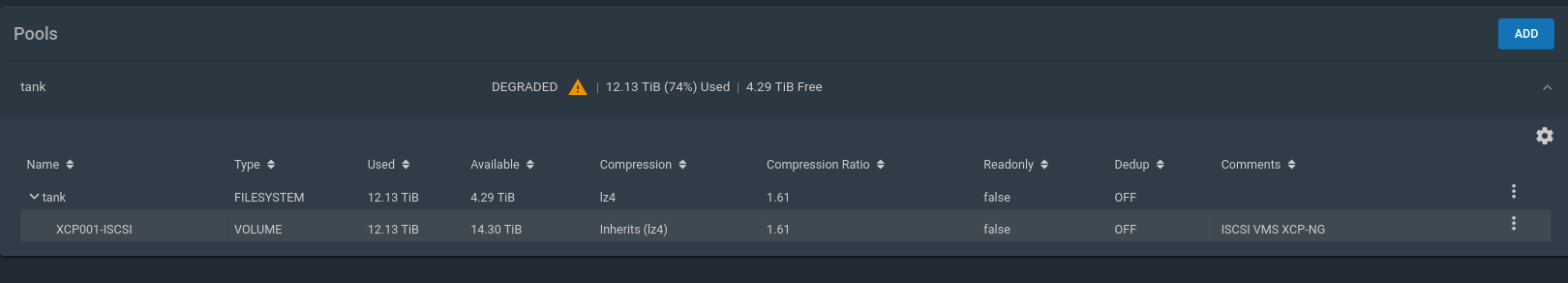

Pool tank: 8 x WD Red 4 TB (WD40EFAX) on da0 da1 da2 da3 da4 da5 da6 da7

Code:

# zpool list

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

boot-pool 95.5G 1.06G 94.4G - - 0% 1% 1.00x ONLINE -

tank 25.5T 3.18T 22.3T - - 6% 12% 1.00x DEGRADED /mnt

root@truenas02[~]# zpool status -v tank

pool: tank

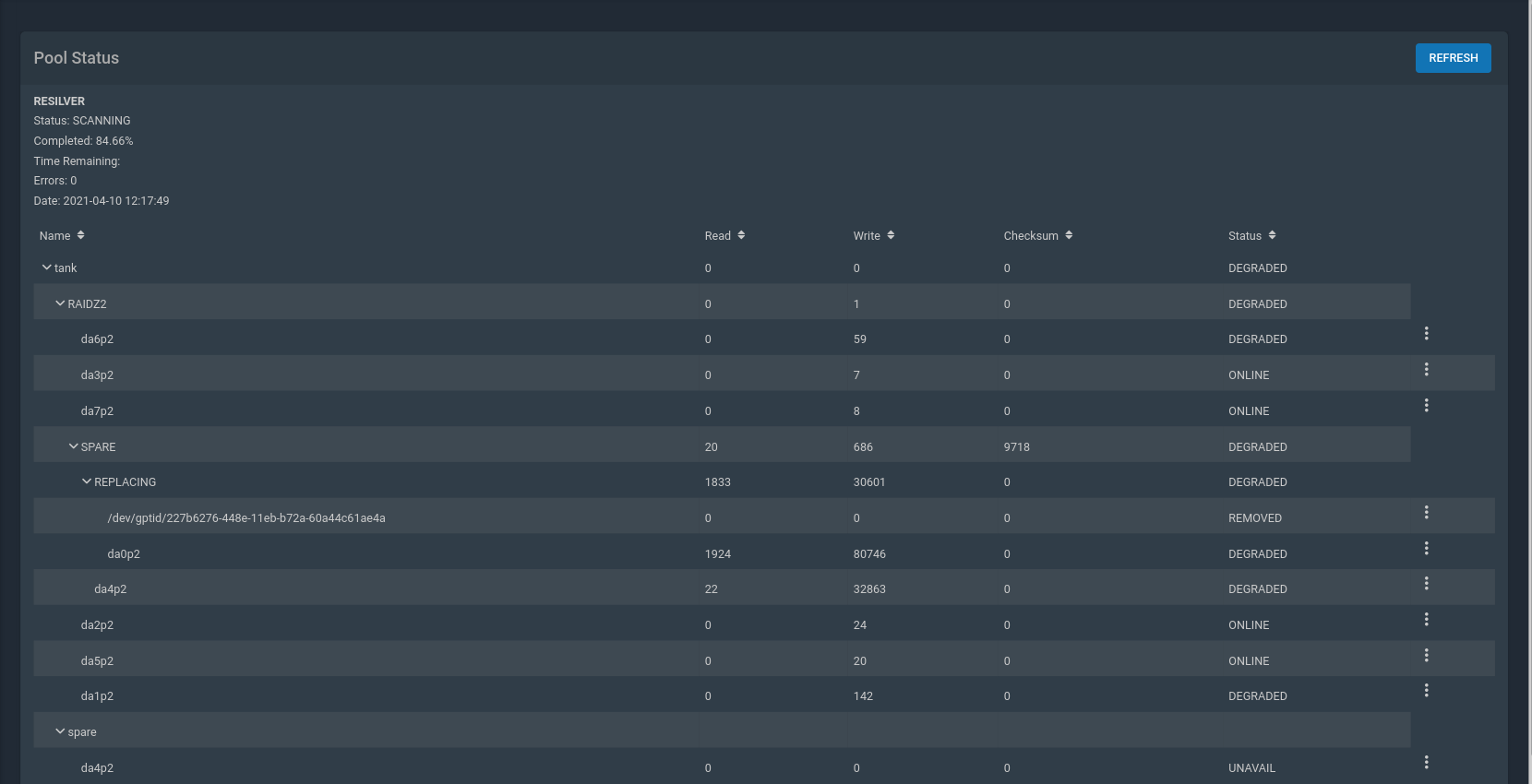

state: DEGRADED

status: One or more devices is currently being resilvered. The pool will

continue to function, possibly in a degraded state.

action: Wait for the resilver to complete.

scan: resilver in progress since Sat Apr 10 12:17:49 2021

2.96T scanned at 11.5M/s, 2.61T issued at 10.2M/s, 3.08T total

705G resilvered, 84.66% done, 13:34:21 to go

config:

NAME STATE READ WRITE CKSUM

tank DEGRADED 0 0 0

raidz2-0 DEGRADED 0 1 0

gptid/2102515b-448e-11eb-b72a-60a44c61ae4a DEGRADED 0 59 0 too many errors (resilvering)

gptid/21efb762-448e-11eb-b72a-60a44c61ae4a ONLINE 0 7 0 (resilvering)

gptid/bcdc7987-448e-11eb-b72a-60a44c61ae4a ONLINE 0 8 0 (resilvering)

spare-3 DEGRADED 20 686 9.49K

replacing-0 DEGRADED 1.79K 29.9K 0

gptid/227b6276-448e-11eb-b72a-60a44c61ae4a REMOVED 0 0 0

gptid/dbe6434f-97c8-11eb-b4c4-a0369f51cb3c DEGRADED 1.88K 78.9K 0 too many errors (resilvering)

gptid/05d8adad-60d0-11eb-a9c0-a0369f51cb3c DEGRADED 22 32.1K 0 too many errors (resilvering)

gptid/22dbd3ac-448e-11eb-b72a-60a44c61ae4a ONLINE 0 24 0 (resilvering)

gptid/2315983f-448e-11eb-b72a-60a44c61ae4a ONLINE 0 20 0 (resilvering)

gptid/22f3057c-448e-11eb-b72a-60a44c61ae4a DEGRADED 0 142 0 too many errors (resilvering)

spares

gptid/05d8adad-60d0-11eb-a9c0-a0369f51cb3c INUSE currently in use

errors: No known data errors

root@truenas02[~]# zpool iostat -v

capacity operations bandwidth

pool alloc free read write read write

-------------------------------------------------- ----- ----- ----- ----- ----- -----

boot-pool 1.06G 94.4G 0 0 4.25K 393

mirror 1.06G 94.4G 0 0 4.25K 393

ada0p2 - - 0 0 2.12K 196

ada1p2 - - 0 0 2.13K 196

-------------------------------------------------- ----- ----- ----- ----- ----- -----

tank 3.18T 22.3T 142 260 2.66M 5.98M

raidz2 3.18T 22.3T 142 260 2.66M 5.98M

gptid/2102515b-448e-11eb-b72a-60a44c61ae4a - - 21 35 414K 830K

gptid/21efb762-448e-11eb-b72a-60a44c61ae4a - - 21 35 416K 829K

gptid/bcdc7987-448e-11eb-b72a-60a44c61ae4a - - 21 35 413K 829K

spare - - 187 541 2.79M 13.0M

replacing - - 188 464 2.80M 11.0M

gptid/227b6276-448e-11eb-b72a-60a44c61ae4a - - 15 32 240K 771K

gptid/dbe6434f-97c8-11eb-b4c4-a0369f51cb3c - - 1 80 37.2K 2.14M

gptid/05d8adad-60d0-11eb-a9c0-a0369f51cb3c - - 0 81 22.9K 2.15M

gptid/22dbd3ac-448e-11eb-b72a-60a44c61ae4a - - 20 35 410K 830K

gptid/2315983f-448e-11eb-b72a-60a44c61ae4a - - 21 35 413K 829K

gptid/22f3057c-448e-11eb-b72a-60a44c61ae4a - - 20 35 414K 830K

-------------------------------------------------- ----- ----- ----- ----- ----- -----
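As a sanity check on the `scan:` lines in the `zpool status` output above, here is a rough sketch that recomputes the progress and the remaining time from the reported figures. It assumes the `T` and `M` suffixes are binary units (TiB and MiB) and that the issue rate stays constant at 10.2M/s, which may not hold while devices keep logging write errors. The function name `resilver_estimate` is only illustrative.

```python
# Figures copied from the `scan:` lines of the `zpool status` output.
issued_tib = 2.61        # "2.61T issued"
total_tib = 3.08         # "3.08T total"
issue_rate_mib_s = 10.2  # "issued at 10.2M/s"

# Assumption: zpool reports T and M as binary units (TiB, MiB).
MIB_PER_TIB = 1024 * 1024


def resilver_estimate(issued_tib, total_tib, rate_mib_s):
    """Return (percent_done, hours_remaining), assuming a constant issue rate."""
    percent_done = 100.0 * issued_tib / total_tib
    remaining_mib = (total_tib - issued_tib) * MIB_PER_TIB
    hours_remaining = remaining_mib / rate_mib_s / 3600.0
    return percent_done, hours_remaining


percent_done, hours_remaining = resilver_estimate(
    issued_tib, total_tib, issue_rate_mib_s
)
print(f"{percent_done:.1f}% done, about {hours_remaining:.1f} hours to go")
```

With the rounded figures from the output this gives roughly 84.7% and a bit over 13 hours, which is in line with the reported `84.66% done, 13:34:21 to go`. So the estimate shown by ZFS is consistent with the very low 10.2M/s issue rate, and the slow rate itself is what makes the resilver take so long.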
