AJCxZ0 (Dabbler, joined Mar 11, 2020, 13 messages)

24 identical spinning disks. TrueNAS 12.0-U5.1, updated from an earlier 12.0 release, is installed on da0 and da1. Two 10-disk RAIDZ1 vdevs with two hot spares. One data pool, Pacific, across both vdevs. The system had booted several times without incident.
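As a rough sketch of how the 24 disks are accounted for: the tally below assumes the two boot disks (da0 and da1) are among the 24, which the description implies but does not state outright. The variable names are purely illustrative.

```python
# Disk counts taken from the description above; names are illustrative only.
boot_disks = 2        # da0 and da1 (assumed to be part of the 24)
vdevs = 2             # both vdevs belong to the single data pool, Pacific
disks_per_vdev = 10   # each vdev is RAIDZ1
hot_spares = 2

# Every physical disk should land in exactly one role.
total_disks = boot_disks + vdevs * disks_per_vdev + hot_spares

# RAIDZ1 spends one disk's worth of capacity per vdev on parity.
data_disk_equivalents = vdevs * (disks_per_vdev - 1)

print("total disks:", total_disks)
print("data-disk equivalents in the pool:", data_disk_equivalents)
```

Because each vdev is RAIDZ1, each one can lose a single disk without losing the pool, which is why the hot spares matter here.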

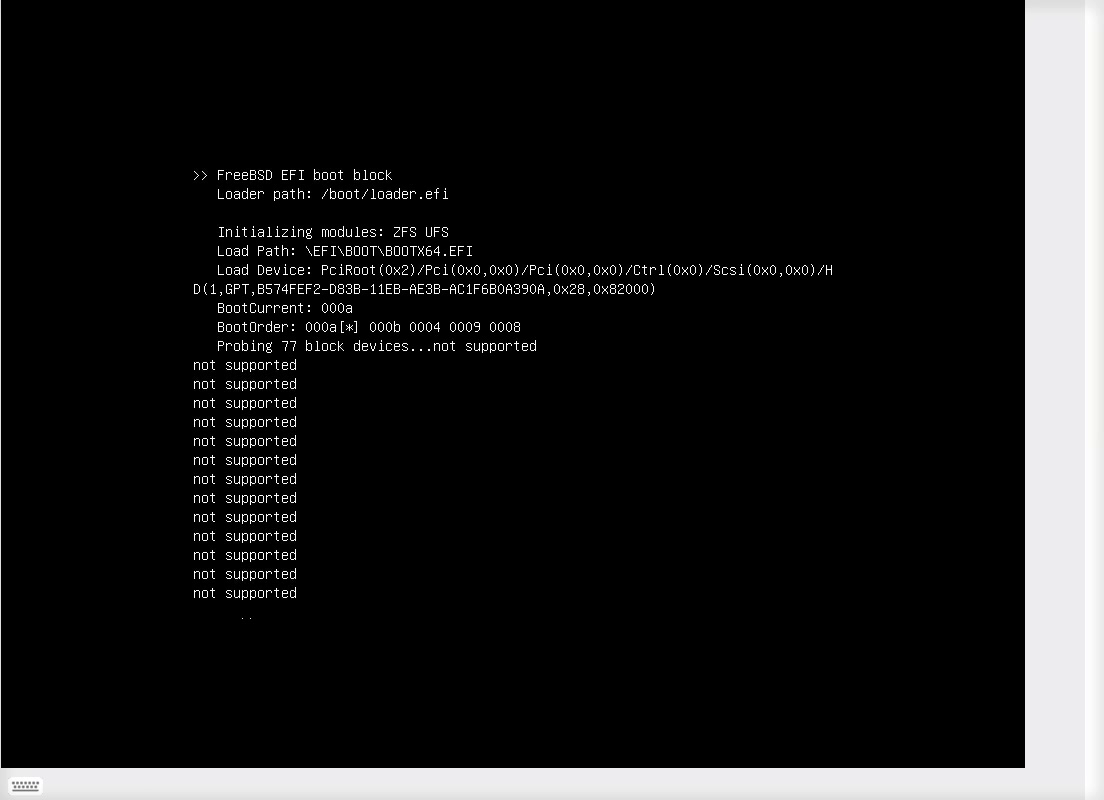

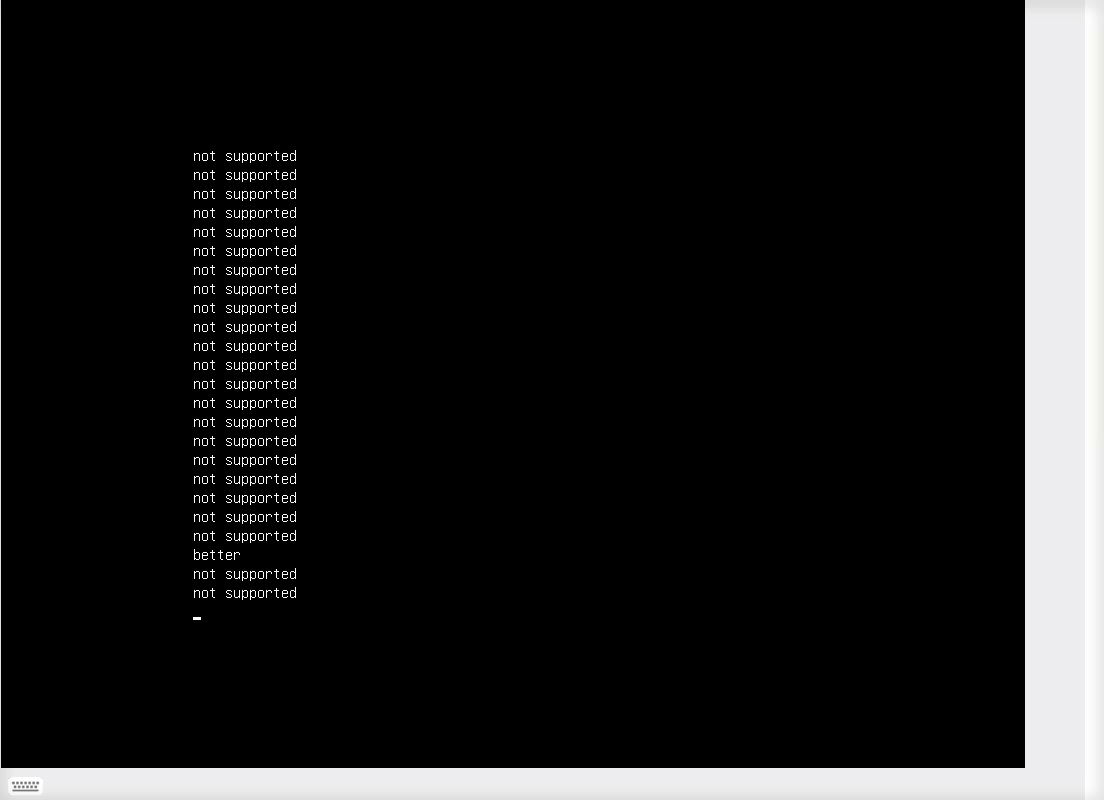

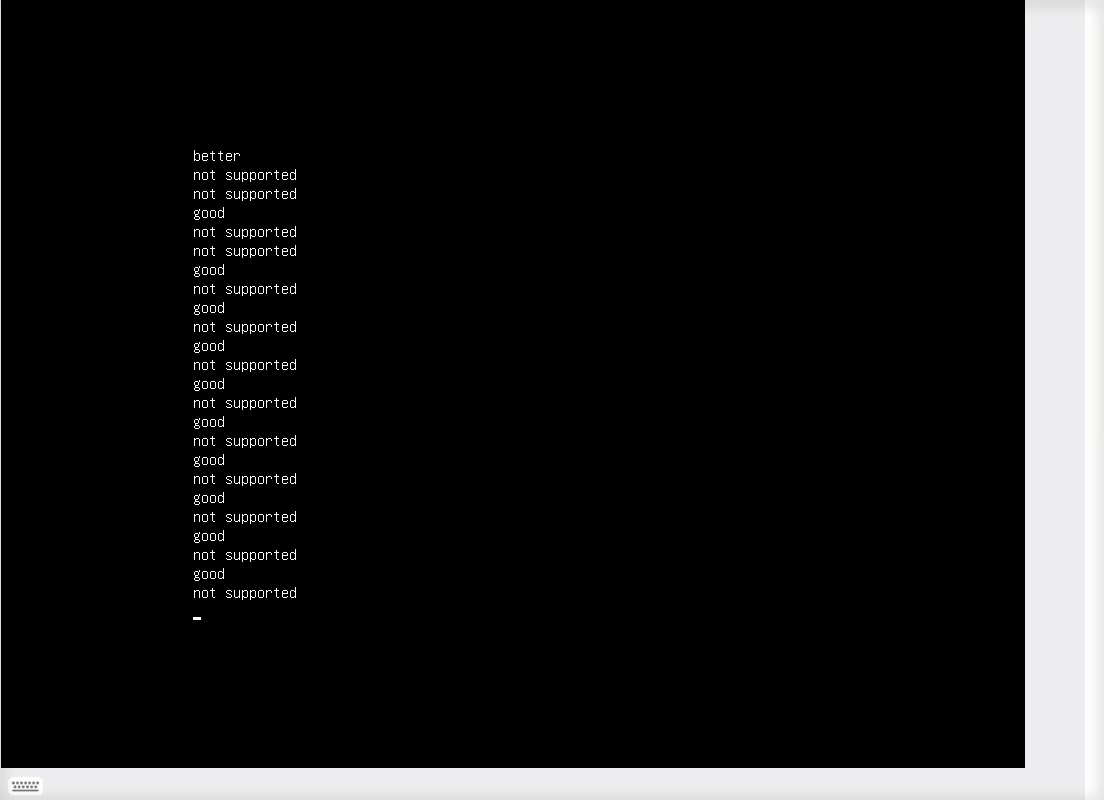

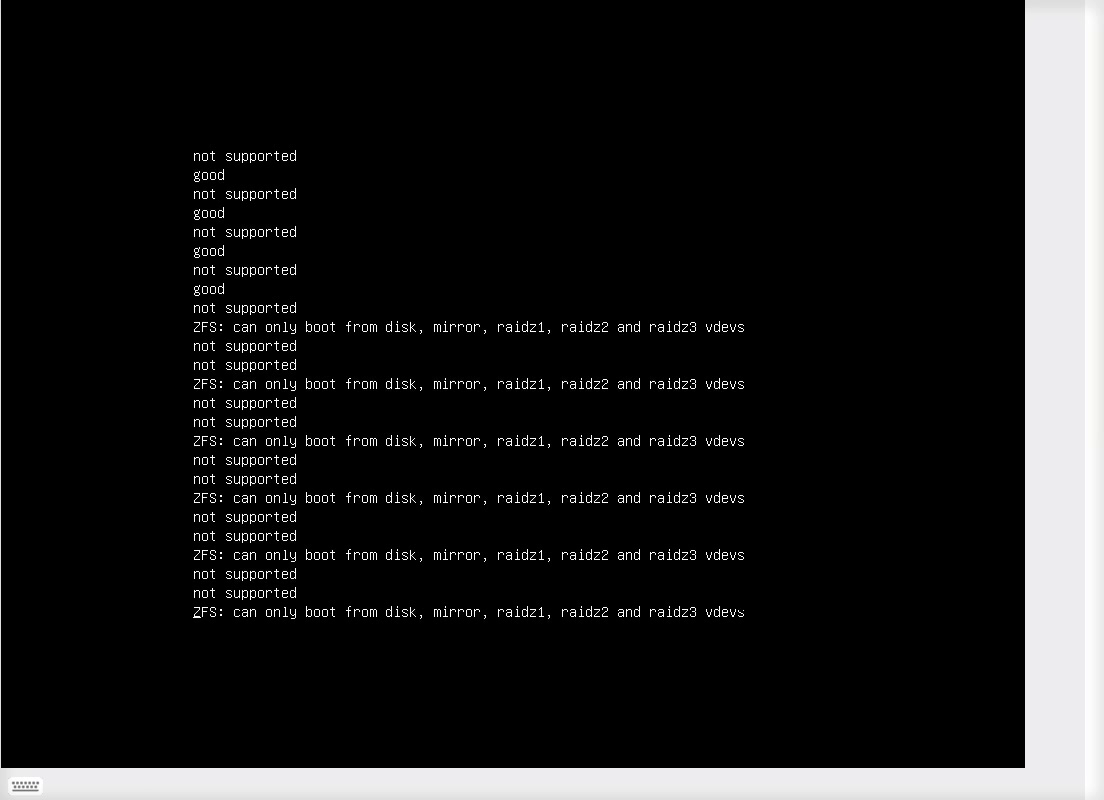

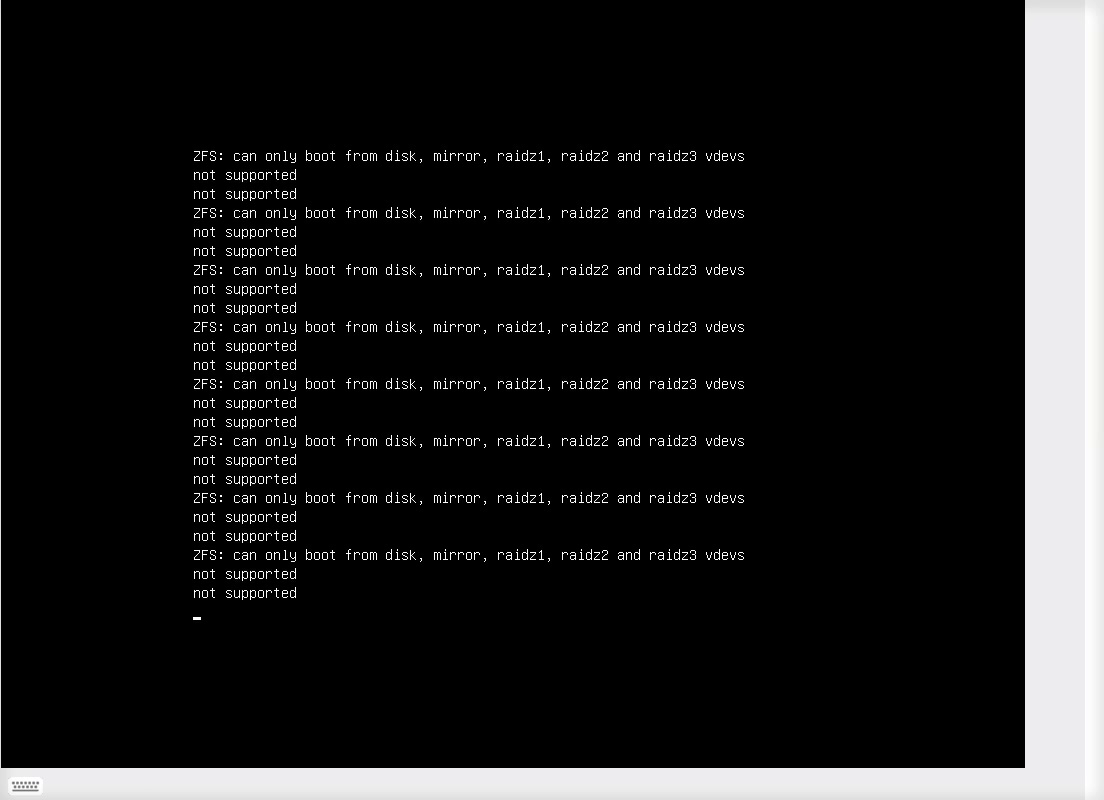

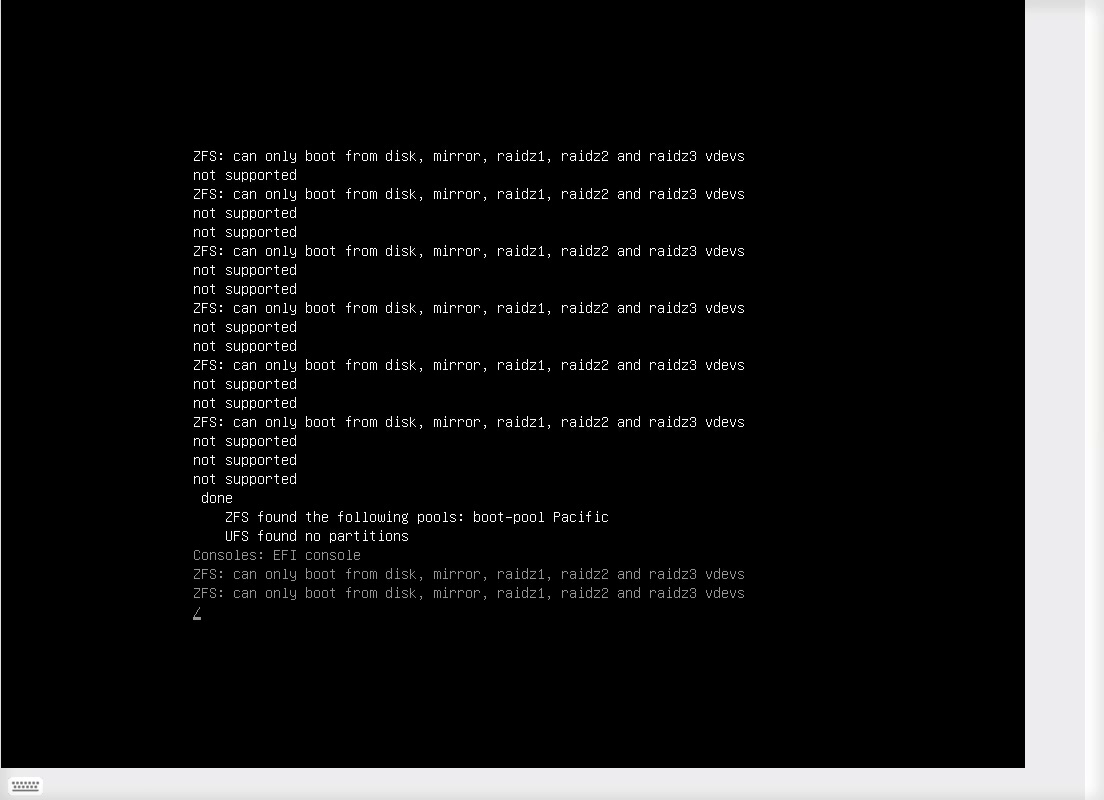


After a power outage, the server fails to boot, with the console showing:

Code:

    not supported
    ZFS: can only boot from disks, mirror, raidz1, raidz2 and raidz3 vdevs
    not supported
    (repeated)
    done
    ZFS found the following pools: boot-pool Pacific
    UFS found no partitions
    Consoles: EFI console
    ZFS: can only boot from disks, mirror, raidz1, raidz2 and raidz3 vdevs
    (repeated 10 times)
    \

where that final "\" is sometimes "-" and does not spin. Screenshots and video of the console are attached (some messages are only captured in a single frame).

This issue appears to be very similar to the unresolved posts "Unable to boot with data pool drives attached." and "TrueNAS Won't boot with Pool - UFS found no partitions."

Booting from the TrueNAS 12.0-U8 "ISO" on a USB flash drive*, and moving all disks to another server which boots from an ada0+ada1 mirror, both result in exactly the same failure, even when boot is limited in the "BIOS" to only the USB flash drive or the mirror. TrueNAS boots fine from the USB flash drive to the menu when the disks are removed. Note that the failure occurs before the TrueNAS splash screen and kernel, during BOOTX64.EFI.

Since the previous boot, ZFS errors caused da19 to be removed from the pool and replaced with hot spare da12 weeks ago. The resilver finished. A long S.M.A.R.T. test of da19 showed no errors. The pool remained marked DEGRADED. I did not try clearing the errors.

Code:

    # zpool status
      pool: Pacific
     state: DEGRADED
    status: One or more devices has experienced an unrecoverable error. An
            attempt was made to correct the error. Applications are unaffected.
    action: Determine if the device needs to be replaced, and clear the errors
            using 'zpool clear' or replace the device with 'zpool replace'.
       see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-9P
      scan: resilvered 6.71T in 2 days 22:36:43 with 0 errors on Fri Feb 4 05:56:32 2022
    config:

    NAME                                              STATE     READ WRITE CKSUM
    Pacific                                           DEGRADED     0     0     0
      raidz1-0                                        ONLINE       0     0     0
        gptid/e6e10598-d846-11eb-ae3e-ac1f6b0a390a    ONLINE       0     0     0
        gptid/e6fb145a-d846-11eb-ae3e-ac1f6b0a390a    ONLINE       0     0     0
        gptid/e74196fb-d846-11eb-ae3e-ac1f6b0a390a    ONLINE       0     0     0
        gptid/e7b73861-d846-11eb-ae3e-ac1f6b0a390a    ONLINE       0     0     0
        gptid/e6c726b0-d846-11eb-ae3e-ac1f6b0a390a    ONLINE       0     0     0
        gptid/e80cb298-d846-11eb-ae3e-ac1f6b0a390a    ONLINE       0     0     0
        gptid/e84ef3f3-d846-11eb-ae3e-ac1f6b0a390a    ONLINE       0     0     0
        gptid/e8c5ce48-d846-11eb-ae3e-ac1f6b0a390a    ONLINE       0     0     0
        gptid/e8b83d4c-d846-11eb-ae3e-ac1f6b0a390a    ONLINE       0     0     0
        gptid/e8fe9a5f-d846-11eb-ae3e-ac1f6b0a390a    ONLINE       0     0     0
      raidz1-1                                        DEGRADED     0     0     0
        spare-0                                       DEGRADED     0     0   292
          gptid/16a5238d-d847-11eb-ae3e-ac1f6b0a390a  DEGRADED     0     0   243  too many errors
          gptid/f805643f-d847-11eb-ae3e-ac1f6b0a390a  ONLINE       0     0     0
        gptid/1627b212-d847-11eb-ae3e-ac1f6b0a390a    ONLINE       0     0     0
        gptid/16b8b495-d847-11eb-ae3e-ac1f6b0a390a    ONLINE       0     0     0
        gptid/1737a1d1-d847-11eb-ae3e-ac1f6b0a390a    ONLINE       0     0     0
        gptid/17cf6bb6-d847-11eb-ae3e-ac1f6b0a390a    ONLINE       0     0     0
        gptid/17f006fa-d847-11eb-ae3e-ac1f6b0a390a    ONLINE       0     0     0
        gptid/185ea22f-d847-11eb-ae3e-ac1f6b0a390a    ONLINE       0     0     0
        gptid/18b2fcdb-d847-11eb-ae3e-ac1f6b0a390a    ONLINE       0     0     0
        gptid/19340582-d847-11eb-ae3e-ac1f6b0a390a    ONLINE       0     0     0
        gptid/1901ad03-d847-11eb-ae3e-ac1f6b0a390a    ONLINE       0     0     0
    spares
      gptid/f805643f-d847-11eb-ae3e-ac1f6b0a390a      INUSE     currently in use
      gptid/f820d5f6-d847-11eb-ae3e-ac1f6b0a390a      AVAIL

    errors: No known data errors
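To make it easier to pick out the entries that are not healthy in output like the above, here is a minimal Python sketch that filters the device rows of the `config:` table. The helper name `unhealthy_rows` and the regular expression are illustrative assumptions, not part of any ZFS or TrueNAS tooling, and the sketch only handles plain integer error counters.

```python
import re

# Hypothetical helper pattern: one device row of the `config:` table, i.e.
# NAME STATE READ WRITE CKSUM, optionally followed by a free-text note.
ROW = re.compile(
    r"^\s*(?P<name>\S+)\s+(?P<state>[A-Z]+)"
    r"\s+(?P<read>\d+)\s+(?P<write>\d+)\s+(?P<cksum>\d+)"
    r"(?:\s+(?P<note>.*))?$"
)


def unhealthy_rows(status_text):
    """Return (name, state, cksum, note) for rows that are not ONLINE
    or that report any read, write, or checksum errors."""
    found = []
    for line in status_text.splitlines():
        m = ROW.match(line)
        if m is None:
            # Header lines, blank lines, and spares rows carry no counters.
            continue
        read, write, cksum = (int(m[k]) for k in ("read", "write", "cksum"))
        if m["state"] != "ONLINE" or read or write or cksum:
            found.append((m["name"], m["state"], cksum, m["note"] or ""))
    return found


# A few rows copied from the status output above.
sample = """\
NAME STATE READ WRITE CKSUM
Pacific DEGRADED 0 0 0
raidz1-0 ONLINE 0 0 0
raidz1-1 DEGRADED 0 0 0
spare-0 DEGRADED 0 0 292
gptid/16a5238d-d847-11eb-ae3e-ac1f6b0a390a DEGRADED 0 0 243 too many errors
gptid/f805643f-d847-11eb-ae3e-ac1f6b0a390a ONLINE 0 0 0
"""

for row in unhealthy_rows(sample):
    print(row)
```

Run against the sample rows, this reports the pool, `raidz1-1`, `spare-0`, and the original disk in `spare-0`; the in-use spare itself shows as ONLINE with no errors.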

Long S.M.A.R.T. test result for da19:

Code:

    ID  Description       Status
    1   Extended offline  SUCCESS  Remaining: 0  Lifetime: 26682  Error: N/A

Firmware diagnostics show no problems with any hardware.

Assistance getting this back up and running would be most appreciated. I'm happy to provide more details and run tests; however, changes to hardware require a site visit.