It’s no secret that ZFS deduplication has had performance issues in the past. Use cases that demand high read performance have aligned well with deduplication, given that compressed and deduplicated data is stored only once in the ZFS read cache. Sustained write performance, however, has presented challenges: the overhead of managing dedup metadata has restricted performance and limited pool sizes. iX has started a new “Fast Dedup” project to address both the performance and scalability limits.

Previous Dedup Work

Special vdevs in TrueNAS 12 added a dedicated group of SSDs to hold the deduplication table, increasing performance and expanding acceptable use-cases. With a dedup vdev in place, the small random I/O patterns of the deduplication table (DDT) can be handled quickly by a few high-performance SSDs rather than competing with data writes to the pool HDDs. Our own TrueNAS Community users conducted research and shared detailed results of configurations that worked well with their workloads. While dedup write performance improved, several key limitations remained, such as the inherent write amplification of updating the DDT. Even with a dedup vdev, if the DDT does not fit entirely in RAM, performance of the ZFS pool is still much lower.
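To get a feel for when a DDT stops fitting in RAM, a back-of-the-envelope estimate can help. The sketch below is only illustrative: the function names are made up for this post, and the defaults (128 KiB average block size, roughly 320 bytes of RAM per DDT entry) are commonly quoted rules of thumb treated here as assumptions, not figures measured on any particular pool.

```python
TIB = 1024 ** 4
GIB = 1024 ** 3


def estimate_ddt_bytes(unique_data_bytes: int,
                       avg_block_bytes: int = 128 * 1024,  # assumed average block size
                       bytes_per_entry: int = 320) -> int:  # assumed rule-of-thumb entry size
    """Rough DDT size, assuming one table entry per unique block."""
    unique_blocks = -(-unique_data_bytes // avg_block_bytes)  # ceiling division
    return unique_blocks * bytes_per_entry


def ddt_fits_in_ram(unique_data_bytes: int, ram_budget_bytes: int) -> bool:
    """True if the estimated DDT fits within the RAM set aside for it."""
    return estimate_ddt_bytes(unique_data_bytes) <= ram_budget_bytes


if __name__ == "__main__":
    size = estimate_ddt_bytes(10 * TIB)
    print(f"Estimated DDT for 10 TiB of unique data: {size / GIB:.1f} GiB")
    print("Fits in a 16 GiB budget:", ddt_fits_in_ram(10 * TIB, 16 * GIB))
```

Under these assumptions, smaller average block sizes grow the table quickly, since the entry count scales inversely with block size.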

Proposals have been written previously for methods to improve deduplication, including Matt Ahrens’ Dedup Performance paper presented at the OpenZFS Developer Summit. Some of the ideas in this proposal are being used in the new project.

The Fast Dedup Project

iXsystems and Klara Systems have started a new Fast Dedup project in conjunction with the OpenZFS community. The work is underway and will require significant resources to complete.

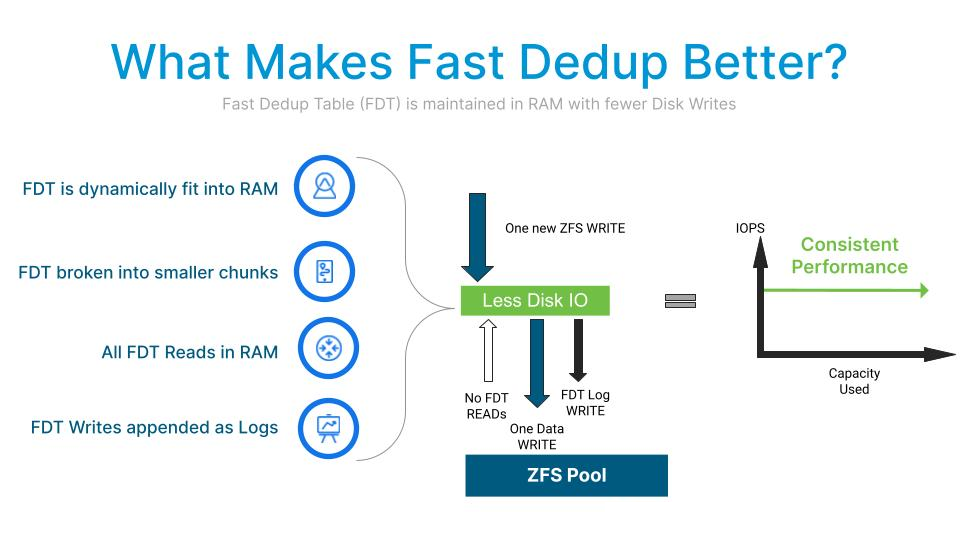

Fast Dedup will include a rearchitecting of the DDT to improve performance. The new Fast Dedup Tables (FDT) have several significant new properties:

- Dynamically sized to fit into RAM

- Broken into smaller, more manageable chunks for efficient updating

- Automatically pruned of non-dedupable data for better RAM efficiency

- Written through a log-based process that massively reduces write amplification

All of these will result in a significant increase in performance when compared to the existing dedup experience.
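The log-based and pruning ideas can be illustrated with a toy model. This is not the Fast Dedup design or any OpenZFS code: the class `ToyDedupLog`, its chunk layout, and the "prune anything with a reference count of 1" policy are all hypothetical simplifications. It only shows why buffering updates in a log and applying them in batches touches far fewer table chunks than rewriting a chunk on every block write.

```python
import hashlib
from collections import Counter


class ToyDedupLog:
    """Toy model: buffer refcount changes in an append-only log, then
    apply them to a chunked table in one batched pass."""

    def __init__(self, num_chunks: int = 16):
        self.num_chunks = num_chunks  # hypothetical: table split into chunks
        self.table = {}               # checksum -> reference count
        self.log = []                 # pending (checksum, delta) records
        self.chunk_writes = 0         # how many table chunks were rewritten

    def _chunk_of(self, checksum: bytes) -> int:
        return checksum[0] % self.num_chunks

    def write_block(self, data: bytes) -> None:
        """Record a new reference with a cheap sequential log append."""
        self.log.append((hashlib.sha256(data).digest(), 1))

    def flush(self) -> None:
        """Merge logged deltas, then rewrite each dirty chunk only once."""
        deltas = Counter()
        for checksum, delta in self.log:
            deltas[checksum] += delta
        dirty_chunks = set()
        for checksum, delta in deltas.items():
            self.table[checksum] = self.table.get(checksum, 0) + delta
            dirty_chunks.add(self._chunk_of(checksum))
        self.chunk_writes += len(dirty_chunks)
        self.log.clear()

    def prune_unique(self) -> int:
        """Drop entries seen only once (simplified stand-in for pruning)."""
        unique = [c for c, refs in self.table.items() if refs == 1]
        for checksum in unique:
            del self.table[checksum]
        return len(unique)


if __name__ == "__main__":
    t = ToyDedupLog()
    for i in range(1000):
        t.write_block(b"block-%d" % (i % 10))  # 10 distinct payloads
    t.flush()
    print("entries:", len(t.table), "chunk rewrites:", t.chunk_writes)
```

In this model, 1,000 block writes collapse into at most 10 chunk rewrites per flush, where an update-in-place table would rewrite a chunk on every write. The trade-off of the pruning step is that a pruned block will not be recognized as a duplicate if it shows up again later.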

iXsystems is looking for sponsors and collaborators who could benefit from this new Fast Dedup and leverage it to make new storage use-cases possible or improve on the performance of existing use-cases. If this sounds like your organization, click here to share your interest. We’ll then send you a sponsorship information package and answer any questions you might have.

Sponsorship contributions of between $5,000 and $30,000 are sought from commercial users of the free OpenZFS or the free TrueNAS software. There is no expectation that home or small users will contribute. TrueNAS Enterprise users have already effectively contributed via their business relationship with iX.

With funding, we aim to complete development this year. Once completed, Fast Dedup will be merged into OpenZFS and introduced as part of a TrueNAS update in 2024.