TrueNAS-12.0-U8

ESXi 6.7u3 (new and old servers)

Situation:

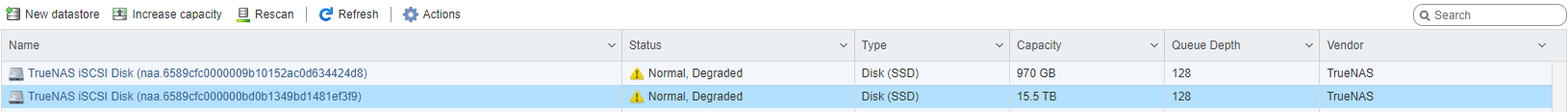

We are replacing our ESXi hosts with newer hardware in preparation for a move to ESXi 7.0.x. The new ESXi hosts see the TrueNAS LUN under Devices in ESXi, but they do not see the datastore on it. Instead, when we highlight the device, ESXi wants us to create a new datastore (see the screenshot above; we are focusing on the 15.5TB datastore).

No changes have been made to the TrueNAS device, pool, or zvol. The tech who was working on the entire operation cannot remember whether he hit "unmount" or "delete" on the datastore. If the tech hit "delete", has the data been destroyed on the TrueNAS? Is there a way to recover the data on the TrueNAS?

The tech did not take a snapshot of the pool or zvol before making any changes. We do have Veeam backups, which are on a separate NAS, but the VM resided on the datastore in question.

If you're wondering about the fate of the tech, his employment status does ride on recovering the datastore and/or its data without having to rely on the Veeam backups.
