Hi,

I added a new 4x4TB RAIDZ volume to my setup (I know RAIDZ2 is better, and I will change it in a few weeks) and began filling it with data from another FreeNAS box using rsync. Everything seemed to work fine until I shut the server down to physically move it.

After the restart, one of my datasets, which previously held about 3TB of data, is empty. The strange thing is that even though the data seems to be gone, the space has not been freed. So I am wondering whether I messed up somewhere and the data is just not being displayed anymore.

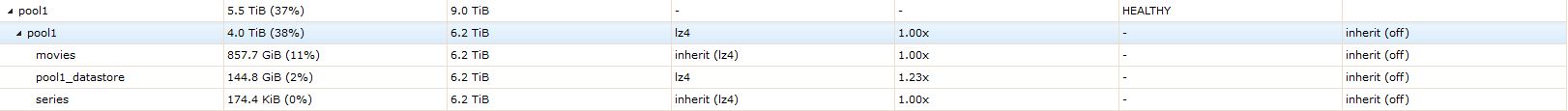

Here are some pics/copies:

It shows 37% used, but all the datasets together are only 13%? (The 3TB that was in series before is missing.)

Code:

    [root@Nas] /mnt# zfs list
    NAME                                                           USED  AVAIL  REFER  MOUNTPOINT
    freenas-boot                                                  2.05G  5.64G    31K  none
    freenas-boot/ROOT                                             2.01G  5.64G    25K  none
    freenas-boot/ROOT/9.10-STABLE-201604181743                    11.2M  5.64G   505M  /
    freenas-boot/ROOT/9.10-STABLE-201604261518                    11.1M  5.64G   511M  /
    freenas-boot/ROOT/9.10-STABLE-201605021851                    1.96G  5.64G   532M  /
    freenas-boot/ROOT/FreeNAS-5f91faf7204d20c5a639d34396e74b2b    10.8M  5.64G   541M  /
    freenas-boot/ROOT/FreeNAS-9.3-STABLE-201604150515             9.14M  5.64G   572M  /
    freenas-boot/ROOT/Initial-Install                                1K  5.64G   512M  legacy
    freenas-boot/ROOT/Pre-FreeNAS-9.3-STABLE-201602031011-791933     1K  5.64G   550M  legacy
    freenas-boot/ROOT/default                                     9.14M  5.64G   559M  legacy
    freenas-boot/grub                                             38.8M  5.64G  6.33M  legacy
    pool1                                                         3.98T  6.23T  3.00T  /mnt/pool1
    pool1/movies                                                   858G  6.23T   858G  /mnt/pool1/movies
    pool1/pool1_datastore                                          145G  6.23T   145G  -
    pool1/series                                                   174K  6.23T   174K  /mnt/pool1/series
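As a rough sanity check on the numbers in that listing, the short Python sketch below adds up the USED values of the three child datasets and compares the total with pool1's own USED and REFER values. The sizes are copied by hand from the output above, the helper name `to_bytes` is made up for this illustration, and the units are assumed to be powers of 1024, as ZFS prints them.

```python
# Sizes copied by hand from the `zfs list` output; units assumed to be powers of 1024.
UNITS = {"K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}


def to_bytes(size: str) -> float:
    """Convert a ZFS-style size string such as '858G' to bytes (hypothetical helper)."""
    return float(size[:-1]) * UNITS[size[-1]]


pool_used = to_bytes("3.98T")   # pool1 USED (includes all children)
pool_refer = to_bytes("3.00T")  # pool1 REFER (data in the pool's root dataset itself)

# USED of pool1/movies, pool1/pool1_datastore and pool1/series
children_used = sum(to_bytes(s) for s in ("858G", "145G", "174K"))

# Space counted against pool1 that no child dataset accounts for
unaccounted = pool_used - children_used

print(f"children total:           {children_used / UNITS['T']:.2f}T")
print(f"not in any child dataset: {unaccounted / UNITS['T']:.2f}T")
print(f"pool1 REFER:              {pool_refer / UNITS['T']:.2f}T")
```

The children add up to roughly 0.98T, which leaves about 3.00T that no child dataset accounts for, and that matches pool1's REFER value. This would be consistent with the missing files sitting in the pool's root dataset rather than in pool1/series, though that is only a guess from the numbers and not something the listing proves.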

So I am wondering whether I should just destroy the whole volume and start over, or whether the data could still be around somewhere and I can just restore it.

Thanks for your help!