
Important Announcement for the TrueNAS Community.

The TrueNAS Community has now been moved. This forum has become READ-ONLY for historical purposes. Please feel free to join us on the new TrueNAS Community Forums


Create zfs mirror by adding a drive?

- Thread starter TheShoeGuy


danb35

Hall of Famer

- Joined

- Aug 16, 2011

- Messages

- 15,504

Quote: root@freenas[~]# zpool attach Disco1 /dev/dee51a10-6414-11e9-b71e-3464a99a3b70 /dev/gptid/a1f796ce-b62f-11e9-afc0-3464a99a3b70

...so where did you get the second device in this command? The correct command would be zpool attach Disco1 gptid/deeff679-6414-11e9-b71e-3464a99a3b70 gptid/1e28994f-b7bf-11e9-93dc-3464a99a3b70.

TheShoeGuy

Yeah, you are totally right. But same error:

Code:

root@freenas[~]# zpool attach Disco1 gptid/deeff679-6414-11e9-b71e-3464a99a3b70 gptid/1e28994f-b7bf-11e9-93dc-3464a99a3b70
cannot attach gptid/1e28994f-b7bf-11e9-93dc-3464a99a3b70 to gptid/deeff679-6414-11e9-b71e-3464a99a3b70: gptid/1e28994f-b7bf-11e9-93dc-3464a99a3b70 is busy, or pool has removing/removed vdevs
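The first command quoted above passed /dev/dee51a10-... instead of a gptid/ label. A cheap sanity check before running zpool attach is to confirm that both arguments look like gptid labels. This is a minimal sketch; the helper names are hypothetical (not part of FreeNAS), and it assumes the labels use the usual 8-4-4-4-12 hex UUID form seen in this thread. It would not have avoided the "busy" error itself, which comes from the pool state rather than the device names.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if $1 looks like a gptid label,
# optionally prefixed with /dev/, e.g. gptid/deeff679-6414-11e9-b71e-3464a99a3b70
is_gptid_label() {
    printf '%s\n' "$1" |
        grep -Eq '^(/dev/)?gptid/[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}$'
}

# Check both zpool attach device arguments; report the first bad one.
check_attach_args() {
    for dev in "$1" "$2"; do
        if ! is_gptid_label "$dev"; then
            echo "not a gptid label: $dev" >&2
            return 1
        fi
    done
}
```

Usage would be along the lines of check_attach_args gptid/<existing> gptid/<new> && zpool attach Disco1 gptid/<existing> gptid/<new>, with the real labels substituted.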

danb35

Hall of Famer

- Joined

- Aug 16, 2011

- Messages

- 15,504

So we're back to what I said earlier--ZFS vdev removal is a new thing--prior to the release of 11.2 you couldn't have removed the second disk at all. It may be the case (it appears to be, based on the error message you got) that you simply can't add a second disk as a mirror of the first, when the first is in the state yours is in. I don't know one way or the other, though, and I'm not sure where to check. Perhaps a ZFS guru would know--@Arwen perhaps? @Ericloewe?

Quote: "Device removal requires that all vdevs be mirrors of the same width, due to the simplistic nature of the copy process. That would explain the error message."

I have the same type of disk (size and brand), and I can do anything with the second disk. What do I need to do to fix that?

What's my best option to fix that? Can you analyse my pool in this post #78?

Thanks
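Since the error text names "removing/removed vdevs", one quick check is whether zpool status reports a past device removal for the pool. A minimal sketch, assuming that OpenZFS prints a line beginning with remove: in the zpool status output once a top-level vdev has been removed; the function name is hypothetical, and it reads status text on stdin so it can also be tried on saved output.

```shell
#!/bin/sh
# Hypothetical helper: read `zpool status` text on stdin and succeed if it
# contains a "remove:" line (assumed to indicate a past or ongoing removal).
has_removal_history() {
    grep -Eq '^[[:space:]]*remove:'
}

# Usage on a real system (not run here):
#   zpool status Disco1 | has_removal_history && echo "Disco1 has removed vdevs"
```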

- Joined

- Feb 15, 2014

- Messages

- 20,194

Your case seems like an easy one to solve. Make a new single-disk pool with your new disk, replicate all your stuff over, destroy the old pool, rename the new one to match the old one and add the old disk to create a mirror out of the single disk.
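The procedure above, written out as a dry-run sketch that only prints CLI commands for review. Assumptions: the snapshot name migrate, the function name and argument order, and doing the rename with zpool export followed by zpool import <new> <old> are illustrative choices, not the poster's exact commands. On FreeNAS, creating the new pool and the final import are better done through the GUI so the middleware knows about the pool.

```shell
#!/bin/sh
# Hypothetical dry-run plan: prints commands, executes nothing.
#   $1 old pool       (existing single-disk pool, e.g. Disco1)
#   $2 new pool       (temporary single-disk pool on the new disk)
#   $3 old partition  (gptid/... of the old disk's data partition)
#   $4 new partition  (gptid/... of the new pool's data partition)
migrate_plan() {
    old="$1"; new="$2"; old_part="$3"; new_part="$4"
    snap="migrate"   # arbitrary snapshot name

    echo "zfs snapshot -r ${old}@${snap}"
    echo "zfs send -R ${old}@${snap} | zfs recv -F ${new}"
    echo "zpool destroy ${old}"
    echo "zpool export ${new}"
    # Importing under a new name renames the pool back to the old name.
    echo "zpool import ${new} ${old}"
    # The renamed pool lives on new_part; attach the old disk as its mirror.
    echo "zpool attach ${old} ${new_part} ${old_part}"
}
```

Verifying the replicated data before the zpool destroy step matters most here, since that step is the point of no return.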

I will try your solution.

Thanks a lot.

spg900ny

Dabbler

- Joined

- Feb 10, 2012

- Messages

- 28

Let's assume ada0 is your existing disk, ada1 is the new one, tank is the pool name.

Edit: Updated the steps to create the default 2GB swap partition.

Just wanted to say thank you for confirming this is still valid! I had a 2TB mirror where one drive had been online for eight years and started showing SMART errors. Rather than spend money on a new (old) 2TB drive to replace it (which seemed foolish since the 4TB drives weren't much more), I decided to get two 4TB drives and move the data off the 2TB drive. I figured that once the data was moved, I could take the failing 2TB drive offline, substitute the blank 4TB, and mirror it to the 4TB where I had just moved the data. Seemed simple enough, but there was no option in the GUI! I made yet another backup, then performed these steps, and I'm happy to say my drive is being resilvered! (I'm running 11.7-U7)

I do have a question though - when I do a glabel status, none of the other devices seem to have the swap partition (they were all configured using the GUI). Why was creation of that 2G partition necessary, and how does the mirror work if I seemingly have 2GB less space on the newly-added drive? Sorry if this is a basic question.

Thanks again!
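On the space question: zpool attach only accepts a new device that is at least as large as the existing member (the "same (or greater) size" rule stated elsewhere in this thread), and a mirror's usable size is limited by its smaller side. A minimal sketch for comparing the two data partitions before attaching; the function names are hypothetical, and it assumes that diskinfo on FreeBSD/FreeNAS prints the media size in bytes as its third field, which is worth confirming on your own system.

```shell
#!/bin/sh
# Succeed if a candidate partition ($2 bytes) is at least as large as the
# existing mirror member ($1 bytes).
partition_fits() {
    existing="$1"
    candidate="$2"
    [ "$candidate" -ge "$existing" ]
}

# Assumption: `diskinfo <dev>` prints name, sector size, media size in bytes, ...
partition_size() {
    diskinfo "$1" | awk '{print $3}'
}

# Usage on FreeNAS (not run here):
#   partition_fits "$(partition_size ada0p2)" "$(partition_size ada1p2)" &&
#       echo "ada1p2 is large enough"
```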

@Ericloewe I tried following this tutorial, but I had a lot of problems with my iocage jails: they do not start properly. The biggest problem is with GitLab and Nextcloud; I have a lot of information and configuration in them and I can't lose it. My VM works fine, though. I reverted to the "old" disk.

Possible solution: I will try again.

EDIT: So... the tutorial finally worked for me.

Now I need to confirm the replication is OK. How do I test this? Can I get confirmation of the result via the GUI or CLI? How do I check that it's all OK?

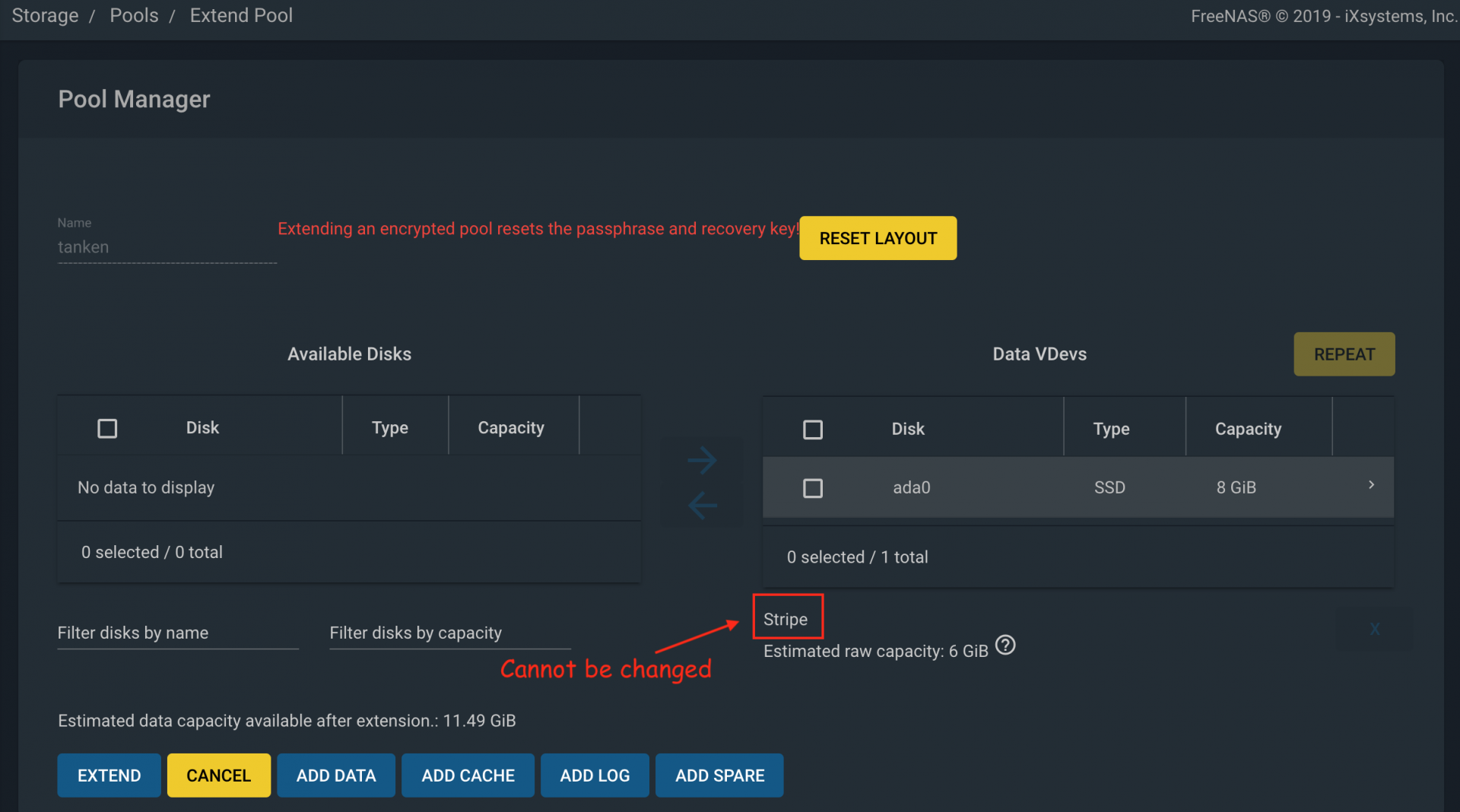


Thanks @danb35 for your help here and in the previous thread, and @Ericloewe for your knowledge and help.
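One way to sanity-check a replication like this, before the old pool is destroyed, is to compare the dataset lists of the two pools and then scrub the new one. A minimal sketch; the helper names are hypothetical, and it only compares dataset names (from zfs list -H -o name -r), not their contents, so a scrub plus a look at zpool status is still worthwhile.

```shell
#!/bin/sh
# Strip the leading pool name so "old/jails" and "new/jails" compare equal.
strip_pool() {
    sed -e 's|^[^/]*||' -e 's|^/||'
}

# $1, $2: files holding `zfs list -H -o name -r <pool>` output.
# Prints datasets present on only one side; empty output means same set.
compare_datasets() {
    a="$(mktemp)"; b="$(mktemp)"
    strip_pool < "$1" | sort > "$a"
    strip_pool < "$2" | sort > "$b"
    comm -3 "$a" "$b"
    rm -f "$a" "$b"
}

# Usage on FreeNAS (not run here):
#   zfs list -H -o name -r oldpool > /tmp/old.txt
#   zfs list -H -o name -r newpool > /tmp/new.txt
#   compare_datasets /tmp/old.txt /tmp/new.txt
#   zpool scrub newpool; zpool status newpool
```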


Just want to confirm it still works with FreeNAS-11.2-RC2. Change "zpool attach tank /dev/gptid/[gptid_of_the_existing_disk] /dev/gptid/[gptid_of_the_new_partition]" to "zpool attach tank gptid/[gptid_of_the_existing_disk] gptid/[gptid_of_the_new_partition]", and use the "rawuuid of ada1p2", not the "rawuuid of ada1p1". Thanks!!!
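The corrected form uses the label of ada1p2 (the freebsd-zfs partition) rather than ada1p1 (the swap partition). A minimal sketch that pulls that gptid out of glabel status output, assuming its usual three columns (Name, Status, Components); the helper name is hypothetical.

```shell
#!/bin/sh
# Read `glabel status` text on stdin and print the gptid label whose
# component is the partition given as $1 (e.g. ada1p2).
gptid_for() {
    part="$1"
    awk -v p="$part" '$3 == p && $1 ~ /^gptid\// { print $1 }'
}

# Usage on FreeNAS (not run here):
#   NEW=$(glabel status | gptid_for ada1p2)
#   zpool attach tank gptid/[gptid_of_the_existing_disk] "$NEW"
```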

Thanks, this helped me. I followed the steps given, and now I can see my newly added drive is resilvering. Perfect. For completeness, I'm quoting the steps with 1.5 inserted (as #2 below), since I could have ended up missing 1.5, and others may miss it too. Thanks a lot, Dusan!

----------------------------------------------------------------------

Dusan & James' rad guide to ZFS: Adding a drive to create a mirror of another in an existing ZFS pool (circa FreeNAS 9.1.1)

Here we're adding a drive labelled /dev/ada1 - Your exact drive name obviously matters vastly. Make sure you know the correct name for the drive you mean to add to your pool!

Upon completion of these steps, running zpool status should return a screen similar to mine; note the "resilver" comments in the output:

Note: in this case I have a drive for a ZFS SLOG; I expect most people won't have the logs section I do. ^_^
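To follow the resilver without re-running zpool status by hand, a small polling sketch. It assumes the scan: line of zpool status contains the text "resilver in progress" while the resilver runs; the helper name and the 5-minute interval are arbitrary choices.

```shell
#!/bin/sh
# Read `zpool status` text on stdin; succeed while a resilver is running.
resilver_running() {
    grep -q 'resilver in progress'
}

# Usage on FreeNAS (not run here): poll every 5 minutes, then show the result.
#   while zpool status tank | resilver_running; do sleep 300; done
#   zpool status tank
```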

Thank You sooooooooo much man!

Quote: Let's assume ada0 is your existing disk, ada1 is the new one, tank is the pool name.

Test this first in a VM to verify that you understand everything.

- gpart create -s gpt /dev/ada1

- gpart add -i 1 -b 128 -t freebsd-swap -s 2g /dev/ada1

- gpart add -i 2 -t freebsd-zfs /dev/ada1

- Run zpool status and note the gptid of the existing disk

- Run glabel status and find the gptid of the newly created partition. It is the gptid associated with ada1p2.

- zpool attach tank /dev/gptid/[gptid_of_the_existing_disk] /dev/gptid/[gptid_of_the_new_partition]

Edit: Updated the steps to create the default 2GB swap partition.
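The quoted steps, wrapped in a dry-run guard so the commands can be reviewed before anything touches a disk. This is a sketch under assumptions: the DRY_RUN variable and the function names are hypothetical, the two gptid arguments still have to be looked up with zpool status and glabel status as in the steps, and it uses the gptid/... form without /dev/ that another post in this thread reports working on 11.2-RC2.

```shell
#!/bin/sh
# Print commands unless DRY_RUN=0 is set explicitly (default: dry run).
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Partition the new disk: 2 GB swap at index 1, the rest as freebsd-zfs.
prepare_disk() {
    new_disk="$1"   # e.g. ada1: double-check this is really the new disk!
    run gpart create -s gpt "/dev/${new_disk}"
    run gpart add -i 1 -b 128 -t freebsd-swap -s 2g "/dev/${new_disk}"
    run gpart add -i 2 -t freebsd-zfs "/dev/${new_disk}"
}

# Attach the new partition as a mirror of the existing member.
attach_mirror() {
    pool="$1"; existing="$2"; new="$3"   # gptid/... labels
    run zpool attach "$pool" "$existing" "$new"
}
```

Running prepare_disk ada1 as-is only prints the three gpart commands; setting DRY_RUN=0 makes the same calls execute them.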

mgd

Dabbler

- Joined

- Jan 8, 2017

- Messages

- 46

@Ericloewe if I am guessing right, this is your original feature request which would (among other things) allow for converting a stripe to a mirror by adding a disk in the GUI:

It is now closed, and it looks as if it is included in the upcoming 11.3 release:

However, I just tried to add a disk to a stripe and convert it to a mirror in the GUI on a FreeNAS-11.3-RC1 test installation in a VM, and apparently it is not possible (or I have overlooked something).

(I cannot add a comment to the feature request, so I am writing here instead.)


- Joined

- Feb 15, 2014

- Messages

- 20,194

mgd

Dabbler

- Joined

- Jan 8, 2017

- Messages

- 46

@Ericloewe …and BTW, the method I described previously in this post no longer works in 11.3. So currently there is no way to transform a single-disk encrypted vdev into a mirrored vdev by adding a disk. So if you need to do that you would have to invest in two new drives instead of only one in order to create a new encrypted and mirrored pool, then copy all data over and discard/export the existing pool.

The step that fails in my previously described procedure is getting rid of the fake pool in FreeNAS's configuration. In 11.3 (and maybe also 11.2 but untested) you end up with a pool where the disk is offline and you cannot delete the pool from the GUI. If you try, you get this error and stack trace:

Error exporting/disconnecting pool.

[ENOENT] None: PoolDataset fake does not exist


Code:

Error: Traceback (most recent call last):
  File "/usr/local/lib/python3.7/site-packages/middlewared/main.py", line 128, in call_method
    result = await self.middleware.call_method(self, message)
  File "/usr/local/lib/python3.7/site-packages/middlewared/main.py", line 1113, in call_method
    return await self._call(message['method'], serviceobj, methodobj, params, app=app, io_thread=False)
  File "/usr/local/lib/python3.7/site-packages/middlewared/main.py", line 1061, in _call
    return await methodobj(*args)
  File "/usr/local/lib/python3.7/site-packages/middlewared/schema.py", line 949, in nf
    return await f(*args, **kwargs)
  File "/usr/local/lib/python3.7/site-packages/middlewared/plugins/pool.py", line 2218, in attachments
    return await self.middleware.call('pool.dataset.attachments', pool['name'])
  File "/usr/local/lib/python3.7/site-packages/middlewared/main.py", line 1122, in call
    app=app, pipes=pipes, job_on_progress_cb=job_on_progress_cb, io_thread=True,
  File "/usr/local/lib/python3.7/site-packages/middlewared/main.py", line 1061, in _call
    return await methodobj(*args)
  File "/usr/local/lib/python3.7/site-packages/middlewared/schema.py", line 949, in nf
    return await f(*args, **kwargs)
  File "/usr/local/lib/python3.7/site-packages/middlewared/plugins/pool.py", line 3123, in attachments
    dataset = await self._get_instance(oid)
  File "/usr/local/lib/python3.7/site-packages/middlewared/service.py", line 421, in _get_instance
    raise ValidationError(None, f'{self._config.verbose_name} {id} does not exist', errno.ENOENT)
middlewared.service_exception.ValidationError: [ENOENT] None: PoolDataset fake does not exist

mgd

Dabbler

- Joined

- Jan 8, 2017

- Messages

- 46

@Ericloewe But maybe something is happening after all:

The final comment points to this pull request:

…which seems to have been merged into FreeNAS 12.


Rafal Lukawiecki

Dabbler

- Joined

- Jul 23, 2017

- Messages

- 34

@mgd are you aware of a new workaround for this? I am stuck with an encrypted single-drive pool and no way to add a second one to create a mirror. In my defence, I am stuck because an older upgrade of FreeNAS (10.x to 11.2) messed up the status of encryption in its new and "improved" GUI. To resolve that, I have split the mirror, created a new encrypted single-drive pool and copied the data, but now, well, I am stuck. :)

As an aside, the new GUI is limiting: neither does it allow things to be done, nor does it let you fix things in CLI without then getting stroppy about the results. The whole reason I am here is because I was too eager and trusted the new GUI. :( My bad.

I suppose waiting for a new FreeNAS release (12) while keeping my fingers crossed for no drive failures is one option. Another option is to solve things in the CLI, tell the GUI to stuff itself, and revisit once things have been resolved in 12...


- Joined

- Jan 1, 2016

- Messages

- 9,700

You can always export the pool after fixing it in the CLI and import it in the GUI to have it back as normal.

You can certainly create a mirror from a working single-disk pool and an additional drive of the same (or greater) size.


mgd

Dabbler

- Joined

- Jan 8, 2017

- Messages

- 46

Quote: "@mgd are you aware of a new workaround for this? I am stuck with an encrypted single-drive pool and no way to add a second one to create a mirror. In my defence, I am stuck because an older upgrade of FreeNAS (10.x to 11.2) messed up the status of encryption in its new and "improved" GUI. To resolve that, I have split the mirror, created a new encrypted single-drive pool and copied the data, but now, well, I am stuck. :)"

No, I am sorry. My old workaround does not work in 11.3.

Quote: "As an aside, the new GUI is limiting: neither does it allow things to be done, nor does it let you fix things in CLI without then getting stroppy about the results. The whole reason I am here is because I was too eager and trusted the new GUI. :( My bad."

As a last resort, you could try switching back to the old GUI in 11.3. On the login page you can click “LEGACY WEB INTERFACE”. Maybe the workaround will work there, but I haven't tried it, so test it in a VM before risking your real storage pool.
