Sprint (Explorer) · Joined Mar 30, 2019 · Messages: 72

So this is off the back of another thread which I've marked as solved, but here's the brief history.

I decided I wanted to encrypt my pools, in case I ever need to send a drive off for repair or RMA.

I started with two Pools

Primary_Array = no Encryption

Secondary_Array = Legacy Encryption

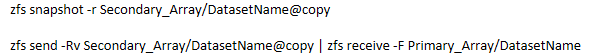

I started by moving everything off of the Secondary_Array onto the Primary_Array, so I could re-encrypt the Secondary using the new Encryption system in TrueNAS. This was done using:

This takes the entire dataset and its snapshots with it.

Once I'd moved all the data off the Secondary_Array, I tore down the pool, and recreated it using the new encryption options.

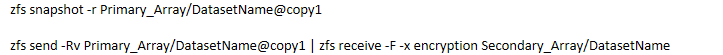

When I tried to copy everything back to the newly encrypted Secondary, I discovered that I needed to add the "-x encryption" flag to the zfs receive side of my zfs send commands in order for it to work.

This again works fine, and takes all the snapshots with it.

Once the Primary_Array was clear, I did a full dd wipe on all 8 drives and re-created the pool with the same new encryption method. I'm now looking to move my data back to its final home, which means moving around 20 TB of datasets back to the Primary_Array, and this is where it goes wrong.

When I attempt to zfs send datasets from the Secondary_Array back to the Primary_Array (both now encrypted using the same method), I'm getting errors. I'll be honest, I'm a little out of my depth, and a little nervous as my backup server took a nosedive this morning :(

This is what I see....

root@Nitrogen[~]# zfs send -Rv Secondary_Array/Public@copy2 | zfs receive -F -x encryption Primary_Array/Public

cannot send Secondary_Array/Public@copy2: encrypted dataset Secondary_Array/Public may not be sent with properties without the raw flag

warning: cannot send 'Secondary_Array/Public@copy2': backup failed

cannot receive: failed to read from stream

I've tried it both with and without the -x flag, but the error is the same.
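Going by the wording of the error, one variation to look at is the same replication send with the raw flag added. The following is only a sketch under assumptions: that `-w` is the raw option on this OpenZFS version, and that a raw stream keeps the source's encryption settings (which is why `-x encryption` is left off here). The helper name `raw_replicate_cmd` is made up for illustration, and it only prints the pipeline rather than running it.

```shell
#!/bin/sh
# Dry run: prints the pipeline instead of executing it, so nothing is
# touched on either pool. raw_replicate_cmd is a hypothetical helper name.
raw_replicate_cmd() {
    src_snap="$1"   # e.g. Secondary_Array/Public@copy2
    dst="$2"        # e.g. Primary_Array/Public
    # -R: replication stream (child datasets, snapshots, properties)
    # -w: raw send (assumed to be the "raw flag" the error refers to)
    # -v: verbose progress
    printf 'zfs send -Rwv %s | zfs receive -F %s\n' "$src_snap" "$dst"
}

raw_replicate_cmd Secondary_Array/Public@copy2 Primary_Array/Public
```

If that assumption holds, the received dataset would presumably stay locked with the Secondary_Array key rather than inheriting the Primary_Array one, which is worth checking before relying on it.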

It DOES work if I remove the -R flag, but then it ONLY takes the snapshot named in the command (@copy2), and not the earlier snapshots.
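Since the send without -R does work, a possible way to carry the earlier snapshots across is a full send of the oldest snapshot followed by an incremental `-I` send up to @copy2. This is an untested sketch: `FIRST_SNAP` is a placeholder for the oldest snapshot name (not given here), `incremental_cmds` is a made-up helper, and it only prints the two pipelines. It also would not pick up child datasets the way -R does.

```shell
#!/bin/sh
# Dry run: prints both pipelines instead of executing them.
# incremental_cmds and FIRST_SNAP are hypothetical names for illustration.
incremental_cmds() {
    src="$1"     # source dataset, e.g. Secondary_Array/Public
    first="$2"   # oldest snapshot name (placeholder)
    last="$3"    # newest snapshot name, e.g. copy2
    dst="$4"     # destination dataset, e.g. Primary_Array/Public
    # Step 1: full send of the oldest snapshot, without -R.
    printf 'zfs send -v %s@%s | zfs receive -F -x encryption %s\n' \
        "$src" "$first" "$dst"
    # Step 2: -I sends every snapshot between first and last in one stream.
    printf 'zfs send -v -I %s@%s %s@%s | zfs receive -F %s\n' \
        "$src" "$first" "$src" "$last" "$dst"
}

incremental_cmds Secondary_Array/Public FIRST_SNAP copy2 Primary_Array/Public
```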

What am I doing wrong?

(As a side question for bonus points: when I send some datasets over, I also want to change the compression. Is there a flag to do that?)
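For the compression question, what I have in mind is something like setting the property on the receiving side with `zfs receive -o`. A sketch only, assuming `-o compression=...` is accepted for this kind of stream; `lz4` is just an example value, `compress_override_cmd` is a made-up helper, and it prints the pipeline rather than running it.

```shell
#!/bin/sh
# Dry run: prints the pipeline instead of executing it.
# compress_override_cmd is a hypothetical helper; lz4 is an example value.
compress_override_cmd() {
    src_snap="$1"   # e.g. Secondary_Array/Public@copy2
    dst="$2"        # e.g. Primary_Array/Public
    algo="$3"       # compression value to set on the received dataset
    # -o compression=...: set the property on the destination at receive time
    printf 'zfs send -v %s | zfs receive -F -x encryption -o compression=%s %s\n' \
        "$src_snap" "$algo" "$dst"
}

compress_override_cmd Secondary_Array/Public@copy2 Primary_Array/Public lz4
```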

Thanks all in advance :)
