A set of disks went bad in my iSCSI store, which meant a complete rebuild of the pool. At the time I upgraded to 11.2-U5, but I could not set up the iSCSI device (now reported as a bug), so I reverted to 11.1-U7 by activating that boot environment in the GUI. I am now trying to set up iSCSI to ESXi 6.0 again with FreeNAS 11.1-U7, a combination that was working before (following John Keen's guide). I've also done a clean install of ESXi 6.

I just cannot get the zvol to show up on the ESXi side, and I am out of ideas. Any help to crack this problem would be really appreciated.

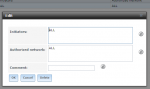

Basic settings are attached below as screenshots.

Troubleshooting or information so far:

- I've checked the storage devices on the ESXi side. Only the SSD system disk, the WD HDD and the CD-ROM show up:

Code:

```
[root@localhost:~] esxcli storage core device list
naa.600508e00000000030bf27996e48ce01
   Display Name: LSI Serial Attached SCSI Disk (naa.600508e00000000030bf27996e48ce01)
   Has Settable Display Name: true
   Size: 113487
   Device Type: Direct-Access
   Multipath Plugin: NMP
   Devfs Path: /vmfs/devices/disks/naa.600508e00000000030bf27996e48ce01
   Vendor: LSI
   Model: Logical Volume
   Revision: 3000
   SCSI Level: 6
   Is Pseudo: false
   Status: degraded
   Is RDM Capable: true
   Is Local: false
   Is Removable: false
   Is SSD: true
   Is VVOL PE: false
   Is Offline: false
   Is Perennially Reserved: false
   Queue Full Sample Size: 0
   Queue Full Threshold: 0
   Thin Provisioning Status: unknown
   Attached Filters:
   VAAI Status: unknown
   Other UIDs: vml.0200000000600508e00000000030bf27996e48ce014c6f67696361
   Is Shared Clusterwide: true
   Is Local SAS Device: false
   Is SAS: true
   Is USB: false
   Is Boot USB Device: false
   Is Boot Device: true
   Device Max Queue Depth: 128
   No of outstanding IOs with competing worlds: 32
   Drive Type: logical
   RAID Level: RAID1
   Number of Physical Drives: 2
   Protection Enabled: false
   PI Activated: false
   PI Type: 0
   PI Protection Mask: NO PROTECTION
   Supported Guard Types: NO GUARD SUPPORT
   DIX Enabled: false
   DIX Guard Type: NO GUARD SUPPORT
   Emulated DIX/DIF Enabled: false

naa.50014ee2bb6e806f
   Display Name: ATA Serial Attached SCSI Disk (naa.50014ee2bb6e806f)
   Has Settable Display Name: true
   Size: 1907729
   Device Type: Direct-Access
   Multipath Plugin: NMP
   Devfs Path: /vmfs/devices/disks/naa.50014ee2bb6e806f
   Vendor: ATA
   Model: WDC WD20EFAX-68F
   Revision: 0A82
   SCSI Level: 6
   Is Pseudo: false
   Status: degraded
   Is RDM Capable: true
   Is Local: false
   Is Removable: false
   Is SSD: false
   Is VVOL PE: false
   Is Offline: false
   Is Perennially Reserved: false
   Queue Full Sample Size: 0
   Queue Full Threshold: 0
   Thin Provisioning Status: unknown
   Attached Filters:
   VAAI Status: unknown
   Other UIDs: vml.020000000050014ee2bb6e806f574443205744
   Is Shared Clusterwide: true
   Is Local SAS Device: false
   Is SAS: true
   Is USB: false
   Is Boot USB Device: false
   Is Boot Device: false
   Device Max Queue Depth: 32
   No of outstanding IOs with competing worlds: 32
   Drive Type: physical
   RAID Level: NA
   Number of Physical Drives: 1
   Protection Enabled: false
   PI Activated: false
   PI Type: 0
   PI Protection Mask: NO PROTECTION
   Supported Guard Types: NO GUARD SUPPORT
   DIX Enabled: false
   DIX Guard Type: NO GUARD SUPPORT
   Emulated DIX/DIF Enabled: false

mpx.vmhba33:C0:T0:L0
   Display Name: Local ASUS CD-ROM (mpx.vmhba33:C0:T0:L0)
   Has Settable Display Name: false
   Size: 0
   Device Type: CD-ROM
   Multipath Plugin: NMP
   Devfs Path: /vmfs/devices/cdrom/mpx.vmhba33:C0:T0:L0
   Vendor: ASUS
   Model: DRW-24D5MT
   Revision: 1.00
   SCSI Level: 5
   Is Pseudo: false
   Status: on
   Is RDM Capable: false
   Is Local: true
   Is Removable: true
   Is SSD: false
   Is VVOL PE: false
   Is Offline: false
   Is Perennially Reserved: false
   Queue Full Sample Size: 0
   Queue Full Threshold: 0
   Thin Provisioning Status: unknown
   Attached Filters:
   VAAI Status: unsupported
   Other UIDs: vml.0005000000766d68626133333a303a30
   Is Shared Clusterwide: false
   Is Local SAS Device: false
   Is SAS: false
   Is USB: false
   Is Boot USB Device: false
   Is Boot Device: false
   Device Max Queue Depth: 1
   No of outstanding IOs with competing worlds: 32
   Drive Type: unknown
   RAID Level: unknown
   Number of Physical Drives: unknown
   Protection Enabled: false
   PI Activated: false
   PI Type: 0
   PI Protection Mask: NO PROTECTION
   Supported Guard Types: NO GUARD SUPPORT
   DIX Enabled: false
   DIX Guard Type: NO GUARD SUPPORT
   Emulated DIX/DIF Enabled: false
```
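To check a saved device list mechanically for the FreeNAS LUN, here is a rough Python sketch that parses the output of esxcli storage core device list into a dict keyed by device ID. It assumes the usual layout (device ID at column 0, indented "Key: Value" lines); parse_device_list is a hypothetical helper, and the sample is trimmed from the output above. The NAA it looks for is the naa option from ctl.conf with the 0x dropped, on the assumption that ESXi names the device after that designator, as it does for the other naa.* disks.

```python
import re

# Device IDs (naa.*, t10.*, mpx.*, eui.*) start at column 0 in the esxcli output;
# each device's properties follow as indented "Key: Value" lines.
DEVICE_ID = re.compile(r"^(naa|t10|mpx|eui)\.\S+$")


def parse_device_list(text: str) -> dict[str, dict[str, str]]:
    """Hypothetical helper: map each device ID to its properties."""
    devices: dict[str, dict[str, str]] = {}
    current = None
    for line in text.splitlines():
        stripped = line.strip()
        if not stripped:
            continue
        if not line[0].isspace() and DEVICE_ID.match(stripped):
            current = devices.setdefault(stripped, {})
        elif current is not None and ":" in stripped:
            # Split on the first colon only: values such as Devfs Path may contain colons.
            key, _, value = stripped.partition(":")
            current[key.strip()] = value.strip()
    return devices


# Trimmed from the output above.
sample = """\
naa.50014ee2bb6e806f
   Display Name: ATA Serial Attached SCSI Disk (naa.50014ee2bb6e806f)
   Size: 1907729
mpx.vmhba33:C0:T0:L0
   Display Name: Local ASUS CD-ROM (mpx.vmhba33:C0:T0:L0)
   Size: 0
"""

devices = parse_device_list(sample)
print(sorted(devices))  # ['mpx.vmhba33:C0:T0:L0', 'naa.50014ee2bb6e806f']
# Assumption: ESXi would name the FreeNAS LUN after its naa option in ctl.conf.
print("naa.6589cfc000000b2f7a820c76db024268" in devices)  # False
```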

- I looked at /etc/ctl.conf, which seems to match my settings, but I may be missing something:

Code:

```
root@freenas:/var/log # cat /etc/ctl.conf
portal-group default {
}

portal-group pg1 {
    tag 0x0001
    discovery-filter portal-name
    discovery-auth-group no-authentication
    listen 10.0.0.1:3260
    listen 10.0.1.1:3260
    option ha_shared on
}

lun "store-1" {
    ctl-lun 0
    path "/dev/zvol/vm-datastore/store-1"
    blocksize 512
    option pblocksize 0
    serial "ac1f6b2542fe00"
    device-id "iSCSI Disk ac1f6b2542fe00 "
    option vendor "FreeNAS"
    option product "iSCSI Disk"
    option revision "0123"
    option naa 0x6589cfc000000b2f7a820c76db024268
    option insecure_tpc on
    option rpm 7200
}

target iqn.2011-03.org.exam.istgt:iscsi-target-t {
    alias "iscsi-target-t"
    portal-group pg1 no-authentication
    lun 0 "store-1"
}
```
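To cross-check what the ESXi software iSCSI adapter should be pointed at, a rough Python sketch that pulls the portal listen addresses and the target-to-LUN mapping out of ctl.conf text. It is line-oriented and assumes the flat layout shown above (one directive per line, no nested blocks); it is not a full parser of the ctl.conf grammar, and summarize_ctl_conf is a hypothetical name.

```python
def summarize_ctl_conf(text: str) -> dict:
    """Collect portal listen addresses and target -> LUN mappings (hypothetical helper).

    Assumes one directive per line and `kind name {` ... `}` blocks without nesting.
    """
    portals: dict[str, list[str]] = {}
    targets: dict[str, dict] = {}
    block = None  # (kind, name) of the block currently being read
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        if line.endswith("{"):
            kind, _, name = line[:-1].strip().partition(" ")
            block = (kind, name.strip().strip('"'))
        elif line == "}":
            block = None
        elif block is not None:
            kind, name = block
            words = line.split()
            if kind == "portal-group" and words[0] == "listen":
                portals.setdefault(name, []).append(words[1])
            elif kind == "target":
                t = targets.setdefault(name, {"portal_groups": [], "luns": {}})
                if words[0] == "portal-group":
                    t["portal_groups"].append(words[1])
                elif words[0] == "lun":
                    t["luns"][int(words[1])] = words[2].strip('"')
    return {"portals": portals, "targets": targets}


# Trimmed from the ctl.conf above.
sample = """\
portal-group pg1 {
    listen 10.0.0.1:3260
    listen 10.0.1.1:3260
}
lun "store-1" {
    path "/dev/zvol/vm-datastore/store-1"
}
target iqn.2011-03.org.exam.istgt:iscsi-target-t {
    portal-group pg1 no-authentication
    lun 0 "store-1"
}
"""

summary = summarize_ctl_conf(sample)
print(summary["portals"])  # {'pg1': ['10.0.0.1:3260', '10.0.1.1:3260']}
print(summary["targets"])
```

Here the single target is exported only through portal group pg1, so the addresses ESXi discovers against would be 10.0.0.1:3260 and 10.0.1.1:3260.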

- I've successfully pinged across the storage network from both sides.

From FreeNAS I used plain ping to the ESXi host; from the ESXi side, vmkping -I vmk1 10.0.0.1 and vmkping -I vmk1 10.0.1.1 both succeeded.
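ping and vmkping only prove ICMP reachability; iSCSI discovery and login need a TCP connection to port 3260 on the portal addresses in ctl.conf. A minimal Python sketch of that check (iscsi_port_open is a hypothetical helper name). It tests from whichever machine runs it, not through the ESXi vmkernel port, so it complements rather than replaces the vmkping test; run on the FreeNAS box against its own portal addresses, it at least shows whether anything is listening on 3260.

```python
import socket


def iscsi_port_open(host: str, port: int = 3260, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


if __name__ == "__main__":
    # Portal addresses from the listen lines in ctl.conf.
    for portal in ("10.0.0.1", "10.0.1.1"):
        state = "open" if iscsi_port_open(portal) else "closed or unreachable"
        print(f"{portal}:3260 {state}")
```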

- ctladm devlist -v shows the LUN backed by the zvol:

Code:

```
root@freenas:/var/log # ctladm devlist -v
LUN Backend       Size (Blocks)   BS Serial Number    Device ID
  0 block            1887436800  512 ac1f6b2542fe00   iSCSI Disk ac1f6b2542fe00
      lun_type=0
      num_threads=14
      pblocksize=0
      vendor=FreeNAS
      product=iSCSI Disk
      revision=0123
      naa=0x6589cfc000000b2f7a820c76db024268
      insecure_tpc=on
      rpm=7200
      file=/dev/zvol/vm-datastore/store-1
      ctld_name=store-1
```
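As a sanity check on the numbers, the ctladm figures convert as follows in a short Python worked example. The MB unit for the esxcli Size field is inferred from the WD disk in the ESXi list (a 2 TB drive shows as 1907729), and the expected device name assumes ESXi uses the LUN's NAA designator, as it does for the naa.* disks listed there.

```python
# Figures from ctladm devlist -v and ctl.conf for LUN "store-1".
BLOCKS = 1887436800   # "Size (Blocks)" column
BLOCK_SIZE = 512      # "BS" column, bytes per block
NAA = "0x6589cfc000000b2f7a820c76db024268"  # option naa

size_bytes = BLOCKS * BLOCK_SIZE
size_gib = size_bytes / 2**30
# esxcli's "Size:" field appears to be in MB (2^20 bytes); the 2 TB WD disk shows 1907729.
size_esxi_mb = size_bytes // 2**20
# Assumption: ESXi names the device after the NAA designator, without the 0x.
expected_device = "naa." + NAA.removeprefix("0x")

print(size_bytes)       # 966367641600
print(size_gib)         # 900.0
print(size_esxi_mb)     # 921600
print(expected_device)  # naa.6589cfc000000b2f7a820c76db024268
```

So the zvol is a 900 GiB LUN, and if ESXi could see it, the device list would be expected to include an entry like naa.6589cfc000000b2f7a820c76db024268 with Size: 921600.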

The debug.log is full of lines like these:

Code:

```
Sep 5 14:13:21 freenas /alert.py: [ws4py:360] Closing message received (1000) 'b'''
Sep 5 14:14:21 freenas /alert.py: [ws4py:360] Closing message received (1000) 'b'''
Sep 5 14:15:21 freenas /alert.py: [ws4py:360] Closing message received (1000) 'b'''
Sep 5 14:16:22 freenas /alert.py: [ws4py:360] Closing message received (1000) 'b'''
Sep 5 14:17:22 freenas /alert.py: [ws4py:360] Closing message received (1000) 'b'''
Sep 5 14:18:23 freenas /alert.py: [ws4py:360] Closing message received (1000) 'b'''
Sep 5 14:19:23 freenas /alert.py: [ws4py:360] Closing message received (1000) 'b'''
Sep 5 14:20:24 freenas /alert.py: [ws4py:360] Closing message received (1000) 'b'''
Sep 5 14:21:24 freenas /alert.py: [ws4py:360] Closing message received (1000) 'b'''
Sep 5 14:22:25 freenas /alert.py: [ws4py:360] Closing message received (1000) 'b'''
```
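Every quoted line comes from /alert.py (ws4py) and they repeat about once a minute, so anything iSCSI-related is easy to miss. A small Python filter, assuming a plain-text syslog file; the pattern matches only this exact message, and interesting_lines is a hypothetical helper name.

```python
import re

# Matches only the repeating alert.py / ws4py "Closing message received" lines.
NOISE = re.compile(r"/alert\.py: \[ws4py:\d+\] Closing message received")


def interesting_lines(lines):
    """Yield log lines that are not the alert.py websocket-close noise."""
    for line in lines:
        if not NOISE.search(line):
            yield line.rstrip("\n")


# Two of the lines quoted above.
sample = [
    "Sep 5 14:13:21 freenas /alert.py: [ws4py:360] Closing message received (1000) 'b'''",
    "Sep 5 14:14:21 freenas /alert.py: [ws4py:360] Closing message received (1000) 'b'''",
]
kept = list(interesting_lines(sample))
print(kept)  # prints []
```

For the real file, something like with open("debug.log") as fh: print(*interesting_lines(fh), sep="\n") would show whatever is left once this message is removed.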

I'm really stuck and desperate to get this up and running, hopefully without doing a complete rebuild.

Thanks for any help.

Attachments

- assoc-target.PNG
- esxi_net_all.PNG
- esxi_net_one.PNG
- esxi_storage_adapter_all.PNG
- esxi_storage_adapter_detail.PNG
- extent.PNG
- networking.PNG
- initiators.PNG
- target.PNG