Adding Swap and L2ARC using Virtual Disks

This system has a fast 250GB M.2 NVMe disk installed, but the ESXi install and the FreeNAS boot disk only use about 12GB of it. Seems like a waste.

Although the usual recommendation is to give FreeNAS physical access to its disks, swap and L2ARC devices are ephemeral, so it doesn't really matter if they are virtual devices.

In my testing, the VMware pass-through virtual devices are quite performant.

So, I'll create a couple of virtual disks in the FreeNAS VM, and use those for swap and L2ARC.

I'll use a 16GB swap disk, but you could use whatever size you prefer. I'll use a 64GB L2ARC, as I found that on a FreeNAS install with 16GB of memory, a 128GB L2ARC causes performance issues. The more memory your FreeNAS VM has, the more L2ARC you can provide.
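One commonly cited reason an oversized L2ARC hurts is that every block cached in L2ARC needs a small header held in RAM, which eats into the ARC itself. The sketch below is only rough arithmetic for that overhead. The function name, the 16 KiB average block size and the 180 bytes per header are all assumptions for illustration; the real header size depends on the ZFS version and the real block size depends on your data.

```python
GIB = 1024 ** 3


def l2arc_header_ram_bytes(l2arc_bytes, avg_block_bytes, header_bytes_per_block):
    """Estimate the RAM used by in-memory headers that index an L2ARC of the given size."""
    blocks = l2arc_bytes // avg_block_bytes  # how many cached blocks fit on the device
    return blocks * header_bytes_per_block


# Assumed values for illustration only; they are not measured from a real pool.
AVG_BLOCK = 16 * 1024  # assumed average cached block size (16 KiB)
HEADER = 180           # assumed bytes of RAM per cached block

for size_gib in (64, 128):
    ram = l2arc_header_ram_bytes(size_gib * GIB, AVG_BLOCK, HEADER)
    print(f"{size_gib} GiB L2ARC -> roughly {ram / GIB:.2f} GiB of RAM for headers")
```

Under these assumptions the overhead scales linearly with L2ARC size, so doubling the cache doubles the RAM taken away from the ARC. Smaller average blocks make it worse.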

Increasing either disk's size later is quite easy, and since everything is virtual, you can just remove and re-add them.

I will set the Shares to High. This is the priority that VMware puts on satisfying the virtual disk's I/O requests, and since this is an AIO setup, if FreeNAS is waiting on an L2ARC or swap request, then everything is.

I will use Thin provisioning for these disks. The swap will be used only minimally, so it won't take any space unless it's being used, and the L2ARC is similar. When shutting down, I believe the disk usage is released.

Also, I will use the Independent - persistent disk mode. This means that when you back up the VM, the L2ARC and swap disks are not backed up, which saves space in your backup. Since they are ephemeral, that's fine: if you had to restore your VM from backup, it would be trivial to re-create and configure the swap and L2ARC volumes.

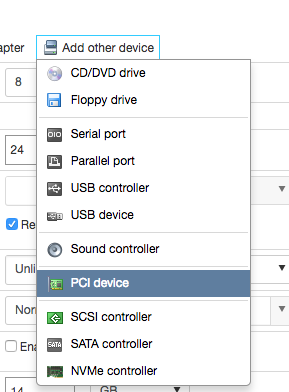

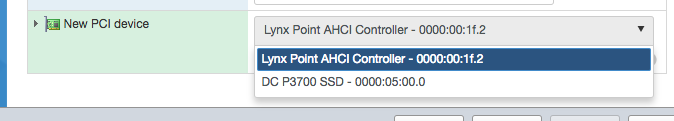

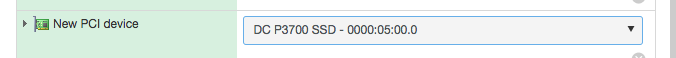

First, let's create the two virtual disks. If you are using PCIe pass-through, you'll need to shut down the VM to add disks to it.

Creating the virtual disks

Edit the FreeNAS VM settings, Add hard disk -> New hard disk, twice.

The two new hard disks appear...

As mentioned, two disks, one 64GB and one 16GB, both Thin provisioned, both Independent - persistent, and both with Shares set to High.

The disks will tell you their SCSI controller ID (e.g. 0:2 and 0:3). If you want to re-arrange the device ordering in FreeNAS, you can do that by adjusting the controller order.

Next save and boot your VM.

Once booted, you can see the disks in Storage -> View Disks.

It may be a good idea to use the Description field to label the swap disk. The L2ARC disk will no longer be listed once you add it to a pool, but the swap disk will always be present.

Adding L2ARC

Adding the L2ARC disk is trivial.

Storage -> Volumes, Volume Manager

Then in the Volume Manager

Select the Volume to Extend, add the L2ARC disk, and ensure you've set its type to Cache. When you're sure that everything is right, hit Extend Volume.

And your L2ARC has been added. Congratulations.

You can confirm this by clicking on the Volume Status icon. Go to Storage -> Volumes, select the top level of your pool, and the icon is at the bottom of the screen...

and the volume status with cache on da2p1
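If you'd rather check from a shell than the GUI, the same information is in the output of `zpool status`. Here is a minimal sketch that picks the devices out of the `cache` section of that output. The pool name `tank`, the sample text and the function names are placeholders I made up for illustration; on a real FreeNAS box the device may well show up as a gptid rather than da2p1.

```python
import subprocess


def cache_devices(status_text):
    """Return the device names listed under the 'cache' section of `zpool status` output."""
    devices = []
    in_cache = False
    for line in status_text.splitlines():
        fields = line.split()
        if not fields:
            in_cache = False  # a blank line ends the config table
            continue
        if fields == ["cache"]:
            in_cache = True   # start of the cache section
            continue
        if in_cache:
            # another one-word section header (e.g. "logs") or a footer ends the list
            if len(fields) == 1 or fields[0].endswith(":"):
                in_cache = False
            else:
                devices.append(fields[0])
    return devices


def pool_cache_devices(pool):
    """Run `zpool status <pool>` and return its cache devices (needs ZFS installed)."""
    result = subprocess.run(
        ["zpool", "status", pool], capture_output=True, text=True, check=True
    )
    return cache_devices(result.stdout)


# Illustrative sample only, shaped like typical `zpool status` output.
SAMPLE = """\
  pool: tank
 state: ONLINE
config:

\tNAME        STATE     READ WRITE CKSUM
\ttank        ONLINE       0     0     0
\t  da1       ONLINE       0     0     0
\tcache
\t  da2p1     ONLINE       0     0     0

errors: No known data errors
"""

print(cache_devices(SAMPLE))
```

On the FreeNAS VM itself you would call `pool_cache_devices("your-pool-name")` instead of parsing the sample.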

NOTE: When booting bare metal, your pool might not mount without being forced, since the virtual L2ARC is missing. If you want to, you can offline or remove the L2ARC before rebooting.
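For reference, removing a cache device from the command line is done with `zpool remove`. The sketch below just builds that command and, by default, only prints it rather than running it. The pool name `tank`, the device name and the helper functions are placeholders for illustration, and on FreeNAS it is generally safer to make pool changes through the GUI so its configuration stays in sync.

```python
import subprocess


def remove_cache_command(pool, device):
    """Build the `zpool remove` command that detaches a cache device from a pool."""
    if not pool or not device:
        raise ValueError("pool and device must be non-empty")
    return ["zpool", "remove", pool, device]


def remove_cache(pool, device, dry_run=True):
    """Print the command (dry run) or actually run it when dry_run is False."""
    cmd = remove_cache_command(pool, device)
    if dry_run:
        print("would run:", " ".join(cmd))
    else:
        subprocess.run(cmd, check=True)
    return cmd


# Dry run only; "tank" and "da2p1" are placeholder names.
remove_cache("tank", "da2p1")
```

Since the L2ARC holds no unique data, removing it this way loses nothing, and you can re-add the virtual disk as a cache device once you are back under ESXi.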