Hello guys,

I'm new to TrueNAS SCALE and found answers to most of my questions here. Great community!

I have one small issue that is crucial for me. My TrueNAS SCALE version is TrueNAS-SCALE-Bluefin-RC (TrueNAS SCALE Bluefin RC).

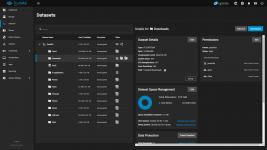

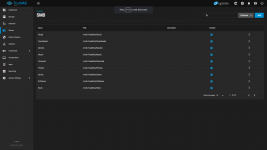

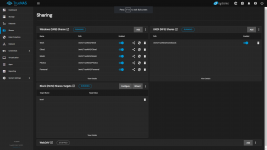

I'm attaching screenshots to help explain the issue I'm having.

I have an SMB share pointing to `/mnt/TrueNAS/Downloads` and an NFS share pointing to the same folder.

I want to give the Plex app (for example) access to my `/mnt/TrueNAS/Downloads` dataset. Whether it's Plex or another app, and whichever catalog I use (TrueCharts or Official), the app always gets stuck at deploying. Here is the full log:

2022-11-22 23:47:41

Updated LoadBalancer with new IPs: [] -> [192.168.0.8]

2022-11-22 23:47:40

Job completed

2022-11-22 23:47:40

Ensuring load balancer

2022-11-22 23:47:40

Applied LoadBalancer DaemonSet kube-system/svclb-plex-04b638ae

2022-11-22 23:47:32

Created pod: plex-manifests-7967c

2022-11-22 23:47:32

Successfully assigned ix-plex/plex-manifests-7967c to ix-truenas

2022-11-22 23:47:32

Add eth0 [172.16.4.62/16] from ix-net

2022-11-22 23:47:32

Container image "tccr.io/truecharts/ubuntu:jammy-20221101@sha256:4b9475e08c5180d4e7417dc6a18a26dcce7691e4311e5353dbb952645c5ff43f" already present on machine

2022-11-22 23:47:32

Created container plex-manifests

2022-11-22 23:47:32

Started container plex-manifests

2022-11-22 23:47:32

Error: Error response from daemon: invalid volume specification: '/mnt/TrueNAS/Downloads:/Downloads': Invalid mount path. /mnt/TrueNAS/Downloads. Following service(s) uses this path: `NFS Share, SMB Share`.

2022-11-22 23:47:31

Add eth0 [172.16.4.61/16] from ix-net

2022-11-22 23:47:31

Container image "tccr.io/truecharts/ubuntu:jammy-20221101@sha256:4b9475e08c5180d4e7417dc6a18a26dcce7691e4311e5353dbb952645c5ff43f" already present on machine

2022-11-22 23:47:30

Scaled up replica set plex-764768fbbc to 1 from 0

2022-11-22 23:47:30

Created pod: plex-764768fbbc-l8j5k

2022-11-22 23:47:30

Successfully assigned ix-plex/plex-764768fbbc-l8j5k to ix-truenas

2022-11-22 23:47:21

Scaled down replica set plex-764768fbbc to 0 from 1

2022-11-22 23:47:21

Deleted pod: plex-764768fbbc-nxdnw

2022-11-22 23:47:21

Error: Error response from daemon: invalid volume specification: '/mnt/TrueNAS/Downloads:/Downloads': Invalid mount path. /mnt/TrueNAS/Downloads. Following service(s) uses this path: `NFS Share, SMB Share`.

2022-11-22 23:47:20

Add eth0 [172.16.4.60/16] from ix-net

2022-11-22 23:47:20

Container image "tccr.io/truecharts/ubuntu:jammy-20221101@sha256:4b9475e08c5180d4e7417dc6a18a26dcce7691e4311e5353dbb952645c5ff43f" already present on machine

2022-11-22 23:47:20

Updated LoadBalancer with new IPs: [] -> [192.168.0.8]

2022-11-22 23:47:20

Deleting load balancer

2022-11-22 23:47:20

Deleted LoadBalancer DaemonSet kube-system/svclb-plex-1a8e0b71

2022-11-22 23:47:20

Deleted load balancer

2022-11-22 23:47:19

Job completed

2022-11-22 23:47:19

Ensuring load balancer

2022-11-22 23:47:19

Applied LoadBalancer DaemonSet kube-system/svclb-plex-1a8e0b71

2022-11-22 23:47:19

Scaled up replica set plex-764768fbbc to 1

2022-11-22 23:47:19

Created pod: plex-764768fbbc-nxdnw

2022-11-22 23:47:19

Successfully assigned ix-plex/plex-764768fbbc-nxdnw to ix-truenas

2022-11-22 23:47:11

Add eth0 [172.16.4.58/16] from ix-net

2022-11-22 23:47:11

Container image "tccr.io/truecharts/ubuntu:jammy-20221101@sha256:4b9475e08c5180d4e7417dc6a18a26dcce7691e4311e5353dbb952645c5ff43f" already present on machine

2022-11-22 23:47:11

Created container plex-manifests

2022-11-22 23:47:11

Started container plex-manifests

2022-11-22 23:47:10

Created pod: plex-manifests-dvj2c

2022-11-22 23:47:10

Successfully assigned ix-plex/plex-manifests-dvj2c to ix-truenas
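To check that every failed attempt in a log like this comes from the same validation error, a small Python sketch can pull the error events out. It assumes the log is pasted in the alternating timestamp/message layout shown above (only a few events are copied in here); `find_mount_errors` is a hypothetical helper, not something TrueNAS provides.

```python
import re

# A few events copied from the log above: a timestamp line, then a message line.
EVENTS = """\
2022-11-22 23:47:32
Error: Error response from daemon: invalid volume specification: '/mnt/TrueNAS/Downloads:/Downloads': Invalid mount path. /mnt/TrueNAS/Downloads. Following service(s) uses this path: `NFS Share, SMB Share`.
2022-11-22 23:47:30
Scaled up replica set plex-764768fbbc to 1 from 0
2022-11-22 23:47:21
Error: Error response from daemon: invalid volume specification: '/mnt/TrueNAS/Downloads:/Downloads': Invalid mount path. /mnt/TrueNAS/Downloads. Following service(s) uses this path: `NFS Share, SMB Share`.
"""

# Captures the host path, the container path and the services named between backticks.
ERROR_RE = re.compile(
    r"invalid volume specification: '(?P<host>[^:']+):(?P<container>[^']+)'"
    r".*?uses this path: `(?P<services>[^`]+)`"
)


def find_mount_errors(text):
    """Return (timestamp, host_path, container_path, services) for each error event."""
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    results = []
    # Pair each timestamp line with the message line that follows it.
    for timestamp, message in zip(lines[0::2], lines[1::2]):
        match = ERROR_RE.search(message)
        if match:
            services = [s.strip() for s in match.group("services").split(",")]
            results.append(
                (timestamp, match.group("host"), match.group("container"), services)
            )
    return results


for event in find_mount_errors(EVENTS):
    print(event)
```

Both attempts report the same host path and the same two services, which points at one validation check rather than a random deployment failure.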

If I stop SMB and NFS, or remove their shares on `/mnt/TrueNAS/Downloads`, all apps deploy without a problem. Interestingly, if I deploy the app with the services stopped and then start them again, everything works fine. I think the cause of the stuck deployment is the `Invalid mount path` error in the log, which says the path is used by the NFS and SMB shares.
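The behaviour described above (fails while the shares' services run, works when they are stopped, keeps working after they are started again) fits a check that runs only at deploy time. Here is a minimal Python model of such a check, written only from the error message. The function names and the `(service, path, service_running)` tuples are hypothetical, and treating parent/child paths as conflicts is an assumption, not confirmed TrueNAS behaviour.

```python
from pathlib import PurePosixPath


def paths_overlap(a, b):
    """True if a and b are the same path or one is nested inside the other.

    Treating nested paths as conflicts is an assumption of this model.
    """
    pa, pb = PurePosixPath(a), PurePosixPath(b)
    return pa == pb or pa in pb.parents or pb in pa.parents


def conflicting_services(host_path, shares):
    """Return the names of running services whose share path overlaps host_path.

    `shares` is a hypothetical list of (service_name, share_path, service_running).
    """
    return [
        name
        for name, share_path, running in shares
        if running and paths_overlap(host_path, share_path)
    ]


shares = [
    ("NFS Share", "/mnt/TrueNAS/Downloads", True),
    ("SMB Share", "/mnt/TrueNAS/Downloads", True),
]
print(conflicting_services("/mnt/TrueNAS/Downloads", shares))  # ['NFS Share', 'SMB Share']

# With both services stopped at deploy time, this model finds nothing to reject.
stopped = [(name, path, False) for name, path, _ in shares]
print(conflicting_services("/mnt/TrueNAS/Downloads", stopped))  # []
```

Because the model only runs when the app is deployed, starting the services afterwards does not trigger it again, which matches what I see.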

One more thing: if I configure the apps to use the NFS share (instead of a host path), everything works, so I don't think it's permissions-related. Or am I wrong?
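The NFS workaround is consistent with the error message: in Kubernetes terms the two options are different volume types. The dicts below follow the core/v1 `Volume` fields (`hostPath.path`, `hostPath.type`, `nfs.server`, `nfs.path`); the server name `nas.example.lan` is a hypothetical placeholder, and `host_paths_to_validate` is a hypothetical model assuming the check only inspects host path volumes.

```python
# Two ways a pod can see the same dataset, written as Kubernetes core/v1
# Volume objects (plain dicts). "nas.example.lan" is a hypothetical server name.
host_path_volume = {
    "name": "downloads",
    "hostPath": {"path": "/mnt/TrueNAS/Downloads", "type": "Directory"},
}
nfs_volume = {
    "name": "downloads",
    "nfs": {"server": "nas.example.lan", "path": "/mnt/TrueNAS/Downloads"},
}


def host_paths_to_validate(volumes):
    """Hypothetical model: only hostPath volumes are compared against shares."""
    return [v["hostPath"]["path"] for v in volumes if "hostPath" in v]


print(host_paths_to_validate([host_path_volume]))  # ['/mnt/TrueNAS/Downloads']
print(host_paths_to_validate([nfs_volume]))        # []
```

Under this assumption the NFS volume never reaches the share-conflict check, so it deploys even with both services running. That would make the failure a validation rule rather than a permissions problem, although NFS access still goes through the share's own export settings.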