pinoli


I run 6 mirrored pools on my TrueNAS SCALE Bluefin 22.12 install; the drive and controller layout is listed further down.

After 3-4 hours, at 3:00 AM, I received a SCALE notification together with an email saying that one of the new drives had experienced too many write errors (I believe it was 72) and had been put offline, so the pool was now degraded.

I checked dmesg for that event and found a run of write commands to sdf timing out after 30 seconds and being aborted.

After 36 minutes, at 3:36 AM, I received 6 emails, one for each pool, telling me "A Fail event had been detected on md device /dev/md12x." This time I got no notifications inside SCALE, but after checking dmesg for this event I saw one md "Disk failure" message per array.
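The 36-minute gap can be checked against the kernel log, since dmesg timestamps are seconds since boot. This is a minimal sketch that only uses the first timestamp of each dmesg excerpt quoted in this post; the function name `minutes_between` is made up for the illustration.

```python
def minutes_between(start_s: float, end_s: float) -> float:
    """Minutes elapsed between two dmesg timestamps (seconds since boot)."""
    return (end_s - start_s) / 60.0


first_task_abort = 13145.247058  # first "attempting task abort" line for sdf
first_md_failure = 15306.052673  # first md "Disk failure" line (md122, sdj1)

print(f"{minutes_between(first_task_abort, first_md_failure):.1f} min")  # prints "36.0 min"
```

The result lines up with the two email times (3:00 AM and 3:36 AM), so the emails and the two dmesg excerpts appear to describe the same pair of events.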

One drive in each pool issued a Fail event, across 3 controllers. What is weird is that zpool status didn't show any problem with the other pools; it only showed that the newly installed pool was degraded. Nor did TrueNAS SCALE show any notification (aside from the emails).

This happened while the initial copy was still running smoothly, even though the destination pool was now down to one drive.

After almost 9 hours, at 12:12 PM, I received another email telling me that the other disk in the new pool had now experienced write errors and was degraded, but it wasn't put offline since files were still being copied onto it.

The dmesg output for this event was identical to the first one in the chain.

After 3 more hours, the files finished copying and the new dataset was still standing, with one disk in a degraded state.

The other pools were active and healthy, as if nothing had happened. I ran smartctl -x /dev/sdX for each drive and got zero errors, including on the two new drives: all SMART tests passed with flying colors. (I can't attach the smartctl -x output for 10 drives here; if needed I'll post it in subsequent posts.)

This is quite bizarre and very scary, considering that all of my pools flipped at the same time, if what dmesg says is true.

Also, this cannot be related to the HBA PCIe controller, or any other controller since this was across all the available controllers inside my rig.

Moreover, the two new drives were sourced from different vendors to avoid getting the same batch, and I find it highly suspicious that both failed in the same way immediately after installation. SMART tests also tell me the drives are all right. I also put the two drives on two different ports on the HBA, using separate cables.

Plus, I copied another 700GB onto the new (now degraded) pool just in case, and didn't hit any new errors.

My hunch tells me drives and controllers are not at fault here, but other than that I have no clue. Also not sure if I should open a ticket about this.

If there is any other test I can perform on the new drives, let me know. Thanks.

P.S.: after the new pool got degraded and one of the drives was put offline, I started a SMART long test on that drive, which is still running (almost 24 hours in). I intend to resilver the pool once the test is over.

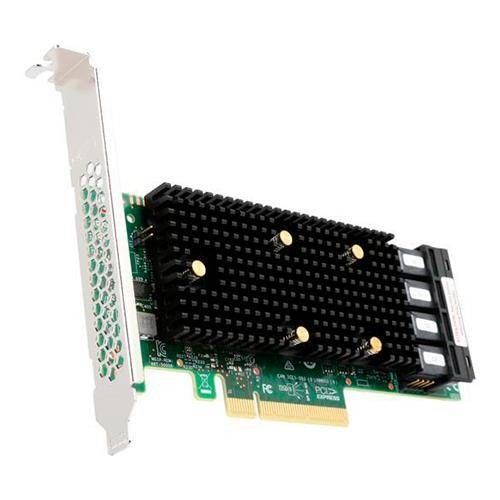

- 3x through a Broadcom 9405W-16i
  - 2x 18TB Seagate X18 ST18000NM004J SAS
  - 2x 18TB Seagate X18 ST18000NM004J SAS
  - 2x 18TB Seagate X18 ST18000NM000J SATA <-- new pool
- 2x through the mobo SATA controller
  - 2x 256GB Samsung 870 Evo <-- boot-pool
- 1x through the NVMe PCIe controller on the mobo
  - 2x 2TB Samsung 970 Evo Plus <-- system & app pool

dmesg output for the first event (3:00 AM, write timeouts on sdf):

[13145.247058] sd 0:0:1:0: attempting task abort!scmd(0x00000000143350ad), outstanding for 31936 ms & timeout 30000 ms

[13145.247952] sd 0:0:1:0: [sdf] tag#213 CDB: Write(16) 8a 00 00 00 00 01 9c d3 4f 60 00 00 08 00 00 00

[13145.249217] scsi target0:0:1: handle(0x0022), sas_address(0x300605b00fe37e21), phy(1)

[13145.250063] scsi target0:0:1: enclosure logical id(0x500605b00fe37e20), slot(8)

[13145.250920] scsi target0:0:1: enclosure level(0x0000), connector name( C2 )

[13145.279663] sd 0:0:1:0: task abort: SUCCESS scmd(0x00000000143350ad)

[13145.279688] mpt3sas_cm0: log_info(0x31130000): originator(PL), code(0x13), sub_code(0x0000)

[13145.281103] sd 0:0:1:0: [sdf] tag#213 FAILED Result: hostbyte=DID_TIME_OUT driverbyte=DRIVER_OK cmd_age=31s

[13145.283409] sd 0:0:1:0: [sdf] tag#213 CDB: Write(16) 8a 00 00 00 00 01 9c d3 4f 60 00 00 08 00 00 00

[13145.284861] blk_update_request: I/O error, dev sdf, sector 6926061408 op 0x1:(WRITE) flags 0x700 phys_seg 70 prio class 0

[13145.286329] zio pool=exos-x18-c vdev=/dev/disk/by-partuuid/5ae37f3c-2783-47d6-87ef-4f49769037cd error=5 type=2 offset=3543995891712 size=1048576 flags=40080c80

[13145.287862] sd 0:0:1:0: attempting task abort!scmd(0x0000000008b8b645), outstanding for 31920 ms & timeout 30000 ms

[13145.289387] sd 0:0:1:0: [sdf] tag#211 CDB: Write(16) 8a 00 00 00 00 01 9c d3 77 10 00 00 07 d0 00 00

[13145.290828] scsi target0:0:1: handle(0x0022), sas_address(0x300605b00fe37e21), phy(1)

[13145.291828] scsi target0:0:1: enclosure logical id(0x500605b00fe37e20), slot(8)

[13145.292732] scsi target0:0:1: enclosure level(0x0000), connector name( C2 )

[13145.293643] sd 0:0:1:0: No reference found at driver, assuming scmd(0x0000000008b8b645) might have completed

[13145.294569] sd 0:0:1:0: task abort: SUCCESS scmd(0x0000000008b8b645)

[13145.295500] sd 0:0:1:0: [sdf] tag#211 FAILED Result: hostbyte=DID_TIME_OUT driverbyte=DRIVER_OK cmd_age=31s

[13145.296430] sd 0:0:1:0: [sdf] tag#211 CDB: Write(16) 8a 00 00 00 00 01 9c d3 77 10 00 00 07 d0 00 00

[13145.297355] blk_update_request: I/O error, dev sdf, sector 6926071568 op 0x1:(WRITE) flags 0x4700 phys_seg 73 prio class 0

[13145.298299] zio pool=exos-x18-c vdev=/dev/disk/by-partuuid/5ae37f3c-2783-47d6-87ef-4f49769037cd error=5 type=2 offset=3544001093632 size=1024000 flags=40080c80

[13145.299293] sd 0:0:1:0: attempting task abort!scmd(0x00000000bd4f552d), outstanding for 31932 ms & timeout 30000 ms

[13145.300279] sd 0:0:1:0: [sdf] tag#212 CDB: Write(16) 8a 00 00 00 00 01 9c d3 7e e0 00 00 08 00 00 00

[13145.301253] scsi target0:0:1: handle(0x0022), sas_address(0x300605b00fe37e21), phy(1)

[13145.302242] scsi target0:0:1: enclosure logical id(0x500605b00fe37e20), slot(8)

[13145.303242] scsi target0:0:1: enclosure level(0x0000), connector name( C2 )

[13145.304241] sd 0:0:1:0: No reference found at driver, assuming scmd(0x00000000bd4f552d) might have completed

[13145.305259] sd 0:0:1:0: task abort: SUCCESS scmd(0x00000000bd4f552d)

[13145.306265] sd 0:0:1:0: [sdf] tag#212 FAILED Result: hostbyte=DID_TIME_OUT driverbyte=DRIVER_OK cmd_age=31s

[13145.307295] sd 0:0:1:0: [sdf] tag#212 CDB: Write(16) 8a 00 00 00 00 01 9c d3 7e e0 00 00 08 00 00 00

[13145.308327] blk_update_request: I/O error, dev sdf, sector 6926073568 op 0x1:(WRITE) flags 0x4700 phys_seg 103 prio class 0

[13145.309368] zio pool=exos-x18-c vdev=/dev/disk/by-partuuid/5ae37f3c-2783-47d6-87ef-4f49769037cd error=5 type=2 offset=3544002117632 size=1048576 flags=40080c80

[13145.310444] sd 0:0:1:0: attempting task abort!scmd(0x00000000b61440d8), outstanding for 31940 ms & timeout 30000 ms

[13145.311510] sd 0:0:1:0: [sdf] tag#209 CDB: Write(16) 8a 00 00 00 00 01 9c d3 6f 10 00 00 08 00 00 00

[13145.312581] scsi target0:0:1: handle(0x0022), sas_address(0x300605b00fe37e21), phy(1)

[13145.313691] scsi target0:0:1: enclosure logical id(0x500605b00fe37e20), slot(8)

[13145.314773] scsi target0:0:1: enclosure level(0x0000), connector name( C2 )

[13145.315854] sd 0:0:1:0: No reference found at driver, assuming scmd(0x00000000b61440d8) might have completed

[13145.316951] sd 0:0:1:0: task abort: SUCCESS scmd(0x00000000b61440d8)

[13145.318053] sd 0:0:1:0: [sdf] tag#209 FAILED Result: hostbyte=DID_TIME_OUT driverbyte=DRIVER_OK cmd_age=31s

[13145.319167] sd 0:0:1:0: [sdf] tag#209 CDB: Write(16) 8a 00 00 00 00 01 9c d3 6f 10 00 00 08 00 00 00

[13145.320285] blk_update_request: I/O error, dev sdf, sector 6926069520 op 0x1:(WRITE) flags 0x4700 phys_seg 79 prio class 0

[13145.321417] zio pool=exos-x18-c vdev=/dev/disk/by-partuuid/5ae37f3c-2783-47d6-87ef-4f49769037cd error=5 type=2 offset=3544000045056 size=1048576 flags=40080c80

[13145.322784] sd 0:0:1:0: attempting task abort!scmd(0x0000000057516cfd), outstanding for 31952 ms & timeout 30000 ms

[13145.324079] sd 0:0:1:0: [sdf] tag#207 CDB: Write(16) 8a 00 00 00 00 01 9c d3 5f 40 00 00 08 00 00 00

[13145.325317] scsi target0:0:1: handle(0x0022), sas_address(0x300605b00fe37e21), phy(1)

[13145.326560] scsi target0:0:1: enclosure logical id(0x500605b00fe37e20), slot(8)

[13145.327815] scsi target0:0:1: enclosure level(0x0000), connector name( C2 )

[13145.329083] sd 0:0:1:0: No reference found at driver, assuming scmd(0x0000000057516cfd) might have completed

[13145.330338] sd 0:0:1:0: task abort: SUCCESS scmd(0x0000000057516cfd)

[13145.331608] sd 0:0:1:0: [sdf] tag#207 FAILED Result: hostbyte=DID_TIME_OUT driverbyte=DRIVER_OK cmd_age=31s

[13145.332894] sd 0:0:1:0: [sdf] tag#207 CDB: Write(16) 8a 00 00 00 00 01 9c d3 5f 40 00 00 08 00 00 00

[13145.334159] blk_update_request: I/O error, dev sdf, sector 6926065472 op 0x1:(WRITE) flags 0x4700 phys_seg 70 prio class 0

[13145.335459] zio pool=exos-x18-c vdev=/dev/disk/by-partuuid/5ae37f3c-2783-47d6-87ef-4f49769037cd error=5 type=2 offset=3543997972480 size=1048576 flags=40080c80

[13145.336793] sd 0:0:1:0: attempting task abort!scmd(0x000000009f37fabf), outstanding for 31968 ms & timeout 30000 ms

[13145.338060] sd 0:0:1:0: [sdf] tag#208 CDB: Write(16) 8a 00 00 00 00 01 9c d3 67 40 00 00 07 d0 00 00

[13145.339332] scsi target0:0:1: handle(0x0022), sas_address(0x300605b00fe37e21), phy(1)

[13145.340598] scsi target0:0:1: enclosure logical id(0x500605b00fe37e20), slot(8)

[13145.341864] scsi target0:0:1: enclosure level(0x0000), connector name( C2 )

[13145.343132] sd 0:0:1:0: No reference found at driver, assuming scmd(0x000000009f37fabf) might have completed

[13145.344417] sd 0:0:1:0: task abort: SUCCESS scmd(0x000000009f37fabf)

[13145.345705] sd 0:0:1:0: [sdf] tag#208 FAILED Result: hostbyte=DID_TIME_OUT driverbyte=DRIVER_OK cmd_age=31s

[13145.346998] sd 0:0:1:0: [sdf] tag#208 CDB: Write(16) 8a 00 00 00 00 01 9c d3 67 40 00 00 07 d0 00 00

[13145.348299] blk_update_request: I/O error, dev sdf, sector 6926067520 op 0x1:(WRITE) flags 0x4700 phys_seg 65 prio class 0

[13145.349612] zio pool=exos-x18-c vdev=/dev/disk/by-partuuid/5ae37f3c-2783-47d6-87ef-4f49769037cd error=5 type=2 offset=3543999021056 size=1024000 flags=40080c80

[13145.350959] sd 0:0:1:0: attempting task abort!scmd(0x00000000768d6a02), outstanding for 32032 ms & timeout 30000 ms

[13145.352313] sd 0:0:1:0: [sdf] tag#206 CDB: Write(16) 8a 00 00 00 00 01 9c d3 57 60 00 00 07 e0 00 00

[13145.353659] scsi target0:0:1: handle(0x0022), sas_address(0x300605b00fe37e21), phy(1)

[13145.355015] scsi target0:0:1: enclosure logical id(0x500605b00fe37e20), slot(8)

[13145.356373] scsi target0:0:1: enclosure level(0x0000), connector name( C2 )

[13145.357748] sd 0:0:1:0: No reference found at driver, assuming scmd(0x00000000768d6a02) might have completed

[13145.359121] sd 0:0:1:0: task abort: SUCCESS scmd(0x00000000768d6a02)

[13145.360507] sd 0:0:1:0: [sdf] tag#206 FAILED Result: hostbyte=DID_TIME_OUT driverbyte=DRIVER_OK cmd_age=32s

[13145.361905] sd 0:0:1:0: [sdf] tag#206 CDB: Write(16) 8a 00 00 00 00 01 9c d3 57 60 00 00 07 e0 00 00

[13145.363309] blk_update_request: I/O error, dev sdf, sector 6926063456 op 0x1:(WRITE) flags 0x4700 phys_seg 63 prio class 0

[13145.364720] zio pool=exos-x18-c vdev=/dev/disk/by-partuuid/5ae37f3c-2783-47d6-87ef-4f49769037cd error=5 type=2 offset=3543996940288 size=1032192 flags=40080c80

[13145.366161] sd 0:0:1:0: attempting task abort!scmd(0x000000003eb005f8), outstanding for 32056 ms & timeout 30000 ms

[13145.367602] sd 0:0:1:0: [sdf] tag#205 CDB: Write(16) 8a 00 00 00 00 01 9c d3 47 90 00 00 07 d0 00 00

[13145.369045] scsi target0:0:1: handle(0x0022), sas_address(0x300605b00fe37e21), phy(1)

[13145.370498] scsi target0:0:1: enclosure logical id(0x500605b00fe37e20), slot(8)

[13145.371953] scsi target0:0:1: enclosure level(0x0000), connector name( C2 )

[13145.373420] sd 0:0:1:0: No reference found at driver, assuming scmd(0x000000003eb005f8) might have completed

[13145.374884] sd 0:0:1:0: task abort: SUCCESS scmd(0x000000003eb005f8)

[13145.376354] sd 0:0:1:0: [sdf] tag#205 FAILED Result: hostbyte=DID_TIME_OUT driverbyte=DRIVER_OK cmd_age=32s

[13145.377832] sd 0:0:1:0: [sdf] tag#205 CDB: Write(16) 8a 00 00 00 00 01 9c d3 47 90 00 00 07 d0 00 00

[13145.379316] blk_update_request: I/O error, dev sdf, sector 6926059408 op 0x1:(WRITE) flags 0x700 phys_seg 76 prio class 0

[13145.380816] zio pool=exos-x18-c vdev=/dev/disk/by-partuuid/5ae37f3c-2783-47d6-87ef-4f49769037cd error=5 type=2 offset=3543994867712 size=1024000 flags=40080c80

[13146.114654] sd 0:0:1:0: Power-on or device reset occurred
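As a sanity check on the log above, the CDB bytes of each timed-out command can be decoded and compared with the sector and size printed in the following `blk_update_request` and `zio` lines. This is a minimal sketch: the helper name `decode_write16` is made up for the illustration, and the 512-byte sector size is an assumption (it is the unit the kernel uses when printing sector numbers).

```python
from typing import Tuple

SECTOR_BYTES = 512  # assumption: 512-byte logical sectors


def decode_write16(cdb_hex: str) -> Tuple[int, int]:
    """Return (starting LBA, block count) from a WRITE(16) CDB hex dump."""
    cdb = bytes.fromhex(cdb_hex)  # whitespace between byte pairs is ignored
    if len(cdb) != 16 or cdb[0] != 0x8A:
        raise ValueError("not a 16-byte WRITE(16) CDB")
    lba = int.from_bytes(cdb[2:10], "big")      # bytes 2-9: logical block address
    blocks = int.from_bytes(cdb[10:14], "big")  # bytes 10-13: transfer length
    return lba, blocks


# CDB of tag#213, copied from the log above.
lba, blocks = decode_write16("8a 00 00 00 00 01 9c d3 4f 60 00 00 08 00 00 00")
print(lba, blocks * SECTOR_BYTES)  # prints "6926061408 1048576"
```

The decoded LBA matches `sector 6926061408` and the length matches `size=1048576` in the zio line for the same tag, so these look like ordinary 1 MiB ZFS writes that timed out rather than malformed commands.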

dmesg output for the second event (3:36 AM, md Fail events):

[15306.052673] md/raid1:md122: Disk failure on sdj1, disabling device.

md/raid1:md122: Operation continuing on 1 devices.

[15306.419884] md122: detected capacity change from 4190080 to 0

[15306.421398] md: md122 stopped.

[15308.804717] md/raid1:md123: Disk failure on sdh1, disabling device.

md/raid1:md123: Operation continuing on 1 devices.

[15308.995956] md123: detected capacity change from 4190080 to 0

[15308.997482] md: md123 stopped.

[15309.901251] md/raid1:md124: Disk failure on sdi1, disabling device.

md/raid1:md124: Operation continuing on 1 devices.

[15310.107277] md124: detected capacity change from 4190080 to 0

[15310.108818] md: md124 stopped.

[15310.628166] md/raid1:md126: Disk failure on sdb4, disabling device.

md/raid1:md126: Operation continuing on 1 devices.

[15310.955525] md126: detected capacity change from 33519616 to 0

[15310.956959] md: md126 stopped.

[15311.164649] md/raid1:md127: Disk failure on nvme0n1p1, disabling device.

md/raid1:md127: Operation continuing on 1 devices.

[15311.344211] md127: detected capacity change from 4190080 to 0

[15311.345664] md: md127 stopped.

[15311.787724] md/raid1:md125: Disk failure on sdd1, disabling device.

md/raid1:md125: Operation continuing on 1 devices.

[15311.972964] md125: detected capacity change from 4190080 to 0

[15311.974416] md: md125 stopped.

[15316.471064] md: md126 stopped.

[15316.490812] md: md124 stopped.

[15316.510425] md: md123 stopped.

[15316.634605] md/raid1:md127: not clean -- starting background reconstruction

[15316.636111] md/raid1:md127: active with 2 out of 2 mirrors

[15316.637676] md127: detected capacity change from 0 to 4190080

[15316.647024] md: resync of RAID array md127

[15316.986642] md/raid1:md126: not clean -- starting background reconstruction

[15316.988101] md/raid1:md126: active with 2 out of 2 mirrors

[15316.989590] md126: detected capacity change from 0 to 4190080

[15317.008469] md: resync of RAID array md126

[15317.884426] md/raid1:md125: not clean -- starting background reconstruction

[15317.885910] md/raid1:md125: active with 2 out of 2 mirrors

[15317.887366] md125: detected capacity change from 0 to 4190080

[15318.053212] md: resync of RAID array md125

[15318.344159] md/raid1:md124: not clean -- starting background reconstruction

[15318.345630] md/raid1:md124: active with 2 out of 2 mirrors

[15318.347084] md124: detected capacity change from 0 to 4190080

[15318.357314] md: resync of RAID array md124

[15319.282522] md/raid1:md123: not clean -- starting background reconstruction

[15319.283987] md/raid1:md123: active with 2 out of 2 mirrors

[15319.285473] md123: detected capacity change from 0 to 4190080

[15319.288991] md: resync of RAID array md123

[15319.575761] Adding 2095036k swap on /dev/mapper/md127. Priority:-2 extents:1 across:2095036k FS

[15320.844446] Adding 2095036k swap on /dev/mapper/md126. Priority:-3 extents:1 across:2095036k FS

[15321.866974] Adding 2095036k swap on /dev/mapper/md125. Priority:-4 extents:1 across:2095036k FS

[15323.368532] Adding 2095036k swap on /dev/mapper/md124. Priority:-5 extents:1 across:2095036k FS

[15323.461809] Adding 2095036k swap on /dev/mapper/md123. Priority:-6 extents:1 across:2095036k SSFS

[15327.498384] md: md127: resync done.

[15329.690523] md: md124: resync done.

[15329.898522] md: md123: resync done.

[15330.833792] md: md126: resync done.

[15336.982469] md/raid1:md123: Disk failure on nvme0n1p1, disabling device.

md/raid1:md123: Operation continuing on 1 devices.

[15337.159918] md123: detected capacity change from 4190080 to 0

[15337.161167] md: md123 stopped.

[15337.684205] md/raid1:md124: Disk failure on sdd1, disabling device.

md/raid1:md124: Operation continuing on 1 devices.

[15338.235562] md124: detected capacity change from 4190080 to 0

[15338.236860] md: md124 stopped.

[15339.518137] md/raid1:md125: Disk failure on sdj1, disabling device.

md/raid1:md125: Operation continuing on 1 devices.

[15339.518823] md: md125: resync interrupted.

[15339.580922] md: resync of RAID array md125

[15339.582335] md: md125: resync done.

[15339.702637] md125: detected capacity change from 4190080 to 0

[15339.703817] md: md125 stopped.

[15340.162468] md/raid1:md126: Disk failure on sdh1, disabling device.

md/raid1:md126: Operation continuing on 1 devices.

[15340.333880] md126: detected capacity change from 4190080 to 0

[15340.335029] md: md126 stopped.

[15340.838505] md/raid1:md127: Disk failure on sdf1, disabling device.

md/raid1:md127: Operation continuing on 1 devices.

[15341.002913] md127: detected capacity change from 4190080 to 0

[15341.004041] md: md127 stopped.

[15347.515596] md/raid1:md127: not clean -- starting background reconstruction

[15347.516762] md/raid1:md127: active with 2 out of 2 mirrors

[15347.518042] md127: detected capacity change from 0 to 4190080

[15347.545119] md: resync of RAID array md127

[15347.829981] md/raid1:md126: not clean -- starting background reconstruction

[15347.831034] md/raid1:md126: active with 2 out of 2 mirrors

[15347.832085] md126: detected capacity change from 0 to 4190080

[15347.833305] md: resync of RAID array md126

[15348.762812] md/raid1:md125: not clean -- starting background reconstruction

[15348.763824] md/raid1:md125: active with 2 out of 2 mirrors

[15348.764907] md125: detected capacity change from 0 to 4190080

[15348.766123] md: resync of RAID array md125

[15349.745678] md/raid1:md124: not clean -- starting background reconstruction

[15349.746671] md/raid1:md124: active with 2 out of 2 mirrors

[15349.747692] md124: detected capacity change from 0 to 4190080

[15349.755828] md: resync of RAID array md124

[15351.189289] md/raid1:md123: not clean -- starting background reconstruction

[15351.190267] md/raid1:md123: active with 2 out of 2 mirrors

[15351.191242] md123: detected capacity change from 0 to 33519616

[15351.193047] md: resync of RAID array md123

[15351.902026] Adding 2095036k swap on /dev/mapper/md127. Priority:-2 extents:1 across:2095036k FS

[15352.553954] Adding 2095036k swap on /dev/mapper/md126. Priority:-3 extents:1 across:2095036k FS

[15354.081531] Adding 2095036k swap on /dev/mapper/md125. Priority:-4 extents:1 across:2095036k FS

[15359.073898] Adding 2095036k swap on /dev/mapper/md124. Priority:-5 extents:1 across:2095036k FS

[15359.180002] Adding 16759804k swap on /dev/mapper/md123. Priority:-6 extents:1 across:16759804k SSFS

[15360.887870] md: md124: resync done.

[15366.238511] md: md125: resync done.

[15366.519767] md: md127: resync done.

[15367.425958] md: md126: resync done.

[15434.410112] md: md123: resync done.
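To make the pattern in this log easier to see, the "Disk failure" lines can be grouped by md array. This is a minimal sketch, not a diagnostic tool: the function name `failures_by_array` is made up for the illustration, and the sample lines are copied from the excerpt above.

```python
import re
from collections import defaultdict

# Matches lines such as:
# [15306.052673] md/raid1:md122: Disk failure on sdj1, disabling device.
FAIL_RE = re.compile(r"\[\s*(\d+\.\d+)\] md/raid1:(md\d+): Disk failure on (\w+),")


def failures_by_array(dmesg_text):
    """Map each md array to the (timestamp, member) pairs reported as failed."""
    found = defaultdict(list)
    for line in dmesg_text.splitlines():
        match = FAIL_RE.search(line)
        if match:
            found[match.group(2)].append((float(match.group(1)), match.group(3)))
    return dict(found)


sample = """\
[15306.052673] md/raid1:md122: Disk failure on sdj1, disabling device.
[15308.804717] md/raid1:md123: Disk failure on sdh1, disabling device.
[15336.982469] md/raid1:md123: Disk failure on nvme0n1p1, disabling device.
"""

for array, events in failures_by_array(sample).items():
    print(array, events)
```

Run over the full excerpt, this shows the same array name being reported with different members in the two rounds (md123 with sdh1, then with nvme0n1p1), and every array in the log is stopped, reassembled with "2 out of 2 mirrors", and then gets an "Adding ... swap on /dev/mapper/md12x" line.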


[15347.831034] md/raid1:md126: active with 2 out of 2 mirrors

[15347.832085] md126: detected capacity change from 0 to 4190080

[15347.833305] md: resync of RAID array md126

[15348.762812] md/raid1:md125: not clean -- starting background reconstruction

[15348.763824] md/raid1:md125: active with 2 out of 2 mirrors

[15348.764907] md125: detected capacity change from 0 to 4190080

[15348.766123] md: resync of RAID array md125

[15349.745678] md/raid1:md124: not clean -- starting background reconstruction

[15349.746671] md/raid1:md124: active with 2 out of 2 mirrors

[15349.747692] md124: detected capacity change from 0 to 4190080

[15349.755828] md: resync of RAID array md124

[15351.189289] md/raid1:md123: not clean -- starting background reconstruction

[15351.190267] md/raid1:md123: active with 2 out of 2 mirrors

[15351.191242] md123: detected capacity change from 0 to 33519616

[15351.193047] md: resync of RAID array md123

[15351.902026] Adding 2095036k swap on /dev/mapper/md127. Priority:-2 extents:1 across:2095036k FS

[15352.553954] Adding 2095036k swap on /dev/mapper/md126. Priority:-3 extents:1 across:2095036k FS

[15354.081531] Adding 2095036k swap on /dev/mapper/md125. Priority:-4 extents:1 across:2095036k FS

[15359.073898] Adding 2095036k swap on /dev/mapper/md124. Priority:-5 extents:1 across:2095036k FS

[15359.180002] Adding 16759804k swap on /dev/mapper/md123. Priority:-6 extents:1 across:16759804k SSFS

[15360.887870] md: md124: resync done.

[15366.238511] md: md125: resync done.

[15366.519767] md: md127: resync done.

[15367.425958] md: md126: resync done.

[15434.410112] md: md123: resync done.

One drive for each pool issued a Fail event, across 3 controllers. What is weird here is that zpool status didn't show any problem with the other pools; it only showed the newly installed pool as degraded. TrueNAS SCALE didn't show any notification either (aside from the emails).
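
To see at a glance which member each md array dropped, the log above can be tabulated with a small script. This is only a minimal sketch: the function name `summarize_md_events` and the `SAMPLE` excerpt are my own illustrative choices, not part of any TrueNAS tooling. It also records which md devices the kernel later reports as swap ("Adding ... swap on /dev/mapper/md12x"), since the pasted log contains those lines for the same arrays; whether that explains why zpool status stayed clean is an inference I have not confirmed.

```python
import re
from collections import defaultdict

# Matches lines such as:
# [15311.164649] md/raid1:md127: Disk failure on nvme0n1p1, disabling device.
FAILURE_RE = re.compile(
    r"\[\s*(?P<ts>\d+\.\d+)\]\s+md/raid1:(?P<md>md\d+): "
    r"Disk failure on (?P<dev>[^,\s]+), disabling device"
)
# Matches lines such as:
# [15319.575761] Adding 2095036k swap on /dev/mapper/md127. Priority:-2 ...
SWAP_RE = re.compile(r"Adding \d+k swap on /dev/mapper/(?P<md>md\d+)\.")


def summarize_md_events(log_text):
    """Return ({md: [(timestamp, failed_member), ...]}, {md arrays used as swap})."""
    failures = defaultdict(list)
    swap_arrays = set()
    for line in log_text.splitlines():
        m = FAILURE_RE.search(line)
        if m:
            failures[m["md"]].append((float(m["ts"]), m["dev"]))
            continue
        m = SWAP_RE.search(line)
        if m:
            swap_arrays.add(m["md"])
    return dict(failures), swap_arrays


# Short excerpt copied from the dmesg output above (assumption: the full log
# would be fed in the same way, e.g. from a saved `dmesg` dump).
SAMPLE = """\
[15311.164649] md/raid1:md127: Disk failure on nvme0n1p1, disabling device.
[15311.787724] md/raid1:md125: Disk failure on sdd1, disabling device.
[15319.575761] Adding 2095036k swap on /dev/mapper/md127. Priority:-2 extents:1 across:2095036k FS
[15340.838505] md/raid1:md127: Disk failure on sdf1, disabling device.
"""

if __name__ == "__main__":
    failures, swap_arrays = summarize_md_events(SAMPLE)
    for md, events in sorted(failures.items()):
        use = "swap" if md in swap_arrays else "use not shown in excerpt"
        for ts, dev in events:
            print(f"{md} ({use}): member {dev} disabled at {ts:.6f}s since boot")
```

Running the same function over the complete log should list every md12x array together with the member it dropped in each round.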

This happened while the initial copy was still running smoothly, even though the destination pool was now down to one drive.

After almost 9 hours, at 12:12 PM, I received another email telling me that the other disk in the new pool had experienced write errors and was now degraded, but it wasn't put offline since files were still copying onto it.

Here is the dmesg output for this event, identical to the first one in the chain.

[46280.116429] sd 0:0:5:0: attempting task abort!scmd(0x0000000081c33f81), outstanding for 31612 ms & timeout 30000 ms

[46280.117518] sd 0:0:5:0: [sdj] tag#1446 CDB: Write(16) 8a 00 00 00 00 00 04 47 1d 20 00 00 00 20 00 00

[46280.118726] scsi target0:0:5: handle(0x0025), sas_address(0x300605b00fe37e2f), phy(15)

[46280.119868] scsi target0:0:5: enclosure logical id(0x500605b00fe37e20), slot(3)

[46280.121003] scsi target0:0:5: enclosure level(0x0000), connector name( C0 )

[46280.150026] sd 0:0:5:0: task abort: SUCCESS scmd(0x0000000081c33f81)

[46280.151910] sd 0:0:5:0: [sdj] tag#1446 FAILED Result: hostbyte=DID_TIME_OUT driverbyte=DRIVER_OK cmd_age=31s

[46280.153834] sd 0:0:5:0: [sdj] tag#1446 CDB: Write(16) 8a 00 00 00 00 00 04 47 1d 20 00 00 00 20 00 00

[46280.155740] blk_update_request: I/O error, dev sdj, sector 71769376 op 0x1:(WRITE) flags 0x700 phys_seg 2 prio class 0

[46280.157689] zio pool=exos-x18-c vdev=/dev/disk/by-partuuid/5c736f36-b9e2-4c47-8c2f-6eea4732f2cd error=5 type=2 offset=34598371328 size=16384 flags=180880

[46280.159756] sd 0:0:5:0: attempting task abort!scmd(0x0000000080c04a03), outstanding for 31792 ms & timeout 30000 ms

[46280.161812] sd 0:0:5:0: [sdj] tag#1445 CDB: Write(16) 8a 00 00 00 00 00 02 d5 1b d0 00 00 00 20 00 00

[46280.163793] scsi target0:0:5: handle(0x0025), sas_address(0x300605b00fe37e2f), phy(15)

[46280.165821] scsi target0:0:5: enclosure logical id(0x500605b00fe37e20), slot(3)

[46280.167831] scsi target0:0:5: enclosure level(0x0000), connector name( C0 )

[46280.169864] sd 0:0:5:0: No reference found at driver, assuming scmd(0x0000000080c04a03) might have completed

[46280.172028] sd 0:0:5:0: task abort: SUCCESS scmd(0x0000000080c04a03)

[46280.174085] sd 0:0:5:0: [sdj] tag#1445 FAILED Result: hostbyte=DID_TIME_OUT driverbyte=DRIVER_OK cmd_age=31s

[46280.176158] sd 0:0:5:0: [sdj] tag#1445 CDB: Write(16) 8a 00 00 00 00 00 02 d5 1b d0 00 00 00 20 00 00

[46280.178246] blk_update_request: I/O error, dev sdj, sector 47520720 op 0x1:(WRITE) flags 0x700 phys_seg 2 prio class 0

[46280.180358] zio pool=exos-x18-c vdev=/dev/disk/by-partuuid/5c736f36-b9e2-4c47-8c2f-6eea4732f2cd error=5 type=2 offset=22183059456 size=16384 flags=180880

[46280.182571] sd 0:0:5:0: attempting task abort!scmd(0x00000000bd69602c), outstanding for 31676 ms & timeout 30000 ms

[46280.184397] sd 0:0:5:0: [sdj] tag#1442 CDB: Write(16) 8a 00 00 00 00 06 10 92 08 d8 00 00 08 00 00 00

[46280.186206] scsi target0:0:5: handle(0x0025), sas_address(0x300605b00fe37e2f), phy(15)

[46280.187916] scsi target0:0:5: enclosure logical id(0x500605b00fe37e20), slot(3)

[46280.189114] scsi target0:0:5: enclosure level(0x0000), connector name( C0 )

[46280.190147] sd 0:0:5:0: No reference found at driver, assuming scmd(0x00000000bd69602c) might have completed

[46280.191190] sd 0:0:5:0: task abort: SUCCESS scmd(0x00000000bd69602c)

[46280.192340] sd 0:0:5:0: [sdj] tag#1442 FAILED Result: hostbyte=DID_TIME_OUT driverbyte=DRIVER_OK cmd_age=31s

[46280.193390] sd 0:0:5:0: [sdj] tag#1442 CDB: Write(16) 8a 00 00 00 00 06 10 92 08 d8 00 00 08 00 00 00

[46280.194436] blk_update_request: I/O error, dev sdj, sector 26047809752 op 0x1:(WRITE) flags 0x700 phys_seg 83 prio class 0

[46280.195510] zio pool=exos-x18-c vdev=/dev/disk/by-partuuid/5c736f36-b9e2-4c47-8c2f-6eea4732f2cd error=5 type=2 offset=13334331043840 size=1048576 flags=40080c80

[46280.196627] sd 0:0:5:0: attempting task abort!scmd(0x0000000005af84fe), outstanding for 31692 ms & timeout 30000 ms

[46280.197728] sd 0:0:5:0: [sdj] tag#1444 CDB: Write(16) 8a 00 00 00 00 06 10 92 16 68 00 00 09 d8 00 00

[46280.198812] scsi target0:0:5: handle(0x0025), sas_address(0x300605b00fe37e2f), phy(15)

[46280.199903] scsi target0:0:5: enclosure logical id(0x500605b00fe37e20), slot(3)

[46280.201013] scsi target0:0:5: enclosure level(0x0000), connector name( C0 )

[46280.202236] sd 0:0:5:0: No reference found at driver, assuming scmd(0x0000000005af84fe) might have completed

[46280.203356] sd 0:0:5:0: task abort: SUCCESS scmd(0x0000000005af84fe)

[46280.204479] sd 0:0:5:0: [sdj] tag#1444 FAILED Result: hostbyte=DID_TIME_OUT driverbyte=DRIVER_OK cmd_age=31s

[46280.205733] sd 0:0:5:0: [sdj] tag#1444 CDB: Write(16) 8a 00 00 00 00 06 10 92 16 68 00 00 09 d8 00 00

[46280.207337] blk_update_request: I/O error, dev sdj, sector 26047813224 op 0x1:(WRITE) flags 0x700 phys_seg 106 prio class 0

[46280.208493] zio pool=exos-x18-c vdev=/dev/disk/by-partuuid/5c736f36-b9e2-4c47-8c2f-6eea4732f2cd error=5 type=2 offset=13334333063168 size=1048576 flags=40080c80

[46280.209688] sd 0:0:5:0: attempting task abort!scmd(0x00000000e209cf3f), outstanding for 31704 ms & timeout 30000 ms

[46280.210889] sd 0:0:5:0: [sdj] tag#1443 CDB: Write(16) 8a 00 00 00 00 06 10 92 10 d8 00 00 05 90 00 00

[46280.212118] scsi target0:0:5: handle(0x0025), sas_address(0x300605b00fe37e2f), phy(15)

[46280.213365] scsi target0:0:5: enclosure logical id(0x500605b00fe37e20), slot(3)

[46280.214574] scsi target0:0:5: enclosure level(0x0000), connector name( C0 )

[46280.215790] sd 0:0:5:0: No reference found at driver, assuming scmd(0x00000000e209cf3f) might have completed

[46280.217093] sd 0:0:5:0: task abort: SUCCESS scmd(0x00000000e209cf3f)

[46280.218372] sd 0:0:5:0: [sdj] tag#1443 FAILED Result: hostbyte=DID_TIME_OUT driverbyte=DRIVER_OK cmd_age=31s

[46280.219566] sd 0:0:5:0: [sdj] tag#1443 CDB: Write(16) 8a 00 00 00 00 06 10 92 10 d8 00 00 05 90 00 00

[46280.220825] blk_update_request: I/O error, dev sdj, sector 26047811800 op 0x1:(WRITE) flags 0x4700 phys_seg 128 prio class 0

[46280.222134] zio pool=exos-x18-c vdev=/dev/disk/by-partuuid/5c736f36-b9e2-4c47-8c2f-6eea4732f2cd error=5 type=2 offset=13334332092416 size=970752 flags=40080c80

[46280.223435] sd 0:0:5:0: attempting task abort!scmd(0x00000000c519bc86), outstanding for 31716 ms & timeout 30000 ms

[46280.224750] sd 0:0:5:0: [sdj] tag#1441 CDB: Write(16) 8a 00 00 00 00 06 10 92 01 08 00 00 07 d0 00 00

[46280.226054] scsi target0:0:5: handle(0x0025), sas_address(0x300605b00fe37e2f), phy(15)

[46280.227300] scsi target0:0:5: enclosure logical id(0x500605b00fe37e20), slot(3)

[46280.228583] scsi target0:0:5: enclosure level(0x0000), connector name( C0 )

[46280.229825] sd 0:0:5:0: No reference found at driver, assuming scmd(0x00000000c519bc86) might have completed

[46280.231139] sd 0:0:5:0: task abort: SUCCESS scmd(0x00000000c519bc86)

[46280.232443] sd 0:0:5:0: [sdj] tag#1441 FAILED Result: hostbyte=DID_TIME_OUT driverbyte=DRIVER_OK cmd_age=31s

[46280.233760] sd 0:0:5:0: [sdj] tag#1441 CDB: Write(16) 8a 00 00 00 00 06 10 92 01 08 00 00 07 d0 00 00

[46280.235017] blk_update_request: I/O error, dev sdj, sector 26047807752 op 0x1:(WRITE) flags 0x4700 phys_seg 88 prio class 0

[46280.236298] zio pool=exos-x18-c vdev=/dev/disk/by-partuuid/5c736f36-b9e2-4c47-8c2f-6eea4732f2cd error=5 type=2 offset=13334330019840 size=1024000 flags=40080c80

[46280.237655] sd 0:0:5:0: attempting task abort!scmd(0x00000000171572b2), outstanding for 31732 ms & timeout 30000 ms

[46280.239006] sd 0:0:5:0: [sdj] tag#1439 CDB: Write(16) 8a 00 00 00 00 06 10 91 f1 88 00 00 07 c0 00 00

[46280.240307] scsi target0:0:5: handle(0x0025), sas_address(0x300605b00fe37e2f), phy(15)

[46280.241683] scsi target0:0:5: enclosure logical id(0x500605b00fe37e20), slot(3)

[46280.243026] scsi target0:0:5: enclosure level(0x0000), connector name( C0 )

[46280.244342] sd 0:0:5:0: No reference found at driver, assuming scmd(0x00000000171572b2) might have completed

[46280.245712] sd 0:0:5:0: task abort: SUCCESS scmd(0x00000000171572b2)

[46280.247030] sd 0:0:5:0: [sdj] tag#1439 FAILED Result: hostbyte=DID_TIME_OUT driverbyte=DRIVER_OK cmd_age=31s

[46280.248789] sd 0:0:5:0: [sdj] tag#1439 CDB: Write(16) 8a 00 00 00 00 06 10 91 f1 88 00 00 07 c0 00 00

[46280.250353] blk_update_request: I/O error, dev sdj, sector 26047803784 op 0x1:(WRITE) flags 0x4700 phys_seg 88 prio class 0

[46280.251703] zio pool=exos-x18-c vdev=/dev/disk/by-partuuid/5c736f36-b9e2-4c47-8c2f-6eea4732f2cd error=5 type=2 offset=13334327988224 size=1015808 flags=40080c80

[46280.253220] sd 0:0:5:0: attempting task abort!scmd(0x00000000ff934634), outstanding for 31748 ms & timeout 30000 ms

[46280.254606] sd 0:0:5:0: [sdj] tag#1440 CDB: Write(16) 8a 00 00 00 00 06 10 91 f9 48 00 00 07 c0 00 00

[46280.255993] scsi target0:0:5: handle(0x0025), sas_address(0x300605b00fe37e2f), phy(15)

[46280.257427] scsi target0:0:5: enclosure logical id(0x500605b00fe37e20), slot(3)

[46280.258821] scsi target0:0:5: enclosure level(0x0000), connector name( C0 )

[46280.260247] sd 0:0:5:0: No reference found at driver, assuming scmd(0x00000000ff934634) might have completed

[46280.261724] sd 0:0:5:0: task abort: SUCCESS scmd(0x00000000ff934634)

[46280.263168] sd 0:0:5:0: [sdj] tag#1440 FAILED Result: hostbyte=DID_TIME_OUT driverbyte=DRIVER_OK cmd_age=31s

[46280.264643] sd 0:0:5:0: [sdj] tag#1440 CDB: Write(16) 8a 00 00 00 00 06 10 91 f9 48 00 00 07 c0 00 00

[46280.266093] blk_update_request: I/O error, dev sdj, sector 26047805768 op 0x1:(WRITE) flags 0x4700 phys_seg 86 prio class 0

[46280.267547] zio pool=exos-x18-c vdev=/dev/disk/by-partuuid/5c736f36-b9e2-4c47-8c2f-6eea4732f2cd error=5 type=2 offset=13334329004032 size=1015808 flags=40080c80

TRUNCATED FOR MAX CHAR LIMIT BUT IT'S IDENTICAL TO THE FIRST ERROR, THIS TIME FOR SDJ
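
As a sanity check on how these entries fit together, the Write(16) CDB bytes can be decoded and compared with the sector that blk_update_request reports right after. The sketch below is only an illustration: `decode_write16` is a name I made up, and it assumes the standard WRITE(16) layout (opcode 0x8a, an 8-byte big-endian LBA in bytes 2-9, a 4-byte transfer length in bytes 10-13). For the first failed command the decoded LBA equals the reported sector, and 32 blocks of 512 bytes equal the 16384-byte zio size. For some of the later commands the transfer length does not equal the zio size, because the block layer can split and merge requests.

```python
def decode_write16(cdb_hex):
    """Return (lba, transfer_blocks) for a WRITE(16) CDB given as hex bytes."""
    cdb = bytes.fromhex(cdb_hex)
    if len(cdb) != 16 or cdb[0] != 0x8A:
        raise ValueError("expected a 16-byte CDB starting with opcode 0x8a")
    lba = int.from_bytes(cdb[2:10], "big")      # bytes 2..9: logical block address
    blocks = int.from_bytes(cdb[10:14], "big")  # bytes 10..13: transfer length
    return lba, blocks


if __name__ == "__main__":
    # CDBs copied from the tag#1446 and tag#1442 lines in the log above.
    for cdb in (
        "8a 00 00 00 00 00 04 47 1d 20 00 00 00 20 00 00",
        "8a 00 00 00 00 06 10 92 08 d8 00 00 08 00 00 00",
    ):
        lba, blocks = decode_write16(cdb)
        # Assumption: 512-byte logical blocks, consistent with the log values.
        print(f"LBA {lba}, {blocks} blocks, {blocks * 512} bytes")
```

The two decoded LBAs are 71769376 and 26047809752, the same sectors shown in the corresponding blk_update_request lines, so the timed-out SCSI commands and the ZFS write errors refer to the same writes.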

After 3 more hours, the files finished copying and the new dataset was still standing, with one disk in a degraded state.

The other pools were active and healthy, as if nothing had happened. I ran smartctl -x /dev/sdX for each drive and found zero errors, including on the two new drives: all SMART tests passed with flying colors. (I can't include the smartctl -x output for 10 drives here; if needed, I'll post it in subsequent replies.)

This is quite bizarre and very scary, considering that all of my pools flipped at the same time, if what dmesg says is true.

Also, this cannot be related to the HBA PCIe controller, or any other single controller, since it happened across all the available controllers in my rig.

Moreover, the two new drives were sourced from different vendors to avoid getting the same batch, and I find it highly suspicious that both failed in the same way immediately after installation. The SMART tests also tell me the drives are all right. I put the two drives on two different ports on the HBA, using separate cables.

Plus, just in case, I copied another 700 GB onto the new (now degraded) pool and didn't hit any new errors.

My hunch tells me the drives and controllers are not at fault here, but other than that I have no clue. I'm also not sure if I should open a ticket about this.

If there are any other tests I can perform on the new drives, let me know. Thanks.

P.S.: after the new pool got degraded and one of the drives was put offline, I started a SMART long test on that drive, which is still running (almost 24 hours in). I intend to resilver the pool once the test is over.
