HeloJunkie | Patron | Joined: Oct 15, 2014 | Messages: 300

Hey Everyone,

Well, it's that time of year again: I am (again) running out of drive space on my plexnas server (the FreeNAS server for Plex). The volume is currently at about 78% utilization, and I know that FreeNAS starts issuing capacity warnings once a volume goes above 80%.
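To watch the pool against that 80% line from a script, something like the minimal sketch below could work. It assumes Python 3.9+ is available wherever the `zpool` command runs, and it parses the tab-separated output of `zpool list -H -o name,capacity`. The function names are hypothetical, and the default of 80 simply mirrors the threshold mentioned above.

```python
import subprocess

# Threshold from the post: FreeNAS warns once pool capacity goes above 80%.
WARN_PERCENT = 80


def parse_capacity(output: str) -> dict[str, int]:
    """Parse `zpool list -H -o name,capacity` output into {pool_name: percent_used}.

    -H prints one pool per line, tab-separated, with no header row;
    the capacity field looks like "78%" (the "%" is stripped if present).
    """
    pools: dict[str, int] = {}
    for line in output.splitlines():
        if not line.strip():
            continue  # ignore blank lines
        name, capacity = line.split("\t")
        pools[name] = int(capacity.strip().rstrip("%"))
    return pools


def pools_over(pools: dict[str, int], limit: int = WARN_PERCENT) -> list[str]:
    """Return the names of pools strictly above `limit` percent, sorted."""
    return sorted(name for name, pct in pools.items() if pct > limit)


def read_pool_capacity() -> dict[str, int]:
    """Run zpool locally (needs the zpool command and sufficient privileges)."""
    result = subprocess.run(
        ["zpool", "list", "-H", "-o", "name,capacity"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_capacity(result.stdout)
```

Called as `pools_over(read_pool_capacity())`, it would return an empty list while vol1 sits at 78%, and `['vol1']` once it passes 80%.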

I am trying to decide whether to add another 6-drive vdev to vol1 using 4TB or 6TB drives, or to roll out a second server instead.
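The 4TB vs 6TB choice is largely arithmetic. The sketch below assumes usable space per raidz vdev is roughly (drives - parity) x drive size, ignores ZFS metadata, padding and reserved space, and assumes no new data is written during the expansion. Because every vdev here would be a 6-drive raidz2, parity overhead is the same across vdevs, so the utilization ratio comes out the same whether it is measured on raw or net capacity. The helper names are hypothetical.

```python
def raidz_data_tb(disks: int, parity: int, disk_tb: float) -> float:
    """Approximate data capacity of one raidz vdev, in TB.

    (disks - parity) * drive size; ignores ZFS metadata, allocation padding
    and reserved slop space, so real usable space will be somewhat lower.
    """
    return (disks - parity) * disk_tb


def utilization_after(used_fraction, current_vdevs, new_vdevs):
    """Fraction of the pool in use right after adding `new_vdevs`.

    Each vdev is a (disks, parity, disk_tb) tuple; existing data is unchanged.
    """
    current = sum(raidz_data_tb(*v) for v in current_vdevs)
    used = used_fraction * current
    total = current + sum(raidz_data_tb(*v) for v in new_vdevs)
    return used / total


# The pool from the post: two 6-drive raidz2 vdevs of 4TB drives (~32 TB of data space).
current = [(6, 2, 4), (6, 2, 4)]

for size in (4, 6):
    pct = utilization_after(0.78, current, [(6, 2, size)])
    print(f"add 6 x {size}TB raidz2: {pct:.0%} used")
```

Run as-is, it prints `add 6 x 4TB raidz2: 52% used` and `add 6 x 6TB raidz2: 45% used`.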

Here is my current configuration:

Supermicro Superserver 5028R-E1CR12L

Supermicro X10SRH-CLN4F Motherboard

1 x Intel Xeon E5-2640 v3, 8 cores, 2.6GHz

4 x 16GB PC4-17000 DDR4 2133MHz Registered ECC

12 x 4TB HGST HDN724040AL 7200RPM NAS SATA Hard Drives

LSI3008 SAS controller, flashed to IT mode (V5) to match the FreeNAS driver

LSI SAS3x28 SAS Expander

Dual 920 Watt Platinum Power Supplies

16GB USB Thumb Drive for booting

FreeNAS-9.10.1 (d989edd)


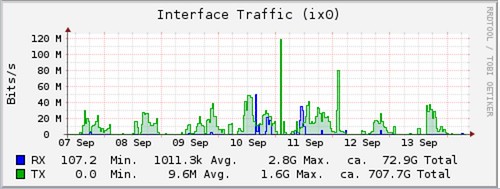

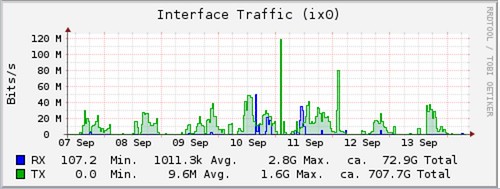

10Gb Interface Traffic to Plex server:

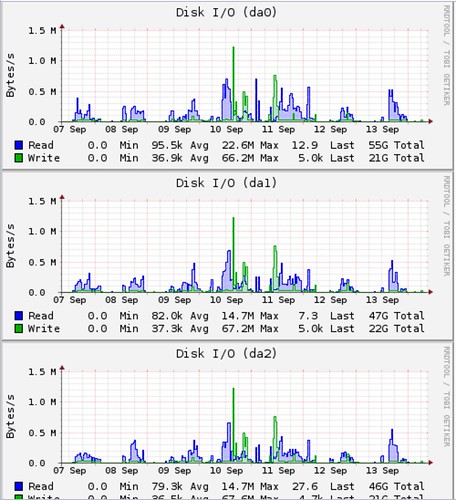

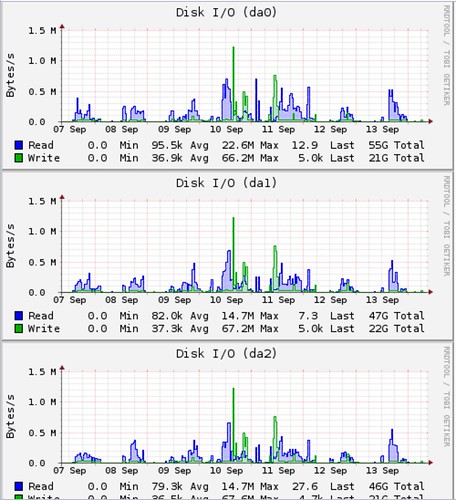

My average disk I/O looks like this (partial list; they all look the same):

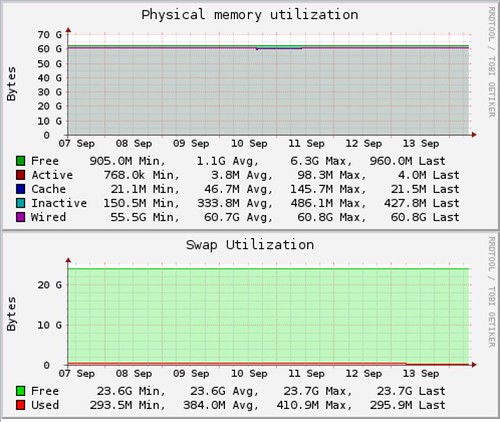

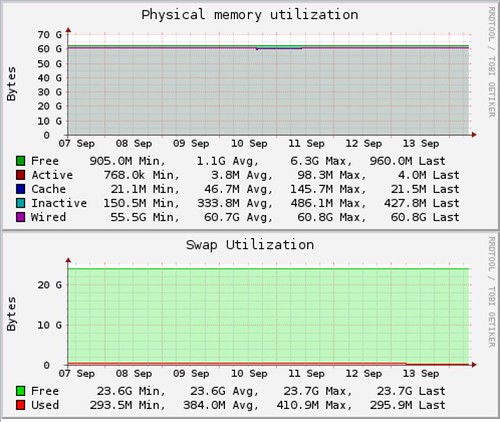

Memory and Swap:

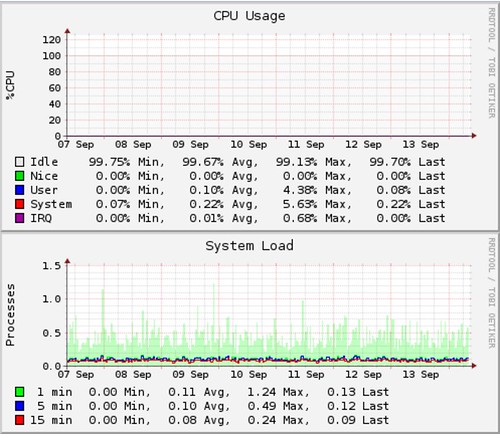

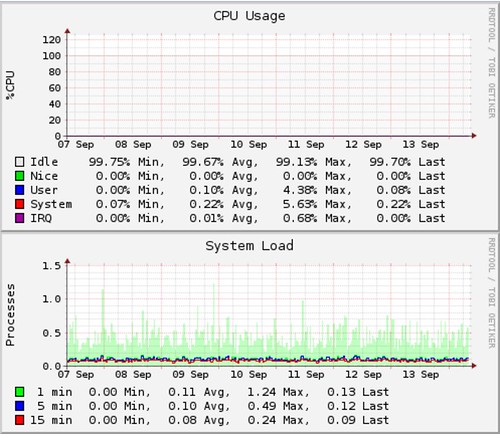

CPU & System Load:

I have the 12 drives set up as two 6-drive raidz2 vdevs in one pool:

```
[root@plexnas] ~# zpool status
  pool: vol1
 state: ONLINE
  scan: scrub repaired 0 in 9h16m with 0 errors on Thu Sep 1 10:16:51 2016
config:

        NAME                                            STATE     READ WRITE CKSUM
        vol1                                            ONLINE       0     0     0
          raidz2-0                                      ONLINE       0     0     0
            gptid/f46fb4ec-ed62-11e4-a956-0cc47a31abcc  ONLINE       0     0     0
            gptid/f69f4e21-ed62-11e4-a956-0cc47a31abcc  ONLINE       0     0     0
            gptid/f8cde372-ed62-11e4-a956-0cc47a31abcc  ONLINE       0     0     0
            gptid/faeb3d6d-ed62-11e4-a956-0cc47a31abcc  ONLINE       0     0     0
            gptid/fd087ff0-ed62-11e4-a956-0cc47a31abcc  ONLINE       0     0     0
            gptid/ff28300a-ed62-11e4-a956-0cc47a31abcc  ONLINE       0     0     0
          raidz2-1                                      ONLINE       0     0     0
            gptid/013d5491-ed63-11e4-a956-0cc47a31abcc  ONLINE       0     0     0
            gptid/0357b342-ed63-11e4-a956-0cc47a31abcc  ONLINE       0     0     0
            gptid/05811f51-ed63-11e4-a956-0cc47a31abcc  ONLINE       0     0     0
            gptid/079f5f22-ed63-11e4-a956-0cc47a31abcc  ONLINE       0     0     0
            gptid/09b81318-ed63-11e4-a956-0cc47a31abcc  ONLINE       0     0     0
            gptid/a82dda8c-ef5f-11e4-bb0a-0cc47a31abcc  ONLINE       0     0     0

errors: No known data errors
```
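Reading that output: the pool is two top-level raidz2 vdevs of six drives each, and each raidz2 vdev can lose up to two drives. The hypothetical helpers below (an illustration, not part of FreeNAS) group member devices under their top-level vdev using the indentation of `zpool status`. They assume the standard layout, where members are indented deeper than their vdev, and they stop at any logs, cache or spares section.

```python
def vdev_layout(status_text: str) -> dict[str, list[str]]:
    """Group devices in `zpool status` output under their top-level vdev.

    Relies on zpool's indentation: the pool row comes first, top-level vdevs
    are indented one level deeper, and member disks deeper still. Parsing stops
    at a logs/cache/spares section or at the "errors:" line.
    """
    layout: dict[str, list[str]] = {}
    in_config = False
    seen_pool = False
    top_indent = None
    current = None
    for raw in status_text.splitlines():
        line = raw.expandtabs()
        fields = line.split()
        if not fields:
            continue
        if fields[0] == "NAME" and "STATE" in fields:
            in_config = True  # the device table starts after this header row
            continue
        if not in_config:
            continue
        name = fields[0]
        if name == "errors:" or name in ("logs", "cache", "spares"):
            break
        indent = len(line) - len(line.lstrip())
        if not seen_pool:
            seen_pool = True  # first row of the table is the pool itself
            continue
        if top_indent is None:
            top_indent = indent  # first row below the pool sets the vdev level
        if indent == top_indent:
            current = name  # a top-level vdev (raidz/mirror group or single disk)
            layout[current] = []
        elif current is not None:
            layout[current].append(name)  # a member of the current vdev
    return layout


def tolerated_failures(vdev: str, members: list[str]) -> int:
    """Drive failures a top-level vdev survives, judged from its name."""
    kind = vdev.split("-")[0]
    if kind.startswith("raidz"):
        return int(kind[5:] or 1)  # plain "raidz" means single parity
    if kind == "mirror":
        return len(members) - 1
    return 0  # single-disk vdev: no redundancy
```

Fed `zpool status` output from this pool with its normal indentation, `vdev_layout` should yield `raidz2-0` and `raidz2-1` with six gptid members each. The pool as a whole is lost if any one vdev loses more drives than it tolerates, so a third vdev adds capacity but also one more group of drives that must stay healthy.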

My NAS is connected directly to my Plex server over 10GbE. The Plex server usually peaks at about 7 concurrent users, but averages more like 3 or 4. Plexnas is used strictly for Plex and has no jails or other services on it.
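A quick sanity check on the network side: assuming a hypothetical 40 Mbit/s per stream (roughly Blu-ray-quality 1080p; actual bitrates vary by file), even the peak of seven users would use a small fraction of a 10GbE link. The helper name and the bitrate are assumptions for illustration only.

```python
def link_utilization(streams: int, mbit_per_stream: float, link_gbit: float = 10.0) -> float:
    """Fraction of a network link used by `streams` concurrent streams."""
    return streams * mbit_per_stream / (link_gbit * 1000)


# Hypothetical per-stream bitrate; 7 is the peak user count from the post.
MBIT_PER_STREAM = 40
PEAK_USERS = 7

share = link_utilization(PEAK_USERS, MBIT_PER_STREAM)
print(f"{PEAK_USERS} streams x {MBIT_PER_STREAM} Mbit/s = "
      f"{PEAK_USERS * MBIT_PER_STREAM} Mbit/s ({share:.1%} of 10GbE)")
```

It prints `7 streams x 40 Mbit/s = 280 Mbit/s (2.8% of 10GbE)`, which is about 35 MB/s of reads spread across the pool.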

Looking at the graphs, the system appears basically idle even when my Plex server is under its highest load, so I think I should be able to put another vdev or two on the box by adding another LSI controller. Initially I am thinking of another 6 x 4TB drives in the same raidz2 configuration.
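If the new vdev does go into vol1, the usual FreeNAS route is the web UI's Volume Manager, which can extend an existing volume and partitions the new disks the same way as the existing gptid members. Before doing anything by hand, a dry run is cheap: `zpool add -n` prints the configuration that would result without modifying the pool. The sketch below only builds that command; the helper name and the device names da12 to da17 are hypothetical placeholders for six drives on a second controller.

```python
import shlex


def add_vdev_command(pool: str, disks: list[str], layout: str = "raidz2",
                     expected_width: int = 6, dry_run: bool = True) -> list[str]:
    """Build argv for `zpool add` that appends one new top-level vdev to `pool`.

    With dry_run=True the command includes -n, so zpool only prints the
    resulting configuration instead of changing the pool.
    """
    if len(disks) != expected_width:
        # Keep the new vdev the same width as the existing 6-drive raidz2 vdevs.
        raise ValueError(f"expected {expected_width} disks, got {len(disks)}")
    cmd = ["zpool", "add"]
    if dry_run:
        cmd.append("-n")
    return cmd + [pool, layout, *disks]


# Hypothetical device names for six new drives on a second controller.
new_disks = [f"da{n}" for n in range(12, 18)]
print(shlex.join(add_vdev_command("vol1", new_disks)))
```

It prints `zpool add -n vol1 raidz2 da12 da13 da14 da15 da16 da17`. Two points are worth keeping in mind: a raidz vdev cannot be removed from the pool once added, and ZFS does not rebalance existing data, so current files stay on the two original vdevs while new writes tend to favor the emptier one.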

To me, this would be easier and simpler than managing a completely separate server, but I am looking for advice from people running larger systems than mine on the best approach given my particular server configuration.
