mpyusko

I mentioned this as a side note in another thread, but it really needs its own discussion. Adding in the notes from the previous discussion.

I have an old FreeNAS 9.10 box maxed out at 8 GB RAM, running a 240 GB SSD as the L2ARC with just shy of 17 TB written to it.

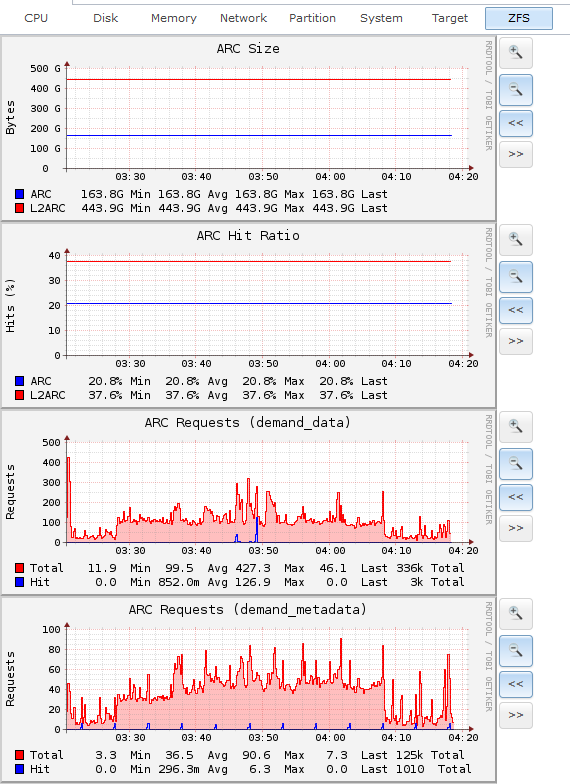

What is strange is the ARC/L2ARC sizes reported there vs my 11.2 box.

(How can 8GB RAM equal 163 GB ARC and a 240 GB SSD equal a 443 GB L2ARC???)

We weighed the options, and ultimately it came down to being able to compensate for the limitation of the integrated SATA-II controller. Although the array is built out of 4 WD Golds (RAID10), the amount of I/O traffic between the steady flow of email and database transactions made it crawl. The L2ARC helps alleviate that.
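To put a rough number on how much the L2ARC helps, here is a small sketch that combines the two hit ratios from the arc_summary.py output in this post. It assumes the L2ARC hit ratio is measured against ARC misses (the L2 ARC Breakdown total of 3.49b matches the ARC miss count, which suggests this). The function name `combined_hit_ratio` is made up for illustration. Treat the result as an upper bound: the output also reports L2ARC bad checksums, and I am assuming those reads end up going to the pool anyway.

```python
# Figures copied from the arc_summary.py output in this post.
arc_hit_ratio = 0.2071    # ARC "Cache Hit Ratio"
l2arc_hit_ratio = 0.3755  # L2 ARC "Hit Ratio" (assumed: share of ARC misses)


def combined_hit_ratio(arc_hit, l2_hit):
    """Fraction of reads served from RAM or SSD instead of the pool disks.

    Hypothetical helper: reads that miss the ARC get a second chance in
    the L2ARC, so the L2ARC ratio only applies to the ARC miss share.
    """
    arc_miss = 1.0 - arc_hit
    return arc_hit + arc_miss * l2_hit


total = combined_hit_ratio(arc_hit_ratio, l2arc_hit_ratio)
served_by_l2arc = (1.0 - arc_hit_ratio) * l2arc_hit_ratio

print(f"Served by ARC:     {arc_hit_ratio:.2%}")
print(f"Served by L2ARC:   {served_by_l2arc:.2%}")
print(f"Reaching the pool: {1.0 - total:.2%}")
```

On these numbers the SSD absorbs more reads than RAM does, which fits with the array crawling without it.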

Code:

[root@fn1] ~# arc_summary.py

System Memory:

1.59% 126.18 MiB Active, 10.05% 797.65 MiB Inact

75.83% 5.88 GiB Wired, 0.20% 16.10 MiB Cache

12.33% 978.83 MiB Free, 0.00% 0 Bytes Gap

Real Installed: 8.00 GiB

Real Available: 99.61% 7.97 GiB

Real Managed: 97.28% 7.75 GiB

Logical Total: 8.00 GiB

Logical Used: 78.12% 6.25 GiB

Logical Free: 21.88% 1.75 GiB

Kernel Memory: 254.07 MiB

Data: 89.11% 226.41 MiB

Text: 10.89% 27.67 MiB

Kernel Memory Map: 7.75 GiB

Size: 27.90% 2.16 GiB

Free: 72.10% 5.59 GiB

Page: 1

------------------------------------------------------------------------

ARC Summary: (HEALTHY)

Storage pool Version: 5000

Filesystem Version: 5

Memory Throttle Count: 0

ARC Misc:

Deleted: 2.19b

Mutex Misses: 2.24b

Evict Skips: 2.24b

ARC Size: 2427.36% 163.88 GiB

Target Size: (Adaptive) 100.00% 6.75 GiB

Min Size (Hard Limit): 12.50% 864.18 MiB

Max Size (High Water): 8:1 6.75 GiB

ARC Size Breakdown:

Recently Used Cache Size: 2.87% 4.71 GiB

Frequently Used Cache Size: 97.13% 159.17 GiB

ARC Hash Breakdown:

Elements Max: 40.48m

Elements Current: 36.82% 14.90m

Collisions: 9.39b

Chain Max: 70

Chains: 1.05m

Page: 2

------------------------------------------------------------------------

ARC Total accesses: 4.40b

Cache Hit Ratio: 20.71% 911.03m

Cache Miss Ratio: 79.29% 3.49b

Actual Hit Ratio: 17.89% 786.84m

Data Demand Efficiency: 18.04% 2.92b

Data Prefetch Efficiency: 30.50% 387.71m

CACHE HITS BY CACHE LIST:

Most Recently Used: 73.12% 666.15m

Most Frequently Used: 13.25% 120.69m

Most Recently Used Ghost: 121.64% 1.11b

Most Frequently Used Ghost: 46.88% 427.08m

CACHE HITS BY DATA TYPE:

Demand Data: 57.74% 526.06m

Prefetch Data: 12.98% 118.25m

Demand Metadata: 23.73% 216.20m

Prefetch Metadata: 5.55% 50.52m

CACHE MISSES BY DATA TYPE:

Demand Data: 68.56% 2.39b

Prefetch Data: 7.73% 269.46m

Demand Metadata: 19.52% 680.71m

Prefetch Metadata: 4.19% 146.01m

Page: 3

------------------------------------------------------------------------

L2 ARC Summary: (DEGRADED)

Passed Headroom: 23.59m

Tried Lock Failures: 9.61m

IO In Progress: 9.16m

Low Memory Aborts: 249

Free on Write: 9.74m

Writes While Full: 499.02k

R/W Clashes: 0

Bad Checksums: 762.89m

IO Errors: 0

SPA Mismatch: 159.11m

L2 ARC Size: (Adaptive) 443.70 GiB

Header Size: 0.27% 1.19 GiB

L2 ARC Evicts:

Lock Retries: 6.18k

Upon Reading: 0

L2 ARC Breakdown: 3.49b

Hit Ratio: 37.55% 1.31b

Miss Ratio: 62.45% 2.18b

Feeds: 47.70m

L2 ARC Buffer:

Bytes Scanned: 1.08 PiB

Buffer Iterations: 47.70m

List Iterations: 190.81m

NULL List Iterations: 130.01m

L2 ARC Writes:

Writes Sent: 100.00% 15.41m

Page: 4

------------------------------------------------------------------------

DMU Prefetch Efficiency: 17.78b

Hit Ratio: 2.75% 489.48m

Miss Ratio: 97.25% 17.29b

Page: 5

------------------------------------------------------------------------

Page: 6

------------------------------------------------------------------------

ZFS Tunable (sysctl):

kern.maxusers 845

vm.kmem_size 8323047424

vm.kmem_size_scale 1

vm.kmem_size_min 0

vm.kmem_size_max 1319413950874

vfs.zfs.vol.unmap_enabled 1

vfs.zfs.vol.mode 2

vfs.zfs.sync_pass_rewrite 2

vfs.zfs.sync_pass_dont_compress 5

vfs.zfs.sync_pass_deferred_free 2

vfs.zfs.zio.dva_throttle_enabled 1

vfs.zfs.zio.exclude_metadata 0

vfs.zfs.zio.use_uma 1

vfs.zfs.zil_slog_limit 786432

vfs.zfs.cache_flush_disable 0

vfs.zfs.zil_replay_disable 0

vfs.zfs.version.zpl 5

vfs.zfs.version.spa 5000

vfs.zfs.version.acl 1

vfs.zfs.version.ioctl 7

vfs.zfs.debug 0

vfs.zfs.super_owner 0

vfs.zfs.min_auto_ashift 12

vfs.zfs.max_auto_ashift 13

vfs.zfs.vdev.queue_depth_pct 1000

vfs.zfs.vdev.write_gap_limit 4096

vfs.zfs.vdev.read_gap_limit 32768

vfs.zfs.vdev.aggregation_limit 131072

vfs.zfs.vdev.trim_max_active 64

vfs.zfs.vdev.trim_min_active 1

vfs.zfs.vdev.scrub_max_active 2

vfs.zfs.vdev.scrub_min_active 1

vfs.zfs.vdev.async_write_max_active 10

vfs.zfs.vdev.async_write_min_active 1

vfs.zfs.vdev.async_read_max_active 3

vfs.zfs.vdev.async_read_min_active 1

vfs.zfs.vdev.sync_write_max_active 10

vfs.zfs.vdev.sync_write_min_active 10

vfs.zfs.vdev.sync_read_max_active 10

vfs.zfs.vdev.sync_read_min_active 10

vfs.zfs.vdev.max_active 1000

vfs.zfs.vdev.async_write_active_max_dirty_percent 60

vfs.zfs.vdev.async_write_active_min_dirty_percent 30

vfs.zfs.vdev.mirror.non_rotating_seek_inc 1

vfs.zfs.vdev.mirror.non_rotating_inc 0

vfs.zfs.vdev.mirror.rotating_seek_offset 1048576

vfs.zfs.vdev.mirror.rotating_seek_inc 5

vfs.zfs.vdev.mirror.rotating_inc 0

vfs.zfs.vdev.trim_on_init 1

vfs.zfs.vdev.larger_ashift_minimal 0

vfs.zfs.vdev.bio_delete_disable 0

vfs.zfs.vdev.bio_flush_disable 0

vfs.zfs.vdev.cache.bshift 16

vfs.zfs.vdev.cache.size 0

vfs.zfs.vdev.cache.max 16384

vfs.zfs.vdev.metaslabs_per_vdev 200

vfs.zfs.vdev.trim_max_pending 10000

vfs.zfs.txg.timeout 5

vfs.zfs.trim.enabled 1

vfs.zfs.trim.max_interval 1

vfs.zfs.trim.timeout 30

vfs.zfs.trim.txg_delay 32

vfs.zfs.space_map_blksz 4096

vfs.zfs.spa_min_slop 134217728

vfs.zfs.spa_slop_shift 5

vfs.zfs.spa_asize_inflation 24

vfs.zfs.deadman_enabled 1

vfs.zfs.deadman_checktime_ms 5000

vfs.zfs.deadman_synctime_ms 1000000

vfs.zfs.debug_flags 0

vfs.zfs.recover 0

vfs.zfs.spa_load_verify_data 1

vfs.zfs.spa_load_verify_metadata 1

vfs.zfs.spa_load_verify_maxinflight 10000

vfs.zfs.ccw_retry_interval 300

vfs.zfs.check_hostid 1

vfs.zfs.mg_fragmentation_threshold 85

vfs.zfs.mg_noalloc_threshold 0

vfs.zfs.condense_pct 200

vfs.zfs.metaslab.bias_enabled 1

vfs.zfs.metaslab.lba_weighting_enabled 1

vfs.zfs.metaslab.fragmentation_factor_enabled 1

vfs.zfs.metaslab.preload_enabled 1

vfs.zfs.metaslab.preload_limit 3

vfs.zfs.metaslab.unload_delay 8

vfs.zfs.metaslab.load_pct 50

vfs.zfs.metaslab.min_alloc_size 33554432

vfs.zfs.metaslab.df_free_pct 4

vfs.zfs.metaslab.df_alloc_threshold 131072

vfs.zfs.metaslab.debug_unload 0

vfs.zfs.metaslab.debug_load 0

vfs.zfs.metaslab.fragmentation_threshold 70

vfs.zfs.metaslab.gang_bang 16777217

vfs.zfs.free_bpobj_enabled 1

vfs.zfs.free_max_blocks 18446744073709551615

vfs.zfs.no_scrub_prefetch 0

vfs.zfs.no_scrub_io 0

vfs.zfs.resilver_min_time_ms 3000

vfs.zfs.free_min_time_ms 1000

vfs.zfs.scan_min_time_ms 1000

vfs.zfs.scan_idle 50

vfs.zfs.scrub_delay 4

vfs.zfs.resilver_delay 2

vfs.zfs.top_maxinflight 32

vfs.zfs.delay_scale 500000

vfs.zfs.delay_min_dirty_percent 60

vfs.zfs.dirty_data_sync 67108864

vfs.zfs.dirty_data_max_percent 10

vfs.zfs.dirty_data_max_max 4294967296

vfs.zfs.dirty_data_max 855611392

vfs.zfs.max_recordsize 1048576

vfs.zfs.zfetch.array_rd_sz 1048576

vfs.zfs.zfetch.max_distance 8388608

vfs.zfs.zfetch.min_sec_reap 2

vfs.zfs.zfetch.max_streams 8

vfs.zfs.prefetch_disable 0

vfs.zfs.send_holes_without_birth_time 1

vfs.zfs.mdcomp_disable 0

vfs.zfs.nopwrite_enabled 1

vfs.zfs.dedup.prefetch 1

vfs.zfs.l2c_only_size 0

vfs.zfs.mfu_ghost_data_esize 3325952

vfs.zfs.mfu_ghost_metadata_esize 111502336

vfs.zfs.mfu_ghost_size 114828288

vfs.zfs.mfu_data_esize 5120

vfs.zfs.mfu_metadata_esize 0

vfs.zfs.mfu_size 95766528

vfs.zfs.mru_ghost_data_esize 2437057024

vfs.zfs.mru_ghost_metadata_esize 4690575872

vfs.zfs.mru_ghost_size 7127632896

vfs.zfs.mru_data_esize 0

vfs.zfs.mru_metadata_esize 0

vfs.zfs.mru_size 116867072

vfs.zfs.anon_data_esize 0

vfs.zfs.anon_metadata_esize 0

vfs.zfs.anon_size 7898624

vfs.zfs.l2arc_norw 1

vfs.zfs.l2arc_feed_again 1

vfs.zfs.l2arc_noprefetch 1

vfs.zfs.l2arc_feed_min_ms 200

vfs.zfs.l2arc_feed_secs 1

vfs.zfs.l2arc_headroom 2

vfs.zfs.l2arc_write_boost 8388608

vfs.zfs.l2arc_write_max 8388608

vfs.zfs.arc_meta_limit 1812326400

vfs.zfs.arc_free_target 14135

vfs.zfs.compressed_arc_enabled 1

vfs.zfs.arc_shrink_shift 7

vfs.zfs.arc_average_blocksize 8192

vfs.zfs.arc_min 906163200

vfs.zfs.arc_max 7249305600

Page: 7

------------------------------------------------------------------------

[root@fn1] ~#

This box mostly handles emails and databases.

@mpyusko

"(How can 8GB RAM equal 163 GB ARC and a 240 GB SSD equal a 443 GB L2ARC???)"

Possibly a UI bug, although ARC and L2ARC both show values that take compression into account. 20:1 compression on your ARC would imply you've got something extremely compressible loaded there, though. arc_summary.py may show some insight there as well.
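As a quick sanity check on that ratio, the sketch below divides the reported sizes from the posted arc_summary.py output by the physical limits. It assumes the "240 GB" SSD is 240 decimal gigabytes and uses the ARC Max Size (6.75 GiB) as the RAM actually available to the ARC. The helper name `implied_ratio` is made up for illustration.

```python
GIB = 1024 ** 3  # arc_summary.py reports sizes in binary units

# Values copied from the arc_summary.py output in the first post.
arc_reported = 163.88 * GIB    # "ARC Size"
arc_max = 6.75 * GIB           # "Max Size (High Water)"
l2arc_reported = 443.70 * GIB  # "L2 ARC Size"
l2arc_device = 240e9           # 240 GB SSD (assumed decimal GB)


def implied_ratio(reported_bytes, physical_bytes):
    """Ratio the data would need to compress at for the report to be true."""
    return reported_bytes / physical_bytes


arc_ratio = implied_ratio(arc_reported, arc_max)
l2arc_ratio = implied_ratio(l2arc_reported, l2arc_device)

print(f"ARC:   {arc_ratio:.1f}:1")
print(f"L2ARC: {l2arc_ratio:.1f}:1")
```

The ARC figure comes out closer to 24:1 than 20:1, which lines up with the 2427.36% shown next to ARC Size. The L2ARC would need roughly 2:1. That is plausible for email and database data, while 24:1 in RAM looks more like an accounting problem than real compression.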