sfcredfox

Patron · Joined Aug 26, 2014 · Messages: 340

Experts,

I can't figure out what's going on with ARC on my system. I imagine I have to be doing something wrong, but can't figure out what it is.

System config in signature below.

I have 72GB of RAM. ARC starts in the low 60GB range when the system first loads, but it ends up at around 12-13GB after a long period of time.

The system has three pools. Two of the pools each have a SLOG and an L2ARC; the L2ARC is a 120GB SSD for each pool (two 120GB SSDs in total). These two pools are used for VMware connected over FC and hold a complete range of Windows Server VMs (DCs, SQL, Exchange, file servers, etc.).

I figured I was smoking the ARC by having L2ARCs that are too large, but if I'm reading the summary output correctly, the L2ARC should only be using 821MB of it, right? (L2 ARC Size / Header Size?)

I have included ARC Summary report:

I also keep seeing an error in the output:

    : main()
      File "/usr/local/www/freenasUI/tools/arc_summary.py", line 1197, in main
        _call_all(Kstat)
      File "/usr/local/www/freenasUI/tools/arc_summary.py", line 1153, in _call_all
        unsub(Kstat)
      File "/usr/local/www/freenasUI/tools/arc_summary.py", line 926, in _l2arc_summary
        if int(arc["l2_arc_evicts"]['lock_retries']) + int(arc["l2_arc_evicts"]["reading"]) > 0:
    ValueError: invalid literal for int() with base 10: '4.42k'
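From the message, it looks like `int()` is being handed a counter that has already been formatted for display (`'4.42k'`) rather than a raw number. Here is a minimal sketch of what I think is happening and how such a string could be converted back. `parse_count` is a hypothetical helper of my own, not something in `arc_summary.py`, and I'm assuming the `k`/`m`/`b`/`t` suffixes are decimal multipliers, as the rest of the report seems to use them:

```python
# Hypothetical helper, NOT part of arc_summary.py.
# Assumption: suffixes are decimal multipliers (k = 10^3, m = 10^6, ...).
SUFFIXES = {"k": 10**3, "m": 10**6, "b": 10**9, "t": 10**12}


def parse_count(text):
    """Convert a display string such as '4.42k' back to an approximate int."""
    text = text.strip().lower()
    if text and text[-1] in SUFFIXES:
        # Strip the suffix, scale the number, and round to a whole count.
        return int(round(float(text[:-1]) * SUFFIXES[text[-1]]))
    return int(text)


def reproduces_error(text):
    """Return True if plain int() rejects the string, as in the traceback."""
    try:
        int(text)
    except ValueError:
        return True
    return False


if __name__ == "__main__":
    print(reproduces_error("4.42k"))  # int() cannot parse the suffix
    print(parse_count("4.42k"))
    print(parse_count("161"))
```

The converted value is only approximate, since the display string has already been rounded to two decimals.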

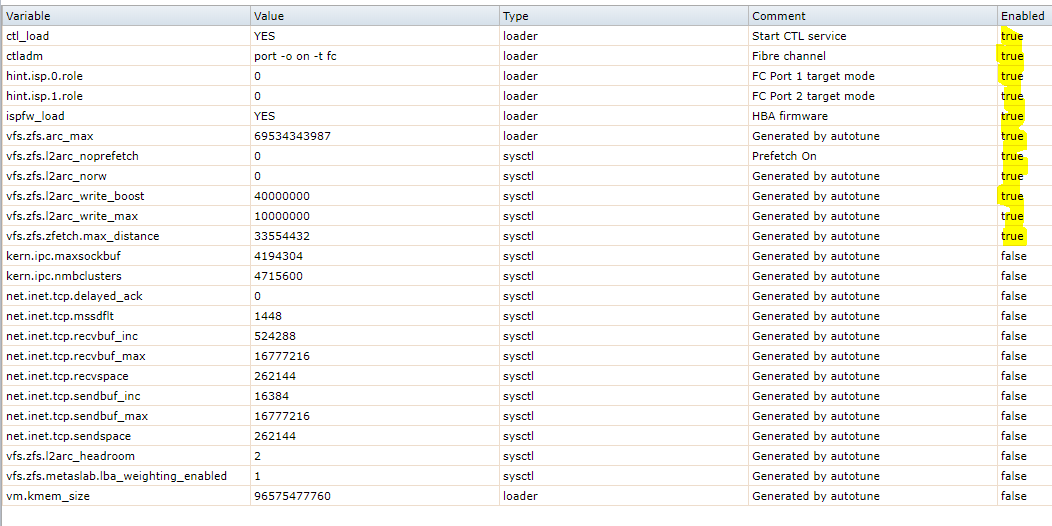

Here's the tunables set:

Notice that most of them are disabled, but after trying to research all the settings, I can't figure out what would be telling it to use 40-50 some GB of ARC.

1. What other reports can I run or where can we look to see why there is only 13GB of ARC rather than 60ish?

2. Should I ditch L2ARC based on your opinion of summary output? I've read so many posts and pages of people discussing L2 and performance and still can't get a full understanding of how to evaluate whether it's doing me any good or not. I guess I'm too stupid to understand some of the postings about it, sorry :/

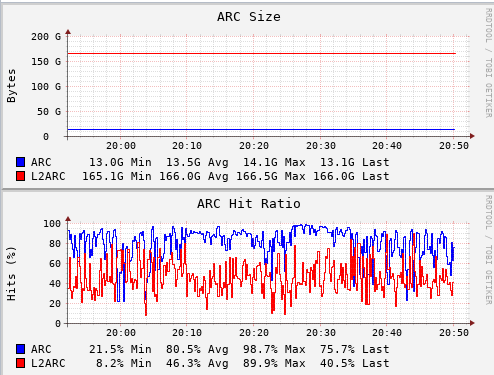

ARC GUI Report:

I have included ARC Summary report:

Code:

    root@Carmel-SANG2:~ # arc_summary.py
    System Memory:

            0.05%   32.40   MiB Active,     0.30%   218.56  MiB Inact
            98.66%  69.20   GiB Wired,      0.00%   0       Bytes Cache
            0.92%   659.08  MiB Free,       0.08%   55.41   MiB Gap

            Real Installed:                         80.00   GiB
            Real Available:                 89.94%  71.95   GiB
            Real Managed:                   97.48%  70.14   GiB

            Logical Total:                          80.00   GiB
            Logical Used:                   98.93%  79.14   GiB
            Logical Free:                   1.07%   877.64  MiB

    Kernel Memory:                                  1.07    GiB
            Data:                           96.52%  1.04    GiB
            Text:                           3.48%   38.23   MiB

    Kernel Memory Map:                              70.14   GiB
            Size:                           2.15%   1.51    GiB
            Free:                           97.85%  68.63   GiB
                                                                    Page:  1
    ------------------------------------------------------------------------

    ARC Summary: (HEALTHY)
            Storage pool Version:                   5000
            Filesystem Version:                     5
            Memory Throttle Count:                  0

    ARC Misc:
            Deleted:                                153.38m
            Mutex Misses:                           159.79k
            Evict Skips:                            159.79k

    ARC Size:                               20.72%  13.42   GiB
            Target Size: (Adaptive)         20.85%  13.51   GiB
            Min Size (Hard Limit):          12.50%  8.09    GiB
            Max Size (High Water):          8:1     64.76   GiB

    ARC Size Breakdown:
            Recently Used Cache Size:       85.44%  11.54   GiB
            Frequently Used Cache Size:     14.56%  1.97    GiB

    ARC Hash Breakdown:
            Elements Max:                           18.54m
            Elements Current:               65.89%  12.22m
            Collisions:                             415.29m
            Chain Max:                              11
            Chains:                                 2.78m
                                                                    Page:  2
    ------------------------------------------------------------------------

    ARC Total accesses:                             740.13m
            Cache Hit Ratio:                55.99%  414.38m
            Cache Miss Ratio:               44.01%  325.76m
            Actual Hit Ratio:               49.10%  363.43m

            Data Demand Efficiency:         60.55%  371.00m
            Data Prefetch Efficiency:       51.91%  176.86m

            CACHE HITS BY CACHE LIST:
              Anonymously Used:             5.95%   24.66m
              Most Recently Used:           43.35%  179.65m
              Most Frequently Used:         44.35%  183.78m
              Most Recently Used Ghost:     1.96%   8.12m
              Most Frequently Used Ghost:   4.38%   18.16m

            CACHE HITS BY DATA TYPE:
              Demand Data:                  54.21%  224.64m
              Prefetch Data:                22.16%  91.81m
              Demand Metadata:              22.68%  93.98m
              Prefetch Metadata:            0.95%   3.95m

            CACHE MISSES BY DATA TYPE:
              Demand Data:                  44.93%  146.37m
              Prefetch Data:                26.11%  85.05m
              Demand Metadata:              28.66%  93.35m
              Prefetch Metadata:            0.30%   988.93k
                                                                    Page:  3
    ------------------------------------------------------------------------

    L2 ARC Summary: (HEALTHY)
            Passed Headroom:                        5.93m
            Tried Lock Failures:                    5.72m
            IO In Progress:                         9
            Low Memory Aborts:                      161
            Free on Write:                          145.50k
            Writes While Full:                      19.10k
            R/W Clashes:                            0
            Bad Checksums:                          0
            IO Errors:                              0
            SPA Mismatch:                           8.65b

    L2 ARC Size: (Adaptive)                         166.25  GiB
            Header Size:                    0.48%   821.29  MiB
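To sanity-check how I'm reading those percentages, here is a rough sketch that recomputes a few of them from the rounded figures in the report. The variable names are mine, and I'm assuming the ARC percentage is relative to Max Size and the Header Size percentage is relative to L2 ARC Size. Because the inputs are already rounded, the results should only match the report to about two decimals:

```python
MIB_PER_GIB = 1024


def pct(part, whole):
    """Return part as a percentage of whole."""
    return 100.0 * part / whole


# Figures copied from the arc_summary.py report (already rounded).
arc_size_gib = 13.42     # ARC Size
arc_max_gib = 64.76      # Max Size (High Water)
l2_size_gib = 166.25     # L2 ARC Size (Adaptive)
l2_header_mib = 821.29   # L2 ARC Header Size
cache_hits_m = 414.38    # Cache Hit Ratio count, in millions
accesses_m = 740.13      # ARC Total accesses, in millions

# Assumption: ARC Size % is measured against Max Size (report: 20.72%).
arc_fill = pct(arc_size_gib, arc_max_gib)

# Assumption: Header Size % is measured against L2 ARC Size (report: 0.48%).
header_vs_l2 = pct(l2_header_mib, l2_size_gib * MIB_PER_GIB)

# Not shown in the report: L2ARC headers as a share of the current ARC.
header_vs_arc = pct(l2_header_mib, arc_size_gib * MIB_PER_GIB)

# Cache Hit Ratio (report: 55.99%).
hit_ratio = pct(cache_hits_m, accesses_m)

if __name__ == "__main__":
    print("ARC size vs max:        %.2f%%" % arc_fill)
    print("L2 headers vs L2 size:  %.2f%%" % header_vs_l2)
    print("L2 headers vs ARC size: %.2f%%" % header_vs_arc)
    print("Cache hit ratio:        %.2f%%" % hit_ratio)
```

If that reading is right, the L2ARC headers are a small slice of even the shrunken ARC, so they don't look like the thing eating the missing ~50GB.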

