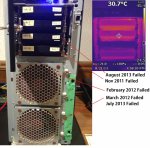

I am looking for advice on my next step for drive replacement, as a large percentage of my Western Digital 2 TB drives are failing. First, some background on my setup. I am currently running FreeNAS 8.2 x64 with 8 GB of RAM on an E8400 (I know more is recommended, but this setup goes back 3 years) and RAIDZ1, which I know is not recommended. I have a total of 10 x 2 TB WD Green drives with IntelliPark disabled to avoid unnecessary head parks. The system has been operating for 2 ½ years. Within the first year, 3 drives failed and I replaced them. Approaching my 3rd year, I had another 2 drives fail: one two weeks ago, which I replaced with a new drive, and one a few days ago. To make matters worse, the brand new drive's controller board is gone. The drives run at around 32-35 °C.

I know my settings are not optimal by today's standards, and scrubbing occurs once a month. That being said, is there something I am missing that would explain such a high failure rate? Reading cyberjock's posts about 24 drives with only 1 failing within 3 years is amazing. I am considering a new build with more RAM, and WD RE drives if necessary. Is the failure rate due to a setting or hardware configuration issue, or is it just bad luck? The server is only used for streaming, nothing really intensive.
