I am sure that there are scars associated with that comment.

Actually, no; I own a number of pieces of gear with 10GBase-T ports, including a very nice X9DR7-TF+ board and some Dell PowerConnect 8024F switches. These were basically incidental acquisitions that I did not deliberately seek out, and I generally use the ports as conventional 1G copper ports.

The most immediate arguments against 10GBase-T are:

1) that it consumes more power than an equivalent SFP+ or DAC setup. That might seem like a *shrug*, except that once you are burning several extra watts per port on a 48-port switch, it becomes a meaningful ongoing operational expense.

Newer estimates put 10GBase-T at two to five watts per port, whereas SFP+ is about 0.5 to 0.7 watts. It is worth noting that in a data center environment, every extra five watts you burn in equipment usually costs another five to ten watts of cooling. The electrical costs for 10GBase-T add up.

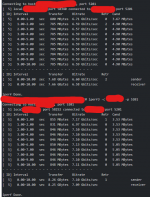
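To put rough numbers on the power argument, here is a minimal sketch of the yearly electricity cost of the extra draw, cooling included. The per-port wattage and the 1x to 2x cooling overhead come from the figures above; the port count, the round-the-clock duty cycle, and the $0.12/kWh electricity price are hypothetical placeholders you should replace with your own.

```python
HOURS_PER_YEAR = 24 * 365  # assumes the switch runs around the clock


def annual_extra_cost(ports: int,
                      extra_watts_per_port: float,
                      cooling_factor: float,
                      price_per_kwh: float) -> float:
    """Yearly cost of the extra per-port draw, including cooling.

    cooling_factor is watts of cooling per watt of equipment load;
    the post suggests roughly 1 to 2 (5 W of gear -> 5 to 10 W of cooling).
    """
    equipment_w = ports * extra_watts_per_port
    total_w = equipment_w * (1 + cooling_factor)     # gear plus cooling
    kwh_per_year = total_w * HOURS_PER_YEAR / 1000   # W*h -> kWh
    return kwh_per_year * price_per_kwh


if __name__ == "__main__":
    # Worst case from the quoted figures: 5 W vs 0.5 W, i.e. 4.5 W extra
    # per port on a 48-port switch, at a hypothetical $0.12/kWh.
    for cooling in (1.0, 2.0):
        cost = annual_extra_cost(48, 4.5, cooling, 0.12)
        print(f"cooling factor {cooling}: ${cost:,.2f} per year")
```

That works out to a few hundred dollars per switch per year under these assumptions, and it recurs for the life of the gear.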

2) that it experiences higher latency than an SFP+ or DAC setup. SFP+ is typically about 300 nanoseconds. 10GBase-T, on the other hand, uses PHY block encoding, so there is a roughly 3 microsecond delay (perhaps 2.5 us more than SFP+). This shows up as additional latency in 10GBase-T based networks, which is undesirable, especially given that the topic of this thread is performance maximization. I'm sure someone will point out that it isn't a major hit. True, but it's there regardless.
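The latency penalty compounds with every copper link a packet crosses. A minimal sketch, assuming the ~300 ns and ~3 us figures above apply once per link, and ignoring switch forwarding and serialization time (which are the same for both media); the two-link host-to-switch-to-host path is a hypothetical example.

```python
# Approximate per-link PHY latency figures quoted above, in nanoseconds.
SFP_PLUS_NS = 300
TEN_GBASE_T_NS = 3_000


def extra_latency_ns(links_per_direction: int, round_trip: bool = True) -> int:
    """Added latency of 10GBase-T over SFP+ for a path.

    Assumes each link traversal costs one PHY delay; a round trip
    crosses every link twice.
    """
    traversals = links_per_direction * (2 if round_trip else 1)
    return traversals * (TEN_GBASE_T_NS - SFP_PLUS_NS)


if __name__ == "__main__":
    # Hypothetical path: host -> top-of-rack switch -> host (2 links).
    print(extra_latency_ns(2), "ns extra per round trip")
```

Under these assumptions a simple request/response between two hosts on the same switch picks up about 10.8 us, which is small in absolute terms but not negligible next to a sub-microsecond SFP+ link.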

3) that people argue for 10GBase-T because they've already got copper physical plant. The problem is that this is generally a stupid argument. Unless you installed Cat7 years ago, your copper plant is unlikely to be suitable for carrying 10GBase-T at distance, and that janky old 5e or 6 needs to be replaced. Today's kids did not live through the trauma of the '90s, when we went from Cat3 to Cat5 to Cat5e as Ethernet evolved from 10 to 100 to 1000 Mbps. Replacing physical plant is not trivial, and making 10GBase-T run at 100 meters from the switch is very rough: Cat6 won't cut it (only 55 m), so you need Cat6A or Cat7 and an installer with testing/certification gear, because all four pairs have to be virtually perfect, and a problem with any one pair can render the connection dead.

By way of comparison, fiber is very friendly. OM4 can carry 10G hundreds of meters very efficiently. It's easy to work with, inexpensive to stock in various patch cable lengths, and you can get it in milspec variants that are resistant to damage. In many cases you can run it well past the standards-specified maximum length.
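The reach figures from the copper and fiber paragraphs above can be collected into a small lookup for sanity-checking a planned run. This is a sketch, assuming nominal 10GBASE-T reach for the copper categories and nominal 10GBASE-SR reach for OM3/OM4 multimode; the medium names and the helper itself are hypothetical. Passing the check says only that the run is within spec on paper; as noted above, an installed copper run still has to test clean on all four pairs.

```python
from typing import Dict, Optional

# Nominal maximum 10 Gb/s reach in meters.
# Copper: 10GBASE-T. Fiber: 10GBASE-SR over multimode.
MAX_10G_REACH_M: Dict[str, Optional[int]] = {
    "cat5e": None,  # not specified for 10GBASE-T
    "cat6": 55,
    "cat6a": 100,
    "cat7": 100,
    "om3": 300,
    "om4": 400,
}


def within_10g_spec(medium: str, length_m: float) -> bool:
    """True if a run of this medium and length is within nominal 10G reach.

    Unknown or unsupported media return False.
    """
    reach = MAX_10G_REACH_M.get(medium.lower())
    if reach is None:
        return False
    return length_m <= reach


if __name__ == "__main__":
    for medium, length in [("cat6", 80), ("cat6a", 80), ("om4", 350)]:
        print(medium, length, "m:", within_10g_spec(medium, length))
```

An 80 m run that fails on existing Cat6 but passes on Cat6A is exactly the "rip out the old plant" scenario described above, while OM4 has several hundred meters of headroom.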

On the flip side, 10GBase-T has the advantage of being a familiar sort of plug and play that generally doesn't require extra bits like SFP+ modules and may be easier to work with inside a rack.

I am starting to see more of my customers wanting to use 10GBase-T

I think the big driver for many people is the familiarity thing I just mentioned; they can wrap their heads around 10GBase-T because at a superficial level it feels very much like 1GbE. There's a lot of FUD about fiber and fiber installers that has slowly percolated into the industry over the last few decades, because terminating fiber in the field is specialist work that requires expensive gear and supplies. However, these days you can often cheat and buy factory-terminated, prebuilt assemblies that avoid field termination entirely. Very easy to work with.