indy

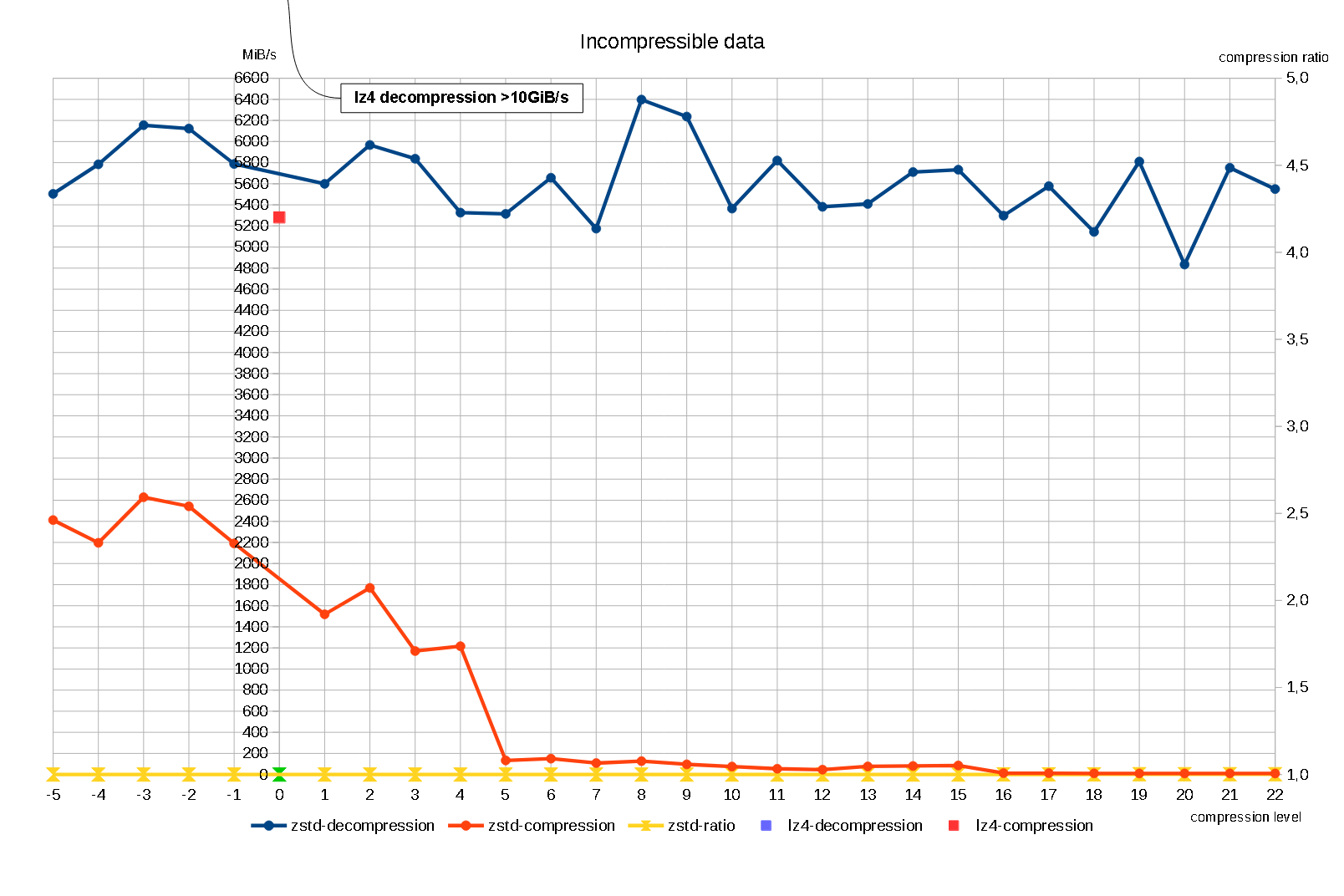

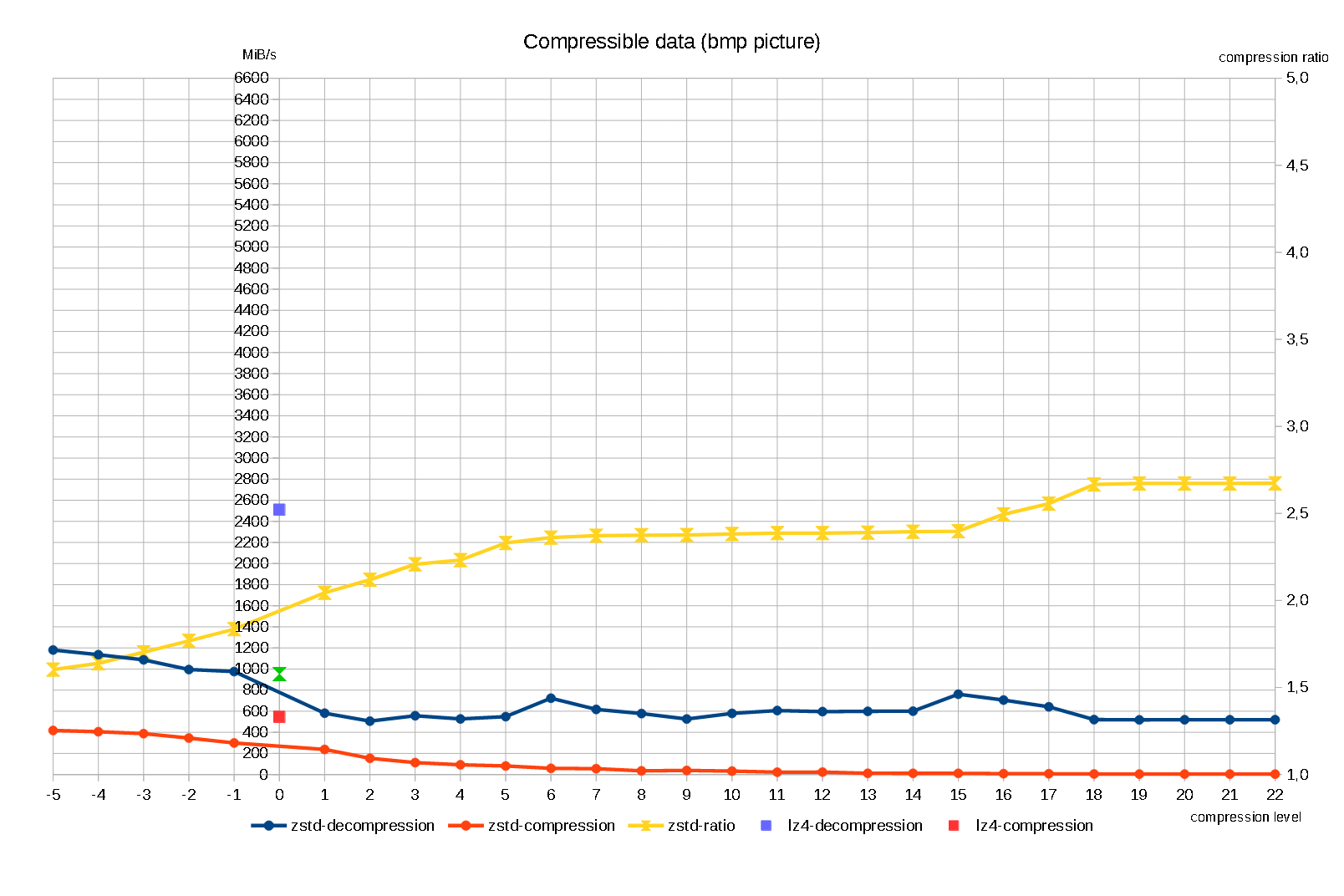

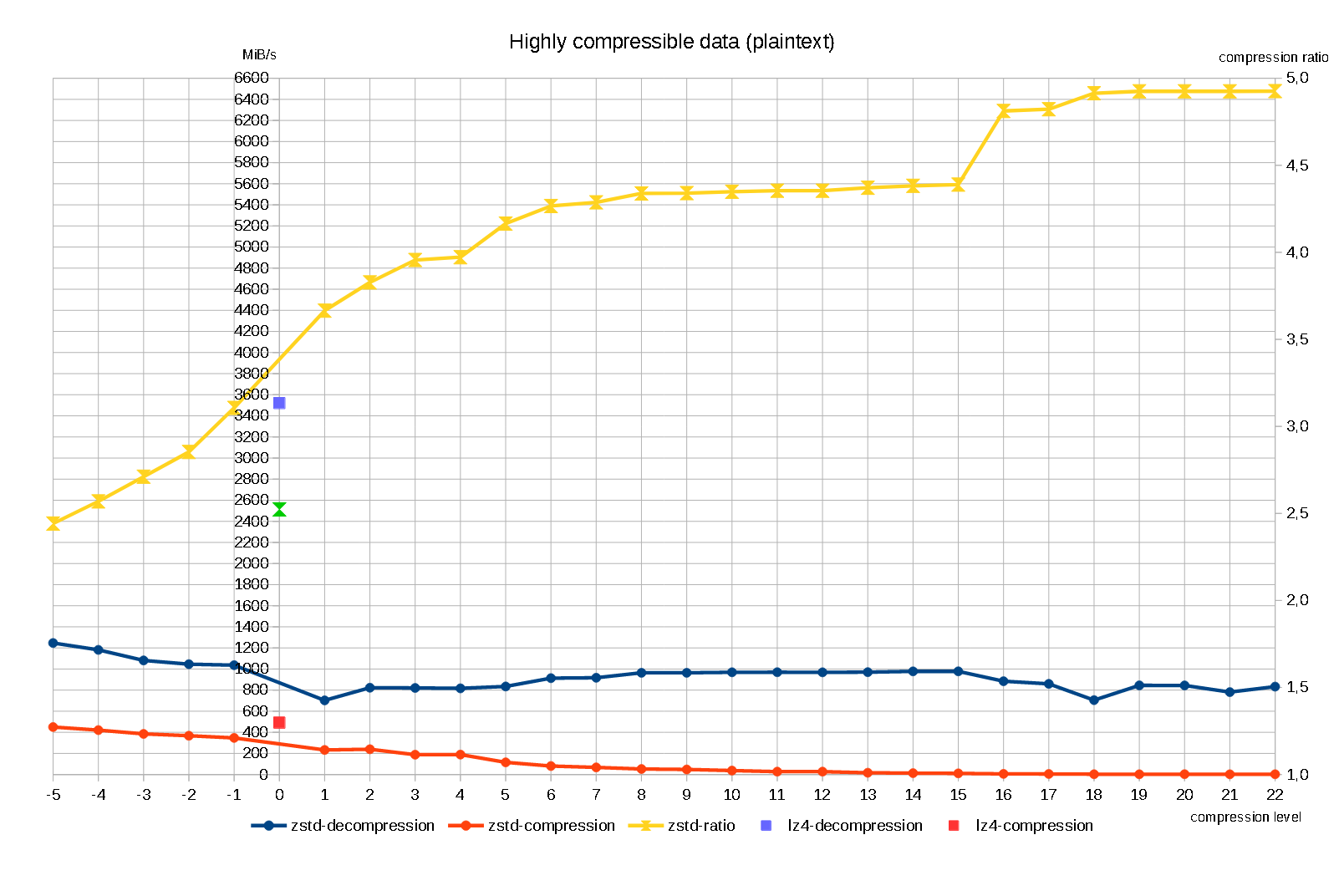

I did some benchmarks to illustrate the performance characteristics of zstd with different data types.

The focus is on 10G network speeds and readability/comparability (hence the lack of resolution at lower speeds).
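For reference, the arithmetic behind the 10G target can be sketched as follows. This assumes decimal units (1 MB = 1,000,000 bytes) and ignores protocol overhead, so real-world throughput will be somewhat lower. The function names are made up for illustration.

```python
# Convert a nominal network line rate into the MB/s unit used on the speed axis.
# Assumptions: decimal units (1 MB = 1,000,000 bytes), no protocol overhead.

def line_rate_mb_per_s(gbit_per_s: float) -> float:
    """Return the line rate in MB/s for a link speed given in Gbit/s."""
    bits_per_s = gbit_per_s * 1_000_000_000
    bytes_per_s = bits_per_s / 8
    return bytes_per_s / 1_000_000


TEN_GBE_MB_S = line_rate_mb_per_s(10)


def keeps_up(speed_mb_s: float, line_rate_mb_s: float = TEN_GBE_MB_S) -> bool:
    """True if a measured speed is at least the nominal line rate."""
    return speed_mb_s >= line_rate_mb_s


print(TEN_GBE_MB_S)      # 1250.0
print(keeps_up(1769.2))  # True  (zstd level 2, incompressible data, compression)
print(keeps_up(133.8))   # False (zstd level 5, incompressible data, compression)
```

So anything plotted above roughly 1250 MB/s can, in principle, saturate a 10G link from a single thread.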

How to read these graphs?

X-axis is the compression level.

Major Y-axis is the speed in MB/s.

Minor Y-axis is the compression ratio.

Data points for lz4 sit at 0 on the x-axis.

Points of interest (in my opinion):

- Different types of data produce widely different results.

- Decompression speeds are generally pretty flat.

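- There is no "early abort", and compression speed for incompressible data drops off massively past level 4 (from roughly 1,200 MB/s at level 4 to roughly 130 MB/s at level 5).

Decompression of incompressible data is very fast at any compression level, though (roughly 4.8 to 6.4 GB/s in these runs).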
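To put a number on that drop-off, here is a small sketch that compares compression speed at level 4 and level 5 for the three data types. The speeds are copied from the results in this post; the dictionary layout and the `slowdown` helper are only illustrative.

```python
# Compression speeds in MB/s at zstd levels 4 and 5, copied from this post's results.
# Tuple layout (level 4, level 5) is an arbitrary choice for this sketch.
speeds = {
    "incompressible": (1216.4, 133.8),
    "compressible": (92.5, 81.1),
    "highly compressible": (189.1, 115.6),
}


def slowdown(before: float, after: float) -> float:
    """How many times slower 'after' is compared to 'before'."""
    return before / after


for name, (level4, level5) in speeds.items():
    print(f"{name}: level 4 -> 5 is {slowdown(level4, level5):.1f}x slower")
```

On these numbers the step from level 4 to 5 costs about 9x on incompressible data, compared with under 2x on the two compressible data sets.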

Some notes:

- Tests were run on Windows, single-threaded, on an E5-1650 v4 (3.6-4 GHz) with hyperthreading disabled.

- Values in this benchmark will not be directly applicable to the ZFS implementation of zstd.

Code:

    zstd command line interface 64-bits v1.5.2, by Yann Collet
    LZ4 command line interface 64-bits v1.9.3, by Yann Collet

    zstd incompressible data
    -5# 10 files : 10485760 -> 10486100 (x1.000), 2412.0 MB/s, 5504.3 MB/s
    -4# 10 files : 10485760 -> 10486100 (x1.000), 2196.4 MB/s, 5784.0 MB/s
    -3# 10 files : 10485760 -> 10486100 (x1.000), 2628.6 MB/s, 6155.0 MB/s
    -2# 10 files : 10485760 -> 10486100 (x1.000), 2542.5 MB/s, 6123.0 MB/s
    -1# 10 files : 10485760 -> 10483774 (x1.000), 2190.4 MB/s, 5787.5 MB/s
     0# 10 files : 10485760 -> 10483695 (x1.000), 1271.4 MB/s, 6182.7 MB/s
     1# 10 files : 10485760 -> 10483892 (x1.000), 1518.6 MB/s, 5599.0 MB/s
     2# 10 files : 10485760 -> 10483578 (x1.000), 1769.2 MB/s, 5967.2 MB/s
     3# 10 files : 10485760 -> 10483695 (x1.000), 1170.5 MB/s, 5837.1 MB/s
     4# 10 files : 10485760 -> 10483675 (x1.000), 1216.4 MB/s, 5326.1 MB/s
     5# 10 files : 10485760 -> 10480770 (x1.000), 133.8 MB/s, 5314.4 MB/s
     6# 10 files : 10485760 -> 10480733 (x1.000), 150.6 MB/s, 5656.6 MB/s
     7# 10 files : 10485760 -> 10480733 (x1.000), 108.6 MB/s, 5174.9 MB/s
     8# 10 files : 10485760 -> 10480720 (x1.000), 127.0 MB/s, 6397.4 MB/s
     9# 10 files : 10485760 -> 10480720 (x1.000), 96.6 MB/s, 6236.6 MB/s
    10# 10 files : 10485760 -> 10480678 (x1.000), 75.7 MB/s, 5364.8 MB/s
    11# 10 files : 10485760 -> 10480677 (x1.000), 54.8 MB/s, 5820.1 MB/s
    12# 10 files : 10485760 -> 10480677 (x1.000), 46.2 MB/s, 5381.6 MB/s
    13# 10 files : 10485760 -> 10480696 (x1.000), 76.9 MB/s, 5408.3 MB/s
    14# 10 files : 10485760 -> 10480677 (x1.000), 80.9 MB/s, 5710.8 MB/s
    15# 10 files : 10485760 -> 10480673 (x1.000), 86.1 MB/s, 5733.1 MB/s
    16# 10 files : 10485760 -> 10480081 (x1.001), 13.2 MB/s, 5297.3 MB/s
    17# 10 files : 10485760 -> 10479840 (x1.001), 12.8 MB/s, 5577.4 MB/s
    18# 10 files : 10485760 -> 10472669 (x1.001), 11.0 MB/s, 5143.6 MB/s
    19# 10 files : 10485760 -> 10466424 (x1.002), 10.2 MB/s, 5810.3 MB/s
    20# 10 files : 10485760 -> 10466424 (x1.002), 9.94 MB/s, 4834.4 MB/s
    21# 10 files : 10485760 -> 10466424 (x1.002), 10.5 MB/s, 5751.4 MB/s
    22# 10 files : 10485760 -> 10466424 (x1.002), 9.77 MB/s, 5549.6 MB/s

    lz4 incompressible data
     1# 10 files : 10485760 -> 10519684 (0.997),5280.6 MB/s ,10384.7 MB/s

    zstd compressible data
    -5# 10 files : 10485760 -> 6539854 (x1.603), 417.8 MB/s, 1180.5 MB/s
    -4# 10 files : 10485760 -> 6398463 (x1.639), 405.8 MB/s, 1135.9 MB/s
    -3# 10 files : 10485760 -> 6160465 (x1.702), 387.7 MB/s, 1087.2 MB/s
    -2# 10 files : 10485760 -> 5929762 (x1.768), 346.0 MB/s, 995.3 MB/s
    -1# 10 files : 10485760 -> 5715385 (x1.835), 299.1 MB/s, 976.1 MB/s
     0# 10 files : 10485760 -> 4750055 (x2.208), 119.7 MB/s, 598.0 MB/s
     1# 10 files : 10485760 -> 5129637 (x2.044), 237.9 MB/s, 581.4 MB/s
     2# 10 files : 10485760 -> 4949014 (x2.119), 153.7 MB/s, 506.1 MB/s
     3# 10 files : 10485760 -> 4750055 (x2.208), 113.1 MB/s, 557.2 MB/s
     4# 10 files : 10485760 -> 4697633 (x2.232), 92.5 MB/s, 526.9 MB/s
     5# 10 files : 10485760 -> 4500404 (x2.330), 81.1 MB/s, 548.7 MB/s
     6# 10 files : 10485760 -> 4441009 (x2.361), 58.7 MB/s, 723.6 MB/s
     7# 10 files : 10485760 -> 4419852 (x2.372), 55.4 MB/s, 617.4 MB/s
     8# 10 files : 10485760 -> 4415145 (x2.375), 35.0 MB/s, 578.0 MB/s
     9# 10 files : 10485760 -> 4413466 (x2.376), 38.9 MB/s, 526.4 MB/s
    10# 10 files : 10485760 -> 4401460 (x2.382), 32.6 MB/s, 579.5 MB/s
    11# 10 files : 10485760 -> 4392615 (x2.387), 22.1 MB/s, 606.7 MB/s
    12# 10 files : 10485760 -> 4392615 (x2.387), 23.0 MB/s, 596.4 MB/s
    13# 10 files : 10485760 -> 4388202 (x2.390), 11.6 MB/s, 599.1 MB/s
    14# 10 files : 10485760 -> 4377685 (x2.395), 11.3 MB/s, 600.8 MB/s
    15# 10 files : 10485760 -> 4372413 (x2.398), 11.2 MB/s, 761.5 MB/s
    16# 10 files : 10485760 -> 4202809 (x2.495), 8.28 MB/s, 705.9 MB/s
    17# 10 files : 10485760 -> 4102566 (x2.556), 7.44 MB/s, 642.1 MB/s
    18# 10 files : 10485760 -> 3933105 (x2.666), 5.27 MB/s, 520.7 MB/s
    19# 10 files : 10485760 -> 3924082 (x2.672), 4.24 MB/s, 518.4 MB/s
    20# 10 files : 10485760 -> 3924082 (x2.672), 4.23 MB/s, 519.3 MB/s
    21# 10 files : 10485760 -> 3923856 (x2.672), 4.11 MB/s, 519.1 MB/s
    22# 10 files : 10485760 -> 3923462 (x2.673), 3.93 MB/s, 519.6 MB/s

    lz4 compressible data
     1# 10 files : 10485760 -> 6648416 (1.577), 546.2 MB/s ,2509.9 MB/s

    zstd highly compressible data
    -5# 10 files : 10485760 -> 4293607 (x2.442), 450.3 MB/s, 1246.7 MB/s
    -4# 10 files : 10485760 -> 4082081 (x2.569), 421.1 MB/s, 1181.6 MB/s
    -3# 10 files : 10485760 -> 3867266 (x2.711), 385.2 MB/s, 1081.6 MB/s
    -2# 10 files : 10485760 -> 3674702 (x2.853), 367.9 MB/s, 1045.9 MB/s
    -1# 10 files : 10485760 -> 3376007 (x3.106), 347.2 MB/s, 1036.6 MB/s
     0# 10 files : 10485760 -> 2650468 (x3.956), 162.0 MB/s, 759.5 MB/s
     1# 10 files : 10485760 -> 2860865 (x3.665), 232.9 MB/s, 702.9 MB/s
     2# 10 files : 10485760 -> 2740125 (x3.827), 239.7 MB/s, 823.0 MB/s
     3# 10 files : 10485760 -> 2650468 (x3.956), 188.0 MB/s, 820.8 MB/s
     4# 10 files : 10485760 -> 2639808 (x3.972), 189.1 MB/s, 817.1 MB/s
     5# 10 files : 10485760 -> 2517311 (x4.165), 115.6 MB/s, 834.8 MB/s
     6# 10 files : 10485760 -> 2457762 (x4.266), 80.6 MB/s, 913.6 MB/s
     7# 10 files : 10485760 -> 2445663 (x4.287), 67.6 MB/s, 917.5 MB/s
     8# 10 files : 10485760 -> 2416958 (x4.338), 52.4 MB/s, 964.3 MB/s
     9# 10 files : 10485760 -> 2416308 (x4.340), 48.0 MB/s, 964.3 MB/s
    10# 10 files : 10485760 -> 2411428 (x4.348), 37.6 MB/s, 969.2 MB/s
    11# 10 files : 10485760 -> 2408209 (x4.354), 27.5 MB/s, 969.9 MB/s
    12# 10 files : 10485760 -> 2408209 (x4.354), 27.5 MB/s, 968.9 MB/s
    13# 10 files : 10485760 -> 2398818 (x4.371), 16.8 MB/s, 970.4 MB/s
    14# 10 files : 10485760 -> 2393152 (x4.382), 13.3 MB/s, 977.9 MB/s
    15# 10 files : 10485760 -> 2388939 (x4.389), 11.0 MB/s, 978.1 MB/s
    16# 10 files : 10485760 -> 2179098 (x4.812), 6.29 MB/s, 884.5 MB/s
    17# 10 files : 10485760 -> 2174662 (x4.822), 5.48 MB/s, 859.5 MB/s
    18# 10 files : 10485760 -> 2134027 (x4.914), 3.02 MB/s, 705.2 MB/s
    19# 10 files : 10485760 -> 2128916 (x4.925), 2.47 MB/s, 845.1 MB/s
    20# 10 files : 10485760 -> 2128916 (x4.925), 3.28 MB/s, 844.6 MB/s
    21# 10 files : 10485760 -> 2128916 (x4.925), 2.28 MB/s, 781.3 MB/s
    22# 10 files : 10485760 -> 2128665 (x4.926), 2.73 MB/s, 833.3 MB/s

    lz4 highly compressible data
     1# 10 files : 10485760 -> 4156863 (2.523), 491.6 MB/s ,3520.6 MB/s
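If anyone wants to re-plot these numbers, here is a rough sketch of a parser for the result lines above. The regular expression is my own guess at the layout as pasted here (level, `#`, label, sizes, ratio, two speeds), not an official format description, and the field names are made up. It uses `finditer`, so it works on the text whether or not the lines are separated by newlines.

```python
import re

# One result entry as it appears in this post, e.g.
#   3# 10 files : 10485760 -> 4750055 (x2.208), 113.1 MB/s, 557.2 MB/s
# Also tolerates the lz4 style "(1.577), 546.2 MB/s ,2509.9 MB/s"
# and entries where the comma between the two speeds is missing.
LINE_RE = re.compile(
    r"(?P<level>-?\d+)#\s*(?P<name>[^:]+?)\s*:\s*"
    r"(?P<src>\d+)\s*->\s*(?P<dst>\d+)\s*"
    r"\(x?(?P<ratio>[\d.]+)\)\s*,\s*"
    r"(?P<comp>[\d.]+)\s*MB/s\s*,?\s*"
    r"(?P<decomp>[\d.]+)\s*MB/s"
)


def parse_results(text):
    """Return one dict per result entry found in the pasted benchmark text."""
    rows = []
    for m in LINE_RE.finditer(text):
        src, dst = int(m["src"]), int(m["dst"])
        rows.append({
            "level": int(m["level"]),
            "src_bytes": src,
            "dst_bytes": dst,
            "ratio_reported": float(m["ratio"]),
            "ratio_computed": src / dst,  # recomputed from the byte counts
            "comp_mb_s": float(m["comp"]),
            "decomp_mb_s": float(m["decomp"]),
        })
    return rows


sample = """\
 3# 10 files : 10485760 -> 4750055 (x2.208), 113.1 MB/s, 557.2 MB/s
-3# 10 files : 10485760 -> 10486100 (x1.000), 2628.6 MB/s 6155.0 MB/s
 1# 10 files : 10485760 -> 6648416 (1.577), 546.2 MB/s ,2509.9 MB/s
"""

rows = parse_results(sample)
for row in rows:
    print(row["level"], round(row["ratio_computed"], 3),
          row["comp_mb_s"], row["decomp_mb_s"])
```

The parser does not keep track of which section (zstd or lz4, which data type) an entry belongs to, so the text would have to be split per section first.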
