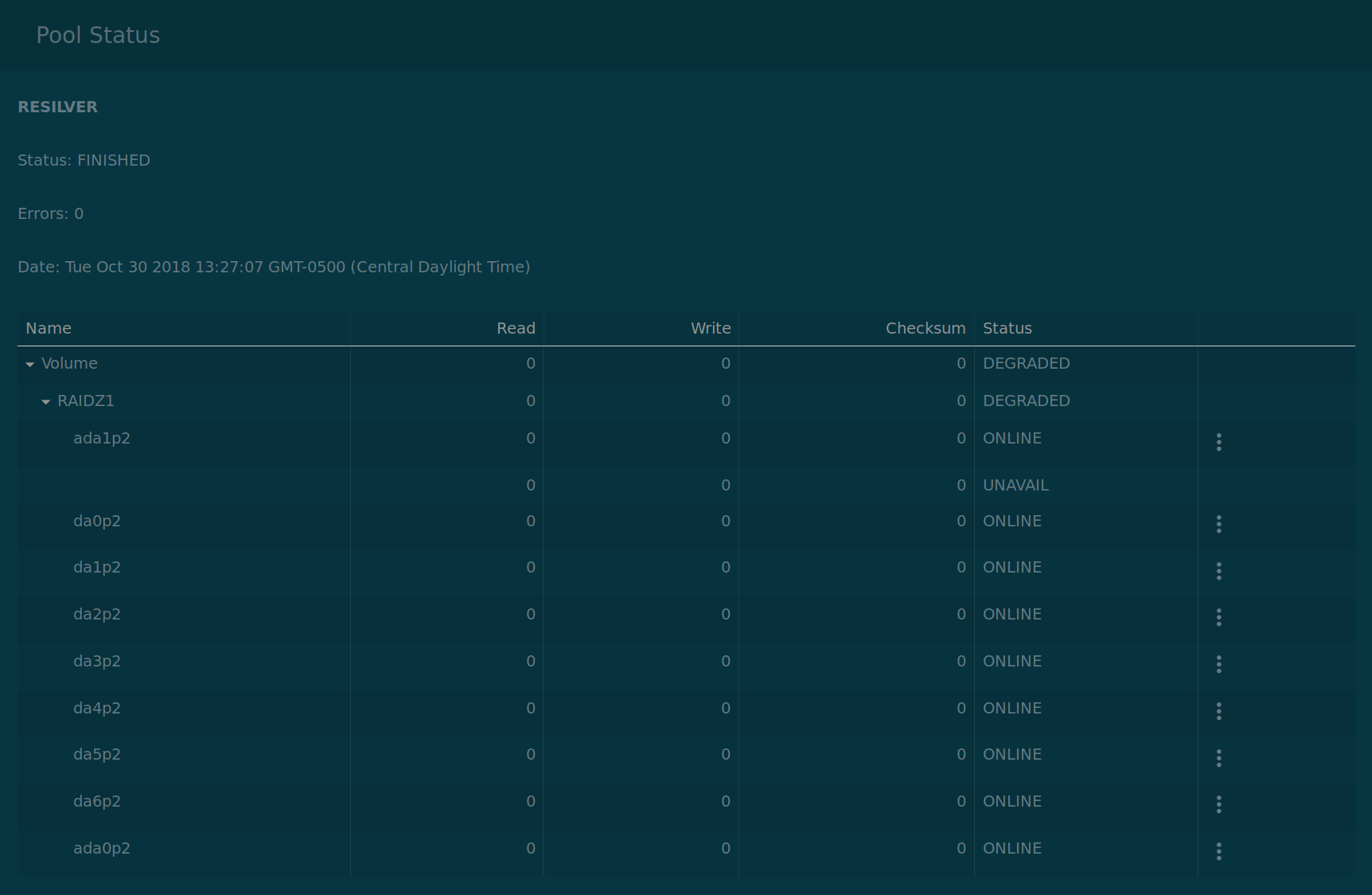

I had a backplane fail on an 11.2-RC1 test system. While troubleshooting, one of the disks in a 10-disk RAIDZ1 array was physically removed before it had been offlined. That HDD was RMA'd and is no longer available. A replacement drive, verified as good, has been physically connected in its place. The array remains degraded, and the documentation (https://doc.freenas.org/11.2/storage.html#replacing-a-failed-disk) does not cover my situation: the old drive is listed as "UNAVAIL", and there is no "option" button to select "replace" as the documentation describes. I have rebooted and tried to troubleshoot by reviewing prior posts on this forum and reading the documentation, to no avail.

My main question is how to fix this. As a secondary concern, I am seeing conflicting information from the command line: "zpool status -x" reports that all pools are healthy, but "zpool status -v" shows the pool as degraded (which it is):


---------------------------------------------------------

```
root@Offsite1:~ # zpool status -x
all pools are healthy
root@Offsite1:~ # zpool status -v
  pool: Volume
 state: DEGRADED
status: Some supported features are not enabled on the pool. The pool can
        still be used, but some features are unavailable.
action: Enable all features using 'zpool upgrade'. Once this is done,
        the pool may no longer be accessible by software that does not support
        the features. See zpool-features(7) for details.
  scan: resilvered 458G in 6 days 15:23:37 with 0 errors on Tue Nov 6 03:50:44 2018
config:

        NAME                                            STATE     READ WRITE CKSUM
        Volume                                          DEGRADED     0     0     0
          raidz1-0                                      DEGRADED     0     0     0
            gptid/a7601136-9014-11e7-9b37-60a44c42e357  ONLINE       0     0     0
            replacing-1                                 UNAVAIL      0     0     0
              10210022842724997111                      UNAVAIL      0     0     0  was /dev/gptid/0f38e63e-daab-11e8-a7a0-60a44c42e357
              9750869321900414361                       UNAVAIL      0     0     0  was /dev/gptid/66e9fe9b-dc71-11e8-b980-60a44c42e357
            gptid/a9369f8b-9014-11e7-9b37-60a44c42e357  ONLINE       0     0     0
            gptid/aa46726d-9014-11e7-9b37-60a44c42e357  ONLINE       0     0     0
            gptid/ab281f76-9014-11e7-9b37-60a44c42e357  ONLINE       0     0     0
            gptid/ac375292-9014-11e7-9b37-60a44c42e357  ONLINE       0     0     0
            gptid/ad432bb1-9014-11e7-9b37-60a44c42e357  ONLINE       0     0     0
            gptid/ae18ccc3-9014-11e7-9b37-60a44c42e357  ONLINE       0     0     0
            gptid/aef869b3-9014-11e7-9b37-60a44c42e357  ONLINE       0     0     0
            gptid/afe7a69f-9014-11e7-9b37-60a44c42e357  ONLINE       0     0     0

errors: No known data errors
root@Offsite1:~ # glabel status
                                      Name  Status  Components
gptid/afe7a69f-9014-11e7-9b37-60a44c42e357     N/A  ada0p2
gptid/a7601136-9014-11e7-9b37-60a44c42e357     N/A  ada1p2
gptid/a9369f8b-9014-11e7-9b37-60a44c42e357     N/A  da0p2
gptid/aa46726d-9014-11e7-9b37-60a44c42e357     N/A  da1p2
gptid/ab281f76-9014-11e7-9b37-60a44c42e357     N/A  da2p2
gptid/ac375292-9014-11e7-9b37-60a44c42e357     N/A  da3p2
gptid/ad432bb1-9014-11e7-9b37-60a44c42e357     N/A  da4p2
gptid/ae18ccc3-9014-11e7-9b37-60a44c42e357     N/A  da5p2
gptid/aef869b3-9014-11e7-9b37-60a44c42e357     N/A  da6p2
gptid/1aeb4cce-cf82-11e6-bb7c-0021ccdbce2c     N/A  da8p1
gptid/afd55912-9014-11e7-9b37-60a44c42e357     N/A  ada0p1
root@Offsite1:~ # camcontrol devlist
<ATA ST8000DM002-1YW1 DN02>  at scbus0 target 25 lun 0 (pass0,da0)
<ATA ST8000DM002-1YW1 DN02>  at scbus0 target 26 lun 0 (pass1,da1)
<ATA ST8000DM002-1YW1 DN02>  at scbus0 target 27 lun 0 (pass2,da2)
<ATA ST8000DM002-1YW1 DN02>  at scbus0 target 28 lun 0 (pass3,da3)
<ATA ST8000DM002-1YW1 DN02>  at scbus0 target 29 lun 0 (pass4,da4)
<ATA ST8000DM002-1YW1 DN02>  at scbus0 target 30 lun 0 (pass5,da5)
<ATA ST8000DM002-1YW1 DN02>  at scbus0 target 31 lun 0 (pass6,da6)
<ATA ST8000DM004-2CX1 0001>  at scbus0 target 37 lun 0 (pass7,da7)
<ST8000DM002-1YW112 DN02>    at scbus4 target 0 lun 0 (pass8,ada0)
<ST8000DM002-1YW112 DN02>    at scbus5 target 0 lun 0 (pass9,ada1)
< Patriot Memory PMAP>       at scbus7 target 0 lun 0 (pass10,da8)
```

----------------------------------------------------
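
To cross-check the two listings above, here is a minimal Python sketch that reports which attached disks back no gptid label. It assumes the pasted `glabel status` and `camcontrol devlist` output is complete and that peripheral names appear as shown there (for example `(pass7,da7)`); the helper names `labeled_disks`, `attached_disks` and `unlabeled_disks` are hypothetical, written only for illustration.

```python
import re

# Copied from the `glabel status` output above (header line omitted).
GLABEL_STATUS = """\
gptid/afe7a69f-9014-11e7-9b37-60a44c42e357 N/A ada0p2
gptid/a7601136-9014-11e7-9b37-60a44c42e357 N/A ada1p2
gptid/a9369f8b-9014-11e7-9b37-60a44c42e357 N/A da0p2
gptid/aa46726d-9014-11e7-9b37-60a44c42e357 N/A da1p2
gptid/ab281f76-9014-11e7-9b37-60a44c42e357 N/A da2p2
gptid/ac375292-9014-11e7-9b37-60a44c42e357 N/A da3p2
gptid/ad432bb1-9014-11e7-9b37-60a44c42e357 N/A da4p2
gptid/ae18ccc3-9014-11e7-9b37-60a44c42e357 N/A da5p2
gptid/aef869b3-9014-11e7-9b37-60a44c42e357 N/A da6p2
gptid/1aeb4cce-cf82-11e6-bb7c-0021ccdbce2c N/A da8p1
gptid/afd55912-9014-11e7-9b37-60a44c42e357 N/A ada0p1
"""

# Copied from the `camcontrol devlist` output above.
CAMCONTROL_DEVLIST = """\
<ATA ST8000DM002-1YW1 DN02> at scbus0 target 25 lun 0 (pass0,da0)
<ATA ST8000DM002-1YW1 DN02> at scbus0 target 26 lun 0 (pass1,da1)
<ATA ST8000DM002-1YW1 DN02> at scbus0 target 27 lun 0 (pass2,da2)
<ATA ST8000DM002-1YW1 DN02> at scbus0 target 28 lun 0 (pass3,da3)
<ATA ST8000DM002-1YW1 DN02> at scbus0 target 29 lun 0 (pass4,da4)
<ATA ST8000DM002-1YW1 DN02> at scbus0 target 30 lun 0 (pass5,da5)
<ATA ST8000DM002-1YW1 DN02> at scbus0 target 31 lun 0 (pass6,da6)
<ATA ST8000DM004-2CX1 0001> at scbus0 target 37 lun 0 (pass7,da7)
<ST8000DM002-1YW112 DN02> at scbus4 target 0 lun 0 (pass8,ada0)
<ST8000DM002-1YW112 DN02> at scbus5 target 0 lun 0 (pass9,ada1)
< Patriot Memory PMAP> at scbus7 target 0 lun 0 (pass10,da8)
"""

# FreeBSD disk device names: daN (SCSI/SAS/USB) and adaN (ATA).
DISK_RE = re.compile(r"a?da\d+")


def labeled_disks(glabel_text):
    """Return the set of disks (e.g. 'da3') with at least one gptid-labelled partition."""
    disks = set()
    for line in glabel_text.splitlines():
        fields = line.split()
        if len(fields) == 3 and fields[0].startswith("gptid/"):
            # The component is a partition such as 'da3p2'; keep only the disk part.
            m = re.fullmatch(r"(a?da\d+)p\d+", fields[2])
            if m:
                disks.add(m.group(1))
    return disks


def attached_disks(devlist_text):
    """Return the disk device names listed by camcontrol devlist, in listing order."""
    disks = []
    for line in devlist_text.splitlines():
        # Each line ends with a parenthesised peripheral list, e.g. '(pass7,da7)'.
        m = re.search(r"\(([^)]*)\)\s*$", line)
        if m:
            disks.extend(p for p in m.group(1).split(",") if DISK_RE.fullmatch(p))
    return disks


def unlabeled_disks(glabel_text, devlist_text):
    """Return attached disks that back no gptid label, in listing order."""
    labeled = labeled_disks(glabel_text)
    return [d for d in attached_disks(devlist_text) if d not in labeled]


if __name__ == "__main__":
    print(unlabeled_disks(GLABEL_STATUS, CAMCONTROL_DEVLIST))
```

Run against the output above, it prints `['da7']`: the ST8000DM004-2CX1 is the only attached disk without a gptid label, which fits it being the newly connected replacement drive rather than an existing pool member.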

I kindly request the assistance of the FreeNAS community in getting the pool back to a healthy state. How can I remove the "UNAVAIL" entries and resilver onto my new disk, from either the command line or the GUI? Thanks!

Jeff Arnholt