Hey Forum,

I admit it - I don't understand ZFS Snapshots and already found this: https://www.truenas.com/community/threads/snapshots-and-used-space.63810/

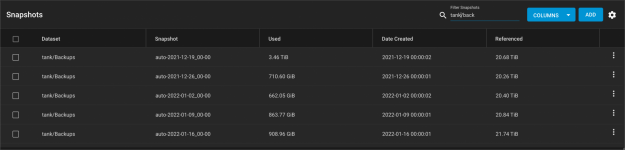

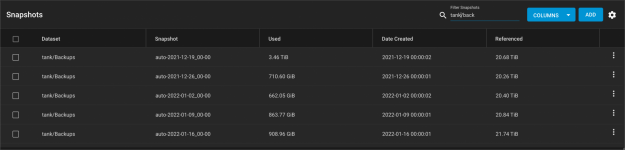

If I understand the mentioned post above correctly, the UI and also the output of "zfs list -ro space -t all tank/Backups" doesn't tell me what the snapshots are consuming in terms of disk space.

zfs list -ro space -t all tank/Backups = 6,6 TB but USEDSNAP = 16,4 TB - How ?

But the output of this small script or gives me the same amount of roughly 6,6 TB

If I really delete it without the dry-run (-n) it reclaims much more space: 16,36 TB:

Can anyone please give me a hint ;)

Many thanks and best regards, Flo.

I admit it: I don't understand ZFS snapshots, even though I already found this thread: https://www.truenas.com/community/threads/snapshots-and-used-space.63810/

If I understand the post mentioned above correctly, neither the UI nor the output of "zfs list -ro space -t all tank/Backups" tells me how much disk space the snapshots actually consume.

The individual snapshots listed by "zfs list -ro space -t all tank/Backups" add up to only about 6.6 TB, yet USEDSNAP for the dataset is 16.4 TB. How is that possible?

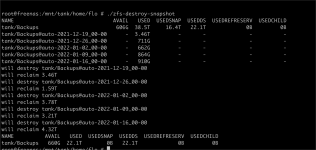

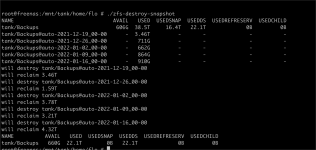

```
NAME                                AVAIL   USED  USEDSNAP  USEDDS  USEDREFRESERV  USEDCHILD
tank/Backups                         608G  38.5T     16.4T   22.1T             0B         0B
tank/Backups@auto-2021-12-19_00-00      -  3.46T         -       -              -          -
tank/Backups@auto-2021-12-26_00-00      -   711G         -       -              -          -
tank/Backups@auto-2022-01-02_00-00      -   662G         -       -              -          -
tank/Backups@auto-2022-01-09_00-00      -   864G         -       -              -          -
tank/Backups@auto-2022-01-16_00-00      -   909G         -       -              -          -
```
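To make the arithmetic explicit, here is a minimal sketch that sums the USED column of the snapshot rows (the rows containing "@") from the output above. The input is pasted in by hand via a heredoc; `sum_snapshot_used` is a hypothetical helper name, not a ZFS tool. ZFS prints binary units (1T = 1024G), so the total comes out as about 6.53 TiB, roughly the 6.6 TB mentioned in the post, and far below the 16.4T USEDSNAP on the dataset row.

```bash
#!/bin/bash
# Hypothetical helper: sum the per-snapshot USED column of `zfs list -o space` output.
# Only rows whose NAME contains "@" (snapshots) are counted; USED is column 3.
sum_snapshot_used() {
  awk '
    # Convert a ZFS human-readable size (e.g. 3.46T, 711G) to GiB
    function to_gib(s,   n, u) {
      n = s + 0                       # leading numeric part, e.g. 3.46
      u = substr(s, length(s), 1)     # unit suffix, e.g. T
      if (u == "T") return n * 1024
      if (u == "G") return n
      if (u == "M") return n / 1024
      if (u == "K") return n / 1048576
      return n / 1073741824           # no suffix: plain bytes
    }
    $1 ~ /@/ { total += to_gib($3) }
    END { printf "%.2f TiB\n", total / 1024 }
  '
}

# Rows copied by hand from the zfs list output in the post
sum_snapshot_used <<'EOF'
tank/Backups                         608G  38.5T     16.4T   22.1T             0B         0B
tank/Backups@auto-2021-12-19_00-00      -  3.46T         -       -              -          -
tank/Backups@auto-2021-12-26_00-00      -   711G         -       -              -          -
tank/Backups@auto-2022-01-02_00-00      -   662G         -       -              -          -
tank/Backups@auto-2022-01-09_00-00      -   864G         -       -              -          -
tank/Backups@auto-2022-01-16_00-00      -   909G         -       -              -          -
EOF
```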

The dry-run output of this small script also adds up to roughly the same 6.6 TB:

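```bash
#!/bin/bash
# Dry run: show what destroying each snapshot of a dataset would reclaim.
# Edit the grep pattern in $dataset (or use "tank/" for all datasets).
dataset="tank/Backups"

zfs list -ro space -t all "$dataset"

# -H: no header, tab-separated; cut -f 1 keeps only the snapshot name
for snapshot in $(zfs list -H -t snapshot | cut -f 1 | grep "$dataset"); do
  # -n: dry run (nothing is destroyed), -v: report the space it would reclaim
  zfs destroy -v -n "$snapshot"
done
```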

```
NAME                                AVAIL   USED  USEDSNAP  USEDDS  USEDREFRESERV  USEDCHILD
tank/Backups                         608G  38.5T     16.4T   22.1T             0B         0B
tank/Backups@auto-2021-12-19_00-00      -  3.46T         -       -              -          -
tank/Backups@auto-2021-12-26_00-00      -   711G         -       -              -          -
tank/Backups@auto-2022-01-02_00-00      -   662G         -       -              -          -
tank/Backups@auto-2022-01-09_00-00      -   864G         -       -              -          -
tank/Backups@auto-2022-01-16_00-00      -   909G         -       -              -          -
would destroy tank/Backups@auto-2021-12-19_00-00
would reclaim 3.46T
would destroy tank/Backups@auto-2021-12-26_00-00
would reclaim 711G
would destroy tank/Backups@auto-2022-01-02_00-00
would reclaim 662G
would destroy tank/Backups@auto-2022-01-09_00-00
would reclaim 864G
would destroy tank/Backups@auto-2022-01-16_00-00
would reclaim 909G
```
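The loop above dry-runs each snapshot on its own, so each "would reclaim" only covers what that one snapshot would free by itself. `zfs destroy` also accepts an inclusive snapshot range written as `dataset@first%last`; a dry run on the range should report what destroying the whole set would reclaim together. The sketch below assumes snapshots are ordered oldest first; `snapshot_range` is a hypothetical helper, and the real `zfs` calls only run where the command exists.

```bash
#!/bin/bash
# Hypothetical helper: build an inclusive range "dataset@first%last" for zfs destroy.
# Arguments: dataset, then full snapshot names ordered oldest to newest.
snapshot_range() {
  local dataset="$1"
  shift
  local first="${1#*@}"   # oldest snapshot, without the "dataset@" prefix
  local last="${!#}"      # newest snapshot (last remaining argument)
  last="${last#*@}"
  echo "${dataset}@${first}%${last}"
}

# Only attempt the real dry run where the zfs command is available.
if command -v zfs >/dev/null 2>&1; then
  dataset="tank/Backups"
  # -d 1: snapshots of this dataset only; -s creation: oldest first
  mapfile -t snaps < <(zfs list -H -o name -t snapshot -d 1 -s creation "$dataset")
  if (( ${#snaps[@]} > 0 )); then
    # -n: dry run (nothing is destroyed), -v: print what would be destroyed/reclaimed
    zfs destroy -nv "$(snapshot_range "$dataset" "${snaps[@]}")"
  fi
fi
```

With the snapshots from the post, the helper produces `tank/Backups@auto-2021-12-19_00-00%auto-2022-01-16_00-00`.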

If I actually delete the snapshots without the dry-run flag (-n), far more space is reclaimed: 16.36 TB.
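The gap between 6.6 TB and 16.36 TB fits how ZFS documents its accounting: a snapshot's USED is only the space unique to that snapshot (what destroying it alone would free). Blocks shared by two or more snapshots are charged to none of them individually, but they are included in USEDSNAP (`usedbysnapshots`). The toy model below uses made-up block sizes (all hypothetical, in GiB) for blocks that the live dataset no longer references, and computes both numbers.

```bash
#!/bin/bash
# Toy model of ZFS snapshot space accounting (hypothetical numbers, GiB).
# Each entry: "<size> <snapshots still referencing the block>".
# Assumption: the live dataset no longer references any of these blocks.
blocks=(
  "100 snap1"         # only snap1 -> counted in snap1's USED
  "300 snap1 snap2"   # shared by two snapshots -> in no single snapshot's USED
  "50 snap2"          # only snap2
  "200 snap2 snap3"   # shared
  "80 snap3"          # only snap3
)

declare -A used   # per-snapshot USED: space unique to that snapshot
usedsnap=0        # USEDSNAP: space freed by destroying ALL snapshots

for entry in "${blocks[@]}"; do
  read -r size holders <<< "$entry"
  usedsnap=$((usedsnap + size))
  # The block is charged to a snapshot only if it is the sole holder
  if [[ "$holders" != *" "* ]]; then
    used[$holders]=$(( ${used[$holders]:-0} + size ))
  fi
done

sum=0
for s in snap1 snap2 snap3; do
  echo "$s USED: ${used[$s]:-0}G"
  sum=$((sum + ${used[$s]:-0}))
done
echo "sum of per-snapshot USED: ${sum}G"
echo "USEDSNAP (freed by destroying all): ${usedsnap}G"
```

Here the per-snapshot values add up to 230G while destroying everything frees 730G. Destroying one snapshot can also make previously shared blocks unique to a neighbor, so that neighbor's USED grows afterwards.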

Can anyone please give me a hint? ;)

Many thanks and best regards, Flo.
