Hello,

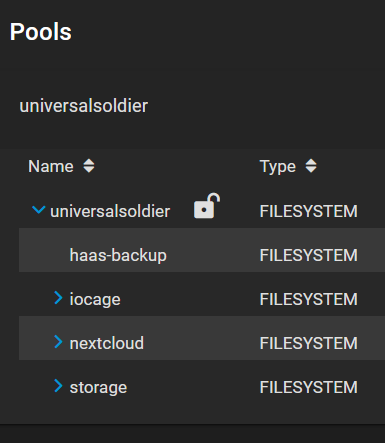

Is it a good practice to create a "backup/snapshot" of all datasets on Truenas in my case (see attached screenshot)... or maybe snapshot of all datasets on the pool level (if possible?)

Do I have to snapshot all datasets separately?

Thank you!

I would like to migrate my data from my old NAS running FreeNAS 9.10.2-U6 to TrueNAS-13.0-U1.1. Is it safe to create a recursive snapshot of the FreeNAS dataset and send it over SSH to the TrueNAS one?

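```shell
# stop further writes to the source datasets
zfs set readonly=on old-pool/root-dataset
# recursive snapshot of the dataset and all its children
zfs snapshot -r old-pool/root-dataset@22082022
# -R: replication stream (children, snapshots, properties); pv: progress display
zfs send -R old-pool/root-dataset@22082022 | pv | ssh root@truenas-ip "zfs receive -Fv truenas-pool/freenas-dataset_init"
```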
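One way to check the target name before the real transfer is a dry run. `zfs receive -n` does not actually receive the stream, and with `-v` it reports what the receive would do, including the name it would use. A minimal sketch, assuming the pool, dataset, snapshot and host names from the commands above; `preview_receive` is a hypothetical helper name:

```shell
# Dry run of the transfer: nothing is received on the TrueNAS side.
# preview_receive is a hypothetical helper, not part of ZFS.
preview_receive() {
    local snap=$1     # e.g. old-pool/root-dataset@22082022
    local host=$2     # e.g. root@truenas-ip
    local target=$3   # e.g. truenas-pool/freenas-dataset_init
    # -n: do not actually receive the stream
    # -v: report what would happen, including the target name used
    zfs send -R "$snap" | ssh "$host" "zfs receive -nv $target"
}

# Usage (as root on the old FreeNAS box):
#   preview_receive old-pool/root-dataset@22082022 root@truenas-ip truenas-pool/freenas-dataset_init
```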

I'm not sure whether this step is required, or safe:

Promote truenas-pool/freenas-dataset_init’s new snapshot to become the dataset’s data: `zfs rollback truenas-pool/freenas-dataset_init@22082022`
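Before running the rollback, here is a sketch for looking at what actually arrived on the target, assuming the dataset name from the commands above (`inspect_received` is a hypothetical helper). It lists the received snapshots and prints the `written` property, i.e. how many bytes changed in the dataset since its most recent snapshot:

```shell
# Inspect a received dataset before deciding whether a rollback is needed.
# inspect_received is a hypothetical helper; run it on the TrueNAS box.
inspect_received() {
    local target=$1   # e.g. truenas-pool/freenas-dataset_init
    # every snapshot under the target, sorted by creation time (oldest first)
    zfs list -H -t snapshot -o name -s creation -r "$target"
    # bytes written to the dataset since its most recent snapshot
    # (-p: exact number; 0 suggests a rollback to that snapshot would undo nothing)
    zfs get -H -p -o value written "$target"
}
```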

I just want to be 100% sure that I won't damage any data on the target pool. For example, if I type the name of an existing dataset on the target, will the job fail, or could the data on the target pool be replaced? Do I need to create a new dataset on the target (TrueNAS) pool, or will zfs send/recv create it?
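Since the receive command above uses `-F`, which forces a rollback of an existing target before receiving, one precaution is to confirm that the target name is unused before starting. A minimal sketch to run on the TrueNAS box; `check_target_free` is a hypothetical helper, and it relies only on `zfs list` exiting non-zero for a dataset that does not exist:

```shell
# Refuse to go ahead if the target dataset already exists, so a mistyped
# name cannot point the receive at existing data.
# check_target_free is a hypothetical helper, not part of ZFS.
check_target_free() {
    local target=$1   # e.g. truenas-pool/freenas-dataset_init
    # zfs list fails (non-zero exit) when the dataset does not exist
    if zfs list -H -o name "$target" >/dev/null 2>&1; then
        echo "refusing: $target already exists" >&2
        return 1
    fi
    echo "ok: $target does not exist yet"
}
```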

According to the documentation:

"The target dataset on the receiving system is automatically created in read-only mode to protect the data. To mount or browse the data on the receiving system, create a clone of the snapshot and use the clone. Clones are created in read/write mode, making it possible to browse or mount them. See Snapshots for more information on creating clones."

So does the dataset on the target system have to exist before I run the send/recv? And once the send/recv job is done, do I have to set the target dataset to read/write and clone the snapshot? Confusing ;/
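The original sequence sets `readonly=on` on the source before sending, and `zfs send -R` preserves dataset properties, so the received datasets may well arrive read-only too. A sketch of the commands involved, to run on TrueNAS; the helper names and the clone name are hypothetical:

```shell
# Helpers below are hypothetical wrappers around standard zfs commands.
show_readonly() {
    local target=$1   # e.g. truenas-pool/freenas-dataset_init
    # name, value and source (local, received, inherited, default)
    # for the target and every child dataset
    zfs get -r -H -o name,value,source readonly "$target"
}

make_writable() {
    local target=$1
    # a local value overrides a received one; children that only
    # inherit readonly follow the parent
    zfs set readonly=off "$target"
}

browse_via_clone() {
    local snap=$1     # e.g. truenas-pool/freenas-dataset_init@22082022
    local clone=$2    # hypothetical name, e.g. truenas-pool/freenas-browse
    # a clone is a new dataset backed by the snapshot
    # (read/write, per the documentation quoted above)
    zfs clone "$snap" "$clone"
}
```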

Is it good practice to create a "backup/snapshot" of all datasets on TrueNAS in my case (see the attached screenshot), or could I snapshot all datasets at the pool level (if that is possible)?

Do I have to snapshot all datasets separately?
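On the pool-level question: `zfs snapshot -r` snapshots a dataset and all of its descendants atomically, so a single command on the pool's root dataset can cover every dataset in the pool. A minimal sketch; `snapshot_pool` and the snapshot name are hypothetical:

```shell
# One recursive, atomic snapshot of a whole pool.
# snapshot_pool is a hypothetical helper, not part of ZFS.
snapshot_pool() {
    local pool=$1   # e.g. truenas-pool
    local name=$2   # e.g. before-migration (hypothetical)
    # -r: snapshot the pool's root dataset and every descendant
    zfs snapshot -r "$pool@$name" || return 1
    # show what was created, one line per dataset
    zfs list -H -t snapshot -o name -r "$pool" | grep "@$name\$"
}
```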

Thank you!
