> Because English is not good, use Google translate

I already guessed that.

> already flash H310 to IT mode

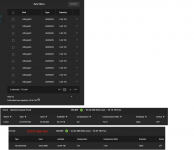

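The image above says otherwise. Your first attachment shows the disks as mfisyspdX devices, which means the card is still being driven by the mfi(4) driver, i.e. it is still running MegaRAID firmware. The card has not been crossflashed to IT mode. A card crossflashed to IT mode would be driven by mps(4), and the disks would show up as daX devices.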

I do not have twelve 6TB hard drives lying around to experiment with. However, I do have oodles of hypervisor capacity, so I created a virtual machine with an array of 12 hard drives of 5723166MiB each, which is the standard real size of a so-called "6TB HDD". I'm not clear on what your complaint is, so I'm looking at the numbers you've provided to see where the confusion might be.
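Where that 5723166MiB comes from: a minimal sketch, assuming the drive follows the IDEMA LBA1-03 sector-count formula for 512-byte-sector drives. Real drives may report a slightly different count, so check what your OS shows.

```python
# Sketch: real size of a nominal "6TB" drive.
# Assumption: the drive follows the IDEMA LBA1-03 sector-count formula
# for 512-byte logical sectors; your drive may report a different count.
SECTOR_BYTES = 512


def idema_lba_count(capacity_gb: int) -> int:
    """Logical sectors for a drive of `capacity_gb` decimal gigabytes."""
    return 97_696_368 + 1_953_504 * (capacity_gb - 50)


lbas = idema_lba_count(6000)
size_bytes = lbas * SECTOR_BYTES
size_mib = size_bytes // 2**20   # whole MiB, rounded down
size_tib = size_bytes / 2**40

print(f"{lbas} sectors = {size_bytes} bytes")
print(f"{size_mib} MiB = {size_tib:.3f} TiB")
```

Under that assumption each drive is 5723166 MiB, or about 5.458 TiB.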

Let's walk through this.

So there's your 54.56TiB. This is literally just ten times the disk size: twelve disks, minus two for RAIDZ2 parity. That's actually an overly optimistic estimate, because it only holds in the best-case scenario. I think this is what you're getting at, but it was buried in some other confusing numbers.

This 10 x 5.46TiB number is the MAXIMUM amount of data that could EVER be stored on ANY RAIDZ2 device under the BEST circumstances. It does not represent what you will actually be able to store.
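The middleware's figure is simple arithmetic. A minimal sketch, assuming nominal 6TB drives (11,721,045,168 sectors of 512 bytes) and assuming TrueNAS reserves a 2 GiB swap partition on each disk; that swap assumption is mine, but it happens to reproduce the 54.56 figure.

```python
# Sketch of the "just subtract the parity disks" estimate for 12-wide RAIDZ2.
# Assumptions: nominal 6TB drives (IDEMA sector count) and a 2 GiB swap
# partition per disk; neither is read from a real system.
TIB = 2**40
GIB = 2**30

DISK_BYTES = 11_721_045_168 * 512   # assumed size of a nominal "6TB" drive
SWAP_BYTES = 2 * GIB                # assumed per-disk swap partition


def naive_capacity_tib(width: int, parity: int, disk_bytes: int) -> float:
    """Best case: parity costs exactly `parity` whole disks and nothing else."""
    return (width - parity) * disk_bytes / TIB


whole = naive_capacity_tib(12, 2, DISK_BYTES)
after_swap = naive_capacity_tib(12, 2, DISK_BYTES - SWAP_BYTES)
print(f"whole disks: {whole:.2f} TiB, after swap: {after_swap:.2f} TiB")
```

This prints 54.58 TiB for whole disks and 54.56 TiB with the assumed swap removed. Note that nothing in it depends on block size, which is exactly why it is the best case.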

Forget RAIDZ2 and consider RAIDZ1 for a moment, because I have a picture handy to help illustrate. For discussion purposes I am assuming 4KB sector sizes, but this applies the same to 512B.

This is parity and data storage for data blocks stored by RAIDZ1. If we look at the tan blocks beginning at LBA 0, this is what everyone THINKS happens with RAIDZ1: you have 5 disks with one parity, so you get four disks of stored data and one of parity, which is 20% overhead for parity.

But if we store a shorter ZFS block, such as the 12KB yellow or green blocks in LBA 2, the data must still be protected by parity, so ZFS writes a parity sector for each of them, making the overhead 25% for parity. Next comes the humdinger. A tiny block, whether it is metadata, a 1KB file, or whatever, such as the red block in LBA 3, also has to be protected by parity, so it works out to 50% overhead for parity. Moving down to LBA 7 and 8, we see a different behaviour: when ZFS has an odd number of sectors to write, it pads them out to an even number (the "X"'d-out sectors). All of this eats away at your theoretical maximum RAIDZ1 capacity.
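The cases above fit one rule. As a sketch modeled on how OpenZFS sizes RAIDZ allocations in its `vdev_raidz_asize()` function (the symbols here are mine, not ZFS's): a block of $d$ data sectors on an $n$-disk RAIDZ with $p$ parity disks gets $p$ parity sectors per stripe row, and the total $a$ is rounded up to a multiple of $p+1$ sectors. Worked through for the 5-disk RAIDZ1 with 4KB sectors ($n = 5$, $p = 1$):

$$
a = (p+1)\left\lceil \frac{d + p\left\lceil \frac{d}{n-p} \right\rceil}{p+1} \right\rceil
$$

$$
\begin{aligned}
\text{32KB: } & d = 8: \quad 8 + \lceil 8/4 \rceil = 10 \;\Rightarrow\; a = 10 \quad (2/10 = 20\%\ \text{parity})\\
\text{12KB: } & d = 3: \quad 3 + 1 = 4 \;\Rightarrow\; a = 4 \quad (1/4 = 25\%\ \text{parity})\\
\text{8KB: } & d = 2: \quad 2 + 1 = 3 \;\Rightarrow\; a = 4 \quad (\text{one padding sector})\\
\text{4KB or less: } & d = 1: \quad 1 + 1 = 2 \;\Rightarrow\; a = 2 \quad (1/2 = 50\%\ \text{parity})
\end{aligned}
$$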

It gets worse with RAIDZ2. I don't have a picture for this, but just consider the case where you have a single 4K block to write. It needs two parity sectors, so it takes three sectors of raw space to protect a 4K-sized block on RAIDZ2, and two thirds of that space is parity. Padding hits here too: ZFS rounds each RAIDZ allocation up to a multiple of the parity count plus one, which is "even" on RAIDZ1 but a multiple of three on RAIDZ2. So an 8K block, two data sectors plus two parity sectors, gets padded from four sectors out to six.
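To put numbers on this, here is a minimal Python sketch of the same allocation rule. It is a simplified model of OpenZFS's `vdev_raidz_asize()`, not the real code, and it assumes 4 KiB sectors and the 12-disk RAIDZ2 from this thread. The function name `raidz_alloc_sectors` is hypothetical.

```python
# Sketch: sectors a single block occupies on a RAIDZ vdev.
# Simplified model of OpenZFS's vdev_raidz_asize(), not the real code.


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division."""
    return -(-a // b)


def raidz_alloc_sectors(block_bytes: int, width: int, parity: int,
                        sector_bytes: int = 4096) -> int:
    """Data sectors, plus `parity` sectors per stripe row, rounded up
    to a multiple of parity + 1 (the padding sectors)."""
    data = ceil_div(block_bytes, sector_bytes)
    rows = ceil_div(data, width - parity)
    total = data + parity * rows
    return ceil_div(total, parity + 1) * (parity + 1)


# The 12-disk RAIDZ2 from this thread, 4 KiB sectors.
for kib in (4, 8, 12, 16, 128):
    data = ceil_div(kib * 1024, 4096)
    alloc = raidz_alloc_sectors(kib * 1024, width=12, parity=2)
    print(f"{kib:>3} KiB block: {data:>2} data sectors -> {alloc:>2} allocated "
          f"({data / alloc:.0%} holds data)")
```

By this model a lone 4 KiB block takes three sectors, an 8 KiB block is padded from four sectors to six, and even a full 128 KiB record uses 42 sectors to hold 32 sectors of data: about 76% of the space holds data, not the 10/12 (83%) that the "lose two disks" estimate implies.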

So, if we circle back around to our test box, we see that the pool is created:

And there's your 49.74TiB. This number isn't generated by TrueNAS but by ZFS itself. ZFS is giving you its own approximation of how much usable space there could be, and it isn't as optimistic as the TrueNAS middleware, which, as discussed, simply assumes the loss of two disks to parity. In my opinion, that is a dumb assumption.

```
root@truenas[~]# zfs list foo
NAME   USED  AVAIL  REFER  MOUNTPOINT
foo   21.5M  49.7T   219K  /mnt/foo
root@truenas[~]#
```
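Roughly how ZFS gets to its number: a simplified sketch, assuming ZFS scales raw capacity by the space efficiency of a 128 KiB block (the default recordsize) on this vdev, that the disks are nominal 6TB drives (11,721,045,168 sectors of 512 bytes), and that each disk gives up an assumed 2 GiB to swap. The real calculation uses integer math and also subtracts a "slop" reservation plus some metadata and alignment losses, which is why the real figure comes out a little lower.

```python
# Sketch: roughly how ZFS turns raw capacity into its AVAIL estimate.
# Assumptions: usable space = raw space x efficiency of a 128 KiB block;
# nominal 6TB drives; 2 GiB swap per disk. Real ZFS uses integer math and
# also subtracts slop space and metadata, so it reports a bit less.
TIB, GIB = 2**40, 2**30
SECTOR = 4096
WIDTH, PARITY = 12, 2
RECORD = 128 * 1024                                  # default recordsize


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division."""
    return -(-a // b)


disk_bytes = 11_721_045_168 * 512 - 2 * GIB          # assumed drive minus swap
raw_bytes = WIDTH * disk_bytes

data = RECORD // SECTOR                              # 32 data sectors
parity = PARITY * ceil_div(data, WIDTH - PARITY)     # 2 per stripe row -> 8
alloc = ceil_div(data + parity, PARITY + 1) * (PARITY + 1)  # padded -> 42
ratio = data / alloc

estimate_tib = raw_bytes * ratio / TIB
print(f"raw {raw_bytes / TIB:.2f} TiB x {ratio:.3f} = {estimate_tib:.2f} TiB")
```

This lands at about 49.88 TiB, close to the 49.7T that `zfs list` reports, and well below the 54.56 TiB "minus two disks" figure.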

There's nothing wrong here. The amount of space on a RAIDZ2 volume is not a guaranteed number, because of how parity works. You are just seeing two different estimates.