Stux

Still accelerating... 225M/s now...

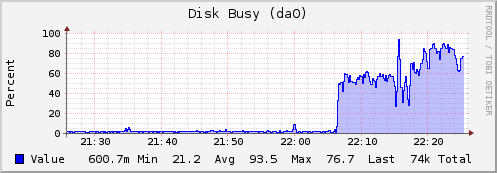

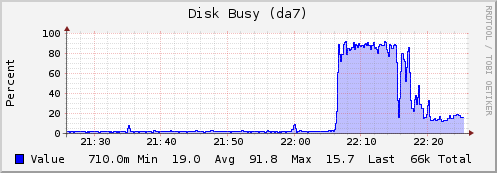

da0 is a source drive, da7 is the resilvering drive.

Looking at the reports, the slow 20M/s stretch earlier seems to coincide with an incoming replication ;)

Anyway, it's the weekend now... so this can resilver in peace.

You're welcome.
