Thanks for some real world figures. What's keeping it from going faster? Interrupts and context switches saturated?

I don't actually know the EXACT answer here, but... you're generally on the right track.

One of the many things I've expected out of FreeBSD over the years is to act as a router. Those of you who are thinking "uh huh, me too": no, I don't mean a NAT gateway like the pfSense or OpenWRT box you use on the customer side of a residential connection, but an actual internetwork router.

Back in the day, routers like the Cisco 4700M were an integral part of the network, but they were software-based routers, meaning that they were limited in both PPS (packets per second) and Mbps (megabits per second), and they were quite expensive. In comparison, KA9Q NOS on a PC with a floppy disk made a credible low-end router for modest use, and later, FreeBSD added the ability to do more complex things like running IGPs (interior gateway protocols) such as RIP and OSPF.

Software-based routers like the 4700M or a PC are inherently at a disadvantage compared to silicon-based routers that have specialized ASICs to move the packets, because each packet needs to be fondled by the CPU. I have some of the very earliest silicon routers, the Netstar (Ascend) GRFs, and their ability to route any-to-any at full wire speed on all ports was unparalleled at the time. However, they were limited to 150K routes, in silicon, and to 100Mbps per port, even if they did have 32 ports of 100Mbps.

And the damn things cost like $80K. In 1997 dollars.

On the other hand, it was easy to see years ago that CPU was going to be getting very competitive with silicon over time. I made some statements at the time that I think caused some NANOG'er heads to explode, but I was ultimately correct in that we're seeing products like the Ubiquiti EdgeRouter Infinity and the Mikrotik CCR1072, which are basically high-core-count CPU-based devices that get packet forwarding rates into the 100Mpps rate arena on 8x10GbE.

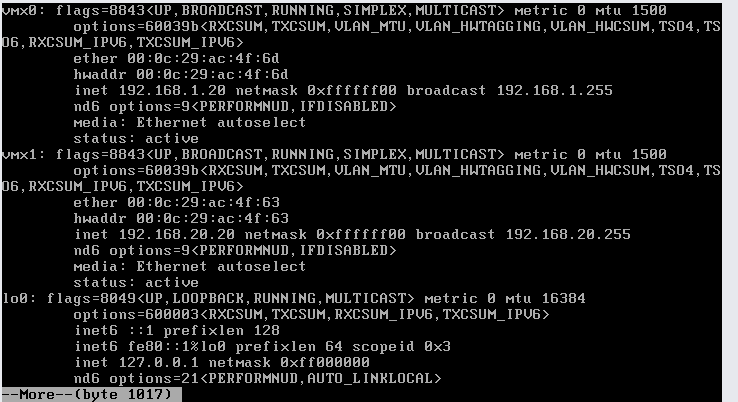

The problem is that it takes a LOT of CPU to get there, and also the hardware needs to be able to work optimally with the host system. We no longer have serious issues with stuff like PCI (not PCIe) bus contention, but when push comes to shove, lots of bits of FreeBSD aren't designed with this task in mind. Luigi Rizzo and others have spent years improving this, and he had an impressive demo of 185Kpps packet handling in the mid-2000s, but this is really where the PC hardware gets stressed out, and you really need to have everything lined up just right. To get there on modern hardware, you need multiple queues and a variety of other things intended to spread the load across multiple cores, none of which were a consideration when Intel designed the E1000 hardware. I *suspect* that the vast majority of the answer to your question is related to this.
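To make the "multiple queues" idea a bit more concrete, here is a rough sketch of how a multi-queue NIC can spread flows across cores: hash the flow's 5-tuple and use the result to pick a receive queue, so that every packet of a given flow lands on the same queue (and thus the same core). This is only an illustration. Real hardware typically uses a Toeplitz hash with an indirection table; the CRC32 here is just a stand-in, and `pick_queue` and `spread` are made-up names, not anything from a driver.

```python
import zlib
from collections import Counter


def pick_queue(src_ip, dst_ip, src_port, dst_port, proto, num_queues):
    """Map a flow 5-tuple to a receive queue index.

    Illustrative only: CRC32 stands in for the hash a real NIC would use.
    """
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}|{proto}".encode()
    return zlib.crc32(key) % num_queues


def spread(flows, num_queues):
    """Count how many flows land on each queue."""
    return Counter(pick_queue(*flow, num_queues) for flow in flows)


if __name__ == "__main__":
    # 1000 hypothetical TCP flows that differ only in source port.
    flows = [("10.0.0.1", "192.0.2.10", 40000 + i, 443, "tcp") for i in range(1000)]
    for queue, count in sorted(spread(flows, 4).items()):
        print(f"queue {queue}: {count} flows")
```

With a single queue, which is effectively the situation described above, every flow maps to queue 0 and one core has to do all the work no matter how many cores the box has.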

For those of us building routers, or servers too really, there is a lot of value in looking at BSD router performance tuning, a lot of which has recently been summarized at

https://wiki.freebsd.org/Networking/10GbE/Router

The primary difference for a FreeNAS/TrueNAS server is that, unlike on a router, LRO/TSO are good for a server.

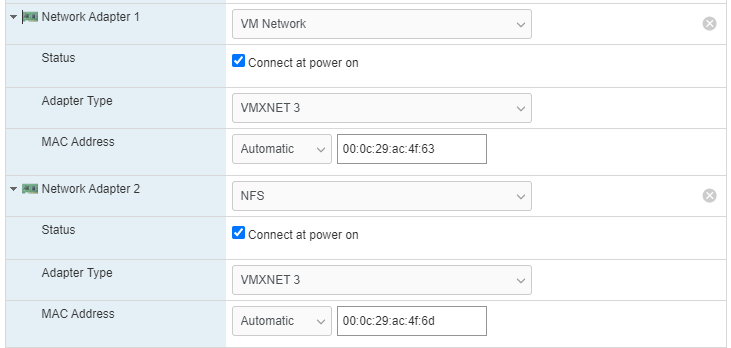

So I feel like the correct answer to your question is that CPU speeds have plateaued. We've had 3GHz for about twenty years, and okay fine, we're around 5GHz now, and to get "faster" computers we've gone to multiple cores and GPUs. The E1000 isn't designed to be able to take true advantage of multiple cores, because Intel expected E1000 to be physically limited to 1GbE, and their CPUs at the time could cope. Additionally, there is a lot of superfluous bit-twiddling going on that doesn't really need to happen, none of which would be there in a virtio setup, so you waste a bunch of potential.

My best guess is that if you could double CPU core speed, you could probably get a lot more out of E1000, but as I said, we've been generally plateaued on CPU speeds for like twenty years, especially on the server CPU's, where higher core count has kept the GHz-per-core somewhat lower.
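As a back-of-the-envelope illustration of why core speed matters so much here, you can work out how many CPU cycles a single core gets per packet at line rate. This is a sketch under loud assumptions: worst-case minimum-size 64-byte Ethernet frames, 20 bytes of preamble and inter-frame gap per frame on the wire, and one core handling everything. The function names are made up for the example.

```python
# Per-frame overhead on the wire: 8 bytes preamble/SFD + 12 bytes inter-frame gap.
PREAMBLE_AND_IFG_BYTES = 20


def line_rate_pps(link_bps, frame_bytes=64):
    """Packets per second needed to fill a link with frames of the given size."""
    bits_on_wire = (frame_bytes + PREAMBLE_AND_IFG_BYTES) * 8
    return link_bps / bits_on_wire


def cycles_per_packet(cpu_hz, link_bps, frame_bytes=64):
    """Cycle budget per packet if one core must keep up with line rate."""
    return cpu_hz / line_rate_pps(link_bps, frame_bytes)


if __name__ == "__main__":
    for name, bps in (("1GbE", 1e9), ("10GbE", 10e9)):
        pps = line_rate_pps(bps)
        budget = cycles_per_packet(3e9, bps)
        print(f"{name}: {pps / 1e6:.2f} Mpps, {budget:.0f} cycles/packet at 3GHz")
```

Under those assumptions a 3GHz core gets roughly 2000 cycles per packet at 1GbE and only about 200 at 10GbE. Doubling the clock doubles that budget, which is the whole point: any extra per-packet overhead comes straight out of it.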

I hope this has been particularly enlightening for an answer that is essentially "I don't really know".

;-)

I always wonder why VMware does not adopt VirtIO like everyone else, btw ...

The ten ethernet limit is really annoying, too.