Hello,

I just switched to TrueNAS to run my Nextcloud. Yesterday I installed everything, and now I am uploading the files from my old Nextcloud to the new one on TrueNAS. But the sync stops every few hours because the VM crashes with this error:

2023-07-05 22:55:22.135+0000: shutting down, reason=crashed

This is very annoying, as I expected TrueNAS to run without any problems. I did a clean installation, and yet there are already problems...

At the beginning, the system clock did not match the actual time, which left all the reports in TrueNAS empty (null). I then corrected the time, and that fixed it.

I am also very unsure about my VM configuration. It would be great if someone could tell me what the optimal configuration for my Nextcloud VM is. The TrueNAS PC has 64GB of DDR4 RAM, and I originally wanted to give 32GB to Nextcloud, even though I know that is overkill. The PC has 8 cores with 16 threads in total (AMD Ryzen 7 5700U), and I assigned 4 cores to the VM.

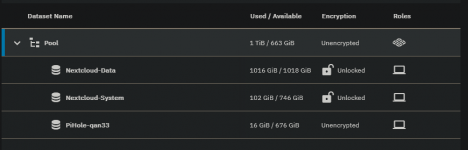

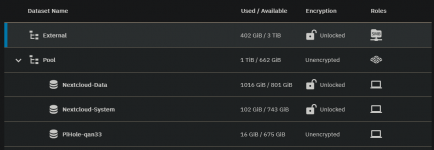

I have attached a few pictures of my configuration. I have two volumes for the Nextcloud VM, both encrypted with AES: one for the Nextcloud system and one for the Nextcloud data.

Just now I reduced the VM to 16GB of RAM because ZFS was somehow using more than 32GB, which prevented me from giving the VM the original 32GB. This is a bit annoying, as I would really like to use 32GB. Could the crash be related to that, i.e. that I assigned too much RAM to the VM? But why would too much RAM be a problem?
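To make my RAM worry concrete, here is a rough sanity check of the numbers as a small Python sketch. The function name, the 33GB figure (a placeholder for "more than 32GB") and the 4GB reserve for the host itself are my own assumptions, not values reported by TrueNAS.

```python
def vm_memory_headroom_gb(host_total_gb, zfs_in_use_gb, vm_request_gb, host_reserve_gb=4):
    """Return the GB left over after ZFS, the VM and a reserve for the host.

    A negative result means the requested VM size does not fit.
    host_reserve_gb is an assumed safety margin, not a TrueNAS setting.
    """
    return host_total_gb - zfs_in_use_gb - vm_request_gb - host_reserve_gb


# 64 GB host RAM is from my system; 33 GB only stands in for "more than 32GB".
for vm_gb in (32, 16):
    headroom = vm_memory_headroom_gb(64, 33, vm_gb)
    status = "fits" if headroom >= 0 else "does not fit"
    print(f"VM with {vm_gb} GB: headroom {headroom} GB -> {status}")
```

With these assumed numbers the 32GB VM would not fit next to what ZFS was using, while the 16GB VM would. That only explains why I could not assign 32GB; it does not tell me whether the crash itself is memory related.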

Thank you....
