KarmaGotMyBack

Cadet | Joined: May 14, 2023 | Messages: 6

Hello all,

System Specs:

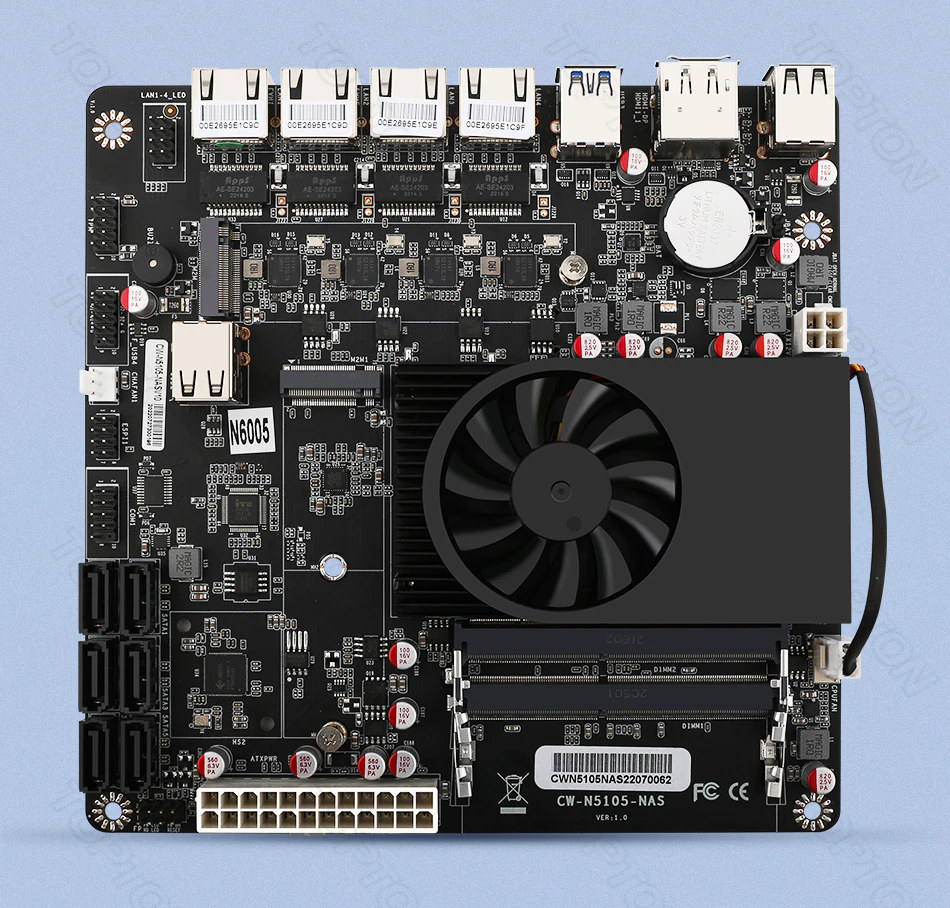

Mobo: Topton N5105

CPU: Intel Celeron N5105

RAM: TEAMGROUP Elite DDR4 64GB Kit (2 x 32GB) 3200MHz PC4-25600 CL22 Unbuffered Non-ECC (Running @2933 MHz)

Drives:

SSD: PNY CS1030 250GB M.2 NVMe (x2) RAID 1

HDD: Seagate IronWolf 4TB NAS 5400 RPM 64MB Cache (x5) RAIDZ1

Network Cards: Intel i226-V (x4), only using one

Currently, this is set up to run Proxmox with TrueNAS Scale inside a VM, because of how unstable Scale has been. I originally ran TrueNAS Core and had no crashes until I switched to Scale, which has much better features. Ideally, if Scale runs stably, I would eliminate Proxmox and just move the pool over. The TrueNAS VM is allocated 50 GB of RAM and 104 GB of space on the SSD. The HDDs are all passed through to TrueNAS.

I am having stability problems on TrueNAS Scale. During large data transfers the system crashes, yet the CPU doesn't appear to be overloaded and HDD temperatures are normal. From what I've read on the forum, this might be related to not having enough memory for the ARC, which is where I'm leaning. I also understand that the motherboard is new and might not be fully supported.
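To test the ARC theory, a minimal Python sketch like the one below could be run inside the Scale VM. It reports the current ARC size against its configured minimum and maximum. It assumes the OpenZFS-on-Linux kstat file `/proc/spl/kstat/zfs/arcstats` and its three-column `name type data` layout. The function names `parse_arcstats` and `arc_summary` are hypothetical helpers, not part of TrueNAS.

```python
"""Report ZFS ARC usage from the OpenZFS kstat file (Linux / TrueNAS Scale)."""

from pathlib import Path

# Assumed location of the OpenZFS ARC statistics on Linux.
ARCSTATS_PATH = Path("/proc/spl/kstat/zfs/arcstats")


def parse_arcstats(text: str) -> dict[str, int]:
    """Parse arcstats text into {counter_name: value}.

    The file starts with a kstat header line and a 'name type data'
    column line; every following line holds one counter.
    """
    stats: dict[str, int] = {}
    for line in text.splitlines()[2:]:  # skip the two header lines
        fields = line.split()
        if len(fields) != 3:
            continue
        name, _kstat_type, data = fields
        try:
            stats[name] = int(data)
        except ValueError:
            continue  # ignore any non-integer counter
    return stats


def arc_summary(stats: dict[str, int]) -> str:
    """Summarise the current ARC size against its min/max limits in GiB."""
    gib = 1024 ** 3
    size = stats["size"] / gib
    c_min = stats["c_min"] / gib
    c_max = stats["c_max"] / gib
    return f"ARC size {size:.1f} GiB (min {c_min:.1f} GiB, max {c_max:.1f} GiB)"


if __name__ == "__main__":
    if ARCSTATS_PATH.exists():
        print(arc_summary(parse_arcstats(ARCSTATS_PATH.read_text())))
    else:
        print(f"{ARCSTATS_PATH} not found; is ZFS loaded on this Linux host?")
```

Running it during a large transfer shows whether the ARC is pinned at its maximum. Note that ZFS normally fills the ARC aggressively, so a full ARC alone is not proof of a problem.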

Any advice/tips/tricks would be much appreciated!
