Longfellow75

Dabbler

- Joined: Aug 24, 2022
- Messages: 12

Hi all,

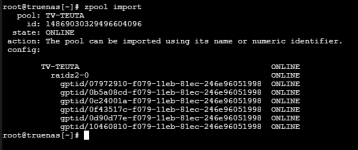

I have been using TrueNAS for a year now without any problems. My system is a Dell PowerEdge R730xd (dual Intel(R) Xeon(R) CPU E5-2650 v3 @ 2.30GHz, 32 GB RAM, no RAID card) with one SSD boot disk and six 8TB NAS disks in a RAIDZ2 configuration.

After a series of power outages the pool went offline. I tried to import (reconnect) it with the command zpool import -f 'pool name'. It reported that an import was possible with a loss of 10 seconds of changes. I then ran zpool import -F 'pool name', but the machine kept rebooting while trying to load the pool: it throws a KBD panic error, tries to use one specific uberblock, and reboots again.

The only way to get access to TrueNAS again is to pull out all the NAS disks. In the GUI the pool name is shown as offline, and when I reinsert the disks they show N/A in the pool column. When I run 'zpool import' in the shell, it lists the pool name and shows all the disks as online. I ran S.M.A.R.T. tests on the disks and they all passed. I have also tried exporting the pool from the GUI, rebooting the machine, and importing the pool again, with the same result: on reboot it gets stuck at exactly the same point, using that uberblock, and reboots endlessly.

I'm really concerned. I have read most of the posts here looking for a solution but haven't found a way around this. Could someone please help? It would be highly appreciated.

Thanks,
