My Configuration:

TrueNAS 12.0U1.1

SuperMicro Server w/93008i8e HBA controller (main machine)

-> SOC Xeon

-> 128GB ECC memory

-> 2xUSB boot Drive

-> 8x8TB HD

-> 2x1TB SSD

(external) Supermicro JBOD enclosure (connected via HBA controller from main machine)

-> 13x14TB HD

-> 8x16TB HD

Pools:

SSD

-> 1 vdev + 0 hotspare

--> 2disk mirror (1TB SSD)

Data

-> 4 vdevs + 3 hotspares

--> 8disk raidz2 (8TB) vdev1

--> 6disk raidz2 (14TB) vdev2

--> 6disk raidz2 (14TB) vdev3

--> 6disk raidz2 (16TB) vdev4
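
As a sanity check on the layout above, here is a quick tally of where the 3 hotspares come from. This is only a sketch: the variable names are made up for illustration, the SSD pool is left out, and it assumes every HD that is not in a vdev is a hotspare.

```python
from collections import Counter

# HDs installed, keyed by size in TB (main machine + JBOD, from the list above).
installed = Counter({8: 8, 14: 13, 16: 8})

# Data pool vdevs as (disk_count, size_tb); all four are raidz2.
data_vdevs = [(8, 8), (6, 14), (6, 14), (6, 16)]

# Count how many disks of each size the vdevs consume.
used = Counter()
for count, size_tb in data_vdevs:
    used[size_tb] += count

# Assumption: any HD not in a vdev is a hotspare.
spares = {size: installed[size] - used[size] for size in installed}
spares = {size: n for size, n in spares.items() if n > 0}

print(spares)                # {14: 1, 16: 2}
print(sum(spares.values()))  # 3
```

So under that assumption all 3 hotspares (1x14TB and 2x16TB) sit in the JBOD enclosure, along with vdev2, vdev3 and vdev4.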

I had 2 disk failures in one of my vdevs (vdev2). The hotspares automatically replaced the failing disks and the pool operated in a degraded state.

The 2 failed disks are under warranty, so I removed them and shipped them back to the manufacturer.

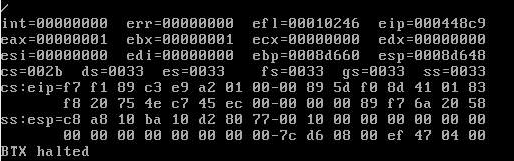

I then rebooted TrueNAS, and on boot I get the BTX Halted error and TrueNAS does not boot.

Things I tried:

1. If I disconnect the (external) JBOD enclosure, TrueNAS boots correctly but my Data pool is "offline".

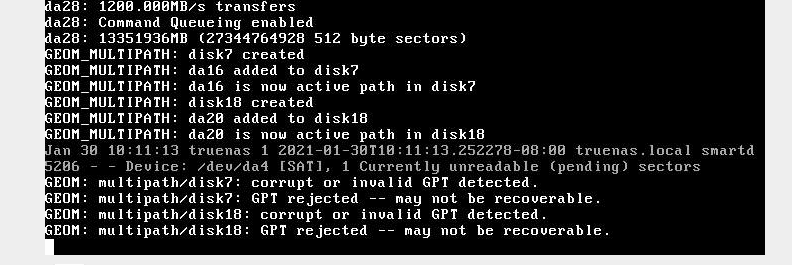

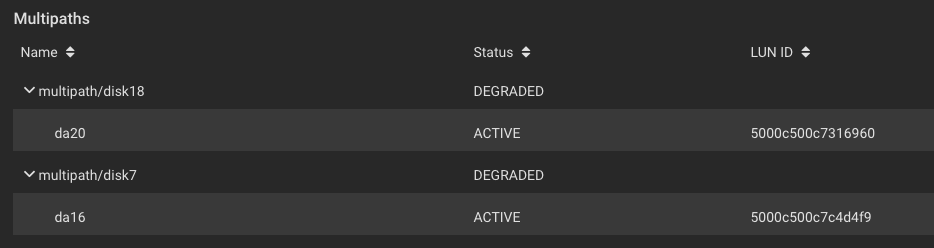

2. I connected the JBOD enclosure partway through the TrueNAS boot (instead of having it connected from the start). TrueNAS boots and finds my disks, but the Data pool is still "offline" and all of these disks now show up under Storage->Multipaths.

3. I turned on the JBOD enclosure after TrueNAS was fully booted. The result was similar to #2, but only the 2 hotspares show up under Multipaths.

Console Info:

Multipath Info:

I assumed the Data pool would operate normally with the hotspares in use (and the faulty drives removed), but this does not appear to be the case.

Maybe it is because I didn’t mark the failed drives as offline?

How do I get TrueNAS to see my Data pool normally with the hotspares in use? Or do I need to wait until the replacement disks are added before things are back to "normal"?

Any help would be appreciated, and thanks in advance.

Regards,

Art
